Experimentation is essential for product teams. But if you do it wrong, you might as well not do it at all. To make your experiments worthwhile, predictable, and sustainable, you need a system that aligns your tests around business growth and customer problems.

- Experimentation is highly valuable because it helps teams work with a growth mindset, update their intuition, and stay close to what their customers need.

- The problem is that many teams experiment in an ad hoc way or goal their experiments incorrectly—which leads to a lack of sustainable learning and wins.

- When experiments don’t produce learnings, organizations lose faith in experimentation as a decision-making tool and don’t incorporate it into their internal processes.

- To avoid this problem, organizations should implement an experimentation framework.

- The framework helps make sure experiments are properly aligned around the right business growth lever and focused on a customer problem.

Why you need an experimentation framework

Experimentation allows teams to work with a growth mindset, where they operate with the understanding that their knowledge about the product and its users can change. They can apply scientific methods to bridge the perception and reality gap that naturally occurs within scaling products and align with what customers actually need.

When teams experiment in an ad hoc way, experimentation programs fail and organizations cut experimentation out of their internal decision-making processes. A framework avoids that situation by ensuring your experiments benefit your users and, thus, your business.

Experimentation is key to making decisions that have a meaningful business impact. Intuition alone might bring you good results, but a decision-making process built only on intuition won’t be sustainable or reliable.

Experimentation helps you develop a growth mindset

When experimentation is an integral part of your work, it helps you to move away from a fixed mindset—where you never update what you believe about your product—and work with a growth mindset. Rather than relying on your assumptions, you continuously learn and update your knowledge. Then, you can make the best possible decisions for your business and customers.

Experimentation helps you update your instincts and make better decisions

If you don’t experiment, you make decisions based on intuition or simply what the loudest voice in the room thinks is right. With regular experimentation, you can make decisions based on learnings from data.

You might successfully make intuitive decisions for a long time, but it’s difficult to scale intuition across a company as it grows. You also can’t know when your intuition becomes outdated and wrong.

As an organization grows and changes, your intuition—what you believe about your products, customers, and the best path of action—is constantly expiring. When you learn from experimentation, you can hone and update your intuition based on the data you get.

Experimentation helps you stay close to your customers

Experimentation allows you to keep the perception and reality gap (the space between what you think users want and what they actually want) to a minimum. When you’re in the early stages of your product and working to find product-market fit, you’re close to customers. You talk to them, and you’re aware of their emotions and their needs.

But as you start scaling, the perception and reality gap grows. You have to contend with lower-intent customers and adjacent users. You can’t talk to customers like you did in the initial product development stages because there are too many of them. Experimentation helps you find the areas where your intuition is incorrect so you can reduce the perception and reality gap as you scale.

Why experimentation programs fail

Experimentation programs often fail when people use experimentation as a one-off tactic rather than a continuous process. People also goal their experiments incorrectly because they expect their experiments to deliver wins rather than learnings.

Experiments are ad hoc

Teams often view experiments as an isolated way of validating someone’s intuition in a specific area. Ad hoc experimentation may or may not bring good results, but those results aren’t predictable, and it’s not a sustainable way of operating.

Experiments have incorrect goals

When people expect experiments to deliver lifts, they’re goaling their experiments incorrectly. Although getting wins from your experiments feels nice, losses are more valuable. Losses show you where you held an incorrect belief about your product or users, so you can correct that belief moving forward.

Experiments aren’t aligned to a growth lever or framed around a customer problem

Experiments cause problems when you don’t align them to the growth lever the business is focused on because that means they’re not useful for your organization. Equally, only focusing on business outcomes instead of framing experiments around a customer problem creates issues. If you only think about a business problem, you interpret your data in a biased way and develop solutions that aren’t beneficial to the user.

What happens when experimentation programs fail?

When experimentation programs fail or are implemented incorrectly, organizations lose confidence in experimentation and rely too heavily on intuition. They stop trusting experiments as a path to developing the best possible customer experience. When that happens, they don’t adopt experimentation as part of their decision-making process, so they lose all the value that experiments bring.

Let’s take a look at some examples of experimentation gone wrong. Here’s what happens when you experiment without using a framework that pushes you to align your experiments around a business lever and a customer problem.

Free-to-paid conversion rate

An organization is focused on monetization. It tasks a team with improving the free-to-paid conversion rate.

The company says: “We have a low pricing-to-checkout conversion rate, so let’s optimize the pricing page.” The team decides to test different colors and layouts to improve the page’s conversion rate.

However, the experimentation to optimize the pricing page isn’t framed around the customer problem. If the team had talked to customers, they might have found that it’s not the pricing page’s UX that stops customers from upgrading. Rather, customers may not feel ready to buy yet, or may not understand why they should buy.

In this case, optimizing the pricing page alone would not yield any results. Let’s imagine the team instead focuses their experimentation on the customer problem. They might try running trials of the premium product so that customers are exposed to its value before they even see the pricing page.

The work you end up doing, and the learnings you gain, are completely different if you start your experiments with the business problem (“there is a conversion rate that we need to increase”) versus if you start with the customer problem (“they are not ready to think about buying yet”).

Onboarding questionnaire

An organization is focused on acquisition, so the product team is looking to minimize the drop-off rate from page two to page three of their onboarding questionnaire. If they only think about the business problem, they might simply remove page three. They assume that if the onboarding is shorter, it will have a lower drop-off rate.

Let’s say that removing page three works, and the conversion rate of onboarding improves. More people complete the questionnaire. The team takes away a learning that they apply to the rest of their product: We should simplify all the customer journeys by removing as many steps as possible.

But this learning could be wrong because they didn’t think about the customer side of the problem. They didn’t investigate why people were dropping off on page three. Maybe it wasn’t the length of the page that was the problem but the type of information they were asking for.

Perhaps page three included questions about personal information, like phone number or salary, that people were uncomfortable giving so early in their journey. Instead of removing the page, they could have tried making those answers optional or allowing users to edit their answers later to get more people to pass that part of onboarding.

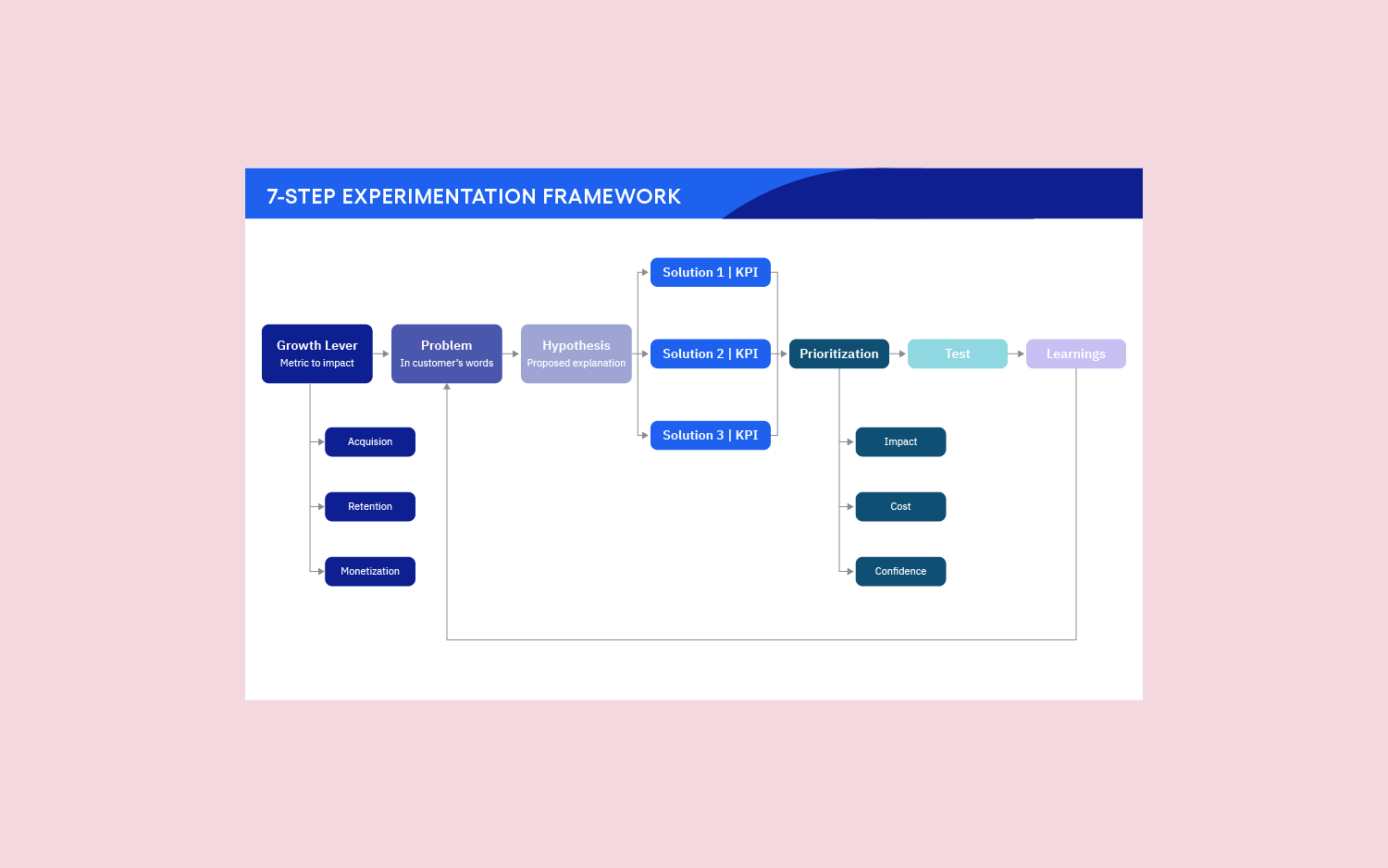

A 7-step experimentation framework

Follow these steps to make your experiments sustainable. They will help keep your experimentation aligned around business strategy and customer problems.

Use this simple framework to get started with your backlog list—make each bubble a column in your Airtable or Sheets.

1. Define a growth lever

For an experiment to be meaningful, it needs to matter to the business. Choose an area for your experiment that aligns with the growth lever your organization is focused on: acquisition, retention, or monetization.

Let’s say we’re focusing on acquisition and we notice drop-off on our homepage is high. To frame our experiment, we can say:

- Accelerating acquisition is our priority, and our highest-trafficked landing page (the homepage) is underperforming.

2. Define the customer problem

Before you go any further, you need to define the problem the experiment is trying to address from the customer’s perspective.

You found product-market fit by identifying the customer problem that your product solves. Yet when many organizations move to distributing and scaling their product, they switch their focus to business problems. To be effective, you need to continuously evolve and learn about your product-market fit by anchoring your distribution and scaling in customer problems.

You will iterate on the customer problem based on your experiment results. Start by defining an initial customer problem by stating what you think the problem is.

For our homepage example, that might be:

- Customers are confused about our value proposition.

3. Develop a hypothesis

Now, define your interpretation of why the problem exists. As with the customer problem, you’ll iterate on your hypothesis as you learn more. The first version of your customer problem and hypothesis gives you a starting point for experimentation.

Potential hypotheses for our homepage example include:

- Customers are confused due to poor messaging.

- Our page has too many action buttons.

- Our copy is too vague.

4. Ideate possible solutions with KPIs

Come up with all the possible solutions that could resolve the customer problem. Create a way of measuring the success of each solution by indicating which key performance indicator (KPI) each solution addresses.

Download our Product Metrics Guide for a list of impactful product KPIs around acquisition, retention, and monetization and how to measure them.

A solution + KPI for our homepage example might be:

- Solution: Iterate on the copy

- KPI: Improve the visitor conversion rate

5. Prioritize solutions

Decide which solutions you should test first by considering three factors: the cost to implement the solution, its impact on the business, and your confidence that it will have an impact.

To weed out solutions that are low impact and high cost, prioritize your solutions in the following order:

- Low cost, high impact, high confidence

- Low cost, high impact, lower confidence

- Low cost, lower impact, high confidence

Then you can move on to high-cost solutions, but only if their impact is also high.

Different companies may attach different weights to these factors. For instance, a well-established organization with a large budget will be less cautious about testing high-cost solutions than a startup with few resources. However, you should always consider the three factors (cost, impact, and confidence of impact).
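
One way to picture this ordering is as a small sorting rule. The sketch below is only an illustration: the `Solution` record, the yes/no flags, and the example backlog are hypothetical, and a real team would likely use its own scale and weights. It also assumes that solutions the list above does not rank (for example, low cost with lower impact and lower confidence) are simply set aside.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Solution:
    name: str
    low_cost: bool         # is it cheap to implement?
    high_impact: bool      # is the expected business impact high?
    high_confidence: bool  # are we confident it will have that impact?


def priority_tier(s: Solution) -> Optional[int]:
    """Return a tier (lower means test sooner), or None if the solution is set aside."""
    if s.low_cost and s.high_impact and s.high_confidence:
        return 0
    if s.low_cost and s.high_impact:
        return 1  # low cost, high impact, lower confidence
    if s.low_cost and s.high_confidence:
        return 2  # low cost, lower impact, high confidence
    if not s.low_cost and s.high_impact:
        return 3  # high cost is considered only when impact is also high
    return None   # not ranked in the article; setting it aside is this sketch's assumption


def prioritize(solutions: List[Solution]) -> List[Solution]:
    """Drop set-aside solutions and order the rest by tier."""
    ranked = [s for s in solutions if priority_tier(s) is not None]
    return sorted(ranked, key=priority_tier)


# Hypothetical backlog for the homepage example.
backlog = [
    Solution("Redesign the homepage", low_cost=False, high_impact=True, high_confidence=False),
    Solution("Iterate on the copy", low_cost=True, high_impact=True, high_confidence=True),
    Solution("Remove extra action buttons", low_cost=True, high_impact=False, high_confidence=True),
    Solution("Rebuild the footer", low_cost=False, high_impact=False, high_confidence=True),
]

print([s.name for s in prioritize(backlog)])
```

Here the copy iteration comes first, the button cleanup second, and the redesign last, while the high-cost, low-impact footer rebuild drops out. An organization that weights the factors differently would change the tier rules rather than the sorting.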

Another benefit of experimentation is that it will help hone your ability to make a confidence assessment. After experimenting, check if the solution had the expected impact and learn from the result.

6. Create an experiment statement and run your tests

Collect the information you gathered in steps 1-5 to create a statement to frame your experiment.

For our homepage example, that statement looks like:

- Accelerating acquisition is our priority, and our highest-trafficked landing page (the homepage) is underperforming [growth lever] because our customers are confused about our value prop [customer problem] due to poor messaging [hypothesis], so we will iterate on the copy [solution] to improve the visitor conversion rate [KPI].
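
If you keep your backlog in a spreadsheet, the same statement can be assembled from its columns. The following is a minimal sketch, assuming one field per bracketed label in the statement above; the `ExperimentFrame` name and its field names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ExperimentFrame:
    growth_lever: str
    customer_problem: str
    hypothesis: str
    solution: str
    kpi: str

    def statement(self) -> str:
        """Join the five pieces into one experiment statement."""
        return (
            f"{self.growth_lever} [growth lever] "
            f"because {self.customer_problem} [customer problem] "
            f"due to {self.hypothesis} [hypothesis], "
            f"so we will {self.solution} [solution] "
            f"to {self.kpi} [KPI]."
        )


# The homepage example from steps 1-5.
homepage = ExperimentFrame(
    growth_lever=(
        "Accelerating acquisition is our priority, and our highest-trafficked "
        "landing page (the homepage) is underperforming"
    ),
    customer_problem="our customers are confused about our value prop",
    hypothesis="poor messaging",
    solution="iterate on the copy",
    kpi="improve the visitor conversion rate",
)

print(homepage.statement())
```

Keeping the pieces separate makes it easy to swap in a new hypothesis or solution when you loop back through the framework, without rewriting the whole statement.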

Define a baseline for the metric you’re trying to influence, decide what lift you’re aiming for, and test away.
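
As a rough illustration of baseline and lift, the sketch below computes a conversion rate for a control and a variant and the relative lift between them. The visitor and conversion counts are invented, and the function names are hypothetical. It only reports the observed difference; it does not tell you whether that difference is larger than normal noise.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(baseline_rate: float, variant_rate: float) -> float:
    """Change in the metric relative to the baseline (0.15 means +15%)."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (variant_rate - baseline_rate) / baseline_rate


# Made-up numbers for the homepage copy test.
baseline = conversion_rate(conversions=200, visitors=10_000)  # current homepage
variant = conversion_rate(conversions=230, visitors=10_000)   # new copy

print(f"baseline={baseline:.2%} variant={variant:.2%} "
      f"lift={relative_lift(baseline, variant):+.1%}")
```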

7. Learn from the results and iterate

Based on the results from your tests, return to step two, update your customer problem and hypothesis, then keep running through this loop. Stop iterating when the business priority (the growth lever) changes, for instance, when acquisition has improved and you want to focus on monetization. Then set up new experiments aligned to the new lever.

Another reason to stop iterating is diminishing returns. This might be because you can’t come up with any more solutions, or you don’t have the proper infrastructure or enough resources to solve your customer problems effectively.

Make better decisions faster

To deliver targeted experiments to users and measure the impact of product changes, you need the right product experimentation platform. Amplitude Experiment was built to allow collaboration between product, engineering, and data teams to plan, deliver, track, and analyze the impact of product changes with user behavioral analytics. Request a demo to get started.

If you enjoyed this post, follow me on LinkedIn for more on product-led growth. To dive into product experimentation further, check out my Experimentation and Testing course on Reforge.