The Story of Ask Amplitude

A behind-the-scenes look at how we built an AI assistant to make chart-building even faster and easier than before.

It’s no secret that the recent developments in AI and large language models (LLMs) have opened up a world of possibilities in technology and products. For the last three months, we’ve been working hard to apply AI to the most important action users take in Amplitude: creating a chart.

We’re excited to be able to share both the feature and the technology behind it.

From question to insight

Answering questions and finding insights to drive decision making is the key value that behavioral data unlocks. And until now, our customers have had to construct charts step-by-step in the UI. Though this was already leaps and bounds easier than writing SQL, there were still many steps involved (what type of chart do I build, what events do I need, what properties or groups do I use, and so on).

We’re excited to announce that we’ve created an AI assistant to empower you in the chart-building process. You can now shortcut the “building” process and go straight from a question to an insight. Amplitude customers can try it out today.

Here are some questions it can help you answer:

- Which category has the highest rate of purchase after viewing a recommended item?

- What is the average length of sessions where users play at least five songs?

- How long does it take for companies on free vs paid plans to invite their first three users?

AI as an analytics assistant

The way we’ve built Ask Amplitude reflects one of our most important beliefs—it’s only through self-service exploration that you can get at the immense value that lies within product data. The roots of this belief go back to what we saw in many of our customers before they adopted Amplitude.

Data science and analytics teams were completely overwhelmed with requests, scrambling to put together SQL queries and dashboards, only to get three more questions for every one they answered. A single answer is never the end goal, just a stepping stone to developing a complete picture of customer behavior. To solve that, companies have turned to self-service analytics to equip employees to answer their own questions. As the adage goes, “Teach a man to fish, and you feed him for a lifetime.”

In that light, Ask Amplitude is not designed to be a black box question-answering AI. It’s designed to be an assistant supporting your journey of learning how to leverage product data.

When you ask a question like, “Which of my videos has the highest conversion rate from watching to subscribing on iOS?” the goal of Ask Amplitude is not just to tell you what the latest viral cat videos are. It’s to teach you how to build a funnel analysis, which events in your taxonomy represent watching videos and subscribing, and which properties contain the video and platform information. The resulting chart is a foundation of knowledge for you to build on and answer all sorts of follow-up questions on your own.

By using LLMs to frame analytics in the natural language of the user, even more people will take their first step toward unlocking the power of self-service product data. And at each step beyond that, Ask Amplitude will help them build more and more confidence in their understanding of that data.

Managing the explosion of content

One of the biggest risks of AI-generated content in any domain is the proliferation of low-quality noise drowning out the important signal. This is true of self-service analytics even without AI, and adding AI features without accounting for it could make that noise ten times worse. When there are ten different charts that all claim to represent the “Official Revenue KPI,” it’s a bug, not a feature.

We’re keenly aware of this problem and have built Ask Amplitude to explicitly mitigate it. Before trying to generate a new chart, the first thing we do is perform a semantic search of content already inside your Amplitude instance—created and curated by your colleagues—to find any potential matches. Semantic search is a big improvement over traditional search for users who may use different terms to refer to the same concepts, such as “hours of video streamed” versus “total watch time.”

We expect that, most of the time, users will find a relevant piece of content without having to create a new chart at all. This ensures that the content in Amplitude remains high-quality and users can learn from each other.
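The search-before-generate flow can be sketched in a few lines of Python. This is a minimal illustration, not the production implementation: the toy three-dimensional vectors stand in for real text embeddings, and `find_existing_chart`, `SAVED_CHARTS`, and the `min_score` threshold are all hypothetical names and values chosen for the example.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def find_existing_chart(question_vec, saved_charts, min_score=0.9):
    """Return the title of the closest saved chart, or None if nothing
    clears the (assumed) similarity threshold."""
    best_title, best_score = None, min_score
    for title, vec in saved_charts.items():
        score = cosine(question_vec, vec)
        if score >= best_score:
            best_title, best_score = title, score
    return best_title

# Toy embeddings of charts that colleagues have already saved.
SAVED_CHARTS = {
    "Total watch time": [0.9, 0.3, 0.1],
    "Weekly signups": [0.1, 0.2, 0.9],
}

# Pretend embedding of the question "hours of video streamed".
match = find_existing_chart([0.85, 0.35, 0.1], SAVED_CHARTS)
# Pretend embedding of a question unrelated to any saved chart.
no_match = find_existing_chart([0.0, 1.0, 0.0], SAVED_CHARTS)

print(match, no_match)
```

Only when the lookup returns `None` would a flow like this move on to generating a new chart.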

The future of Ask Amplitude

It’s incredible what advancements in AI and LLMs have enabled in such a short time, but we know Ask Amplitude is only the beginning of how this technology will impact the way we do analytics. We’re rethinking the entire experience of how our customers understand their product data from the ground up—and we could not be more excited about the possibilities.

If you’re an Amplitude customer, we invite you to join our AI Design Partner program to stay up-to-date on all of the latest innovations we’re testing out.

Building Ask Amplitude

Transparency is one of our core principles for building with AI, so we want to share as much as we can about the development process of Ask Amplitude and how it works under the hood.

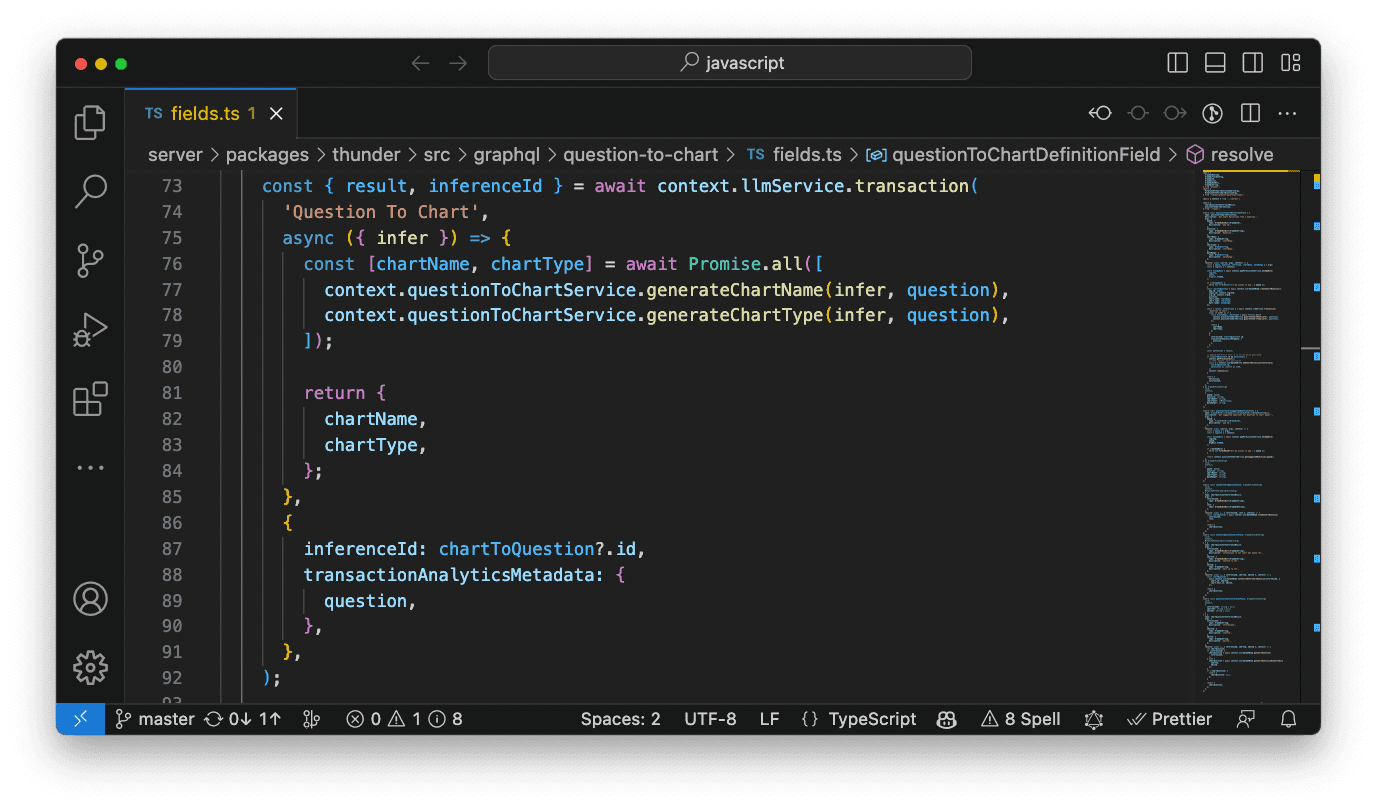

Amplitude has a custom query engine, which can be queried with JSON query definitions. Ask Amplitude uses a series of LLM prompts to convert a user’s question into a JSON definition that can be passed to our query engine and used to render a chart.

Our initial MVP looked entirely different from today’s version of the feature. As we began experimenting with LLMs, we hit multiple limitations that required re-architecting prompts, re-designing interactions, and changing how we framed the feature in the UI. Building real products on top of LLMs and the OpenAI APIs is a brand new discipline, and we’ve learned a lot from taking a hackathon idea to production.

We’re excited to share some of the key insights from this process and hope that they’ll be useful for anyone that’s starting out on their LLM journey.

Learning 1: Keep it simple

Our first attempt at building Ask Amplitude involved providing some example charts and events in the prompt as in-context learning. Then we directly asked for a chart definition that answers the user’s question.

This was promising at first, but quickly became impractical. We found that the LLM would hallucinate configuration keys and values and often generate invalid JSON. It would also misinterpret the question. The complexity of what we were asking it to do was too high, leaving ample room for error.

This is where we realized we could make use of a classic software engineering principle—KISS (keep it simple, stupid). LLMs, like humans, do better when given clear and specific tasks. So we broke down the process of creating a chart into the individual decisions a human needs to make, such as:

- What type of chart? (e.g. conversion funnel, segmentation, retention)

- Which events should be included?

- What time range should be analyzed?

- What filters should be applied?

- What “group bys” should be applied?

We then stitched together each of the individual answers in code to consistently get a valid JSON query definition. Simplifying the individual LLM tasks reduced hallucinations, made the results more consistent, enabled better recall, and enabled us to parallelize prompts—making generation much faster.
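To make the decompose-and-stitch idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions rather than Amplitude's actual code: `ask_llm` is a hypothetical stand-in that returns canned answers instead of calling a model, and the keys in the resulting dictionary are invented for the example and do not reflect the real query definition schema.

```python
import asyncio
import json

# One small, specific task per decision a human would make.
TASKS = ["chart_type", "events", "time_range", "filters", "group_by"]

async def ask_llm(task: str, question: str) -> object:
    """Hypothetical stand-in for a single-purpose prompt. A real version
    would call a model and validate the answer before returning it."""
    canned = {
        "chart_type": "funnel",
        "events": ["Watch Video", "Subscribe"],
        "time_range": "last_30_days",
        "filters": [{"property": "platform", "op": "is", "value": "iOS"}],
        "group_by": ["video_id"],
    }
    await asyncio.sleep(0)  # yield control, as a network call would
    return canned[task]

async def build_query(question: str) -> dict:
    # The sub-prompts are independent, so they can run concurrently.
    answers = await asyncio.gather(*(ask_llm(task, question) for task in TASKS))
    # The final structure is assembled in code, never by the model,
    # so it is always well-formed JSON.
    return dict(zip(TASKS, answers))

query = asyncio.run(build_query(
    "Which of my videos has the highest conversion rate "
    "from watching to subscribing on iOS?"
))
print(json.dumps(query, indent=2))
```

Because the model only ever fills in individual values, a bad answer to one sub-prompt can be retried on its own without regenerating the whole definition.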

Learning 2: Prompt engineering is an infinite task

It’s well-documented that OpenAI’s models are changing over time, even when using a consistent model version. This means it’s important to constantly monitor the response quality of our prompts and fix them when they break. We maintain source-of-truth unit tests, which help us catch issues that are introduced by underlying model changes and keep us sane as we iterate on prompts. This also gives a baseline quality assessment for what we expect Ask Amplitude to be able to do.
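A source-of-truth test suite for a prompt can be as simple as a list of questions with known-good answers and a pass rate. The sketch below assumes a hypothetical `classify_chart_type` function; here it is a keyword-based stand-in so the example runs on its own, and in practice it would wrap the real prompt. The golden cases and the 90% threshold are illustrative, not real figures.

```python
# Golden cases: (question, expected chart type). Illustrative examples only.
GOLDEN_CASES = [
    ("What is my signup to purchase conversion rate?", "funnel"),
    ("How many users come back after their first week?", "retention"),
    ("How many users played a song each day?", "segmentation"),
]

def classify_chart_type(question: str) -> str:
    """Stand-in for the prompt under test; swap in the model call here."""
    q = question.lower()
    if "conversion" in q:
        return "funnel"
    if "come back" in q or "retention" in q:
        return "retention"
    return "segmentation"

def pass_rate(classify, cases) -> float:
    """Fraction of golden cases the classifier answers correctly."""
    hits = sum(1 for question, expected in cases if classify(question) == expected)
    return hits / len(cases)

rate = pass_rate(classify_chart_type, GOLDEN_CASES)
# Fail loudly if quality drops below the agreed baseline (assumed: 90%).
assert rate >= 0.9, f"prompt regression: pass rate fell to {rate:.0%}"
print(f"pass rate: {rate:.0%}")
```

Running a suite like this on a schedule, and not only when a prompt changes, is what catches drift in the underlying model.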

Even if you’re using a stable model, prompt engineering is a game of marginal gains—1% improvements to individual prompts can have a big impact on output quality—meaning there’s always a bit of juice left to squeeze, and it's difficult to know when to stop. Like any feature, we’re continuously iterating and improving the question-answering capabilities in both breadth and depth.

Learning 3: Prompt engineering is a team sport

Prompt engineering isn’t just about tweaking prompt text. It’s also about feature engineering, input data processing, and prompt architecture. To minimize hallucinations and improve accuracy, we do significant pre-processing to decide what data to use for in-context learning. We end up relying on a lot of traditional ML techniques to prioritize data.

Tweaking and tuning the text of prompts also requires a lot of domain knowledge (as the saying goes, if you can’t explain it to a six-year-old, you don’t understand it yourself). Getting data from a database into a prompt, meanwhile, is not an ML task but a software engineering one. These skill sets often don’t overlap, so prompt engineering becomes a highly collaborative effort between domain experts, data experts, prompting specialists, and product engineers.

We built a tool to help with this—it enables non-technical people to edit and iterate on prompts without editing code (more on this later).

A good example of collaboration on prompts is event selection. The obvious idea of simply listing all available events in the prompt and asking “Which events are most relevant to this question?” hit limits very quickly. Our customers often have hundreds or even thousands of events, which led to hallucinations and poor recall, and ran into context window limits. We needed a way to pre-filter the events provided in context down to a more manageable number, around 10 or so.

Fortunately, we’ve been maintaining embeddings for events for a while as a way to power some of our other AI features (like Data Assistant). We realized we could reuse these to select the most semantically relevant events before handing them off to the LLM. This reduced context length and improved recall, cost, and speed.
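The pre-filtering step amounts to ranking events by similarity to the question and keeping the top few. Here is a minimal sketch, assuming the embeddings already exist: the three-dimensional vectors are toy values, and `top_k_events` and `EVENT_VECS` are hypothetical names. Real embeddings have hundreds or thousands of dimensions and would normally live in a vector index rather than a dictionary.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k_events(question_vec, event_vecs, k=10):
    """Return the names of the k events most similar to the question."""
    ranked = sorted(
        event_vecs,
        key=lambda name: cosine(question_vec, event_vecs[name]),
        reverse=True,
    )
    return ranked[:k]

# Toy embeddings for a handful of events in a taxonomy.
EVENT_VECS = {
    "Watch Video": [0.9, 0.1, 0.0],
    "Subscribe": [0.7, 0.6, 0.1],
    "Update Billing Info": [0.0, 0.2, 0.9],
    "Open Settings": [0.1, 0.0, 0.8],
}

# Pretend embedding of a question about watching and subscribing.
question_vec = [0.8, 0.4, 0.0]
shortlist = top_k_events(question_vec, EVENT_VECS, k=2)
print(shortlist)
```

Only the shortlist is then placed in the prompt, which keeps the context small no matter how many events a customer has defined.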

Learning 4: The Hierarchy of LLMs

LLMs are great for a whole host of tasks, but they are relatively slow and expensive for certain problems. We’ve found that many tasks one might reach for an LLM to solve can be handled much faster and cheaper using embeddings and vector search.

As we’ve built out language features, our first port of call is text embedding search. If we’re not able to get good enough results, we add a layer of GPT-3.5. If this still doesn’t work, we fall back to the most powerful model, GPT-4, which we reserve for the most complex tasks.

This ordering reflects a tradeoff between accuracy, cost, and speed. Embeddings are by far the fastest and cheapest to operate in production, but LLMs are typically able to make better final selections.
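One way to express this hierarchy in code is as an ordered list of tiers, each of which either answers or declines so the next, more expensive tier can try. The sketch below is an assumption-laden illustration: `answer_with_cascade` and the three tier functions are hypothetical, and the tiers are simple stand-ins that make no real embedding or model calls.

```python
from typing import Callable, List, Optional, Tuple

# A tier takes a question and returns an answer, or None when it is not
# confident enough and the next tier should be tried.
Tier = Callable[[str], Optional[str]]

def answer_with_cascade(question: str, tiers: List[Tuple[str, Tier]]) -> Tuple[str, str]:
    """Try each tier from cheapest to most expensive; return the name of
    the tier that answered together with its answer."""
    for name, tier in tiers:
        result = tier(question)
        if result is not None:
            return name, result
    raise LookupError("no tier produced an answer")

def embedding_search(question: str) -> Optional[str]:
    # Stand-in for a vector lookup: only resolves phrases it already knows.
    known = {"total watch time": "Hours of Video Streamed"}
    return known.get(question.lower())

def small_model(question: str) -> Optional[str]:
    # Stand-in for a cheaper LLM: handles one easy pattern, else declines.
    return "Subscribe" if "subscrib" in question.lower() else None

def large_model(question: str) -> Optional[str]:
    # Stand-in for the most capable model: always produces an answer.
    return "Any Active Event"

TIERS = [
    ("embeddings", embedding_search),
    ("small_llm", small_model),
    ("large_llm", large_model),
]

for q in ["Total watch time", "Who is subscribing?", "Something unusual"]:
    print(q, "->", answer_with_cascade(q, TIERS))
```

How a tier decides that its answer is "good enough" is the hard part in practice; in this sketch it is reduced to returning `None`.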

The tools we made along the way

Inference wrapper

Knowing that Generative AI is going to be used by lots of different teams within the Amplitude organization, we built everything to be reused and shared in all sorts of different contexts and products.

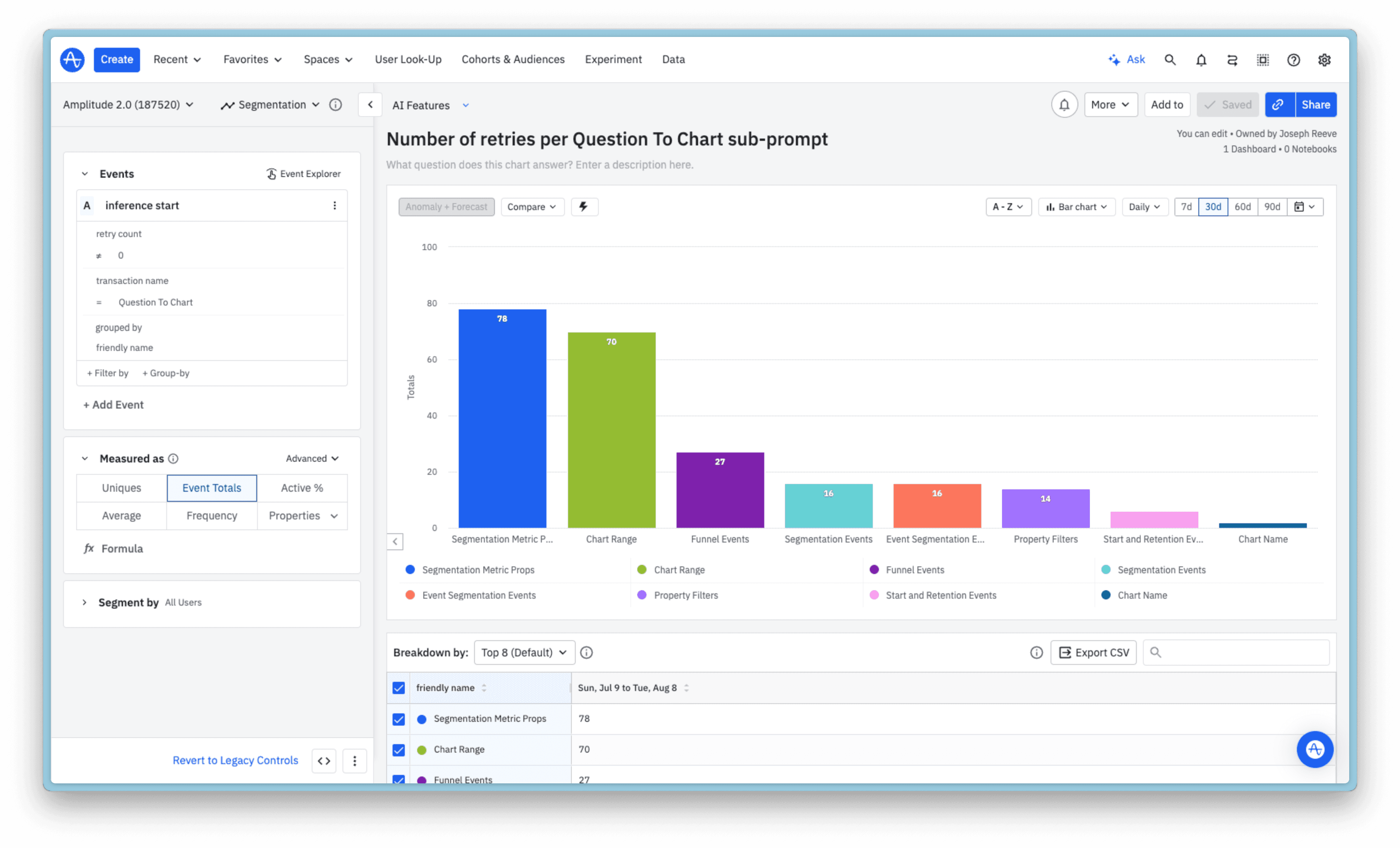

An important job of LLMs in Ask Amplitude is selecting values from a list. Some percentage of the time, the models hallucinate invalid values or response formats, so we needed a way to validate responses and retry on invalid ones. Our inference wrapper supports validating data types, enums, and even JSON. If validation fails, the wrapper will retry up to the specified number of times before throwing an error.
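The validate-and-retry behavior can be sketched as a small generic function. This is a minimal illustration and not the real wrapper's API: `infer`, `one_of`, `json_object`, and `InferenceError` are hypothetical names, and `call_model` is any function that takes a prompt and returns raw text (here a fake that replays canned responses).

```python
import json
from typing import Any, Callable, Iterable

class InferenceError(Exception):
    """Raised when no valid response is obtained within the retry budget."""

def infer(call_model: Callable[[str], str], prompt: str,
          validate: Callable[[str], Any], max_retries: int = 2) -> Any:
    """Call the model, validate the raw response, and retry on failure."""
    last_error = None
    for _ in range(max_retries + 1):
        raw = call_model(prompt)
        try:
            return validate(raw)
        except ValueError as err:  # validators signal bad output this way
            last_error = err
    raise InferenceError(
        f"no valid response after {max_retries + 1} attempts: {last_error}"
    )

def one_of(allowed: Iterable[str]) -> Callable[[str], str]:
    """Build a validator that accepts only values from an enum-like list."""
    allowed_set = set(allowed)
    def validate(raw: str) -> str:
        value = raw.strip()
        if value not in allowed_set:
            raise ValueError(f"{value!r} is not an allowed value")
        return value
    return validate

def json_object(raw: str) -> dict:
    """Validator that accepts only a JSON object."""
    parsed = json.loads(raw)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(parsed, dict):
        raise ValueError("expected a JSON object")
    return parsed

# Fake model: hallucinates an invalid value first, then answers correctly.
responses = iter(["pie chart", "funnel"])
chart_type = infer(
    lambda prompt: next(responses),
    "Pick a chart type for this question.",
    one_of(["funnel", "segmentation", "retention"]),
)
print(chart_type)
```

Keeping validators as plain functions means the same retry loop serves enums, data types, and JSON alike.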

The wrapper logs all sorts of data about inferences into Amplitude, so we can track trends and prioritize our prompt engineering efforts.

Logging inferences is also important because in the future, we will want to fine-tune foundational models on each prompt so we can build models specific to each Ask Amplitude sub-prompt.

Given there are multiple LLM-powered features within the Amplitude platform and in development, many of which have multiple constituent sub-prompts, it’s important that we’re able to group inferences to a feature. Our internal LLM service exposes the concept of inference transactions—groups of inferences that are triggered by a single user action, but require different context and prompts.
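An inference transaction can be modeled as a shared ID attached to every inference that a single user action triggers. The sketch below is hypothetical throughout: the `InferenceTransaction` class, its field names, and the model labels are invented for illustration, and the records are kept in a list rather than sent to any logging backend.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class InferenceTransaction:
    """Groups the inferences triggered by one user action under one ID."""
    feature: str
    transaction_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    records: List[Dict] = field(default_factory=list)

    def log(self, prompt_name: str, model: str, valid: bool) -> None:
        # Every record carries the transaction ID and feature name, so
        # sub-prompts can later be grouped back to the originating action.
        self.records.append({
            "transaction_id": self.transaction_id,
            "feature": self.feature,
            "prompt": prompt_name,
            "model": model,
            "valid": valid,
        })

# One user question fans out into several sub-prompts.
txn = InferenceTransaction(feature="ask_amplitude")
txn.log("chart_type", model="small-model", valid=True)
txn.log("event_selection", model="large-model", valid=False)
txn.log("event_selection", model="large-model", valid=True)  # the retry

for record in txn.records:
    print(record)
```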

Prompt engineering UI

As mentioned above, prompt engineering is a collaborative discipline. It requires domain knowledge AND technical expertise to provide relevant data for in-context learning and to teach the LLM about Amplitude. That’s why we built an internal tool on top of Amplitude Experiment that allows anyone at Amplitude (e.g. designers or product managers) to iterate on and test prompts in production without affecting our customers.

In the future, we plan to use this tool for A/B testing prompt variations or even LLM-powered self-improving tests using multi-armed-bandit style experimentation.
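For readers unfamiliar with multi-armed bandits, the simplest variant is epsilon-greedy: usually serve the prompt with the best observed success rate, and occasionally serve a random one to keep exploring. The sketch below illustrates that general technique only. It says nothing about how such a system would actually be built on Amplitude Experiment, and the class name, variant names, and reward data are all hypothetical.

```python
import random

class EpsilonGreedyPrompts:
    """Pick between prompt variants using an epsilon-greedy strategy."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.stats = {v: {"trials": 0, "successes": 0} for v in variants}

    def success_rate(self, variant):
        s = self.stats[variant]
        return s["successes"] / s["trials"] if s["trials"] else 0.0

    def choose(self):
        # Explore with probability epsilon, otherwise exploit the best so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.stats))
        return max(self.stats, key=self.success_rate)

    def record(self, variant, success):
        s = self.stats[variant]
        s["trials"] += 1
        s["successes"] += int(success)

# epsilon=0.0 disables exploration so this example is deterministic.
bandit = EpsilonGreedyPrompts(["prompt_a", "prompt_b"], epsilon=0.0)
for ok in [True, False, False]:
    bandit.record("prompt_a", ok)
for ok in [True, True, False]:
    bandit.record("prompt_b", ok)

best = bandit.choose()
print(best)
```

What counts as a "success" (a valid response, a chart the user keeps, and so on) is the key design decision, and it is left open here.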

And, we’re hiring

At Amplitude, we want to bring the power of AI and LLMs to everyone who builds products. That starts with features like Ask Amplitude, which help our customers ask questions and learn more quickly from their product data, and will eventually extend to a whole suite of product infrastructure and tools that make building AI products easy.

If you’re passionate about using the latest in AI technology to solve real problems and help companies build better products, then we’d love to hear from you.

Joseph Reeve

Former Software Engineering Manager, Amplitude

Joseph Reeve is a former engineering manager at Amplitude. He has built and launched digital products for enterprises, startups, and charities for nearly a decade. He serves as a consultant and is the founder of Gived, an R&D design and development agency focused on projects that improve the world.
