Auto-track is [Still] Bad!

Every few years the auto-track debate rears its ugly head and needs to be shut down

Editor's Note

Since publishing this blog post, we’ve changed our stance on Autocapture. We believe today’s analytics tools are too difficult to get started with, use, and scale. On our mission to make analytics effortless, we’re introducing Autocapture as a way for teams to get their analytics implementation started much more quickly. As their needs evolve, they can use precision tracking for more sophisticated use cases and long-term data collection. Amplitude is a flexible platform that’s easy to use no matter your technical expertise. Read more about why we changed our tune and how we think Autocapture can be better.

Many years ago, I was at a digital analytics conference and visited a booth for a new marketing vendor that was pitching a “no-tagging” option for digital marketing analytics. I was intrigued because I had spent much of my professional career writing about digital analytics solution architecture and tagging. After hearing their pitch, I could see the allure of what they were offering. Imagine being able to “auto-track” all of the data you might need from your customers without spending time on solution architecture and design, or begging developers to build a data layer and set analytics events and properties. Who wouldn’t want that?

But as I thought about it, I began to realize what a terrible idea this would be! In the early days of digital analytics, it was commonplace to collect data by “DOM scraping” web pages. DOM scraping allowed you to grab data from HTML tags and stick it into analytics variables. This method was quick, but also extremely fragile: any change to the page markup could break data collection. DOM scraping was soon replaced by data layers and tag management systems, which took more time to implement but were much less fragile (for a good history of this transition, read this). Using auto-track approaches to tagging is a step backwards to the DOM scraping days and brings back much of that fragility.
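The contrast between DOM scraping and a data layer can be sketched in a few lines of TypeScript. This is a minimal illustration under stated assumptions: a toy page model stands in for the browser DOM, and `scrapeOrderTotal`, `renderCheckout`, `dataLayer`, and the `order-total` class are hypothetical names, not any vendor’s API. The scraping rule depends on a CSS class that a developer can rename at any time, while the data-layer value is an explicit contract that survives markup changes.

```typescript
// Toy stand-in for a DOM element (hypothetical): CSS classes plus visible text.
interface ToyElement {
  classes: string[];
  text: string;
}

// DOM scraping: find the element by CSS class and parse its visible text.
// Returns undefined when the selector no longer matches anything.
function scrapeOrderTotal(page: ToyElement[]): number | undefined {
  const el = page.find((e) => e.classes.includes("order-total"));
  if (el === undefined) return undefined;
  const value = Number.parseFloat(el.text.replace(/[^0-9.]/g, ""));
  return Number.isNaN(value) ? undefined : value;
}

// Data layer (hypothetical shape): developers push an explicit, named value
// for analytics, independent of how the page is styled or laid out.
interface DataLayerEvent {
  event: string;
  orderTotal: number;
}
const dataLayer: DataLayerEvent[] = [];

// Renders a toy checkout page and records the order in the data layer.
function renderCheckout(total: number, totalClass: string): ToyElement[] {
  dataLayer.push({ event: "Order Completed", orderTotal: total });
  return [{ classes: [totalClass], text: `$${total.toFixed(2)}` }];
}

const before = renderCheckout(42.5, "order-total");
const after = renderCheckout(42.5, "checkout-total"); // a redesign renames the class

console.log(scrapeOrderTotal(before)); // 42.5
console.log(scrapeOrderTotal(after)); // undefined: the scraping rule silently broke
console.log(dataLayer.map((e) => e.orderTotal)); // [ 42.5, 42.5 ]
```

Note that the scraping rule fails silently: it returns nothing rather than raising an error, which is why this kind of breakage can go unnoticed until someone looks closely at a report.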

But every few years, there is a renewed push to get organizations to reconsider auto-track or “no-tagging” solutions, and many who weren’t around to experience their flaws fall for them and repeat the mistakes of the past. Unless you are building a digital property that will only be used for a few weeks or months, I cannot think of any situation in which I would advise an organization to use an auto-track approach for digital analytics. Here is a short summary of the reasons to avoid these solutions:

- Too Much Data – Auto-track products inherently collect too much data and make it difficult to find the meaningful data you need to be successful.

- Bad Data – Auto-track products make it easy to collect data, but much of that data becomes bad or unusable unless someone cleans up the event definitions and CSS matching rules each time developers make changes to websites or apps. A simple name change by a developer can throw off some of your key metrics until the new name is fixed or rationalized. I have learned that getting adoption for digital analytics is difficult even when you do a great job of ensuring that the data you collect is accurate. Imagine trying to make it successful when your critical data points are incorrect for periods of time until they are fixed. In many organizations, people are looking for excuses to “trust their gut” instead of using data, and poor data quality gives them one.

- Doesn’t Save Time – As one of our co-founders, Jeffrey Wang, says: “Auto-tracking doesn’t eliminate work. It shifts work to a less scalable process.” Auto-track saves time for those who would otherwise have to put forethought into what they want to track on websites and apps, but it creates more work for the analysts, data quality resources, or product managers who then have to obsess about tagging. So if people at your organization are pushing for an auto-track solution, their motivation may be that it will save them time. Or they may not believe the organization is using data to drive better outcomes anyway, so they simply want the path of least resistance.

- Security/Privacy Issues – Auto-track products can accidentally capture sensitive or private data that is not meant to be collected (feel free to Google “autotrack passwords” for more info). This is increasingly dangerous as regulations like GDPR and CCPA impose fines on organizations for the mistreatment of personal data.

Many of these concerns were outlined years ago by Jeffrey Wang, one of our Amplitude co-founders, when he explained why Amplitude purposely didn’t add auto-track to our product. Even organizations that Amplitude competes with have agreed that auto-track is a bad strategy.

The Levels of Data

I recently had the pleasure of listening in on a call about auto-track with my colleague John Cutler. On the call, a prospect was comparing Amplitude to a competitor, and one of the big differences was auto-track, which the prospect thought might help them. John explained that there are essentially three levels of data when it comes to digital analytics:

- Level 0 – These are the most critical data points for your organization. They will never change unless your organization makes a massive pivot into a new area or business model. For example, a B2B campaign management product would almost certainly have a Campaign Created event with a set of fairly stable properties.

- Level 1 – These are data points that will be useful for the mid-term. They are likely useful for the next year or two, but there is a chance that they might change as the website/app changes. Continuing the B2B example, this might include tracking onboarding video starts and completions. Right now, the campaign creation flow shows videos to new customers, but a year from now, videos may be removed if they aren’t proven to increase campaign creation rates.

- Level 2 – These are data points that are more transitory and often very detailed. They may only be around for a few weeks or a few months. An example might be tracking clicks on a specific link, toggle, or button on a form. It is interesting to someone right now, but it doesn’t add much value: the element will likely disappear in a few weeks, or its tagging can stop once the insight it provided is understood, since that insight is unlikely to change dramatically.
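The three levels can also be recorded directly in a tracking plan. The sketch below assumes a hypothetical plan format for the B2B campaign example; the `PlannedEvent` shape, the property names, and the `Schedule Toggle Clicked` event are illustrative, and the lifetime descriptions simply restate the level definitions above.

```typescript
// Levels as defined above: 0 = critical and stable, 1 = mid-term, 2 = transitory.
type Level = 0 | 1 | 2;

// Hypothetical shape for one entry in a tracking plan.
interface PlannedEvent {
  name: string;
  level: Level;
  properties: string[];
}

// Expected lifetime of each level, restated from the definitions above.
const levelLifetime: Record<Level, string> = {
  0: "stable unless the business pivots",
  1: "roughly a year or two",
  2: "a few weeks or months",
};

// Hypothetical tracking plan for the B2B campaign management example.
const trackingPlan: PlannedEvent[] = [
  { name: "Campaign Created", level: 0, properties: ["campaignType", "channel"] },
  { name: "Onboarding Video Started", level: 1, properties: ["videoId"] },
  { name: "Onboarding Video Completed", level: 1, properties: ["videoId"] },
  { name: "Schedule Toggle Clicked", level: 2, properties: ["toggleState"] },
];

// Return the names of all planned events at a given level.
function eventsByLevel(plan: PlannedEvent[], level: Level): string[] {
  return plan.filter((e) => e.level === level).map((e) => e.name);
}

const levels: Level[] = [0, 1, 2];
for (const level of levels) {
  console.log(`Level ${level} (${levelLifetime[level]}):`, eventsByLevel(trackingPlan, level));
}
```

Tagging each planned event with its level makes it easier to focus review effort on Level 0 and Level 1 items and to retire Level 2 events once they have served their purpose.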

For most organizations, there will be relatively few Level 0 events and properties, many more Level 1 items, and potentially hundreds of Level 2 items. Most of your time should be spent on the Level 0 and Level 1 items. Level 2 items should be added naturally once tagging is integrated into the development process. This concept matters because much of the case for auto-track solutions rests on how much work is needed to proactively implement analytics tagging. But once you realize that you can answer 80% of your analytics needs by tagging a relatively small number of data points, the time savings argument (which is false anyway) simply evaporates.

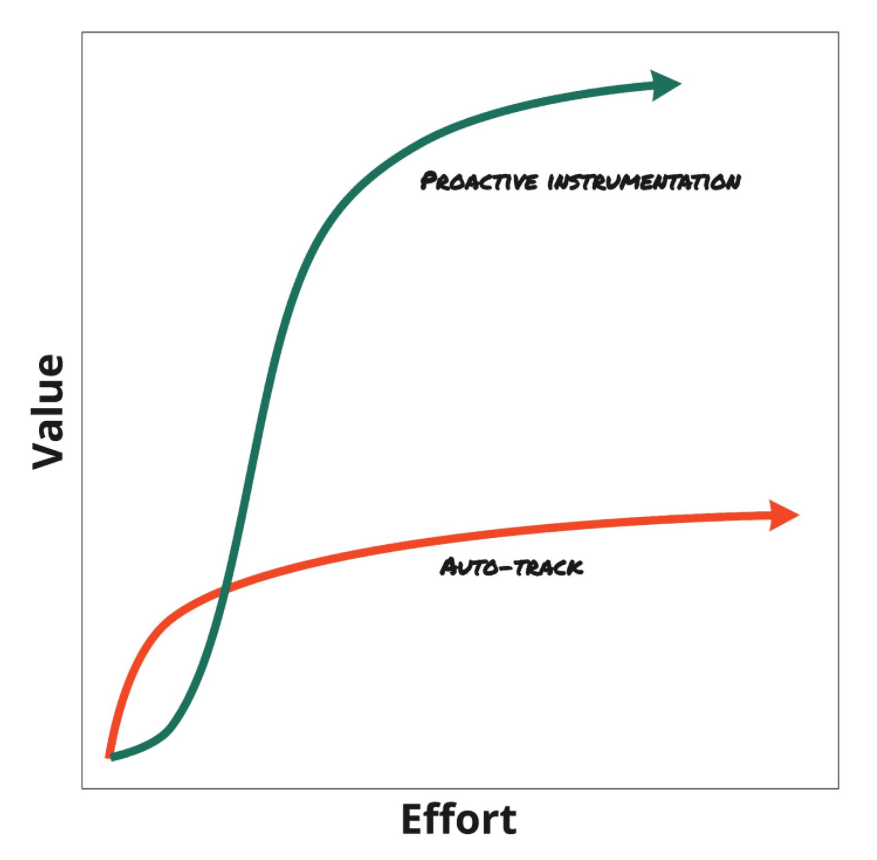

In John’s discussion, he drew this great diagram comparing the effort and value of auto-track with a more traditional, proactive tagging implementation. As you can see, the auto-track solution begins by delivering more value for less effort, but soon yields less and less incremental value per unit of effort. The traditional approach, by contrast, takes slightly more effort initially but delivers more value per unit of effort as time goes on.

I have long advocated for organizations identifying the business questions they want to answer and mapping those business questions to a solution architecture before tagging takes place. I believe that it is worth the time up-front to identify what data you plan to collect and why instead of tracking data and then trying to figure out how it can be used. Like most things in life, you get out of it what you put into it. So don’t fall for the trap of auto-track solutions and recognize that success in digital analytics is a marathon, not a sprint.

What if I Forgot Something?

In addition to the supposed time savings, another argument I hear made for auto-track is around the omission or forgetting of required data. It usually goes something like this: “There is no way I can anticipate all of the data I am going to need through requirements gathering, so I need an auto-track solution to collect all of my data in case there is some business question that arises that I didn’t anticipate up-front…”

Of course, there will be cases in which something arises and you are missing data that you wish you had to make a business decision. No matter how good you are at business requirements gathering, you cannot anticipate every event and property that will be needed. But if you think back to the Level 0, Level 1 and Level 2 items discussed above, I have found it very rare that a missed item is a Level 0 item, since those represent the most critical data points for your organization. Level 0 items should be pretty obvious to your organization. You may discover some Level 1 items that you missed, but in most cases you can add them and wait a few weeks to collect enough data to answer your business question. If a question were so important that it needed to be answered in 24 hours, it should have come up during requirements gathering. Level 2 items matter even less in the grand scheme of things. Many of the items you are missing are likely Level 2 items, either because they aren’t critical or because they represent new things that weren’t present during requirements gathering. In most cases, it is OK to add some new tags and wait a few days or weeks to get this missing data.

Culture

The last thing I’d like to mention on the topic of auto-track is the area of corporate culture. As is often the case, technology decisions tell you a lot about the culture of an organization and teams within the organization. When I see analytics teams that are looking at auto-track solutions, here are some of the thoughts that swirl in my head:

- Why is it so difficult for them to plan their implementation ahead of time? Sometimes the desire for an auto-track solution is masking the fact that the analytics team doesn’t really know what the business needs. Maybe they need to spend more time with their internal stakeholders instead of looking for a product that will enable them to track everything just in case.

- Why is it so difficult for them to get implementation resources? If an analytics team is doing a good job, they should be perceived to be critical and strategic to the organization. Analytics done right helps organizations make money or save money, so why would an organization not devote resources to implementation efforts? Maybe the auto-track solution makes it easier for the analytics team to avoid the fact that the organization doesn’t value their work.

- Will a new analytics platform solve their problems? Sometimes switching from one analytics solution to another seems like a great way to wipe the slate clean and start fresh, but if your organization has inherent cultural issues that have caused the current solution to be unsuccessful, it may be advisable to fix those issues before trying a new vendor. Failure to do so can result in having the same issues repeated with a new tool.

Final Thoughts

While I hope that this post is ultimately unnecessary because organizations have learned their lesson about auto-track products, sometimes it is important to remind ourselves of what we have learned in the past so history doesn’t repeat itself. If your organization is being pressured to look at an auto-track solution, I urge you to consider the potential issues raised above, the different levels of data, the benefits of up-front planning, and the underlying cultural aspects that might be driving the decision.

Adam Greco

Former Product Evangelist, Amplitude

Adam Greco is one of the leading voices in the digital analytics industry. Over the past 20 years, Adam has advised hundreds of organizations on analytics best practices and has authored over 300 blog posts and one book related to analytics. Adam is a frequent speaker at analytics conferences and has served on the board of the Digital Analytics Association.
