How Data Lifecycle Management Applies to Product Analytics

Understanding how data lifecycle management relates to product analytics will lead to cleaner data and better insights.

Many organizations collect data, but not all effectively manage it throughout its lifecycle. According to one McKinsey & Company report, organizations are still managing data “with ad hoc initiatives and one-off actions, rather than through long-term strategic adjustments that are required for sustainable success in an evolving business environment.” And ad hoc data lifecycle management can cause problems for your product analytics.

Well-organized data is essential for discovering actionable insights from product analytics. With intentional data lifecycle management, you ensure that your data remains clean and usable, so your teams can make data-informed decisions that improve your bottom line now and well into the future.

What Is Data Lifecycle Management and Why Does It Matter?

Data lifecycle management (DLM) is the practice of monitoring and organizing data from the moment it is collected until it is retired. It takes a comprehensive approach to managing an organization's data: recognizing what's relevant and proactively updating processes as needs change.

Effective data lifecycle management has benefits that reach all areas of the business:

- Efficient access to data: Too much data creates too much noise and not enough signal. With effective DLM, teams create a plan for which data they want to track and ingest, so the data they need is easy to find.

- Cost savings: Effective data lifecycle management allows organizations to reduce the expenses associated with fixing mistakes in data storage and collection.

5 Data Lifecycle Management Steps in Product Analytics

The process of data lifecycle management can be broken down into five overall steps, which, when done well, provide clean data everyone can use to surface valuable insights.

1. Plan and Instrument Your Data

The first and most important step of product analytics DLM is choosing what data to send to your product analytics platform. Being clear and consistent about which events and properties are important and what those events and properties are called will set the stage for a healthy data lifecycle.

Choosing the right data to track involves creating a tracking plan and a data taxonomy. Your tracking plan is documentation about which events and properties you plan to track, the business objectives behind those events and properties, and where all of this data will end up. Your data taxonomy is your methodology behind how you name the events and properties you track.

Together, your tracking plan and data taxonomy ensure everyone is aligned around what data is being collected, why, where to find it, and how to refer to it. One important note at this stage is that you must resist the urge to track everything immediately. Start your tracking plan and taxonomy with what you know is important, and move from there.
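To make the tracking plan and taxonomy concrete, here is a minimal Python sketch. It assumes a hypothetical naming convention (event names as "Object Action" in Title Case, property names in snake_case) and hypothetical events; it is an illustration of the idea, not a format that Amplitude or any other tool prescribes.

```python
import re
from dataclasses import dataclass, field

# Hypothetical taxonomy: events are "Object Action" in Title Case
# (e.g. "Song Played"); properties are snake_case (e.g. "song_id").
EVENT_NAME = re.compile(r"^[A-Z][a-z]+( [A-Z][a-z]+)+$")
PROPERTY_NAME = re.compile(r"^[a-z]+(_[a-z]+)*$")


@dataclass
class TrackedEvent:
    """One entry in the tracking plan."""
    name: str
    business_objective: str  # why we track this event
    destination: str         # where the data ends up
    properties: list[str] = field(default_factory=list)


def taxonomy_violations(plan: list[TrackedEvent]) -> list[str]:
    """Return one message for every name that breaks the naming convention."""
    problems = []
    for event in plan:
        if not EVENT_NAME.match(event.name):
            problems.append(f"event name: {event.name!r}")
        for prop in event.properties:
            if not PROPERTY_NAME.match(prop):
                problems.append(f"property name on {event.name!r}: {prop!r}")
    return problems


# Start small: only the events you already know are important.
tracking_plan = [
    TrackedEvent("Song Played", "Measure core engagement", "Amplitude", ["song_id", "genre"]),
    TrackedEvent("signup_complete", "Measure activation", "Amplitude", ["plan_Type"]),
]

for problem in taxonomy_violations(tracking_plan):
    print(problem)
```

Catching naming drift in the plan itself, before any code ships, is cheaper than renaming events after data has already been collected under two different names.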

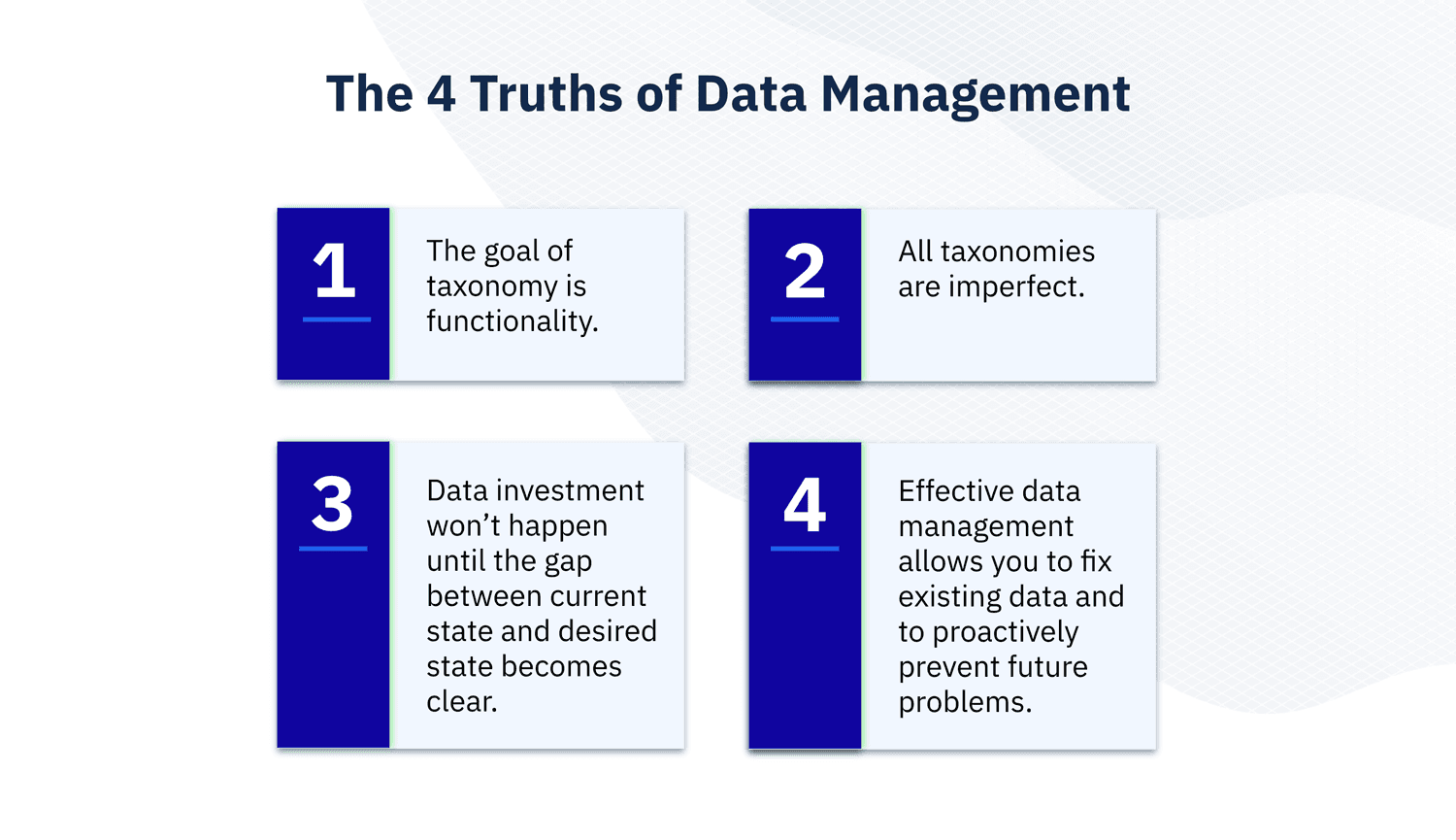

Defining what data to track can be a lot to consider, and you likely won’t figure it all out at once. Throughout this first step, it’s important to keep in mind the four truths of data management for product analytics:

We have a Data Taxonomy Playbook that walks you through all of these steps to ensure you’re collecting good, clean data from the start.

Once your plan is in place, you can start instrumenting. Instrumentation is the initial process of setting up your product analytics platform: defining your event and user properties and connecting the platform to where you store your data.
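During instrumentation, it can help to check each outgoing event against the tracking plan before it is sent. The sketch below is a hypothetical guard: `TRACKING_PLAN`, `check_event`, and the event shape are assumptions for illustration, and the actual send would still go through your analytics SDK or CDP.

```python
# Hypothetical tracking plan: event name -> allowed event properties.
TRACKING_PLAN: dict[str, set[str]] = {
    "Song Played": {"song_id", "genre"},
    "Playlist Created": {"playlist_id"},
}


def check_event(event_type: str, event_properties: dict) -> list[str]:
    """List the ways an outgoing event deviates from the tracking plan."""
    if event_type not in TRACKING_PLAN:
        return [f"untracked event: {event_type}"]
    unexpected = set(event_properties) - TRACKING_PLAN[event_type]
    return [f"unexpected property: {name}" for name in sorted(unexpected)]


print(check_event("Song Played", {"song_id": "s_123", "genre": "jazz"}))  # []
print(check_event("Song Played", {"song_id": "s_123", "mood": "calm"}))   # ['unexpected property: mood']
print(check_event("Song Skipped", {}))                                     # ['untracked event: Song Skipped']
```

Running a check like this in your test environment surfaces events that were never planned before they reach the data you analyze.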

2. Store the Data

With your plan for collecting data in place, you need to send and store all that data in a secure place. You can store data in a variety of tools—databases, data warehouses, data lakes, data management platforms (DMPs), customer data platforms (CDPs)—each with its own specialty.

For the purposes of getting your data lifecycle off the ground, all you need is a DMP or CDP. In the past, there was a clear distinction between these two tools, with DMPs generally dealing with second- and third-party data and CDPs generally dealing with first-party data. Nowadays, the two tools have (for the most part) merged into one common purpose: to collect, store, and organize a variety of customer data.

DMPs/CDPs like Segment, Tealium, and mParticle excel at organization, with features that make it easy to clean and standardize all of your customer data from one central location. From there, the data you’ve carefully planned, stored, organized, and cleaned is ready for analysis.
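Each CDP has its own transformation features, and the Python below is not any vendor's API. It is a rough sketch of the kind of cleanup those features perform: mapping legacy event names to a canonical name, normalizing property keys, and dropping duplicate deliveries. The alias map, field names, and the rule that the same user, event, and timestamp count as a duplicate are all simplifying assumptions.

```python
# Hypothetical aliases left over from older instrumentation.
EVENT_ALIASES = {
    "song_played": "Song Played",
    "play_song": "Song Played",
}


def standardize(raw_events: list[dict]) -> list[dict]:
    """Rename legacy events, normalize property keys, and drop duplicates."""
    seen = set()
    clean = []
    for raw in raw_events:
        name = EVENT_ALIASES.get(raw["event_type"], raw["event_type"])
        # "Song ID" -> "song_id"
        props = {
            key.strip().lower().replace(" ", "_"): value
            for key, value in raw.get("event_properties", {}).items()
        }
        # Assumption: same user, event, and timestamp means a repeated delivery.
        fingerprint = (raw["user_id"], name, raw["time"])
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        clean.append({"user_id": raw["user_id"], "event_type": name,
                      "time": raw["time"], "event_properties": props})
    return clean


events = [
    {"user_id": "u1", "event_type": "play_song", "time": 1000, "event_properties": {"Song ID": "s1"}},
    {"user_id": "u1", "event_type": "Song Played", "time": 1000, "event_properties": {"song_id": "s1"}},
    {"user_id": "u2", "event_type": "song_played", "time": 2000},
]
print(standardize(events))
```

Here the first two raw events collapse into one "Song Played" event, and all three legacy names end up under the single name your taxonomy defines.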

3. Send the Data to Your Product Analytics Platform

Now your data is ready for your product analytics platform, which makes all this data you’ve been collecting and organizing usable for people across your organization.

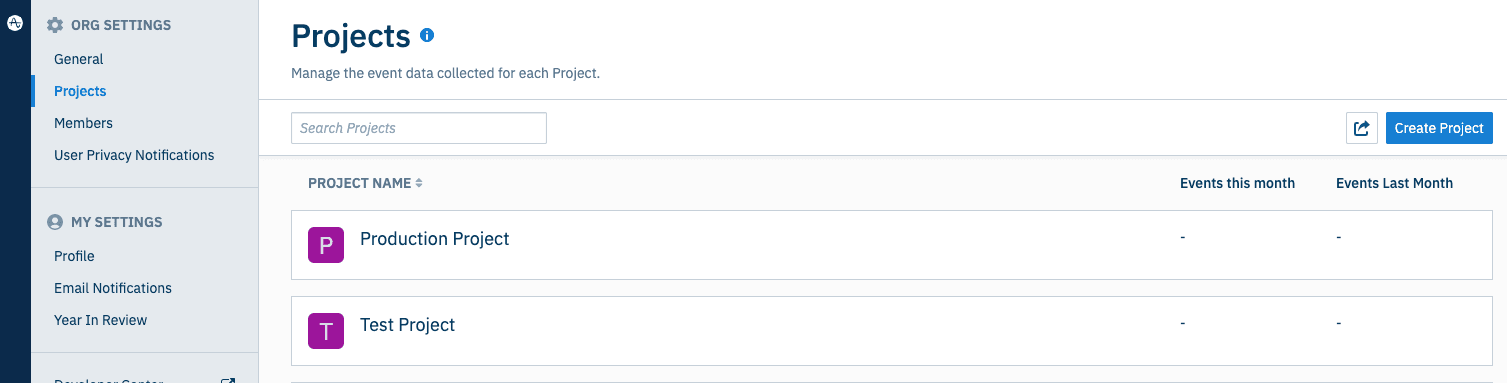

In Amplitude, when you’re ready to start analyzing your data, you need to create a project, which connects to your data source, be it Segment, mParticle, or another source via API or SDK. We highly recommend creating a test project and a production project. Here is a screenshot of those to projects side by side:

Your test project is where you test your instrumentation and ensure the data you’re using is accurate, presented how you want it to be, and usable. Your production project is where you’ll actually do the analysis. Keeping the testing process outside of the production project allows you to edit data and try things out without messing up the clean production environment that good analysis requires.
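One common way to keep test traffic out of the production project is to choose the project's API key from the deployment environment. This is a minimal sketch; the environment variable names (`APP_ENV`, `AMPLITUDE_TEST_API_KEY`, `AMPLITUDE_PROD_API_KEY`) are hypothetical and not defined by Amplitude.

```python
import os
from typing import Mapping, Optional

# Hypothetical variable names: each Amplitude project has its own API key.
PROJECT_KEY_VARS = {
    "test": "AMPLITUDE_TEST_API_KEY",
    "production": "AMPLITUDE_PROD_API_KEY",
}


def analytics_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key for the current stage, defaulting to the test project."""
    env = os.environ if env is None else env
    stage = env.get("APP_ENV", "test")
    if stage not in PROJECT_KEY_VARS:
        raise ValueError(f"unknown APP_ENV: {stage!r}")
    key = env.get(PROJECT_KEY_VARS[stage])
    if not key:
        raise RuntimeError(f"{PROJECT_KEY_VARS[stage]} is not set")
    return key


# A production deploy picks up the production project's key.
print(analytics_api_key({"APP_ENV": "production", "AMPLITUDE_PROD_API_KEY": "prod-key"}))
```

Defaulting to the test project is a deliberate choice: if a deploy is misconfigured, its events land in the test project rather than polluting production.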

4. Act on Data Insights

Next, it’s time to put your data to use. This stage is the actual act of analysis, where you create graphs and analyses of the data, draw insights, and then act on those insights.

The more widespread the ability to act on insights is, the more democratized your data will be. All the work you’ve done up to this point in the DLM process will ensure your data is clean, relevant, and usable for everyone who needs access to it.

With access to this data, product managers can create a data-informed product strategy, marketing can tailor their campaign targeting based on feature usage, and much more. None of this is possible without the good, clean data lifecycle you’ve established with the three previous steps.
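As an illustration of targeting by feature usage, the logic behind a segment such as "users who created at least two playlists" can be sketched in a few lines. In practice you would build this as a cohort in your analytics platform; the event names and threshold here are hypothetical.

```python
from collections import Counter


def feature_users(events: list[dict], feature_event: str, min_uses: int) -> set[str]:
    """Users who fired `feature_event` at least `min_uses` times."""
    counts = Counter(e["user_id"] for e in events if e["event_type"] == feature_event)
    return {user for user, n in counts.items() if n >= min_uses}


events = [
    {"user_id": "u1", "event_type": "Playlist Created"},
    {"user_id": "u1", "event_type": "Playlist Created"},
    {"user_id": "u2", "event_type": "Playlist Created"},
    {"user_id": "u3", "event_type": "Song Played"},
]
print(feature_users(events, "Playlist Created", min_uses=2))  # {'u1'}
```

A segment like this is only trustworthy if "Playlist Created" means the same thing everywhere it is sent, which is what the earlier lifecycle steps protect.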

5. Practice Consistent Maintenance

There is no final step to the product analytics data management lifecycle. It will always be an ongoing endeavor, requiring consistent, intentional maintenance.

As data becomes outdated, you need to update the events and properties you track, make sure you’re still storing and organizing your data efficiently, and keep your testing and production environments separate.

Instead of deleting outdated data, we recommend that you stop tracking the events and properties that have run their course. This stops outdated data at its source, so you don’t have to chase it through every later stage of the lifecycle.
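One way to stop outdated events at the source is to mark them as deprecated and have your tracking wrapper drop them before they are sent, leaving historical data untouched. The `DEPRECATED_EVENTS` set and the `track` wrapper are hypothetical; the `send` callable stands in for whatever SDK call you actually use.

```python
from typing import Callable

# Events that have run their course; data already collected stays as-is.
DEPRECATED_EVENTS = {"Legacy Banner Clicked"}


def track(event_type: str, properties: dict, send: Callable[[str, dict], None]) -> bool:
    """Forward the event unless it is deprecated; return True if it was sent."""
    if event_type in DEPRECATED_EVENTS:
        return False
    send(event_type, properties)
    return True


sent = []
track("Song Played", {"song_id": "s1"}, lambda name, props: sent.append(name))
track("Legacy Banner Clicked", {}, lambda name, props: sent.append(name))
print(sent)  # ['Song Played']
```

Because the check lives in one place, retiring an event is a one-line change to the deprecated set rather than a hunt through every call site.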

Overall, though, consistent maintenance of your product analytics DLM is part of a healthy data governance framework, which rests on three pillars: education, instrumentation, and maintenance.

Good, Clean Data Is Key to Actionable Insights

The purpose of DLM in product analytics is to provide the best, cleanest data possible. With this good, clean data, product analytics insights will come easily to anyone using your product analytics platform. The data will be easy to understand, communicate, and use. Get started with Amplitude today and try it for yourself.

Belinda Chiu

Senior Technical Support Manager, Amplitude

Belinda is a Senior Technical Support Manager at Amplitude who champions the Platform team’s mission to enhance the customer experience with timely, expert advice. Working in the customer success field for many years, she finds enjoyment in ensuring that clients are utilizing a product that best fits their organization's needs and optimizing their experience to the fullest potential.
