Why Is Data Validation Important?

Learn how to give your teams confidence in their work with techniques to ensure your data is reliable.

Editor’s note: This article was originally published on the Iteratively blog on February 18, 2021.

Key takeaways:

- Data validation can go a long way in helping build a data-driven culture.

- Reliable data is often a business’s most valuable asset, offering insights that improve the customer experience and drive revenue.

- Bad data is not only a drain on resources (teams often spend hours trying to verify it) but also erodes confidence and stymies innovation.

- Using data validation and other techniques proactively can fight “data decay” and prevent other issues before they affect customers.

Businesses rely on high-quality data to make critical decisions for their organization. If data is not accurate and complete, end users won’t trust it, which limits how much they use it. Data validation is a set of processes and techniques that help data teams uphold the quality of their data.

Now, let’s dive deeper into why data validation is important for businesses and data teams.

Data validation makes it easier for companies to trust their data

When businesses don’t trust their data, they are more reluctant to use it and to trust the analysts and engineers delivering it to them. People stop trusting their data when it’s inaccurate, invalid, or no longer useful to them. For most businesses, this loss of trust doesn’t happen overnight. Over time, inadequate tooling, poorly managed processes, and human error all contribute to businesses losing faith in their data.

And that’s a big loss, in more ways than one.

For one, reliable data (“good data”) is often an organization’s most valuable asset, providing insights that can help it stand out from competitors and drive revenue.

By contrast, bad data is a drain on company resources. For instance, companies waste $180,000 annually on undeliverable mail because four percent of their mailing-list addresses are inaccurate.

Bad data also often means employees spend more time tracking down accurate information themselves. According to data-axle.com, sales reps spend 20 percent of their time researching leads. If time is money, that’s a lot of money wasted thanks to bad data. Even worse, bad data can in turn erode employee confidence.

Fighting “data decay”

Good data is valuable and hard to come by, and it only gets harder to maintain as time goes on, because data decays. By data decay, we mean data that was once accurate but is now outdated. Perhaps a user’s address changed. Or perhaps your business began collecting a new data field that is empty for most existing users. Data decay will happen no matter how great a process your organization has in place.

However, validating your data can help your organization reduce the errors caused by data decay. While it isn’t a perfect solution, it will identify where data is missing, incomplete, inconsistent, or inaccurate. Validation at the client or processing stage alone won’t stop decay, because data changes over time and should be continually updated in your warehouse so that it reflects the most up-to-date information. Over time, validating your data will also create a better customer experience, because you will be able to target advertisements, emails, and calls to customers based on their potential needs. To regain the trust your organization may have lost, start validating your data.
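To make “identify where data is missing, incomplete, inconsistent, or inaccurate” concrete, here is a minimal sketch of a record-level check, assuming user records arrive as Python dicts. Every field name, the allowed country list, the loose email pattern (standing in as a proxy for inaccurate values), and the one-year freshness window are hypothetical choices for illustration, not rules from any particular tool. The staleness check is the piece aimed at decay: it cannot tell you a value is wrong, only that nobody has confirmed it recently.

```python
from __future__ import annotations

import re
from datetime import date, timedelta

# Hypothetical rules for illustration only; adapt them to your own data.
REQUIRED_FIELDS = ("user_id", "email")
NEWER_FIELDS = ("plan",)  # collected only after many users had already signed up
ALLOWED_COUNTRIES = {"US", "DE", "DK"}  # assumed reference list of country codes
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose
MAX_ADDRESS_AGE = timedelta(days=365)  # assumed freshness window


def find_issues(record: dict, today: date) -> list[str]:
    """Return the issues found in one user record; an empty list means it passed."""
    issues = []
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            issues.append(f"missing: {field}")
    for field in NEWER_FIELDS:
        if record.get(field) is None:
            issues.append(f"incomplete: {field}")
    country = record.get("country")
    if country is not None and country not in ALLOWED_COUNTRIES:
        issues.append(f"inconsistent: country={country!r}")
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        issues.append(f"inaccurate: email={email!r}")
    verified = record.get("address_verified_at")
    if verified is not None and today - verified > MAX_ADDRESS_AGE:
        # Decay: the value may have been right once, but nobody has confirmed it lately.
        issues.append("stale: address not verified in over a year")
    return issues


if __name__ == "__main__":
    users = [
        {"user_id": 1, "email": "ada@example.com", "country": "DK",
         "plan": "pro", "address_verified_at": date(2020, 11, 2)},
        {"user_id": 2, "email": "not-an-email", "country": "Denmark",
         "plan": None, "address_verified_at": date(2018, 5, 1)},
    ]
    for user in users:
        print(user["user_id"], find_issues(user, today=date(2021, 2, 18)))
```

Running a check like this on a schedule against the warehouse, rather than only once at collection time, is what lets it catch records that have decayed since they were written.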

Data validation builds engineer confidence

We just mentioned that data validation affects the whole organization, but how does it affect engineers in your organization? For starters, data workers are less confident about the quality of their organization’s data than management is: only 31% of data workers are confident in it.

Why is it important for engineers to be confident about their company’s data?

When engineers have confidence in the data, they spend less time worrying about it and proving to stakeholders that it is accurate. If the data has been wrong before, engineers are usually told, “Prove to me why this is right.” That gets old quickly, and the time would be better spent on engineering tasks that add value to a product or feature.

What can engineers do to gain confidence in the quality of data again?

Engineers can put together a data validation process to ensure that their data is accurate and complete. Data that was once an afterthought, or never tested at all, becomes part of the software development life cycle. It can be treated as a first-class citizen in the development process and tested and validated alongside the codebase.
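Testing data alongside the codebase can start as simply as unit-testing the code that produces the data. The sketch below assumes a hypothetical `build_signup_event` helper, event name, and set of sign-up methods. The test functions follow pytest’s conventions (functions named `test_*` containing plain `assert` statements), so `pytest` would discover and run them, though any test runner would work.

```python
ALLOWED_METHODS = {"email", "google"}  # hypothetical set of sign-up methods


def build_signup_event(user_id: str, method: str) -> dict:
    """Hypothetical tracking helper that builds the payload sent to analytics."""
    return {
        "event": "User Signed Up",
        "properties": {"user_id": user_id, "method": method},
    }


def test_event_name_is_stable():
    # Renaming the event could split one metric across two names, so pin it.
    assert build_signup_event("u-123", "email")["event"] == "User Signed Up"


def test_properties_match_the_expected_shape():
    props = build_signup_event("u-123", "google")["properties"]
    assert set(props) == {"user_id", "method"}
    assert isinstance(props["user_id"], str)
    assert props["method"] in ALLOWED_METHODS
```

Because these tests run in the same CI pipeline as the rest of the code, a change that alters the shape of the tracked data fails the build instead of surfacing weeks later in a dashboard.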

Why is data validation important for engineers?

As companies have adopted a data-driven approach, data accuracy and completeness have become far more important to organizations than they were 10 years ago. Back then, sampled data and simple dashboards were normal, and most organizations did not have a data team.

Where did data engineers learn the concept of data testing?

Well, the concept of testing has been around in the software engineering field for a while. Developers have reaped the benefits of testing and fully understand how valuable it is for them in the software development life cycle.

With an effective data validation process, your team can ensure that data is up to date. Your team can work faster than ever before and avoid many of the headaches that inaccurate data causes engineers. When you test your data and trust that it’s accurate, you can change your code with confidence that those changes won’t break your data.

Data validation should be proactive, not reactive

Data validation is difficult to get right because most data teams and engineers rely on reactive techniques, which turn validation into an afterthought. As a result, engineers and analysts react to issues caused by the data rather than proactively catching those issues before they reach end users. While this is better than nothing, it doesn’t let data teams take full advantage of the benefits data validation brings to an organization.

Taking a proactive approach to data validation aids organizations in delivering useful data that can be understood throughout the organization. When applied properly, proactive data validation techniques, such as type safety, schematization, and unit testing, ensure that data is accurate and complete. These techniques enable engineers to crack down on the problems that caused the bad data in the first place. Inaccurate and incomplete data that once took days or even weeks to discover can now be avoided when taking a proactive data validation approach.
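Two of the proactive techniques named above, type safety and schematization, can be sketched together in plain Python. The assumptions here are loud: `TRACKING_PLAN` is a hypothetical shared schema, and the event names, property names, and `SongPlayed` class are invented for illustration. The typed class catches mistakes while code is being written (a static checker such as mypy reports an `int` passed where a `str` is declared), and `validate` checks any payload against the shared schema before it is sent.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass

# Schematization: a single, hypothetical tracking plan that every team validates against.
TRACKING_PLAN: dict[str, dict[str, type]] = {
    "Song Played": {"song_id": str, "duration_seconds": int},
    "Playlist Created": {"playlist_id": str, "is_public": bool},
}


def validate(event_name: str, properties: dict) -> list[str]:
    """Check one event payload against the tracking plan and return any errors."""
    schema = TRACKING_PLAN.get(event_name)
    if schema is None:
        return [f"unknown event: {event_name!r}"]
    errors = []
    for name, expected in schema.items():
        if name not in properties:
            errors.append(f"missing property: {name}")
        # Exact type match, so 1 is rejected where a bool is expected (and vice versa).
        elif type(properties[name]) is not expected:
            actual = type(properties[name]).__name__
            errors.append(f"{name}: expected {expected.__name__}, got {actual}")
    for name in sorted(properties.keys() - schema.keys()):
        errors.append(f"unexpected property: {name}")
    return errors


# Type safety: a typed event class. A static checker such as mypy reports
# SongPlayed(song_id=42, duration_seconds=215) before the code ever ships.
@dataclass(frozen=True)
class SongPlayed:
    song_id: str
    duration_seconds: int

    def to_payload(self) -> tuple[str, dict]:
        return "Song Played", asdict(self)


if __name__ == "__main__":
    name, props = SongPlayed("s-1", 215).to_payload()
    print(validate(name, props))  # []
    print(validate("Song Played", {"song_id": "s-1", "duration_seconds": "215"}))
    # ['duration_seconds: expected int, got str']
```

Keeping the schema in one place is the design choice that matters: client code, pipelines, and tests all validate against the same definition, so a typo in an event name is caught as an “unknown event” rather than silently creating a new one.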

The importance of data validation

Data validation can reduce the time you spend cleaning bad data later on. Analysts and engineers can waste hours of their day cleaning bad data, and, in turn, businesses can lose revenue, because that time could have been spent improving products. Digging through data to find inconsistencies and errors is annoying and wastes time for everyone involved.

Data validation helps engineers test their data to reduce the amount of bad data in their warehouse. To get the most out of data validation, organizations should take a collaborative approach to validating data. To ensure that the highest-quality data is being produced, everyone needs to work together, because data is a team sport. Why is it a team sport? Data validation doesn’t happen at one specific point. It can be done at multiple points in the data life cycle, and it requires everyone on the data team to work together to confirm that the data is correct.
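One way to picture validation at multiple points in the life cycle is a set of small checks, each owned by a different role. The sketch below is hypothetical throughout: the three stages, the owners named in the docstrings, the 10% null-rate threshold, and the event fields are illustrative assumptions. For brevity, every check runs on the same in-memory list; in a real pipeline each would sit at its own stage (in the app, at ingestion, and in the warehouse).

```python
from __future__ import annotations

from typing import Callable

Check = Callable[[list[dict]], list[str]]


def client_check(events: list[dict]) -> list[str]:
    """Client side (e.g. app engineers): every event must have a name before it is sent."""
    return [f"event {i}: missing name" for i, e in enumerate(events) if not e.get("name")]


def ingestion_check(events: list[dict]) -> list[str]:
    """Ingestion (e.g. data engineers): reject duplicate event ids."""
    seen: set = set()
    errors = []
    for e in events:
        event_id = e.get("id")
        if event_id in seen:
            errors.append(f"duplicate id: {event_id}")
        seen.add(event_id)
    return errors


def warehouse_check(events: list[dict], max_null_rate: float = 0.1) -> list[str]:
    """Warehouse (e.g. analysts): flag loads where too many rows lack a user_id."""
    if not events:
        return ["no rows loaded"]
    null_rate = sum(e.get("user_id") is None for e in events) / len(events)
    if null_rate > max_null_rate:
        return [f"user_id null rate {null_rate:.0%} exceeds {max_null_rate:.0%}"]
    return []


STAGES: list[tuple[str, Check]] = [
    ("client", client_check),
    ("ingestion", ingestion_check),
    ("warehouse", warehouse_check),
]


def run_pipeline_checks(events: list[dict]) -> dict[str, list[str]]:
    """Run every stage's check and report issues per stage."""
    return {stage: check(events) for stage, check in STAGES}
```

Reporting issues per stage makes ownership explicit: when a check fails, the report says which part of the pipeline, and therefore which team, is best placed to fix the cause.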

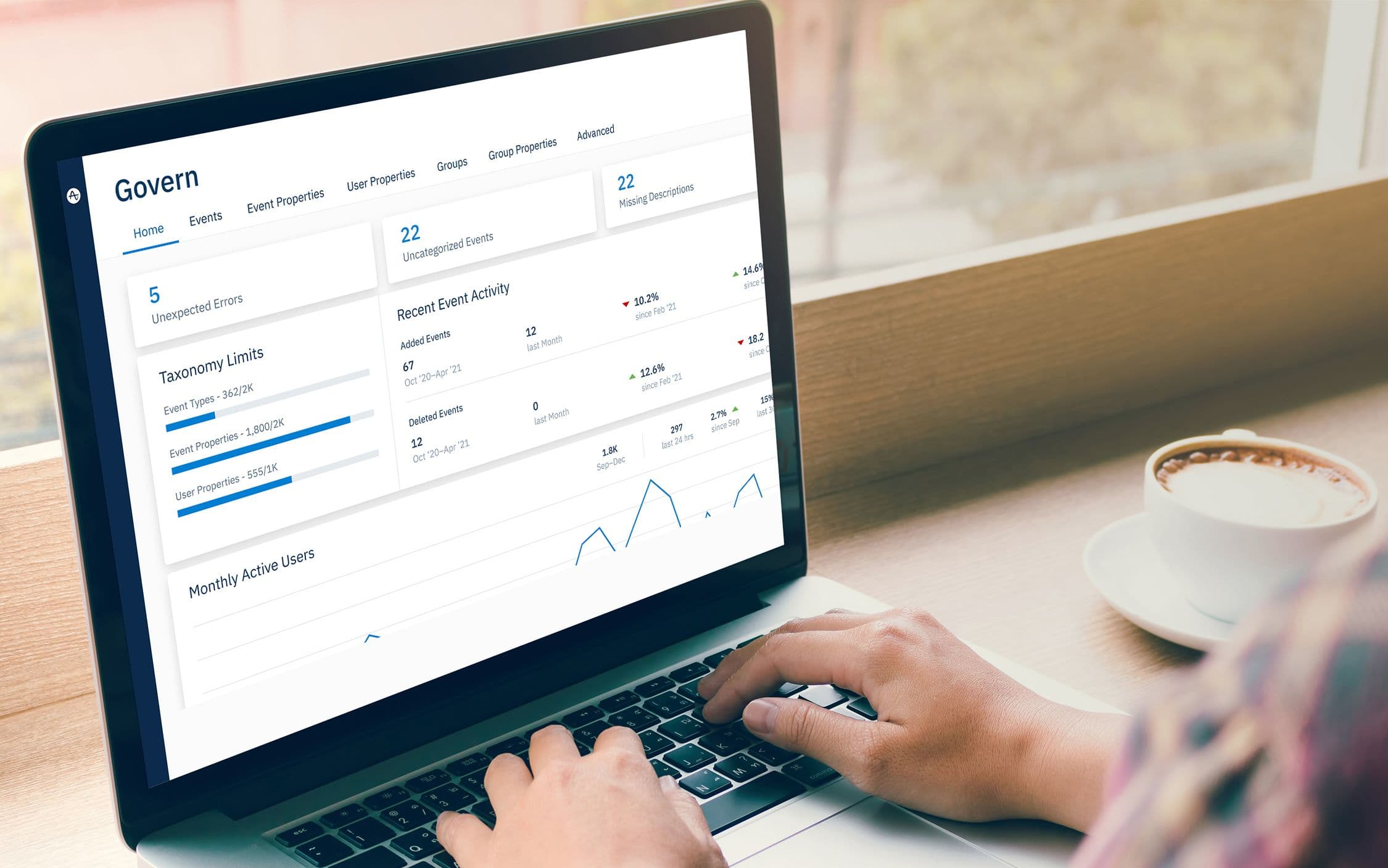

To learn more about how Amplitude can help you implement data validation, sign up for a free account here or book a demo.

Franciska Dethlefsen

Former Head of Growth Marketing, Amplitude

Franciska is the former Head of Growth Marketing at Amplitude, where she led the charge on user acquisition and PLG strategy and execution. Prior to that, she was Head of Growth at Iteratively (acquired by Amplitude) and before that Franciska built out the marketing function at Snowplow Analytics.
