Budgeting App Improves User Onboarding Conversions 251% with Amplitude

Swish has iterated on one of its onboarding flows more than 65 times. Here’s the story.

Over the past 6 months, my team at Swish increased conversions by 251% by using Amplitude to measure and implement product changes. This article tells our story and explains, step by step, how we achieved this improvement.

Making Product Decisions by Gut

When we first started working on a new spending tracker app called Swish a year ago, many of our product meetings were disorganized and full of debate. We based many of our product decisions on gut instinct, without much quantitative data or qualitative feedback from our users.

Not surprisingly, the first version of the product had some issues.

While our inbound channels brought in more than enough new users, many of them dropped off before linking their bank accounts to the app. Linking accounts is a critical step in our user onboarding because it allows Swish to analyze a user’s transactions and automatically create a simple monthly budget for them. Without linking accounts, the app just doesn’t work.

Everyone on our team had a different opinion on what was wrong and what we needed to do in order to fix it, and many of those potential solutions were mutually exclusive.

Making Product Decisions with Data

It was right around this time that we hired a new product designer, Tim Noetzel, who brought in a fresh (and welcome) perspective on how to use data to help make product decisions. He got us hooked on Lean Analytics and Brian Balfour’s newsletter for growth hackers. It didn’t take long before we were drinking the Kool-Aid.

The key turning point for our team came when we stopped saying “I think I know how to fix this problem” and started saying “I have a hypothesis.”

For the first time, we were acknowledging that every proposed product change is based on a set of assumptions that haven’t yet been validated. The question became: how could we quickly validate that our ideas for improving the product would actually work?

The answer, in part, was to start A/B testing almost every significant UX change. We began collecting product usage data with Amplitude and compared the current user interface side by side with the proposed change until the difference between the two was statistically significant.

After just 4 weeks of A/B testing changes to the Swish onboarding process and viewing the results with Amplitude, we were able to increase conversions by over 40%. After 6 months, we had increased conversions by 251%.
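We read these figures as relative lift in the share of new users who link a bank account; the post reports only the percentages, so that reading is our assumption. With $p_{\text{old}}$ and $p_{\text{new}}$ as the link rates before and after, a 251% increase means the rate grew to about 3.5 times its starting value, not 2.5 times:

```latex
\text{lift} = \frac{p_{\text{new}} - p_{\text{old}}}{p_{\text{old}}}
\quad\Longrightarrow\quad
p_{\text{new}} = (1 + \text{lift})\, p_{\text{old}}, \qquad
\text{lift} = 0.40 \Rightarrow p_{\text{new}} = 1.40\, p_{\text{old}}, \qquad
\text{lift} = 2.51 \Rightarrow p_{\text{new}} = 3.51\, p_{\text{old}}
```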

A/B Testing Onboarding Flows with Amplitude

Here are some tips and tricks we learned after 6 months of improving onboarding flows with Amplitude:

1. Instrument Generic Events First

If you are releasing your first onboarding flow ever, you obviously don’t have much to rely on besides gut. One low-touch way to get feedback without implementing anything is to create mockups and share them with friends and family. Ask neutral, non-leading questions and watch how they would progress through the flow.

Check out Amplitude’s quick start guide for an overview of getting started.

But beyond user testing, the most important thing you can do is instrument that first onboarding flow. With Amplitude, you can instrument your onboarding flow in minutes.

In order to keep your events organized and prevent your event schema from getting unwieldy, we recommend starting with two generic events:

- Viewed Page – Include the state name or page path of each page as an event property.

- Tapped Page Element – Include the button or link label and page path as event properties.
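A minimal sketch of the two generic events in Python. The `Event` class and the `page_path` and `element_label` property names are hypothetical; in a real app you would pass the event name and properties to whichever Amplitude SDK your platform uses rather than building these objects yourself. The point it illustrates: adding a page adds new property values, not new event types.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Event:
    """A minimal analytics event: who, what happened, and free-form properties."""
    user_id: str
    event_type: str
    properties: dict[str, Any] = field(default_factory=dict)


def viewed_page(user_id: str, page_path: str) -> Event:
    # One event name for every page; the page itself is a property.
    return Event(user_id, "Viewed Page", {"page_path": page_path})


def tapped_page_element(user_id: str, label: str, page_path: str) -> Event:
    # One event name for every tap; the element label and page are properties.
    return Event(user_id, "Tapped Page Element",
                 {"element_label": label, "page_path": page_path})


# Three interactions across two pages still use only two event types.
events = [
    viewed_page("u1", "/onboarding/welcome"),
    tapped_page_element("u1", "Get Started", "/onboarding/welcome"),
    viewed_page("u1", "/onboarding/link-bank"),
]
print(sorted({e.event_type for e in events}))
```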

You’ll likely add a few additional events for key points in your funnel (e.g. “User Signed Up” or “User Requested Push Notifications”), but these two events should cover 95% of user interactions. By keeping these event names generic, it’s easy to compare different versions of your funnels as you make changes.

If you do add more event types, make sure each one captures an action that translates across any kind of onboarding flow.

In some cases, a “User Signed Up” event is better than a “Tapped Page Element” event with the label “Sign Up,” because future flows may not have a button labeled “Sign Up.” A higher-level event name lets you compare funnels directly, with fewer mistakes.
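To illustrate why a milestone event compares more cleanly across versions, here is a toy Python example with hypothetical event records: when a redesign renames the “Sign Up” button, a funnel step keyed on the button label silently drops to zero, while a “User Signed Up” step keeps counting.

```python
# Two versions of the same flow; v2 renamed the "Sign Up" button.
flow_v1 = [
    {"user_id": "a", "event_type": "Tapped Page Element", "element_label": "Sign Up"},
    {"user_id": "a", "event_type": "User Signed Up"},
]
flow_v2 = [
    {"user_id": "b", "event_type": "Tapped Page Element", "element_label": "Create Account"},
    {"user_id": "b", "event_type": "User Signed Up"},
]


def signed_up_by_label(events):
    """Fragile: depends on the button text used in one version of the flow."""
    return {e["user_id"] for e in events
            if e["event_type"] == "Tapped Page Element"
            and e.get("element_label") == "Sign Up"}


def signed_up_by_milestone(events):
    """Robust: the milestone event means the same thing in every version."""
    return {e["user_id"] for e in events if e["event_type"] == "User Signed Up"}


for name, flow in [("v1", flow_v1), ("v2", flow_v2)]:
    print(name, len(signed_up_by_label(flow)), len(signed_up_by_milestone(flow)))
```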

2. Set Up Funnels & Filter Yourself Out

Using Amplitude’s Funnel Analysis tool, you can quickly create funnels based on these new events.

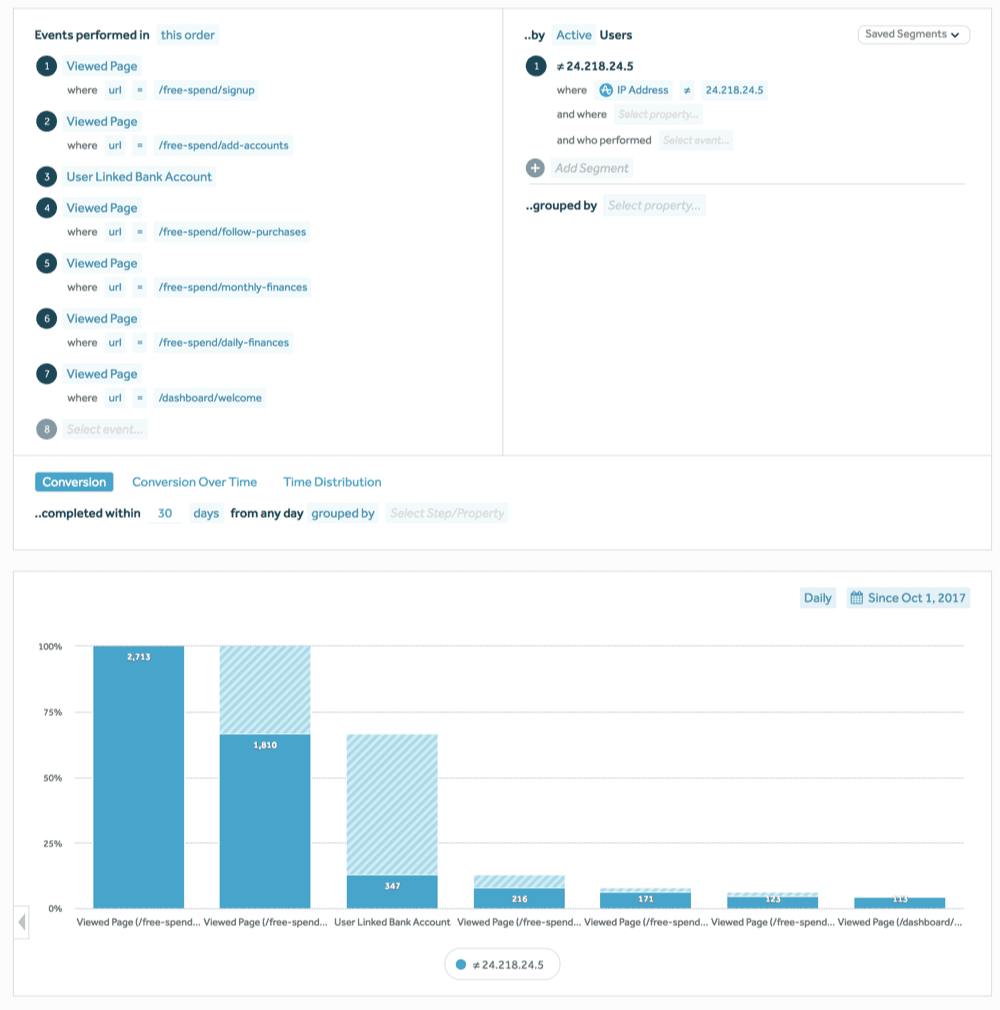

Here is an early release of one of our onboarding funnels:

Funnel chart in Amplitude.

As you can see in the screenshot, we used generic event names like “Viewed Page” along with one non-generic “User Linked Bank Account” event. We also used Amplitude’s filters to exclude Swish team members from the funnel so they wouldn’t skew our results.
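Amplitude computes funnels for you; the Python sketch below only illustrates roughly what such a chart counts, under simplified assumptions of our own: steps must happen in order, there is no conversion window, and internal traffic is removed using a hypothetical set of team user IDs. It is not Amplitude’s exact funnel semantics.

```python
from typing import Any, Optional

# A step is an event type plus optional property values that must match.
Step = tuple[str, Optional[dict[str, Any]]]


def matches(event: dict, step: Step) -> bool:
    event_type, props = step
    if event["event_type"] != event_type:
        return False
    return all(event.get("properties", {}).get(k) == v for k, v in (props or {}).items())


def funnel_counts(events: list[dict], steps: list[Step], exclude_users: set[str]) -> list[int]:
    """Count users who complete each step in order, ignoring excluded (internal) users."""
    by_user: dict[str, list[dict]] = {}
    for e in sorted(events, key=lambda e: e["time"]):
        if e["user_id"] in exclude_users:
            continue  # filter out the team's own test traffic
        by_user.setdefault(e["user_id"], []).append(e)

    counts = [0] * len(steps)
    for user_events in by_user.values():
        step = 0  # index of the next step this user must reach
        for e in user_events:
            if step < len(steps) and matches(e, steps[step]):
                counts[step] += 1
                step += 1
    return counts


steps = [
    ("Viewed Page", {"page_path": "/onboarding/welcome"}),
    ("Viewed Page", {"page_path": "/onboarding/link-bank"}),
    ("User Linked Bank Account", None),
]
events = [
    {"user_id": "u1", "time": 1, "event_type": "Viewed Page", "properties": {"page_path": "/onboarding/welcome"}},
    {"user_id": "u1", "time": 2, "event_type": "Viewed Page", "properties": {"page_path": "/onboarding/link-bank"}},
    {"user_id": "u1", "time": 3, "event_type": "User Linked Bank Account"},
    {"user_id": "u2", "time": 1, "event_type": "Viewed Page", "properties": {"page_path": "/onboarding/welcome"}},
    {"user_id": "team-member-1", "time": 1, "event_type": "Viewed Page", "properties": {"page_path": "/onboarding/welcome"}},
]
print(funnel_counts(events, steps, exclude_users={"team-member-1"}))
```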

3. Analyze Your Performance

Once Amplitude is instrumented, your funnel will provide key insights into how your users behave. Do users make progress easily, or is there a clear step in your flow where they drop off?

You’ll likely find a handful of issues in any given flow, but you should focus on the biggest opportunities for improvement. Look for potential changes that could result in double-digit improvements. Improvements earlier in the funnel are often significantly more valuable, since they result in more users trickling down to subsequent steps.
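One way to spot the biggest opportunity is to rank each transition by how many users it loses, not only by its conversion rate. The Python sketch below uses made-up counts (not Swish data) and a hypothetical `drop_offs` helper; in this example the worst rate is the last step, but the first step loses the most people.

```python
def drop_offs(step_names: list[str], counts: list[int]) -> list[tuple[str, int, float]]:
    """For each transition, return (label, users lost, step-to-step conversion rate)."""
    result = []
    for i in range(1, len(counts)):
        lost = counts[i - 1] - counts[i]
        rate = counts[i] / counts[i - 1] if counts[i - 1] else 0.0
        result.append((f"{step_names[i - 1]} -> {step_names[i]}", lost, rate))
    return result


# Hypothetical funnel counts, for illustration only.
names = ["Welcome", "Create Account", "Link Bank", "Linked"]
counts = [1000, 600, 450, 180]

for label, lost, rate in drop_offs(names, counts):
    print(f"{label}: lost {lost} users ({rate:.0%} continued)")

biggest = max(drop_offs(names, counts), key=lambda t: t[1])
print("Biggest absolute drop-off:", biggest[0])
```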

It’s also valuable to segment your funnels based on referral source, user persona, browser and device, and other factors that might influence performance. You can often uncover key insights by comparing the performance of different groups of users this way.
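In practice you would segment funnels inside your analytics tool. As a hedged illustration of what such a comparison computes, here is a Python sketch over hypothetical per-user records; the `referral_source`, `device`, and `linked_bank` fields are invented for the example.

```python
from collections import defaultdict


def conversion_by_segment(users: list[dict], segment_key: str, converted_key: str) -> dict[str, float]:
    """Share of users in each segment who reached the final funnel step."""
    totals: dict[str, int] = defaultdict(int)
    converted: dict[str, int] = defaultdict(int)
    for u in users:
        seg = u.get(segment_key, "(none)")  # users missing the property get their own bucket
        totals[seg] += 1
        if u[converted_key]:
            converted[seg] += 1
    return {seg: converted[seg] / totals[seg] for seg in totals}


# Hypothetical per-user records; "linked_bank" marks completion of the funnel.
users = [
    {"referral_source": "app_store", "device": "ios", "linked_bank": True},
    {"referral_source": "app_store", "device": "android", "linked_bank": False},
    {"referral_source": "blog", "device": "ios", "linked_bank": True},
    {"referral_source": "blog", "device": "ios", "linked_bank": True},
    {"referral_source": "paid_ad", "device": "android", "linked_bank": False},
]
print(conversion_by_segment(users, "referral_source", "linked_bank"))
print(conversion_by_segment(users, "device", "linked_bank"))
```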

4. Run A/B Tests

As your acquisition channels grow and you have more prospective users, you may want to A/B test your onboarding flows so you can get answers to your growth questions faster.

For younger products, this probably means testing two entirely different flows or pages with dramatically different approaches: you might show half of your users one flow and the other half another. For mature products, it may mean testing individual components within a single page, like blocks of text, buttons, or colors. When in doubt, prioritize dramatic changes over subtle ones, since larger changes have a higher potential to produce meaningful results.

When Swish started considering how we would A/B test our flows, we had to make some trade-offs: we weren’t willing to pay for a service like Optimizely, we didn’t want to run and maintain a Sixpack setup, and other solutions would have been difficult to integrate into our existing stack.

We opted to build an extremely lightweight A/B testing mechanism. We knew that we’d be testing dramatic changes over multiple groups of pages, so we focused on building a mechanism that would make the decision to enroll a user in an entire flow.

Here is a quick rundown of the logic we used:

- A user lands on the first page of our onboarding flow. Our experiment enroller asks: has this user ever seen a variation of this onboarding flow? If yes, show them that same flow. If no, randomly choose one of the flows and enroll this user into that flow.

- If we are enrolling a user, we persist this experiment information. This is helpful for two reasons: we want to always show the user the same flow, and we need to reference an experimentID property that will be sent with subsequent events to Amplitude for tracking purposes.

- Once the user sees the new flow, we fire a Viewed Page event with a property experimentID containing the ID of the chosen flow. This is critical for tracking purposes. We need to be able to distinguish which experiment users have been enrolled in so we can create funnels with this property in mind.

- If you want to, you can attach this experimentID to every event. But, as previously mentioned, we kept this process very lightweight and used the initial Viewed Page event with experimentID as an indicator of what experiment a user saw.
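The enrollment steps above can be sketched in Python as follows. This is a minimal illustration, not Swish’s code: the `ExperimentEnroller` class, the JSON file used as the persistent store, and the flow IDs are all hypothetical, and a real app would keep assignments in its database or on the device.

```python
import json
import random
import tempfile
from pathlib import Path
from typing import Optional


class ExperimentEnroller:
    """Sticky assignment of users to whole onboarding flows (hypothetical sketch)."""

    def __init__(self, flows: list[str], store_path: Path, rng: Optional[random.Random] = None):
        self.flows = flows
        self.store_path = store_path
        self.rng = rng or random.Random()
        # Persisted map: user_id -> experimentID of the flow the user was enrolled in.
        self.assignments: dict[str, str] = (
            json.loads(store_path.read_text()) if store_path.exists() else {}
        )

    def flow_for(self, user_id: str) -> str:
        # Has this user ever seen a variation of this flow? Then show the same one.
        if user_id in self.assignments:
            return self.assignments[user_id]
        # Otherwise pick a flow uniformly at random and persist the choice.
        choice = self.rng.choice(self.flows)
        self.assignments[user_id] = choice
        self.store_path.write_text(json.dumps(self.assignments))
        return choice


def first_page_event(user_id: str, experiment_id: str, page_path: str) -> dict:
    # The first Viewed Page event carries experimentID so funnels can be split by it.
    return {
        "user_id": user_id,
        "event_type": "Viewed Page",
        "properties": {"page_path": page_path, "experimentID": experiment_id},
    }


store = Path(tempfile.mkdtemp()) / "assignments.json"
enroller = ExperimentEnroller(["onboarding-a", "onboarding-b"], store, random.Random(42))
flow = enroller.flow_for("u1")
print(first_page_event("u1", flow, "/onboarding/welcome"))
```

Persisting the choice, as described above, keeps a user’s flow stable even if the list of flows later changes. A common alternative is to hash the user ID into a bucket, which needs no storage but can reshuffle users when flows are added or removed.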

Implementing this simple version of A/B tests helped our team move significantly faster. Rather than worry about complex tooling, we let Amplitude do the heavy lifting. Our experiment enroller segmented users into test variations, and when we’d collected enough data we used one of the many free A/B/n test calculators to verify significance.
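The post doesn’t say which calculator Swish used. A common choice for comparing two conversion rates is the two-proportion z-test; below is a minimal Python version under the usual normal-approximation assumptions (reasonably large samples, sample size fixed in advance rather than peeking until significant). The counts in the usage line are hypothetical. For three or more flows, you would need a chi-square test across all arms or a multiple-comparison correction instead.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all users converted (or none did): no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control flow A vs. new flow B, 1,000 users each.
z, p = two_proportion_z_test(120, 1000, 165, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```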

Ultimately, we were able to build and launch experiments in a few days, rather than the weeks it had previously taken us.

5. Use Results From A/B Tests To Drive Your Growth Meeting Conversations

The best part about this process was how quickly we saw results. Implementing the enrollment logic took only a few days, and from then on we could rely on Amplitude for the analysis.

We didn’t need to pay for anything extra or implement another tool. We simply leveraged Amplitude’s event tracking, funnel analysis, and properties to create an analytical understanding of how our flows were performing.

With data in our hands, our growth meeting conversations switched from “I think this will perform well because it seems like a great idea” to “I think this funnel can perform better if we fix the drop off at step five.”

This not only improved our quantitative analysis of flows, but also inspired us to gather more qualitative feedback from users in the form of user interviews and usability tests. This qualitative feedback helped us understand why our funnels were underperforming, leading us to implement, measure, and iterate on even more improvements.

Swish’s Data-Driven Future

Amplitude has not only pushed our team toward better processes that keep us data-driven and aligned with our users; it has also driven measurable results.

We have iterated on one of our onboarding flows over 65 times. With each iteration, we’ve changed designs, functionality, and language. One of the most important steps for our onboarding flow is the process of linking accounts; if a user doesn’t do this, our product won’t work. With the help of Amplitude, we have been able to improve the number of users who link accounts by 251%.

As a result of all of this, those product meetings that were previously disorganized and frustrating are now ultra-productive and exciting. Thanks to Amplitude, we learn more about our users every day and Swish continues to get better and better.

Jeff Whelpley

CTO of Swish, Google Developer Expert (GDE), Boston AI Meetup Organizer, Angular Boston Meetup Organizer, conference speaker
