Nova 2.0: Re-architecting the Analytics Engine behind Amplitude

Introducing Nova 2.0, our new architecture built to improve performance, reduce query latency, and cut server costs.

In the last couple of years, Amplitude has seen exponential growth, establishing us as industry leaders in the space of Product Analytics.

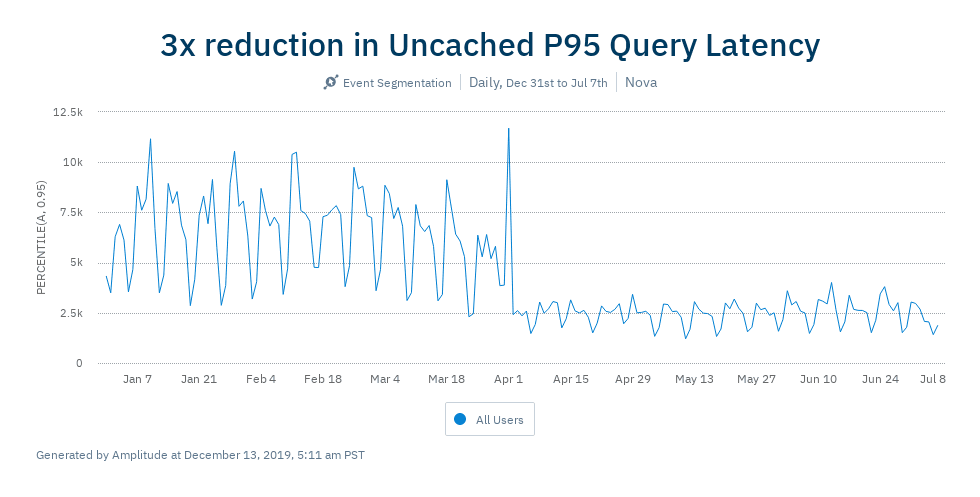

As a result of our increasing customer base and the growth of our existing customers, our data volume has also increased significantly to ~400 billion events/month, and some of our queries regularly scan more than 300 billion events. We believe that everyone who cares about data should have access to real-time, interactive analytics, so low query latency is one of our top priorities. We have kept query latency in check despite the massive uptick in data volume because of our early investment in Nova, our query architecture. With this re-architecture, we managed to improve P95 latency by 3x across the board, reduce our server costs by 20%, and pave the way for a dynamic, auto-scaling querying infrastructure.

[Editor’s note: Amplitude now tracks more than 1 trillion events per month. See up-to-date numbers here.]

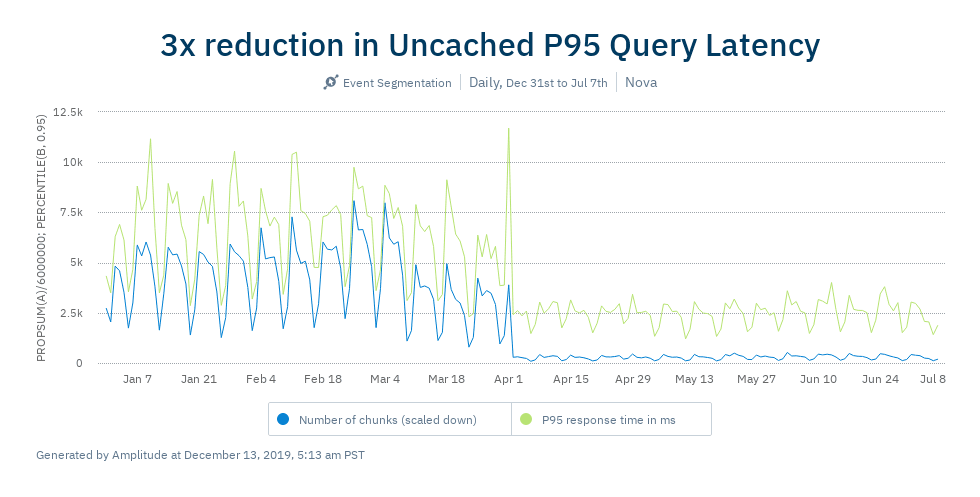

The Amplitude chart (🐶🍗) below depicts one of the amazing things we achieved with this re-architecture.

What is Nova?

Nova is Amplitude’s in-house columnar storage and analytics engine. We built Nova from the ground up because of our unique flexibility and performance requirements. Nova’s original design is inspired by a few exciting systems like PowerDrill, SCUBA, Trill, and Druid. We also drew inspiration from data warehouses like AWS Redshift, Google BigQuery, and Snowflake. I recommend checking out our Nova blog post to understand its architecture, which is important background for the redesign and its benefits.

Why re-architect?

- While median query times for cached queries stayed below 0.5s, P95 latency for uncached queries had gradually increased from 2.5s to 8s over the last year due to high volume queries. Our overall P95 end-user latency for all queries continues to be below 1 second because of multiple layers of caching implemented in our infrastructure.

- We are constantly building new and more powerful features to allow customers to get more insights out of their data. In just 2019, we have shipped over 68 new features and improvements to the Amplitude platform. Check out our monthly release notes on our blog. A lot of these features have caused additional load on Nova because of their complex business logic and data access patterns.

- We have also invested in features that improve data trust with Amplitude. One of our widely used features is query-time transformations, which give customers the ability to mutate data retroactively at query time. As all our storage files are immutable, this has introduced an additional layer of complexity.

- We had been running Nova in production for 2.5 years and learned a lot about our customers’ querying patterns. Dogfooding Amplitude and using tools like Datadog helped us identify the slowest pieces of our architecture.

- We wanted to be proactive and plan for the next phase of our growth with large-volume customers. This includes enabling functionality like auto-scaling on our querying infrastructure.

Research and Investigation

As we were analyzing the performance of our systems, we had a few discoveries.

Fragmentation of Data into lots of small files:

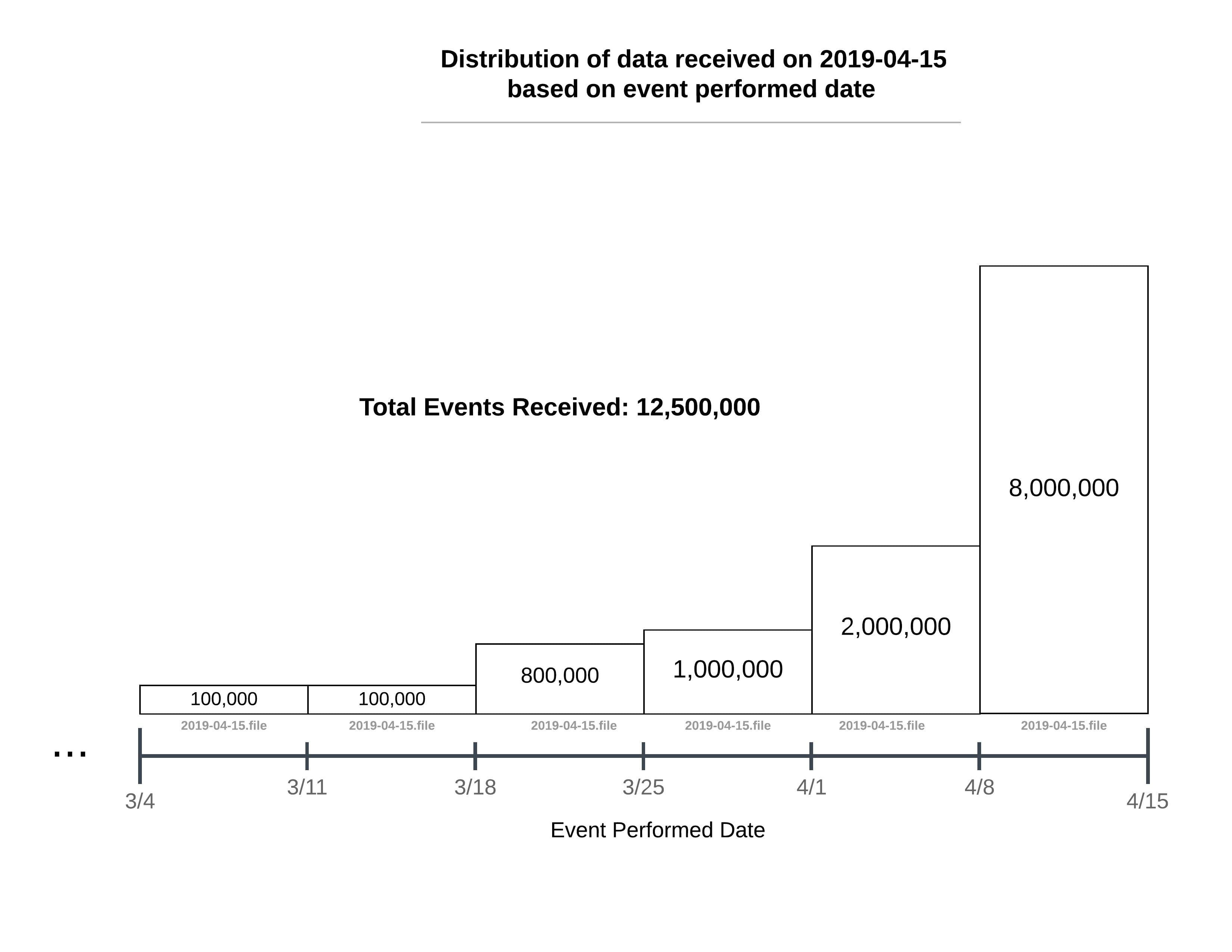

For our slowest queries, close to 65% of the total query time was being spent on reading raw data from the disk. Further investigation showed most of this time was spent opening and closing lots of small files. This problem is a little unique to us because of the way our data is sent to Amplitude. There are two key timestamps in Amplitude, event time (time at which the event occurred) and server upload time (time at which the event was uploaded into Amplitude systems). We often have significant differences in these two timestamps because of the following two reasons:

- Offline Activity: devices can be off the grid (e.g., in airplane mode) while events occur, so events get buffered on the device. All buffered events are sent to Amplitude once the device comes back online.

- Backfill of historical data: Often our customers have internal data lakes and want to backfill their historical data to Amplitude.

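As all queries in Amplitude have a filter on event time, we want our raw data to be indexed by event time so that we can skip data outside the query range. We were using weekly event-time buckets for our customers. Because late-arriving and backfilled events land in old event-time buckets, a single server upload day could add just a handful of rows to many different weekly buckets, which is how we ended up with so many small files.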

Compaction within a specific event-time-bucket can get us part of the way, but we were looking for a bigger improvement.
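To make the fragmentation concrete, here is a minimal sketch of how late-arriving events turn into tiny files under a layout keyed by weekly event-time bucket and server upload date. The Monday week boundary, the `week_bucket` and `legacy_file_sizes` helpers, and the sample data are all assumptions for illustration, not Nova's actual code.

```python
from collections import Counter
from datetime import date, timedelta


def week_bucket(day: date) -> date:
    """Return the Monday of the week containing `day` (assumed bucket boundary)."""
    return day - timedelta(days=day.weekday())


def legacy_file_sizes(events: list[tuple[date, date]]) -> Counter:
    """Count rows per file, keyed by (weekly event-time bucket, server upload date)."""
    return Counter(
        (week_bucket(event_day), upload_day) for event_day, upload_day in events
    )


# Hypothetical data: everything below is uploaded on the same day.
upload_day = date(2019, 3, 1)
events = [(upload_day, upload_day)] * 1000  # events that occurred on the upload day
# Eight late arrivals, each from a different earlier week (e.g. offline devices).
events += [(upload_day - timedelta(weeks=w), upload_day) for w in range(1, 9)]

sizes = legacy_file_sizes(events)
small_files = [key for key, rows in sizes.items() if rows == 1]
print(len(sizes), "files, of which", len(small_files), "hold a single row")
```

In this toy data set, one upload day produces nine files and eight of them contain exactly one row, which is the open/close overhead described above.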

Customers query only a few select columns:

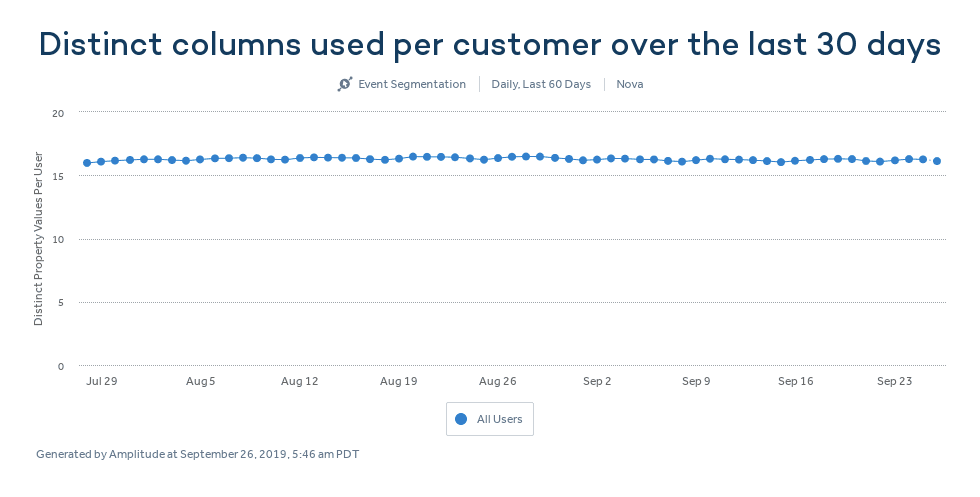

An average project sends approximately 189 columns to Amplitude. As is the case with most OLAP (Online Analytical Processing) workloads, we’ve always known that only 3–4 columns are used per query on average, which is why we built Nova as a column store.

We also discovered that, in practice, customers only query a few select columns over and over. The average number of columns queried per customer over the last 30 days is just 16.

All columns for a specific batch of rows were stored in a single file, and our legacy storage system was downloading the entire file from our canonical store (AWS S3) to the local disk of our machines. 90% of the data on the disks was not being queried.

Architectural Changes:

Introducing Dynamic time-range files to reduce P95 latency by 3x:

We decided file immutability was important and created files per server upload bucket, instead of generating files purely on event time and updating them as new data came in. To solve the small file problem, we created bigger files per server upload day. This was one of the most important pieces of the redesign. The old event time bucketing provided a built-in index on the time dimension. Given a time-range filter, it was straightforward for our queries to find the relevant files.

Legacy storage format: {weekly-event-time-bucket}/{server-upload-date}.

Example: if we get a query for Jan 2019, we only have to look into the sub-directories of 5 event time weeks that overlap the month of January 2019.
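The lookup in the example above can be sketched in a few lines. This assumes weekly buckets that start on Monday, which the post does not specify; `weekly_buckets` is a hypothetical helper name.

```python
from datetime import date, timedelta


def weekly_buckets(start: date, end: date) -> list[date]:
    """Return the Monday of every week that overlaps [start, end], both inclusive."""
    monday = start - timedelta(days=start.weekday())
    buckets = []
    while monday <= end:
        buckets.append(monday)
        monday += timedelta(weeks=1)
    return buckets


# A query for January 2019 only needs the sub-directories of these buckets.
january = weekly_buckets(date(2019, 1, 1), date(2019, 1, 31))
print([d.isoformat() for d in january])
```

With Monday-based weeks this yields five buckets, starting with the week of 2018-12-31 and ending with the week of 2019-01-28.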

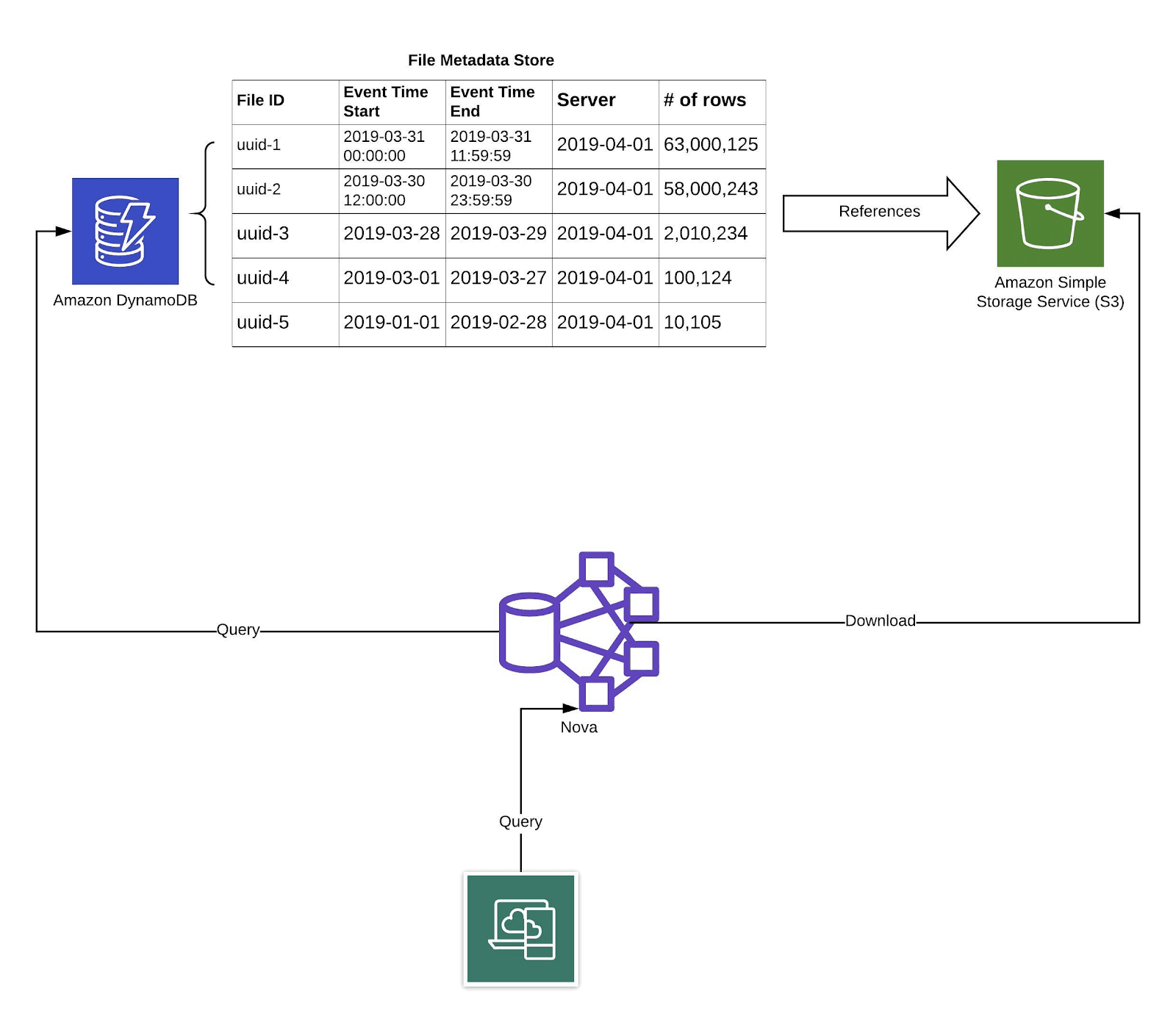

If we give up on static weekly event time bucketing to accommodate more events, these files will have a dynamic time range. In that case, we would need a data store that stores the start and end time of these dynamic files in an indexed and efficiently queryable way. We can then ask the data store for the files we should scan for a specific query. This led to the introduction of the File Metadata Store.

The File Metadata Store

We are using AWS DynamoDB for our File Metadata Store. As the information in the metadata store changes only once per day, we cache the metadata store results heavily on disk and in memory on our query nodes. We have also built a more optimized metadata store lookup; more on that in a future blog post.
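As a rough illustration of the lookup, the sketch below keeps file metadata in a plain in-memory tuple and answers "which files overlap this query range?" with an interval-overlap test, memoized with `functools.lru_cache` to stand in for the in-memory cache. The `FileMeta` shape, the paths, and the epoch-second timestamps are assumptions; the real store is DynamoDB and its schema is not described in this post.

```python
from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class FileMeta:
    path: str   # hypothetical object key
    start: int  # earliest event time in the file (epoch seconds)
    end: int    # latest event time in the file (epoch seconds, inclusive)


# Hypothetical catalog standing in for the real metadata store.
CATALOG = (
    FileMeta("upload-2019-01-02/part-0", start=1_546_300_800, end=1_546_387_199),
    FileMeta("upload-2019-01-02/part-1", start=1_540_000_000, end=1_546_300_799),
    FileMeta("upload-2019-01-03/part-0", start=1_546_387_200, end=1_546_473_599),
)


@lru_cache(maxsize=1024)
def files_for_query(query_start: int, query_end: int) -> tuple[str, ...]:
    """Return paths of files whose [start, end] range overlaps the query range."""
    return tuple(
        f.path
        for f in CATALOG
        if f.start <= query_end and f.end >= query_start
    )


# Query for 2019-01-01 UTC: only one of the three files needs to be scanned.
print(files_for_query(1_546_300_800, 1_546_387_199))
```

Because the metadata changes rarely, repeated queries over the same range can be served from the cache without touching the store again.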

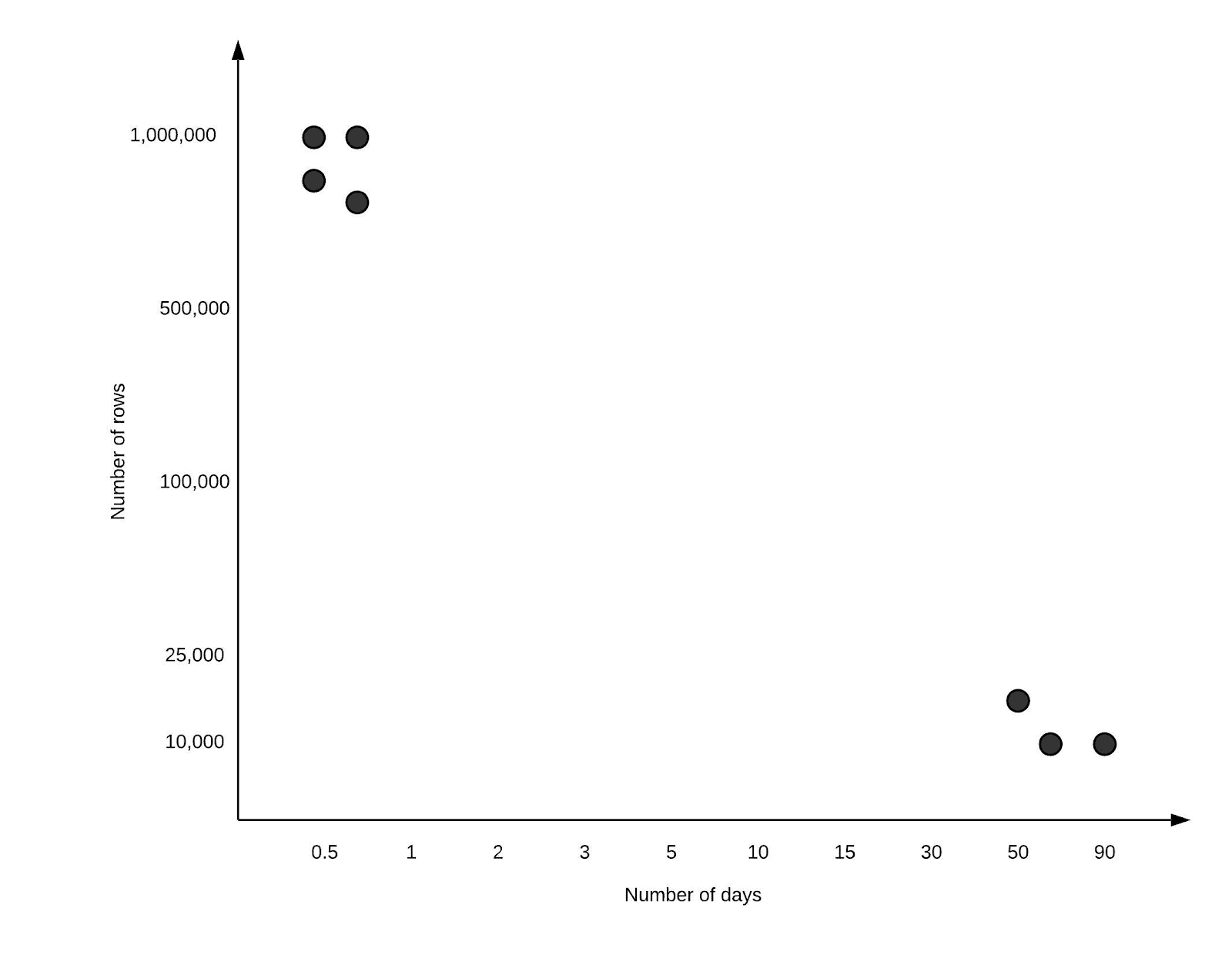

After moving to dynamic time-range files, the next question was deciding how to pick the time-ranges. While too many files are bad because of the extra overhead of opening & closing files, too few files are also bad because it makes our event time index less effective. For example: in a single file for all events, most of the data scanned might sit outside the query time range.

We wanted two kinds of files in our systems:

- Files that span a short time range and have a lot of rows. We would only scan such a file if its short time range overlapped with the query’s time range.

- Files that span a long time range and have a small number of rows (though still many more rows than the single-row files we sometimes had in our old system). We would scan such a file very often because it overlaps with most queries’ time ranges, but because the file is small, scanning it is cheap.
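The trade-off between these two kinds of files and a single giant file can be sketched with a toy cost model that counts files opened and rows scanned. The layouts, row counts, and the `scan_cost` helper are made up for illustration and do not reflect Nova's real numbers.

```python
def scan_cost(
    files: list[tuple[int, int, int]], query_start: int, query_end: int
) -> tuple[int, int]:
    """Return (files_opened, rows_scanned) for files overlapping the query range.

    Each file is a (start_day, end_day, rows) tuple with inclusive day numbers.
    """
    hits = [f for f in files if f[0] <= query_end and f[1] >= query_start]
    return len(hits), sum(rows for _, _, rows in hits)


# Hypothetical layouts covering the same 365 days of data.
dense_short = [(d, d, 1_000_000) for d in range(365)]  # short range, many rows
sparse_long = [(0, 364, 5_000)]                        # long range, few rows
one_giant = [(0, 364, 365_005_000)]                    # everything in one file

mixed_cost = scan_cost(dense_short + sparse_long, 100, 106)  # one-week query
giant_cost = scan_cost(one_giant, 100, 106)
print(mixed_cost, giant_cost)
```

For a one-week query, the mixed layout opens 8 files and scans about 7 million rows, while the single giant file opens 1 file but scans all 365 million rows.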

Our CTO, Curtis, loves taking distributed systems problems into the realm of mathematics and did a lot of interesting analysis to determine the optimal number of rows per file for query purposes. More on that in a separate blog post.

Reducing the number of files has had a massive impact on our performance numbers. As shown in an Amplitude chart below, our P95 response time which had slowly climbed up to 8 seconds went down below 3 seconds after introducing the new File Metadata Store to all our customers.

This was the biggest contributor to the performance gains from our re-architecture and reduced our P95 Query Latency by 3x.

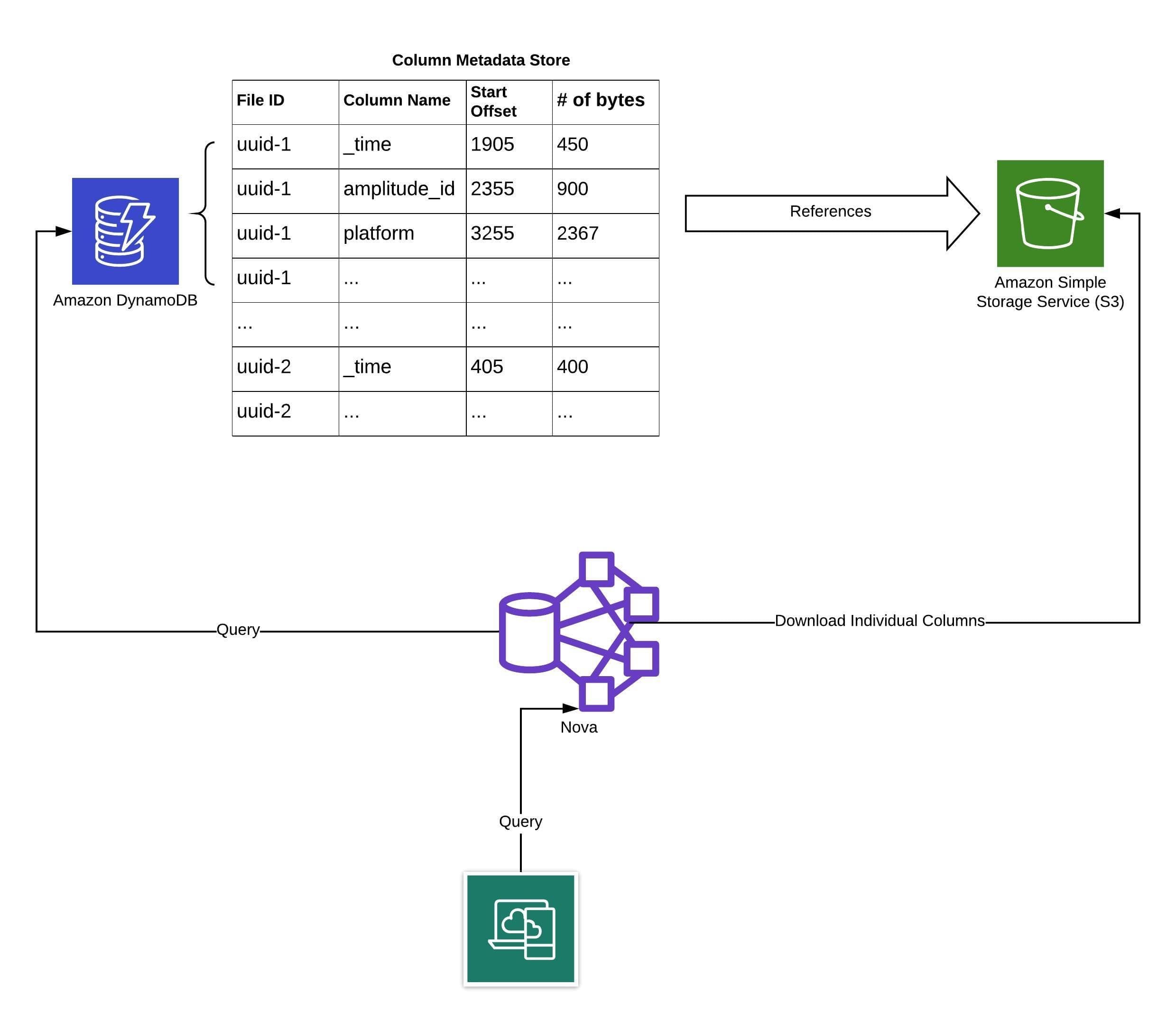

Introducing a Column Metadata Store and reducing server costs by 20%:

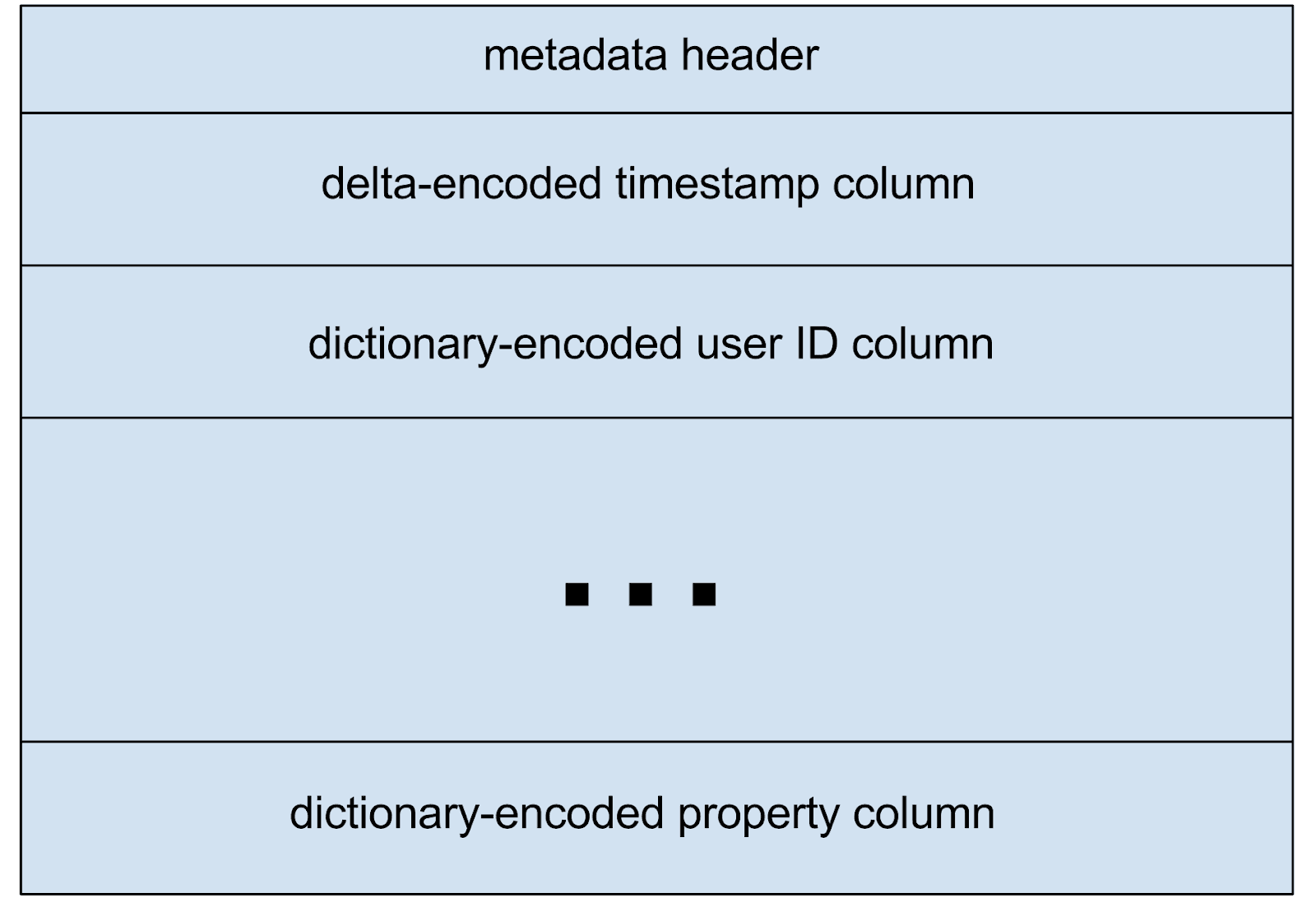

Once the metadata store gives the list of files to be scanned for a specific query, the specific query downloads the relevant files from S3. This is what a file looks like in Nova.

The metadata header in the file stores the start offset and the end offset of every column in the file. This allows us to read only the columns relevant to the query. Given our discovery that only about 10% of columns are actually queried by our customers, most of the columns in these files on disk are never used. Instead of downloading the entire file, we wanted to download just the relevant columns. If we created a separate S3 object for every column in every file, it would significantly increase our S3 costs, as S3 charges per PUT request. We could, however, leverage S3’s Partial Object Read capabilities to download only the relevant columns if we knew the start and end offsets of the columns in the file. To satisfy this use case, we introduced another distributed data store that stores the start and end offsets of all columns for every file in Nova, and we called it the Column Metadata Store.
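A minimal sketch of the idea, using only the standard library: column offsets are looked up in a dictionary standing in for the Column Metadata Store, and a `BytesIO` buffer stands in for the S3 object. The `COLUMN_OFFSETS` layout, the file key, and the exclusive end offset are assumptions. With boto3, the string built by `range_header` is what would be passed as the `Range` argument of `get_object`.

```python
import io

# Hypothetical column metadata: file key -> column name -> (start, end) byte offsets.
# The end offset is assumed to be exclusive in this sketch.
COLUMN_OFFSETS = {
    "upload-2019-01-02/part-0": {
        "user_id": (0, 8),
        "event_type": (8, 20),
        "country": (20, 26),
    }
}


def range_header(start: int, end: int) -> str:
    """Build an HTTP Range header value; HTTP byte ranges are inclusive on both ends."""
    return f"bytes={start}-{end - 1}"


def read_column(blob: io.BytesIO, file_key: str, column: str) -> bytes:
    """Read only one column's bytes from the object, as a ranged GET would."""
    start, end = COLUMN_OFFSETS[file_key][column]
    blob.seek(start)
    return blob.read(end - start)


# Fake object body: three columns laid out back to back.
fake_object = io.BytesIO(b"u1u2u3u4" + b"click,views," + b"US,DE,")
key = "upload-2019-01-02/part-0"

event_type = read_column(fake_object, key, "event_type")
header = range_header(*COLUMN_OFFSETS[key]["event_type"])
print(header, event_type)
```

Only the 12 bytes of the `event_type` column are read; the other columns in the object are never transferred.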

The Column Metadata Store

The Column Metadata Store is also built on top of AWS DynamoDB. As we were now only storing frequently queried columns on disk, our disk storage requirements dropped by around 90%. We cache the S3 files on our EC2 servers’ Instance Stores. Our legacy system had close to 1PB of Instance Storage across all its machines, and our new system only needs ~140TB. We reduced our EC2 server costs by 20% by moving to instances with similar CPU and memory but much lower disk resources. Reducing our Instance Store requirements by ~90% also gave us more flexibility in choosing the right instance type from the wide range of options in the AWS EC2 ecosystem. We are also leveraging the new 5th generation instance types, as we provisioned a fresh set of nodes for the new system.

Future Work:

Our Analytics Engine is one of the key differentiators that has helped us scale to meet the needs of our customers and help them in building better products. We continue to invest resources to support large volume customers, improve query latency, add new features, and reduce costs.

This re-architecture has enabled us to do the following in the future:

Dynamic Query Routing:

We run a few multi-tenant Nova query clusters, and each customer belongs to exactly one cluster. If we routed a customer’s query to a new cluster in the legacy system, that cluster would have to download all data (all columns) for the customer in the time range to answer the query. In Nova 2.0, we only need to download the relevant columns, which is 90% less work for the new cluster to which the query is routed. The new system has reduced our dependence on the local machine’s disks for high performance.

Auto-scaling Query Clusters:

For the same reasons as above, a new node only has to download about 10% of the data it would have needed in the legacy system, so the bootstrap time for a new node has dropped significantly.

Materializing transformed columns:

Our new transformations feature allows customers to mutate and rename certain columns at query time. As we now cache only specific columns on disk instead of complete files with all columns, we can start materializing and caching these transformed columns on local disk and in S3 to avoid applying resource-intensive transformations at query time.

Timeline:

We started brainstorming ideas and doing research in the second half of 2018. The development phase started in late Q3 2018 and took us approximately 6 months. The new system was going to replace an existing system used by all of our customers across the world to run thousands of queries throughout the day, so we had to tread cautiously and avoid regressions. Once we had finished backfilling existing data into the new file format, we provisioned the new query clusters and ran both systems in parallel for approximately 6 weeks. We developed a client-side router to forward customer traffic asynchronously to the new cluster and compare responses for correctness between the old and the new systems. The entire roll-out phase took 8 weeks, and we rolled out Nova 2.0 to all Amplitude customers on the 1st of April, 2019.
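The shadow-traffic approach described above might look roughly like the sketch below: the caller always receives the old system's answer, while the same query is replayed against the new system in a background thread and any difference is recorded. The `ShadowRouter` class and its method names are hypothetical and are not taken from Amplitude's router.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

QueryFn = Callable[[str], object]


class ShadowRouter:
    """Serve from the old cluster; replay on the new one in the background and compare."""

    def __init__(self, old: QueryFn, new: QueryFn) -> None:
        self.old = old
        self.new = new
        self.mismatches: list[str] = []
        self._pool = ThreadPoolExecutor(max_workers=4)

    def query(self, q: str) -> object:
        expected = self.old(q)  # the customer always gets the old system's result
        self._pool.submit(self._compare, q, expected)  # shadow call does not block
        return expected

    def _compare(self, q: str, expected: object) -> None:
        try:
            if self.new(q) != expected:
                self.mismatches.append(q)
        except Exception:
            # A failure on the new path is recorded as a mismatch as well.
            self.mismatches.append(q)

    def close(self) -> None:
        self._pool.shutdown(wait=True)  # wait for outstanding comparisons


# Toy backends: the "new" one deliberately disagrees on a single query.
router = ShadowRouter(
    old=lambda q: len(q),
    new=lambda q: len(q) if q != "bad" else -1,
)
results = [router.query(q) for q in ("a", "bad", "ccc")]
router.close()
print(results, router.mismatches)
```

Keeping the comparison off the request path means a slow or failing new cluster cannot affect what customers see during the parallel run.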

“This is the smoothest launch I have seen in my last 4 years at Amplitude.”—Amplitude Customer Success Manager

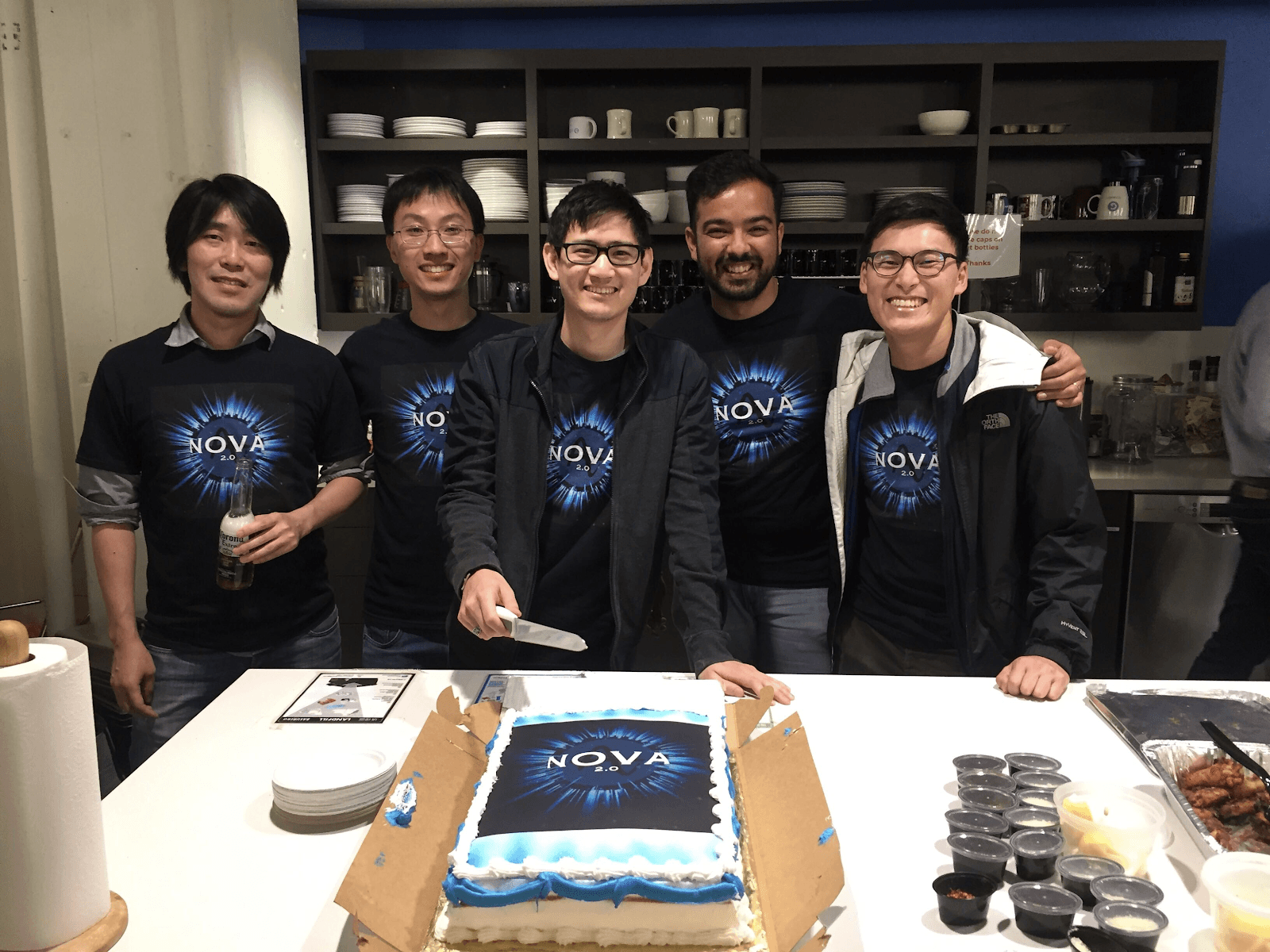

The Team (L to R): William Lau, Jeffrey Wang, Curtis Liu, Nirmal Utwani, and Sebastian Song

If any of this is interesting to you, please reach out to us by commenting on this blog or through LinkedIn. We are always looking for feedback and ideas to improve our infrastructure.

If you would like to join our team to solve problems and own part of our infrastructure, please reach out to us at careers@amplitude.com.

Nirmal Utwani

Amplitude Director of Engineering, AI Analytics

Nirmal is a founding engineer at Amplitude, part of the team from Day 1, building the company's analytics platform from the ground up. As Director of Engineering, he now leads teams responsible for Amplitude's AI products and core analytics capabilities, serving millions of users across thousands of enterprise customers. His expertise spans distributed systems, query engines, and applying LLMs to complex analytical workflows.