8 Data Science Skills That Every Employee Needs

When data scientists look to others for improving their skills, they can get a better understanding of the company that exists around the data.

Analytics is about getting your team the data insights it needs to build better products and make the right decisions for your company.

But if your team can’t understand that data, then this is all for naught. Software like Amplitude can make your data easy to understand, but each member of your team still needs basic data skills to get the most value out of what they’re looking at.

These skills can help your team, whether in product, marketing, or sales, interpret the data as it comes in. They also equip team members to work with your data scientists on new ideas for your product, and give them the confidence to work alongside those data scientists to improve the business.

To do all of this, every employee should be able to…

1. Understand what correlation means

Correlations get a rough ride, but they are the backbone of data science. We always want to know how different variables change in relation to each other.

For instance, your two variables might be the number of people completing your onboarding flow and the number of people retained after a month. If your onboarding flow is valuable and helps new users get to their ‘a-ha’ moment quickly, then you may guess that the two numbers are positively correlated: when the first variable increases (people completing the onboarding flow), the second variable increases as well (retention after one month).

Correlation ranges between -1 and +1. A negative coefficient means the two variables move in opposite directions (as one variable goes up, the other goes down). A positive coefficient means both variables move in the same direction (as one goes up, the other goes up too). A correlation of exactly 0 indicates no linear relationship between the two variables.
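To make the coefficient concrete, here is a minimal sketch of the standard Pearson correlation in plain Python. The weekly counts are invented for illustration and are not real product data; the function and variable names are also just placeholders.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances (the shared 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical weekly counts, invented for illustration only.
onboarding_completions = [120, 150, 170, 200, 260]
retained_after_month = [30, 41, 44, 55, 70]

r = pearson(onboarding_completions, retained_after_month)
print(f"correlation = {r:.2f}")  # close to +1: the two series rise together
```

A value near +1 here only says the two series rise together in this made-up sample; it says nothing about why.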

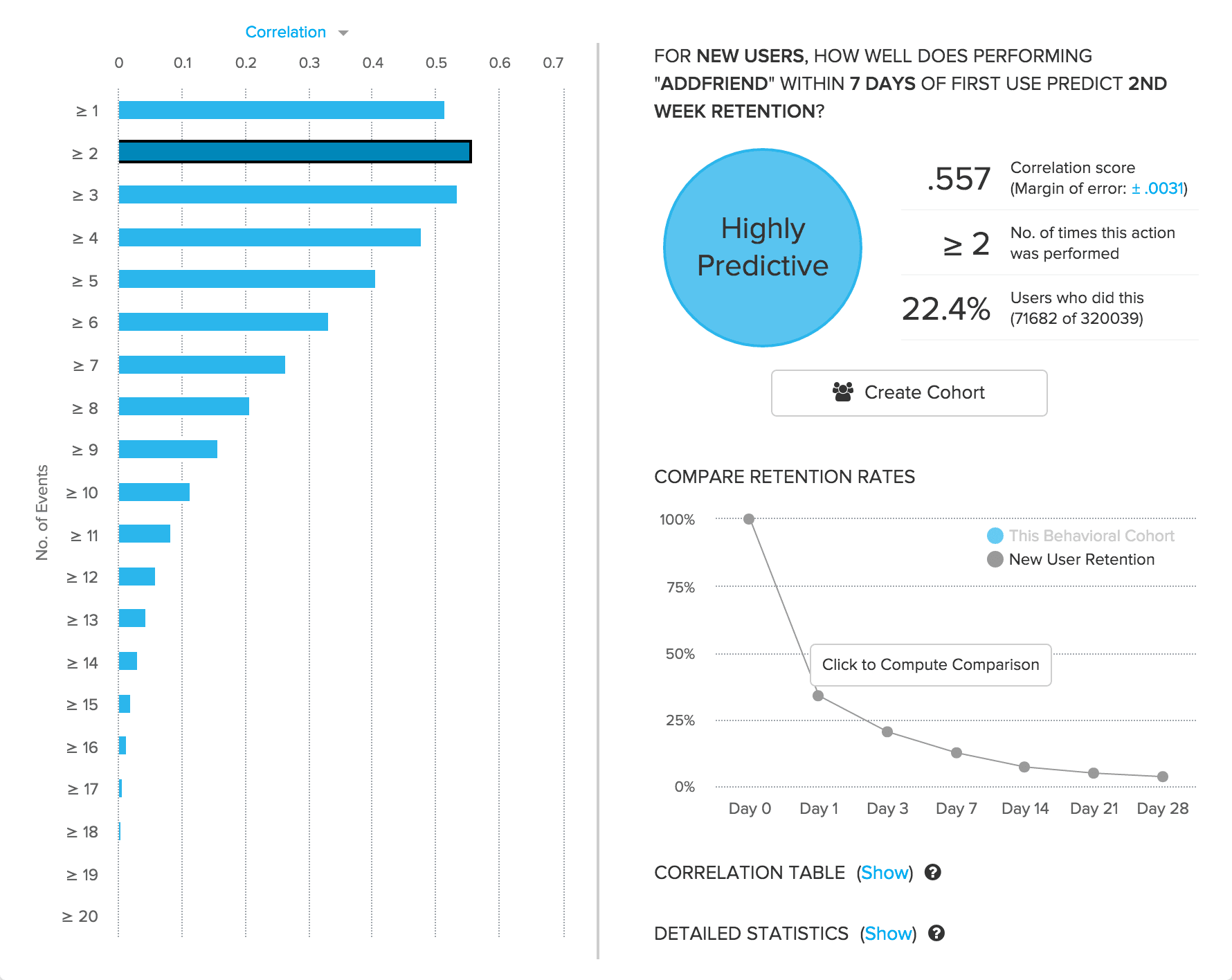

We use correlations in Compass to see how well users performing certain events predicts whether or not those users were retained. We have generalized event correlations into four categories:

- Highly Predictive (correlation >= 0.4)

- Moderately Predictive (0.4 > correlation >= 0.3)

- Slightly Predictive (0.3 > correlation >= 0.2)

- Not Predictive (correlation < 0.2)

A screenshot from Compass showing the correlation of users who added a friend in 7 days to being second week retained.
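The four categories listed above can be written as a small lookup. This is only a sketch of the thresholds as stated in the list, not Compass code; the function name is made up, and treating negative correlations as "Not Predictive" is an assumption that follows from reading "correlation < 0.2" literally.

```python
def predictiveness(correlation):
    """Map a correlation value to the four category labels listed above."""
    if not -1.0 <= correlation <= 1.0:
        raise ValueError("correlation must be between -1 and +1")
    if correlation >= 0.4:
        return "Highly Predictive"
    if correlation >= 0.3:
        return "Moderately Predictive"
    if correlation >= 0.2:
        return "Slightly Predictive"
    # Assumption: anything below 0.2, including negatives, falls here.
    return "Not Predictive"


for value in (0.45, 0.35, 0.2, 0.1):
    print(value, "->", predictiveness(value))
```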

It is 100% true that correlation doesn’t equal causation. Just because two variables move together doesn’t mean that one actually changes the other. For example, your revenue and number of people completing your onboarding flow are probably positively correlated, but that doesn’t mean completing onboarding directly causes more spend; both metrics are probably bumped by an overall increase in current customers.

The “correlation doesn’t equal causation” argument is exactly why you need to run tests when you are trying to make improvements to your product.

2. Find the best sample size for your tests

Your hypothesis is that the font of your signup page footer is what is holding back your conversions. Your designer has chosen Roboto, whereas the latest growth hacks tell you Comic Sans is a conversion winner. You start your A/B test, and then nothing happens. Literally. It’s not that you don’t get good results, it’s that you’ll get no results.

Your sample size will be too small.

Even if you get millions of views a month, only a small percentage of those will go to the signup page, of which only a small percentage will go to the footer, of which only a small percentage will click on the text. Split that small fraction in half between your control and test pages, and your eventual sample size is too small to show any significant change.

That might be an extreme example, but for A/B tests to work you need a large number of people in both your A and B conditions. These tiny change experiments can work for Facebook and Google because they have obscene traffic. But you likely don’t. Any employee who wants to set up an A/B test needs to understand the limitations that sample size will put on what they can test.

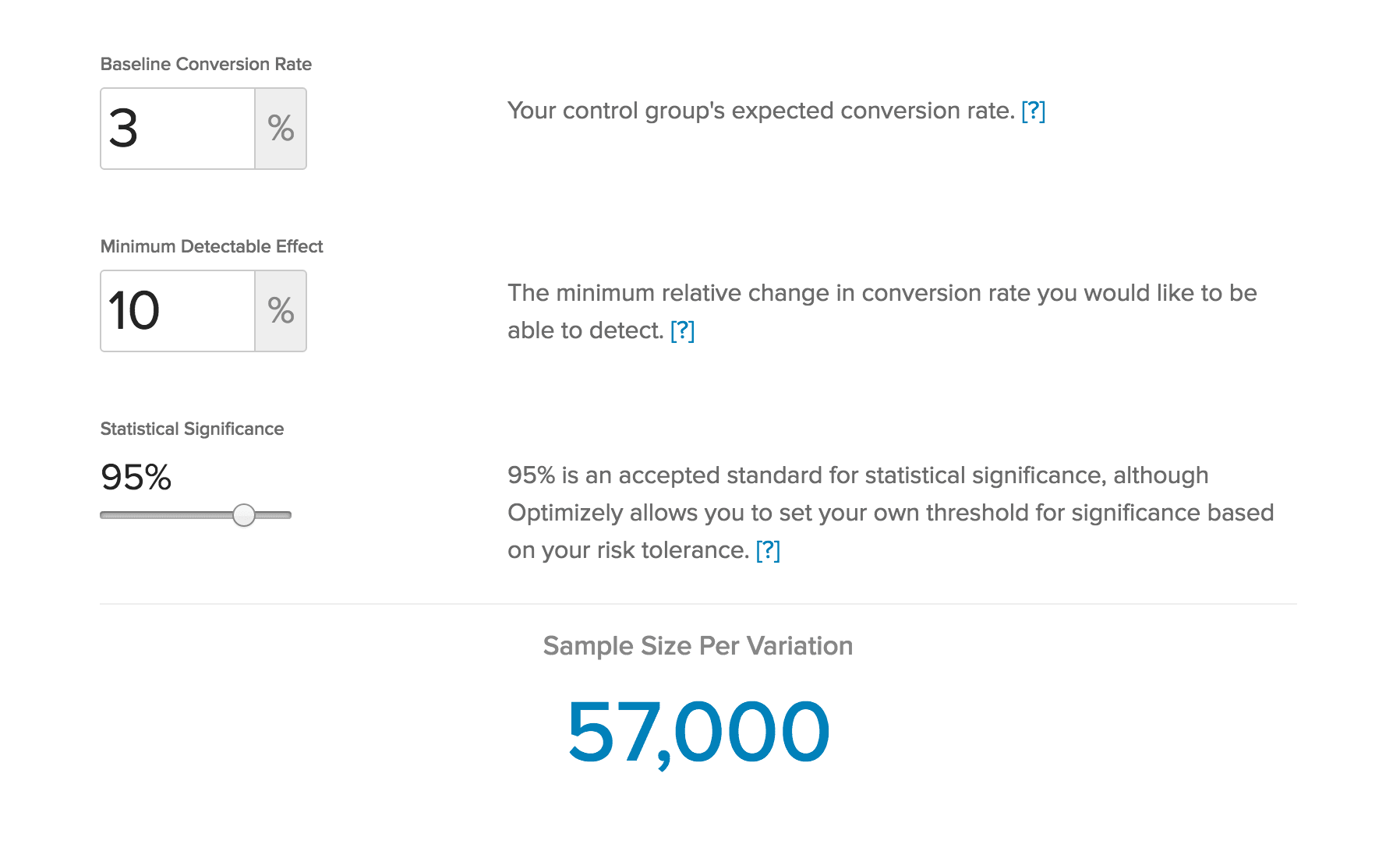

Optimizely has a sample size calculator which will help you understand the kind of numbers you need for statistical significance.

This allows you to set what your current conversion rate is along with what effect you want to see. Decide your significance level and it will spit out the sample size you need for each variation. Here you can see that if you want to see your conversion rate change from 3% to 3.3% and know that it is a real change, you need 57K people in each of your variations.

If you don’t have that type of traffic, you need to rethink your test.
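For a rough feel of where numbers like this come from, here is a sketch using the textbook sample size formula for comparing two proportions. It is not Optimizely's method: the two-sided 5% significance level and 80% power are assumptions chosen for illustration, so the result lands in the same ballpark as the 57K quoted above without matching it exactly.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline, target, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variation (classical two-proportion test).

    alpha and power are illustrative defaults, not values taken from any calculator.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + target * (1 - target)
    effect = target - baseline
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# The example from the text: detect a change from 3% to 3.3%.
n = sample_size_per_variation(0.03, 0.033)
print(f"about {n:,} visitors per variation")

# A bigger expected lift needs far fewer visitors.
print(f"3% -> 6%: {sample_size_per_variation(0.03, 0.06):,} per variation")
```

The useful takeaway is the shape of the formula: the required sample grows with the inverse square of the effect you want to detect, which is why tiny changes need huge traffic.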

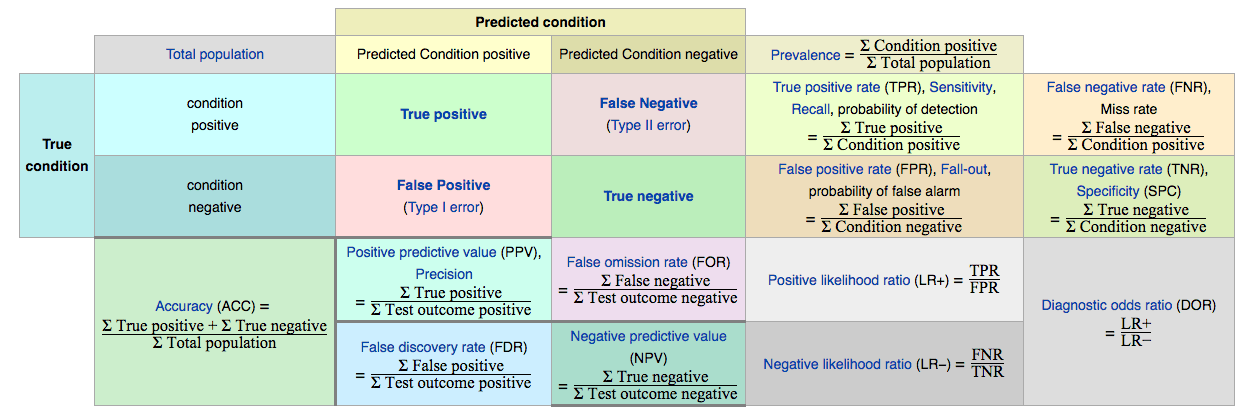

3. Know why PPV matters

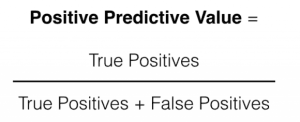

PPV, or positive predictive value, is a measure of the precision of your tests. It allows you and your team to know whether the behaviors you are measuring are predictive of the metrics you are interested in, such as retention.

You calculate PPV by taking the number of true positive samples in your experiment and dividing by the combined number of true and false positive samples.
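As a quick sketch of that calculation, with made-up counts rather than real results:

```python
def positive_predictive_value(true_positives, false_positives):
    """PPV = true positives / (true positives + false positives)."""
    predicted_positive = true_positives + false_positives
    if predicted_positive == 0:
        raise ValueError("PPV is undefined when nothing was predicted positive")
    return true_positives / predicted_positive


# Hypothetical: 1,000 users performed the behavior; 800 of them were retained
# (true positives) and 200 were not (false positives).
ppv = positive_predictive_value(true_positives=800, false_positives=200)
print(f"PPV = {ppv:.0%}")  # prints "PPV = 80%"
```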

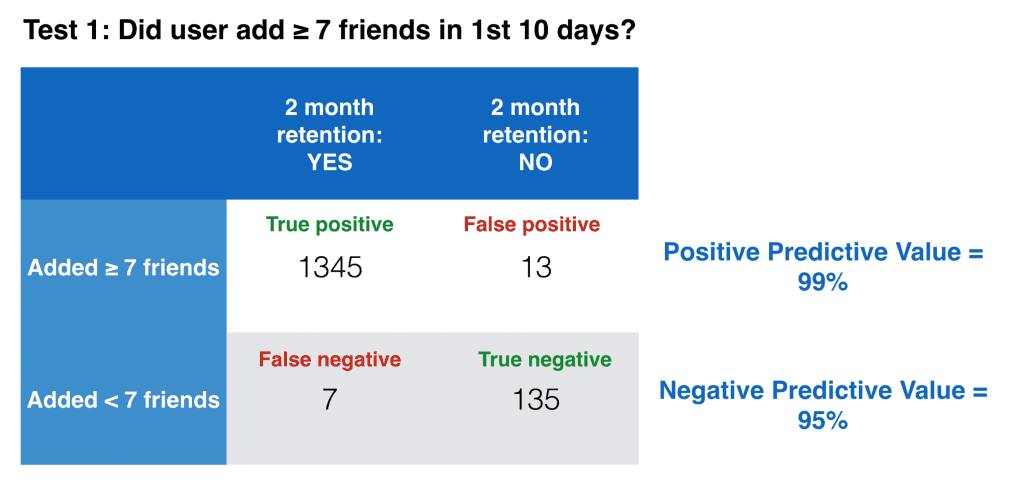

Let’s run through what this means with Facebook’s “7 friends in 10 days.” If Facebook were using Compass at the time of launch, they could have looked at this exact question: Did a user adding 7 friends or more in the first 10 days of use increase their chances of retention 2 months later? Compass would then compute this matrix for them:

This also includes the negative predictive value, NPV. What this tells you is that this question is a great way to separate out your cohorts. Adding 7+ friends in the first ten days is highly predictive of whether that person will be around in two months’ time. Conversely, not adding 7 friends is highly predictive of that person not being around in two months’ time.

With a simple question and a simple metric, you’ve been able to parse out your most important cohorts and find exactly what is driving them to stay.

This is only a small part of a larger confusion matrix. Through just setting out a simple binary question like this and tracking true and false positives and negatives, you can compute an array of statistics that help you understand your data better:

(Source: Wikipedia)

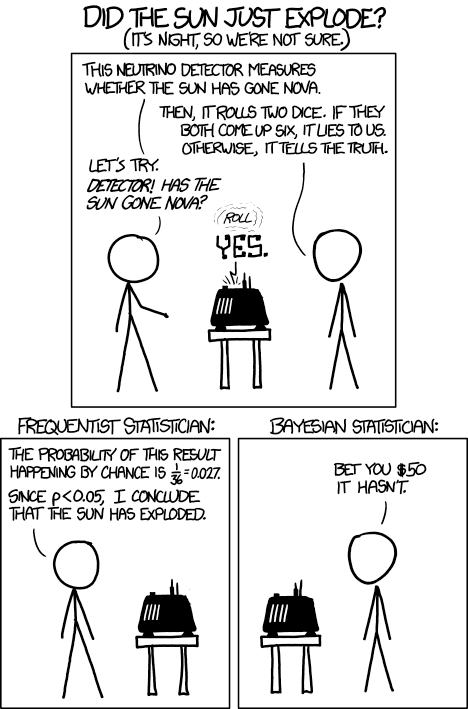

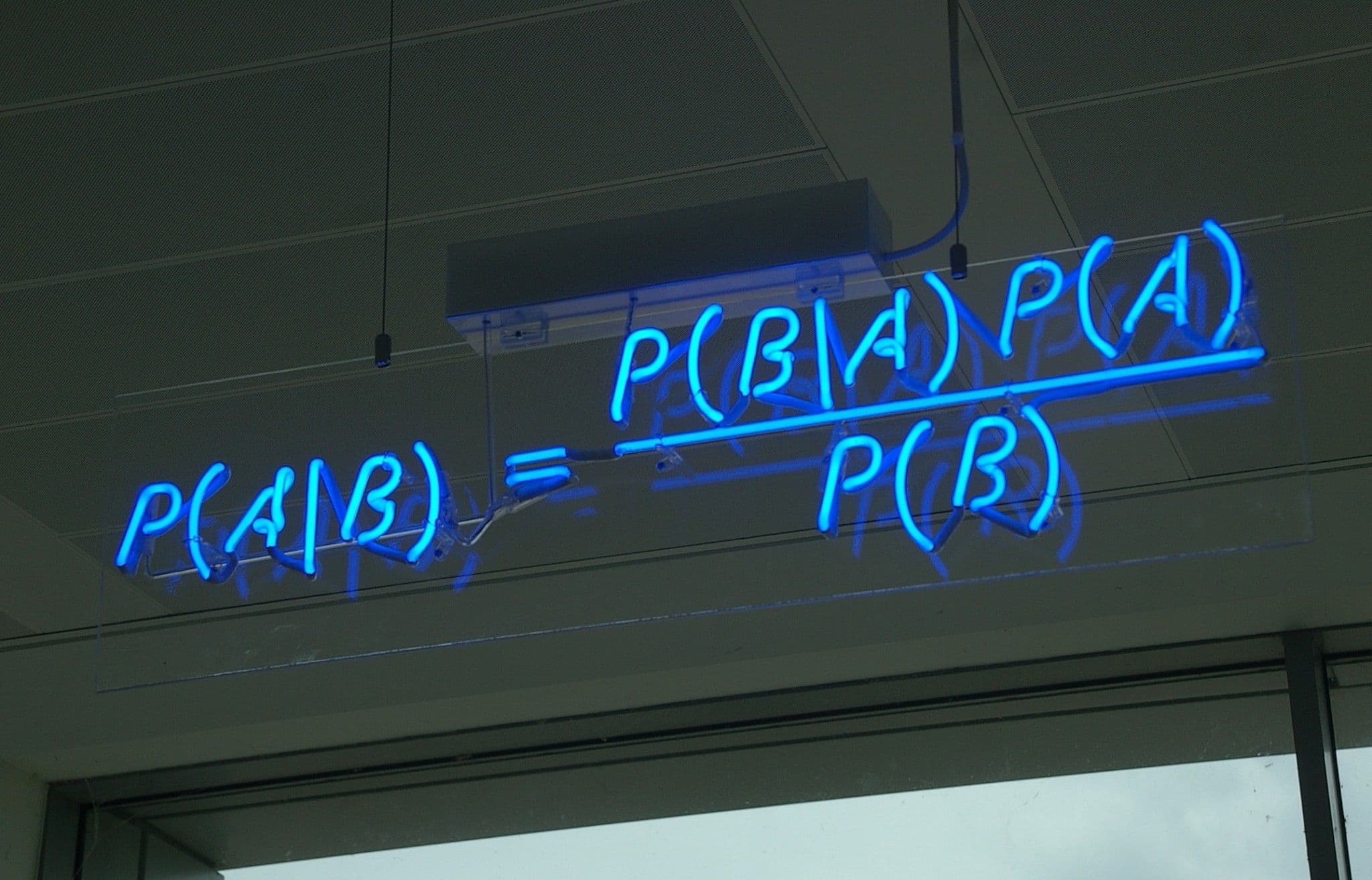
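A few of the statistics that fall out of a confusion matrix can be sketched in a handful of lines. The four counts below are hypothetical, chosen only so the arithmetic is easy to follow, and the function name is a placeholder. The sketch assumes every denominator is non-zero.

```python
def confusion_stats(tp, fp, tn, fn):
    """Common statistics derived from true/false positives and negatives."""
    total = tp + fp + tn + fn
    return {
        "ppv": tp / (tp + fp),          # of users flagged positive, share truly positive
        "npv": tn / (tn + fn),          # of users flagged negative, share truly negative
        "sensitivity": tp / (tp + fn),  # share of actual positives that were flagged
        "specificity": tn / (tn + fp),  # share of actual negatives that were not flagged
        "accuracy": (tp + tn) / total,  # share of all users classified correctly
    }


# Hypothetical counts: "did the behavior" vs. "was retained".
stats = confusion_stats(tp=800, fp=200, tn=900, fn=100)
for name, value in stats.items():
    print(f"{name:>12}: {value:.2f}")
```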

4. Think Like Bayes

Bayesian statistics differs from more orthodox “frequentist” statistics by treating the world as probabilistic. This means that instead of sharp decision boundaries (e.g. _this hypothesis is true/false_) you get probabilities on whether your hypothesis is true or false (e.g. _there is a 90% chance it’s true_).

Another fundamental difference is that Bayesian thinking allows you to use your knowledge of the world, called your prior, to build your initial model:

(Source: xkcd)

These probabilities can then be updated as more data comes in. This means that as you are running experiments, you can update your thinking depending on the evidence. Bayes works like this:

- First, you take your hypothesis: Changing the button on the signup page will increase signups.

- Then, you assign a probability to it being true, which is your prior: Changing buttons on other pages has increased conversion rates, so I think changing this one will have an X% chance of success.

- Collect data and incorporate the implications of that data into your previous statement of the proposition’s probability. This is your posterior.

- Then, your posterior becomes your prior for the next iteration. Collect more data, repeat, and continue.

(Source: wikimedia commons)
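The loop described in the steps above can be sketched with Bayes' rule for a single yes/no hypothesis. Everything specific here is an assumption made for illustration: the 5% and 6% signup rates, the 50% starting prior, and the batches of visitors are all invented, and a real analysis would usually consider a range of possible rates rather than just two.

```python
from math import exp, log


def log_likelihood(rate, signups, visitors):
    """Log-probability of the observed signups given a signup rate.

    The binomial coefficient is omitted because it cancels in the ratio below.
    """
    return signups * log(rate) + (visitors - signups) * log(1 - rate)


def update(prior, signups, visitors, rate_if_true, rate_if_false):
    """Return the posterior probability that the hypothesis is true."""
    if not 0 < prior < 1:
        raise ValueError("prior must be strictly between 0 and 1")
    log_ratio = (log_likelihood(rate_if_true, signups, visitors)
                 - log_likelihood(rate_if_false, signups, visitors))
    odds = prior / (1 - prior) * exp(log_ratio)
    return odds / (1 + odds)


# Hypothesis: the new button lifts signups from 5% to 6% (assumed rates).
belief = 0.5  # prior: no strong opinion either way
batches = [(60, 1000), (58, 1000), (50, 1000)]  # (signups, visitors), made up

for signups, visitors in batches:
    # Each posterior becomes the prior for the next batch of data.
    belief = update(belief, signups, visitors, rate_if_true=0.06, rate_if_false=0.05)
    print(f"after {signups}/{visitors} signups: P(hypothesis) = {belief:.2f}")
```

Batches that look like the 6% rate push the belief up, and batches that look like 5% pull it back down, which is the "update as evidence comes in" behavior the steps describe.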

The best thing for non-data scientists about Bayesian thinking is that it’s intuitive—you already do this. You have an idea of how something works in your head, and as new evidence comes in, you update your internal model.

5. Know the limitations of machine learning

Machine learning is a catch-all term for a number of different algorithmic and statistical methods. This is the cool tool du jour, with almost every new startup having some AI component. It even makes pretty pictures:

(Source: The Guardian)

This image was created by the “dreams” of DeepDream, Google’s neural network visualization tool. You don’t have to understand the finer points of DeepDream, but it is a good idea to understand what machine learning is and what it is not, what it is capable of and what it is not. This can help you in two ways:

- If you have an idea about some data, you’ll be in a better position to know whether machine learning can help you understand that data better.

- If another company comes knocking on your door saying that their algorithms are the answer to all your problems, you’ll know whether they are immediately BS or not.

Machine learning is an iterative process. Through repeated training, these algorithms learn to describe features in your dataset. They train a model, which could be as simple as a linear regression or as complicated as a deep convolutional neural network, on known data. That model can then be used to classify unknown data.
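At the simple end of that spectrum, here is a minimal sketch of the train-then-apply loop: a one-variable linear regression fitted by gradient descent. It predicts a number rather than classifying, the data is invented, and the learning rate and epoch count are arbitrary choices that happen to work for this tiny dataset.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = slope * x + intercept by repeatedly nudging both parameters."""
    slope, intercept = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # How far off is the current model on each known example?
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to each parameter.
        grad_slope = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = 2 / n * sum(errors)
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


# Known (training) data, invented so that y = 2x + 1 exactly.
known_x = [0, 1, 2, 3, 4]
known_y = [1, 3, 5, 7, 9]

slope, intercept = train_linear_model(known_x, known_y)

# Apply the trained model to an input it has never seen.
prediction = slope * 10 + intercept
print(f"slope={slope:.2f}, intercept={intercept:.2f}, prediction for x=10: {prediction:.1f}")
```

The model recovers the pattern only because the pattern is present in the training data, which is the limitation the next paragraph turns to.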

What’s important to know is that ML is not the answer to all the world’s ills. While it can find relationships that other techniques will miss, it won’t give you answers that aren’t already in your data. It is still dependent on really good quality data. Which leads us neatly to…

6. Clean Up Your Data

If there is just one item on this list that your data scientist would want you to learn, it would be this. You will instantly become their best friend if you present them with a clean dataset for their analysis. When you do, they can get to work faster, and you get answers faster.

Learning to clean datasets makes everyone’s life better.
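What "clean" means depends on the dataset, but a few fixes come up again and again: stray whitespace, inconsistent capitalization, missing values, and duplicate rows. A small sketch, using an invented user list and hypothetical field names:

```python
def clean_rows(rows):
    """Normalize text fields, drop rows with no email, and remove duplicates."""
    cleaned = []
    seen_emails = set()
    for row in rows:
        # Treat None as empty, then trim whitespace and lowercase.
        email = (row.get("email") or "").strip().lower()
        plan = (row.get("plan") or "").strip().lower()
        if not email:
            continue  # nothing to identify this user by
        if email in seen_emails:
            continue  # duplicate of a row already kept
        seen_emails.add(email)
        cleaned.append({"email": email, "plan": plan or "unknown"})
    return cleaned


# Invented example rows with typical problems.
raw = [
    {"email": " Ana@Example.com ", "plan": "Pro"},
    {"email": "ana@example.com", "plan": "pro"},  # same user, different formatting
    {"email": None, "plan": "free"},              # missing identifier
    {"email": "bo@example.com", "plan": ""},      # missing plan
]

cleaned = clean_rows(raw)
for row in cleaned:
    print(row)
```

Choices like dropping rows without an email or labeling a blank plan as "unknown" are judgment calls, so it helps to agree on them with your data scientist first.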

7. Write SQL

SQL stands for Structured Query Language, and is the language of almost all databases. By speaking SQL, you are speaking the language of your data and your database. You will have all of your data at your fingertips.

Sometimes you might want to just test out a theory before you bring in your data scientists. If you already understand SQL, then you can quickly run a few queries to see if your theories hold weight.
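As an illustration of that kind of quick check, the sketch below asks whether users who completed onboarding were retained at a higher rate. The table, column names, and rows are all hypothetical, and it runs against an in-memory SQLite database through Python's built-in `sqlite3` module rather than any real event store.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users ("
    "id INTEGER PRIMARY KEY, "
    "completed_onboarding INTEGER, "  # 1 = yes, 0 = no
    "retained_30d INTEGER)"           # 1 = still active after 30 days
)
# Invented rows, for illustration only.
conn.executemany(
    "INSERT INTO users (completed_onboarding, retained_30d) VALUES (?, ?)",
    [(1, 1), (1, 1), (1, 0), (1, 1), (0, 0), (0, 1), (0, 0), (0, 0)],
)

query = """
SELECT completed_onboarding,
       COUNT(*)          AS users,
       AVG(retained_30d) AS retention_rate
FROM users
GROUP BY completed_onboarding
ORDER BY completed_onboarding
"""
results = conn.execute(query).fetchall()
conn.close()

for completed, users, rate in results:
    print(f"completed_onboarding={completed}: {users} users, {rate:.0%} retained")
```

A gap between the two groups is a reason to dig further, not proof that onboarding causes retention.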

Now, Amplitude prides itself on providing accessible data insights for the whole company, regardless of whether a person knows SQL or not. But for the budding data scientist in you, we do also give you direct SQL access to your event data, giving you the flexibility to answer even the most complex questions about user behavior.

8. Tell a good story

This is another data science skill that stands out as non-obvious. But if neither your data scientists nor you have this skill, then all the other skills are useless.

What’s often forgotten about data scientists is that they are not all robots (yet). Data science isn’t all about the math. In fact, when it is, it’s next to useless for the business as a whole. Data scientists need to build a convincing story with your data. This is why they are so indispensable. They need storytelling abilities to convince the audience of what the data shows.

By developing their “light quant” skills and working as an analytical translator, your data scientists can explain your data to the company and the world in a more compelling fashion.

Every other employee needs these skills, both for their own work (telling you a compelling story of why you should hire a new CFO) and for when they want to discuss data. By building that story around data, not letting it sound stale, you are more likely to get people on board with any experiments you want to run.

3 Skills That Every Data Scientist Needs

Just as other employees need data science skills, so do data scientists need to take on skills from other areas of the business:

- Without business acumen, they won’t know which questions and data are most vital to the success of the company.

- Without creativity, they won’t think up the best questions and what possibilities there are with the data.

- Without reasoning, they won’t arrive at the true answer to all their questions and data.

If your team understands some of the basic scientific concepts that underpin your data scientist’s role, then they can better prepare their data and have a more in-depth conversation with them. When data scientists look to others for improving their skills, they can get a better understanding of the company that exists around the data.

Then both analysts and others in your company can be stronger together.

Archana Madhavan

Senior Learning Experience Designer, Amplitude

Archana is a Senior Learning Experience Designer on the Customer Education team at Amplitude. She develops educational content and courses to help Amplitude users better analyze their customer data to build better products.
