The Significance of Data Governance in Digital Analytics

Learn how data governance features can help improve your digital analytics implementation.

In digital analytics, organizations spend significant time collecting, interpreting, and reporting on data. The overarching goal is to leverage data as a competitive advantage. You can use data to improve digital advertising effectiveness or improve digital products. But before you can leverage your data, you need to be sure you have the correct data. Making decisions based on inaccurate data isn’t much better than making decisions based on no data. It is easy to ignore the significance of good data governance. Data governance is often seen as a laborious task that can be deprioritized, but that is a mistake. In this post, I will outline some data governance features essential to long-term success in digital analytics.

Data governance goes beyond the initial implementation

The best way to have good digital analytics data is to have a good implementation. The hours organizations spend planning and implementing digital analytics events and properties are essential to having reliable data. Several levels of quality assurance and testing often occur during the initial implementation to verify data quality. But after the initial implementation, many organizations find that their digital analytics data quality degrades. This degradation is often due to a lack of data governance. While initial deployments can be high-profile within the organization, data governance is less glamorous. Even though implementation and data governance share the same goal of good data quality, many organizations focus more on the former than the latter.

One of the reasons why organizations don’t do a great job with data governance is that the digital analytics products they use haven’t invested enough in data governance features. Many leading digital analytics products on the market lack basic data governance features. This lack of governance features is a shame because data governance underpins everything related to the digital analytics program.

Implementation solution design

Understanding what data you collect in your digital analytics implementation is essential. Simply identifying and communicating the events and properties your organization has chosen to collect is table stakes. Unfortunately, many digital analytics vendors use spreadsheets to share what is in the analytics solution. Tracking implementation solutions in spreadsheets has the following downsides:

- Information is separated from the digital analytics product and reports

- Multiple versions of the solution design can spread across the organization, making it difficult to know which is the current version

- Spreadsheets cannot tell you the status of each solution design element (e.g., is it currently collecting data?)

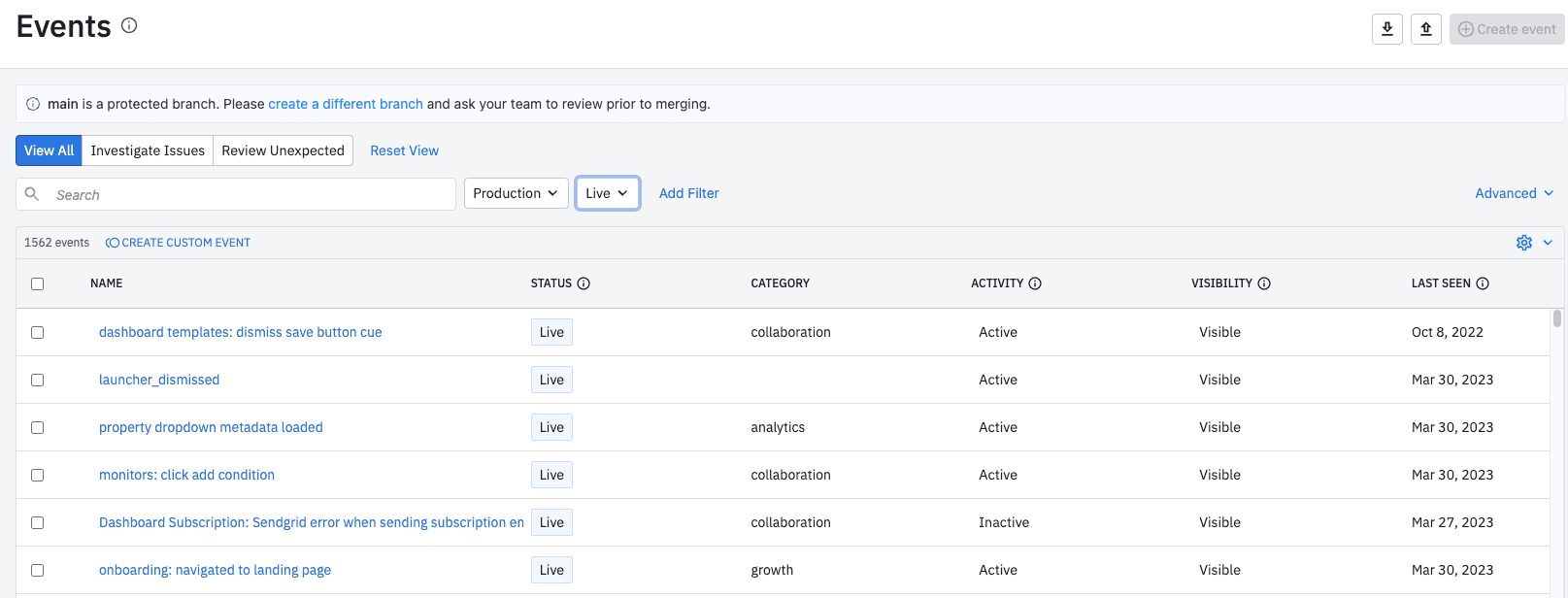

Having all solution information directly in the digital analytics product interface is preferable so you can always view the most up-to-date information:

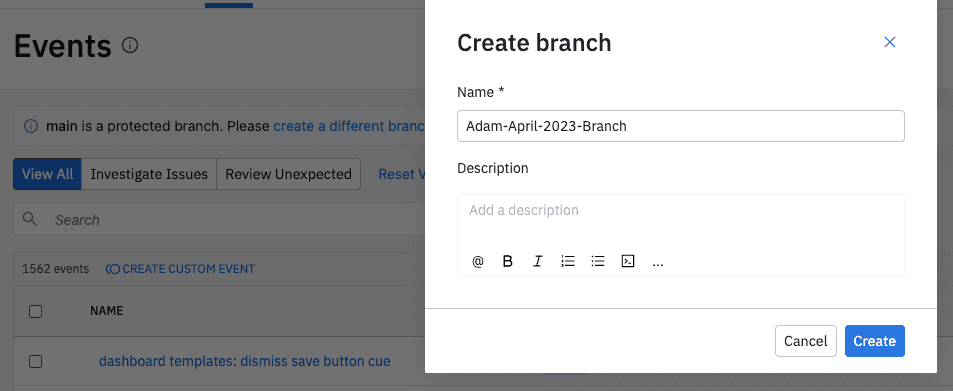

It is also beneficial if multiple people can iterate on the solution design, possibly even simultaneously. Ideally, you can manage solution design iterations via “branches” like you would do if using GitHub:

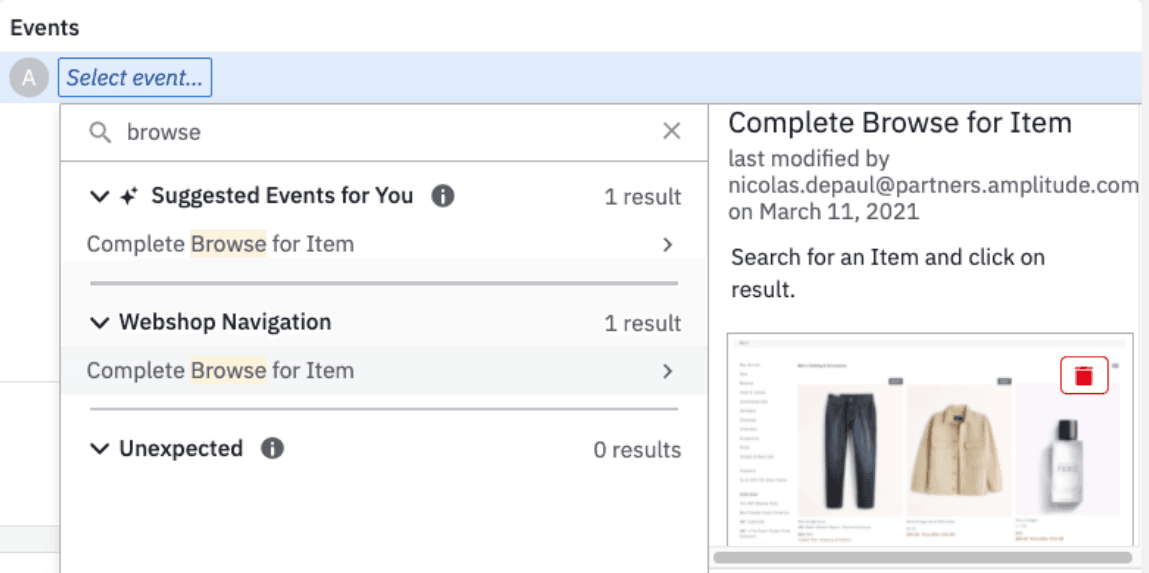

Data schema validation

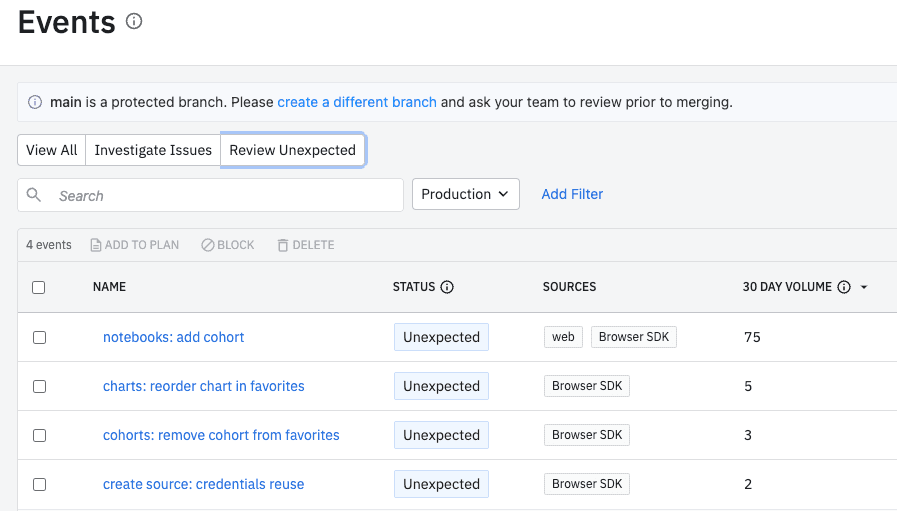

Another critical aspect of data governance is data schema validation. One component of schema validation is automated verification, which ensures that the data being collected is what is expected. This is important because digital analytics team members are often busy and don’t have the time to proactively monitor when new events or properties are being sent to the implementation. Tracking unexpected events is better handled by the digital analytics product, which is aware of the solution design. Automation allows the digital analytics product to alert the team if unexpected events or properties are found. These unexpected items are quarantined until they are reviewed so they don’t taint production data:

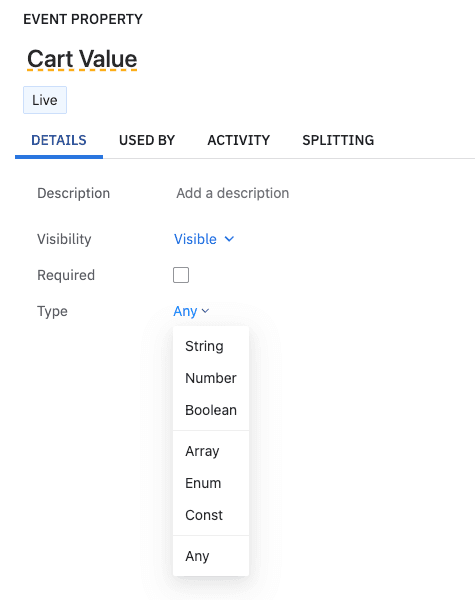
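To make the quarantine idea concrete, here is a minimal Python sketch. It assumes a hypothetical tracking plan that maps each event name to its allowed property names; the `TRACKING_PLAN` structure, the `ingest` function, and the sample events are illustrative only and do not represent any vendor's API.

```python
from dataclasses import dataclass, field

# Hypothetical solution design: event name -> set of allowed property names.
TRACKING_PLAN = {
    "Sign Up": {"plan", "referrer"},
    "Purchase": {"order_id", "revenue"},
}


@dataclass
class IngestResult:
    accepted: list = field(default_factory=list)
    quarantined: list = field(default_factory=list)  # (event, reason) pairs


def ingest(events, plan=TRACKING_PLAN):
    """Accept planned events; quarantine anything the plan does not know about."""
    result = IngestResult()
    for event in events:
        name = event["event"]
        props = set(event.get("properties", {}))
        if name not in plan:
            result.quarantined.append((event, f"unexpected event: {name}"))
        elif props - plan[name]:
            extra = ", ".join(sorted(props - plan[name]))
            result.quarantined.append((event, f"unexpected properties: {extra}"))
        else:
            result.accepted.append(event)
    return result


incoming = [
    {"event": "Sign Up", "properties": {"plan": "free"}},
    {"event": "form_reaction", "properties": {}},
    {"event": "Purchase", "properties": {"order_id": "A1", "coupon": "X"}},
]
result = ingest(incoming)
for event, reason in result.quarantined:
    print(reason)
```

Only the first event reaches `result.accepted`. The other two wait in `result.quarantined` with a reason attached, so a reviewer can either add them to the plan or reject them.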

Another feature of data schema validation is the ability to verify that the collected data values match the expected format. For example, if you have a data property that is meant to be a number but is being passed a text string, that should be flagged automatically. If you have a specific postcode format that must be followed, each value should be verified so that data is consistent. Regarding data schema validation, an “ounce of prevention is worth a pound of cure!”
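A value-level check along these lines could be sketched as follows. The `RULES` table and the five-digit postcode pattern are assumptions made for illustration; a real implementation would use whatever types and formats your own solution design specifies.

```python
import re

# Hypothetical rules: property name -> predicate over the value.
# The postcode pattern is a simplified five-digit example, not a real standard.
POSTCODE = re.compile(r"\d{5}")

RULES = {
    "revenue": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "postcode": lambda v: isinstance(v, str) and POSTCODE.fullmatch(v) is not None,
}


def invalid_properties(properties, rules=RULES):
    """Return the names of properties whose values break their rule."""
    return sorted(
        name
        for name, value in properties.items()
        if name in rules and not rules[name](value)
    )


flagged = invalid_properties({"revenue": "19.99", "postcode": "9021", "plan": "pro"})
print(flagged)
```

Here `revenue` is flagged because it arrives as a text string rather than a number, and `postcode` is flagged because it has four digits instead of five. Properties without a rule, such as `plan`, pass through unchecked.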

Object officiation

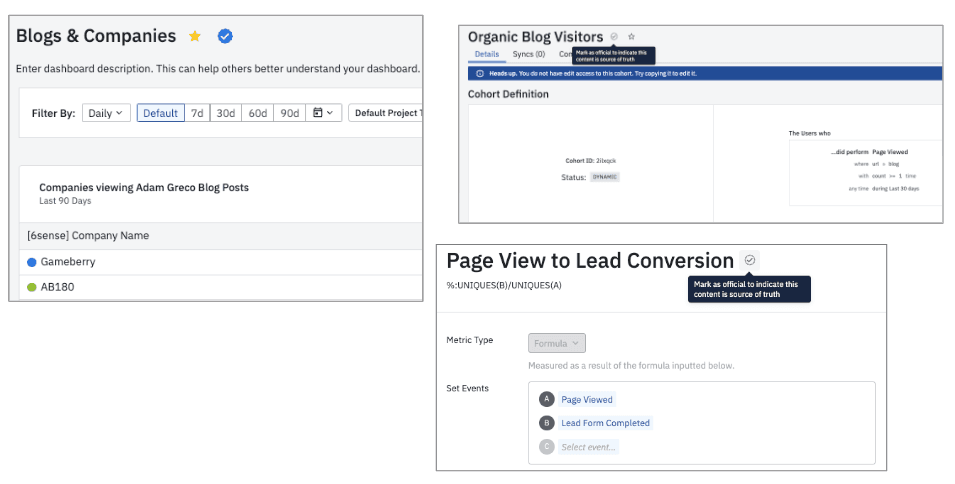

When people in your organization are using your digital analytics product, it can be challenging to know which analytics components are correct and which are not. Often users create new cohorts, metrics, etc., to try things out, but an implementation owner hasn’t verified them.

Object officiation is the process of marking digital analytics objects as “official” within the implementation. As shown below, objects can be assigned some indicator (e.g., blue check) to signify that someone with the appropriate credentials has sanctioned the object. Officiated objects can be trusted and assumed to be correct.

Object officiation takes the guesswork out of selecting digital analytics components and helps avoid different users spreading non-official objects across reports and dashboards.

Object deduplication

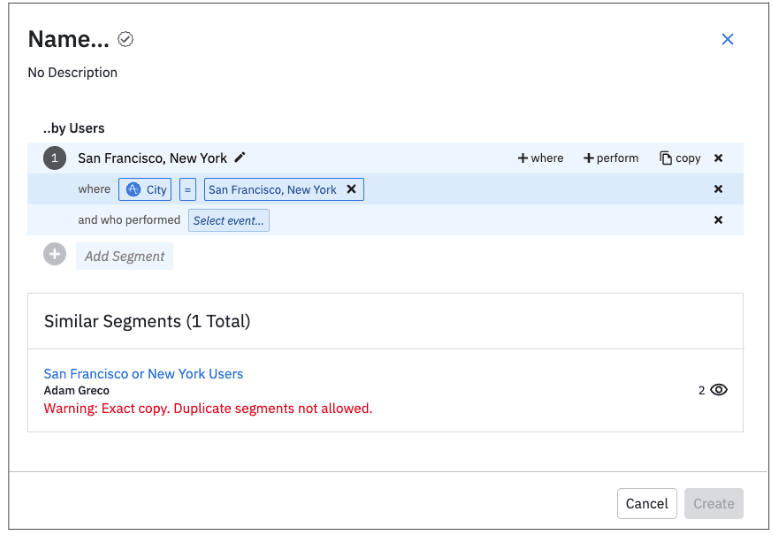

Object deduplication is tangentially related to object officiation. One of the most annoying aspects of digital analytics is the proliferation of multiple versions of the same analytics objects. These objects may be calculated metrics, cohorts, etc. While object officiation can help minimize this, it is often still possible for analytics users to create numerous versions of analytics objects. These different versions can be associated with multiple reports or dashboards. This problem is exacerbated when a flaw is found in the definition or formula of the analytics object. Instead of fixing it in one place, organizations must find all the different versions and fix each separately, which can be a governance nightmare!

Advanced analytics products (like Amplitude!) have addressed this duplication issue directly. The best way to avoid duplication is to prevent users from creating duplicate objects. As shown below, if a user attempts to create an exact duplicate, the digital analytics product will prohibit them from saving it.
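One way such a check could work is to fingerprint each object's definition and refuse to save a definition whose fingerprint already exists. The sketch below is an assumption about the general technique, not a description of how Amplitude implements it; `ObjectRegistry` and the metric definitions are hypothetical.

```python
import hashlib
import json


def fingerprint(definition):
    """Hash a definition so key order does not affect the result."""
    canonical = json.dumps(definition, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ObjectRegistry:
    """Hypothetical store of saved objects, keyed by definition fingerprint."""

    def __init__(self):
        self._names_by_fingerprint = {}

    def save(self, name, definition):
        key = fingerprint(definition)
        existing = self._names_by_fingerprint.get(key)
        if existing is not None:
            raise ValueError(f"'{name}' duplicates existing object '{existing}'")
        self._names_by_fingerprint[key] = name


registry = ObjectRegistry()
registry.save("Conversion Rate", {"numerator": "Purchase", "denominator": "Visit"})

message = None
try:
    # Same definition with the keys in a different order: still a duplicate.
    registry.save("Conv. Rate v2", {"denominator": "Visit", "numerator": "Purchase"})
except ValueError as error:
    message = str(error)
print(message)
```

Serializing with sorted keys means two definitions that differ only in key order produce the same fingerprint. This only catches exact duplicates; near-duplicates with slightly different formulas would still need human review.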

Object usage

Organizations implementing digital analytics often implement many different events and properties. Every team has additional data they want to collect. When you have many data points, it can be overwhelming to understand which data elements are used most or least. Many digital analytics products make the situation worse by obfuscating object usage. In some digital analytics products, understanding which objects are used, where, and by whom requires administrators to download usage data via CSV and create reports.

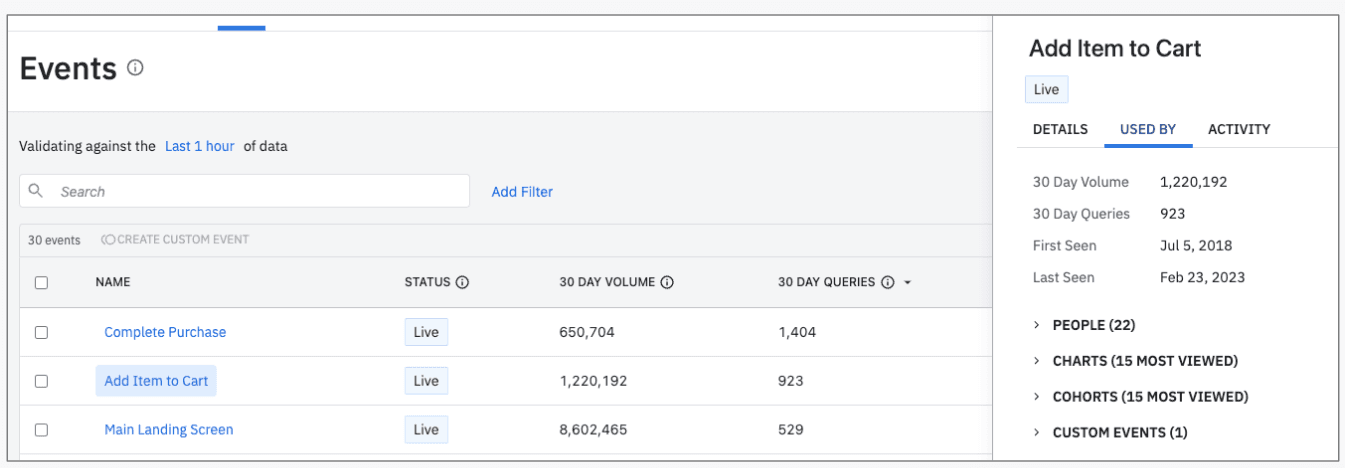

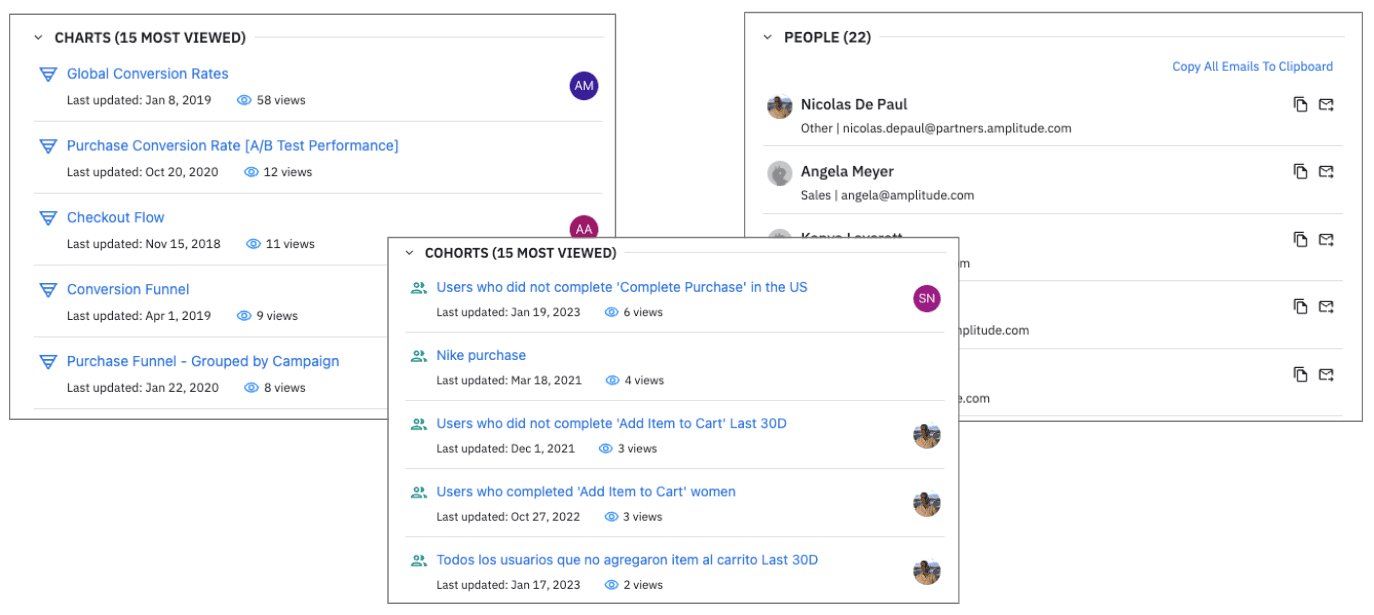

Modern digital analytics products make it easy to understand where every digital analytics event or property is used, as shown here:

This information should also be visible at a more detailed level to view the specific people and objects using the event or property:

Having this information at your disposal allows implementation administrators and managers to:

- See which events and properties analytics users are engaging with

- Determine if some events and properties may require additional training due to lack of use

- Consider removing events and properties that are no longer needed
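If your product only offers raw usage exports, a report like this can be approximated with a short script. The sketch below assumes a hypothetical export in which each saved chart lists its owner and the events it queries; the field names and sample data are invented for illustration.

```python
# Hypothetical saved objects, each listing its owner and the events it queries.
charts = [
    {"name": "Signup Funnel", "owner": "ana", "events": ["Visit", "Sign Up"]},
    {"name": "Revenue Trend", "owner": "raj", "events": ["Purchase"]},
    {"name": "Weekly Visits", "owner": "ana", "events": ["Visit"]},
]
planned_events = ["Visit", "Sign Up", "Purchase", "form_reaction"]


def usage_report(charts, planned_events):
    """Map every planned event to the charts and owners that reference it."""
    report = {event: {"charts": [], "owners": set()} for event in planned_events}
    for chart in charts:
        for event in chart["events"]:
            entry = report.setdefault(event, {"charts": [], "owners": set()})
            entry["charts"].append(chart["name"])
            entry["owners"].add(chart["owner"])
    return report


report = usage_report(charts, planned_events)
unused = [event for event, entry in report.items() if not entry["charts"]]
print(unused)
```

Seeding the report with every planned event is what makes unused events visible. In this sample, `form_reaction` appears in no chart, which makes it a candidate for training or removal.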

Understanding analytics events

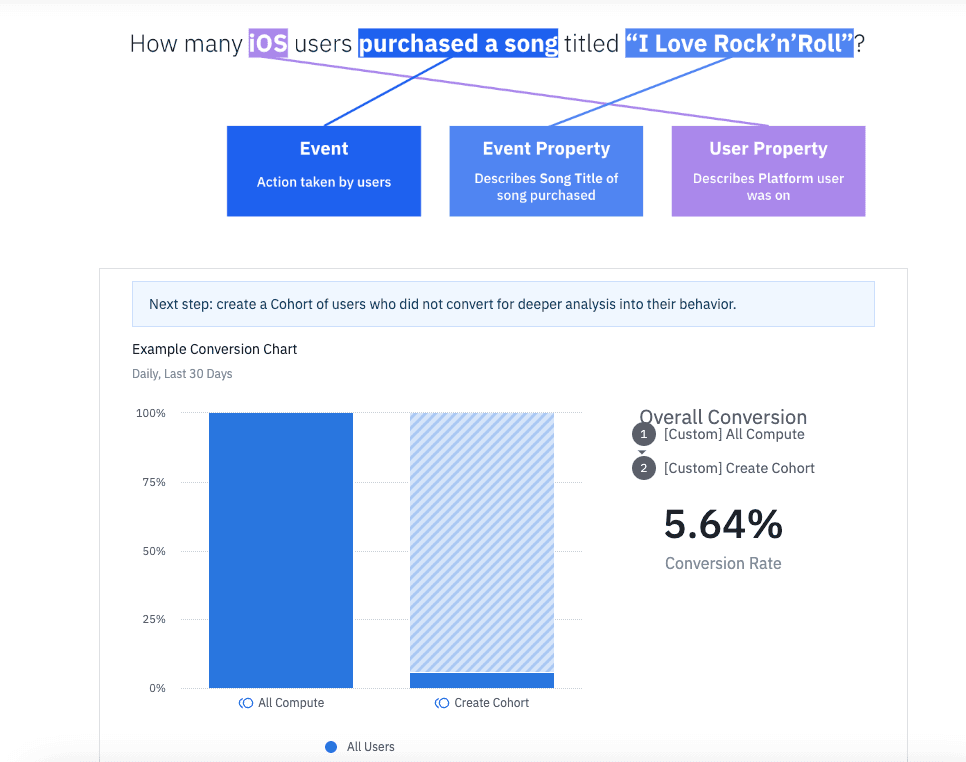

A common problem in digital analytics implementations is that casual end users aren’t entirely sure what each analytics event means. Developers are notorious for creating unintelligible event names (e.g., form_reaction). Many analytics users are uninvolved in the implementation process and don’t have the backstory on how and why analytics events were named. Therefore, anything administrators or managers can do to help end users ensure they use the correct event for their analysis is greatly appreciated.

One way to address this is to create a data dictionary. This is where users can learn about each analytics event and see where it is on the website or mobile app. Data dictionaries can be stored in a document or a shared space like Confluence or Miro.

At Amplitude, we offer two interesting ways to communicate information about your implementation and its events and properties. One is through our Notebook feature. Amplitude Notebooks are freeform canvases where you can add text, images, video, analytics reports, etc. Notebooks are a great place to document your implementation and share context around analytics events and properties.

The other way Amplitude helps administrators share information about implementation events is through event screenshots. Administrators can attach screenshots to events in Amplitude’s Data module. When each event has a screenshot showing where it is triggered, end users can see that screenshot as they scroll through the various events during report creation. These screenshots give end users the confidence to pick the correct event for their analyses.

Data transformation

No matter how hard you try, there will be times when incorrect data is sent to your digital analytics product. Developers may make a change to a website or app that causes the wrong data to be sent. Customers could enter incorrect information into form fields. Users could refresh pages and create duplicate data. However it happens, inaccurate data ends up being collected. Some of the preceding schema validation items can help mitigate bad data. Still, your digital analytics product should be able to modify, update, or transform data if needed.

Some digital analytics products don’t provide a way to transform data and require you to make changes in data warehouses. This isn’t helpful, since end users typically work in the digital analytics product interface, where the data remains incorrect. Administrators should have the option to transform data if needed. You should look for the following data transformation features in your digital analytics provider.

Value modification

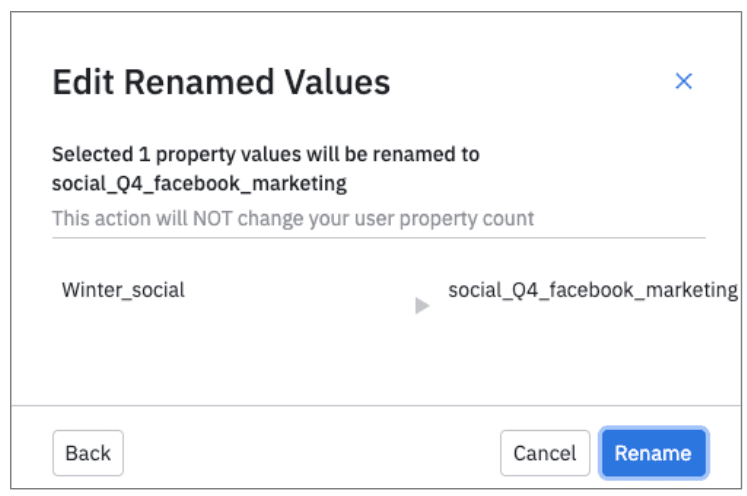

Administrators should have the ability to change specific property values if needed. For example, imagine a marketing campaign code sent to the analytics product without the desired naming convention. Administrators should be able to modify these values so end users see the correct value when using reports and dashboards.

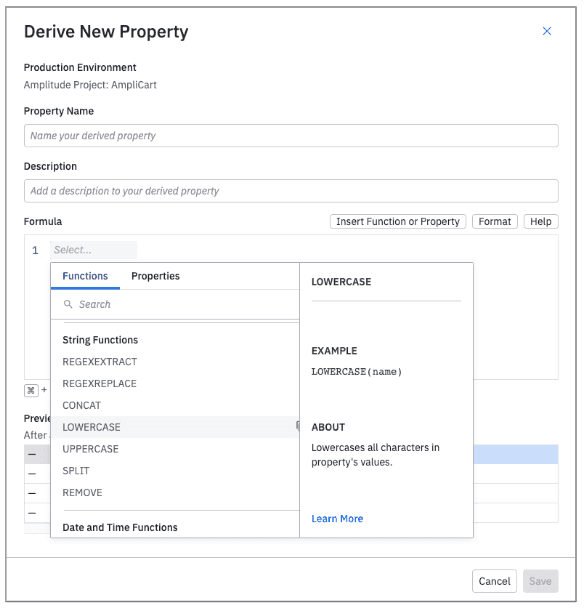

In addition to one-off value changes, administrators should be able to create rules that modify many values at once. These rules may use formulas or functions to apply changes to all affected property values.

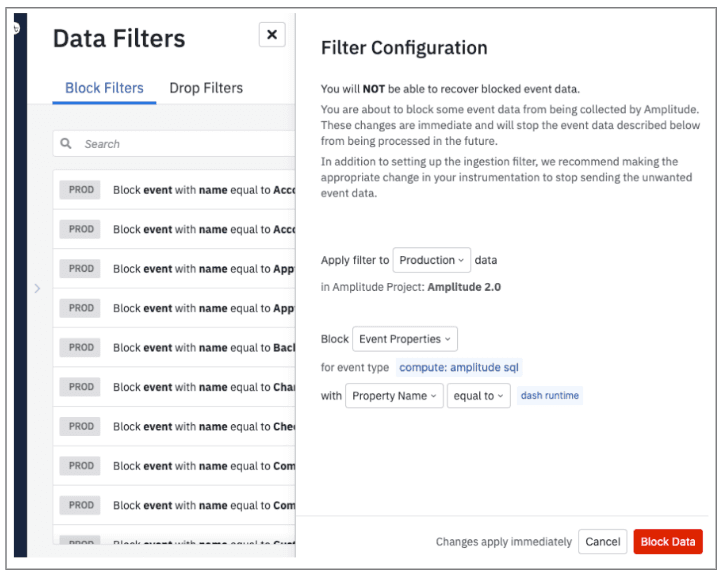
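As a rough illustration of both approaches, the Python sketch below combines a one-off override table with a general rule applied to every campaign code. The naming convention (lowercase with underscores), the `OVERRIDES` table, and the sample values are assumptions for the example.

```python
import re

# Hypothetical one-off corrections for specific campaign codes.
OVERRIDES = {"spring sale!!": "2024_spring_sale"}


def normalize_campaign(value):
    """Rule applied to every value: trim, lowercase, and use underscores."""
    cleaned = value.strip().lower()
    return re.sub(r"[^a-z0-9]+", "_", cleaned).strip("_")


def transform(value):
    """Apply a one-off override if one exists, otherwise the general rule."""
    key = value.strip().lower()
    return OVERRIDES.get(key, normalize_campaign(value))


raw_values = ["  Summer-Promo 2024 ", "Spring Sale!!", "email_newsletter"]
display_values = [transform(value) for value in raw_values]
print(display_values)
```

The raw values are left untouched and the transformed values are computed separately, so a rule can be corrected or withdrawn later without losing the original data.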

Data obfuscation

Another desired data transformation feature is the ability to hide or obfuscate incorrect data. Sometimes portions of data need to be fixed, and you want to hide them from users in the meantime. An example might be test data that was accidentally sent to production. This data isn’t deleted, only hidden from all users.

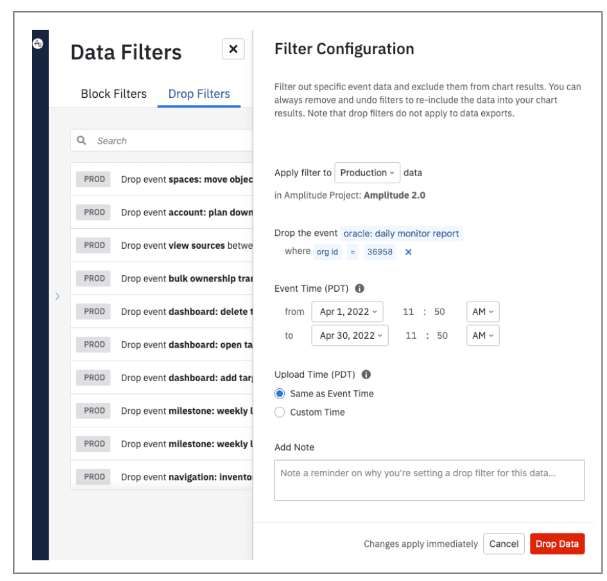
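The difference between hiding and deleting can be sketched as a filter applied at query time. In this hypothetical example, the `environment` property and the rule list are assumptions; the point is only that the stored events are never changed.

```python
# Hypothetical hide rules: each is a predicate over a stored event.
hide_rules = [
    lambda event: event["properties"].get("environment") == "test",
]

stored_events = [
    {"event": "Purchase", "properties": {"environment": "production", "revenue": 20}},
    {"event": "Purchase", "properties": {"environment": "test", "revenue": 9999}},
]


def visible_events(events, rules):
    """Filter at query time; the stored events are never modified."""
    return [event for event in events if not any(rule(event) for rule in rules)]


visible = visible_events(stored_events, hide_rules)
print(len(stored_events), len(visible))
```

Removing the rule makes the hidden events visible again, which is what separates this approach from deletion.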

Data deletion

In extreme cases, you will have analytics data you want to remove altogether. Therefore, your digital analytics product should enable you to select specific portions of data and remove them.

Automated data testing

Another critical data governance tool is automated testing. There are products on the market that will check your website or app to ensure that analytics code is functioning properly. These products can also be configured to act like customers and verify that your analytics tracking works as designed. Popular products in this area include ObservePoint and QA2L. It would take an entire blog post to explain these products’ advanced functionality, so I encourage you to check them out and consider adding them to your digital analytics stack.

User data privacy compliance

Another aspect of data governance is honoring user privacy deletion requests. Privacy regulations like GDPR and CCPA give consumers the ability to request that data collected about them be deleted. Therefore, it is critical that digital analytics teams respond to and act on these requests as dictated by the regulations that apply to them.
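Mechanically, acting on a deletion request means removing every event tied to the requesting user and keeping a record of what was removed. The Python sketch below shows that step only, using hypothetical in-memory events and user IDs; it is not a statement of what any regulation requires, and real systems would also need to cover backups and downstream copies.

```python
def apply_deletion_requests(events, user_ids):
    """Drop every event belonging to a user who asked to be deleted.

    Returns the remaining events and a per-user count of removed events,
    which could feed an audit log.
    """
    requested = set(user_ids)
    removed = {user_id: 0 for user_id in requested}
    remaining = []
    for event in events:
        if event["user_id"] in requested:
            removed[event["user_id"]] += 1
        else:
            remaining.append(event)
    return remaining, removed


events = [
    {"user_id": "u1", "event": "Visit"},
    {"user_id": "u2", "event": "Visit"},
    {"user_id": "u1", "event": "Purchase"},
]
remaining, removed = apply_deletion_requests(events, ["u1", "u3"])
print(remaining)
print(removed)
```

The per-user counts include requests that matched no events (`u3` here), which lets the team confirm that every request was processed even when there was nothing to delete.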

Summary

As you can see, there is much more than meets the eye regarding data governance. Ensuring that your digital analytics data is accurate takes an enormous amount of proactive work. Different digital analytics providers offer varying levels of assistance in data governance. Because all analyses depend on quality data, the data governance capabilities provided by your vendor are something to consider.

Adam Greco

Former Product Evangelist, Amplitude

Adam Greco is one of the leading voices in the digital analytics industry. Over the past 20 years, Adam has advised hundreds of organizations on analytics best practices and has authored over 300 blogs and one book related to analytics. Adam is a frequent speaker at analytics conferences and has served on the board of the Digital Analytics Association.
