Do This Now: 8 Ways to Focus your Product Team on Impact, Not Features

Now is the time to shift your team's focus to measuring the impact of the product and features you're building.

“I work in a feature factory! What can I do to nudge my organization to focus more on impact and less on delivering features?”

In this post, I am going to present eight things you can do this week to start nudging your organization towards being more impact focused. Big-bang, linear change works in some contexts, but product development is a different beast; it’s very easy to push the wrong buttons and disrupt your change-agent mojo. With that context in mind, here are eight impact-focused nudges that I’ve observed successfully and safely encourage change in a range of organizations.

1. Reframe feature requests as ‘bets’

So…someone asks you to build exactly X, or to generate a feature-centric roadmap. My favorite approach here is to play their game, but layer another game on top. Let me explain. Let’s assume for a moment that the person asking for X magically knows exactly what to build. They are clairvoyant and omniscient. Some say Steve Jobs was like this. I disagree, but let’s give the feature requestor the benefit of the doubt. “Could you describe your bet in more detail?” I’ll ask. “What will we see if this bet works out? What are the odds? How are we going to play this hand?”

What you’ll notice is that 1) the requestor feels good about “placing bets” (it feels bold, not like running measly experiments), and 2) calling it a bet somehow liberates them to talk openly about risk and expected upside. It sucks to get a prescriptive request, but by getting more clarity on the bet, we’re halfway towards some of the activities below, like the product decision review and the one-pager.
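If it helps to make the bet conversation concrete, here is a minimal sketch of the arithmetic behind “What are the odds?” Everything in it is an assumption for illustration: the `Bet` class, its field names, and the numbers are hypothetical, and the odds are whatever the requestor says they are.

```python
from dataclasses import dataclass


@dataclass
class Bet:
    """A feature request reframed as a bet (all fields are hypothetical)."""
    name: str
    odds_of_success: float  # requestor's own estimate, between 0 and 1
    upside: float           # value if the bet pays off, in any consistent unit
    cost: float             # what it costs to play the hand, same unit

    def expected_value(self) -> float:
        # Probability-weighted upside minus the cost of placing the bet.
        return self.odds_of_success * self.upside - self.cost

    def break_even_odds(self) -> float:
        # The odds at which the bet exactly pays for itself.
        return self.cost / self.upside


# Made-up numbers, purely to show the shape of the conversation.
bet = Bet("Feature X", odds_of_success=0.25, upside=400_000, cost=60_000)
print(bet.expected_value())   # 40000.0
print(bet.break_even_odds())  # 0.15
```

The point is not the precision of the numbers. Asking for odds, upside, and cost gets the requestor talking about risk in their own terms.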

2. Write the recap before you begin

I call this one the recap in a bottle. Before you launch into the initiative, work with your team to design a recap presentation in advance (a precap if you like). Put together something you’ll be proud of regardless of the result.

- What is the status quo?

- If your intervention is successful, what might we observe?

- Whose behaviors/outcomes will change?

- How will they change?

- How will this new behavior benefit customers/users and the business?

- What will customers say about the updates?

Commit to present the recap to the broader organization at some fixed point in the future or on some cadence. And actually present it! The transparency and willingness to introspect will inspire other teams. More info on how to run precaps here.

3. Fill up a learning backlog/board

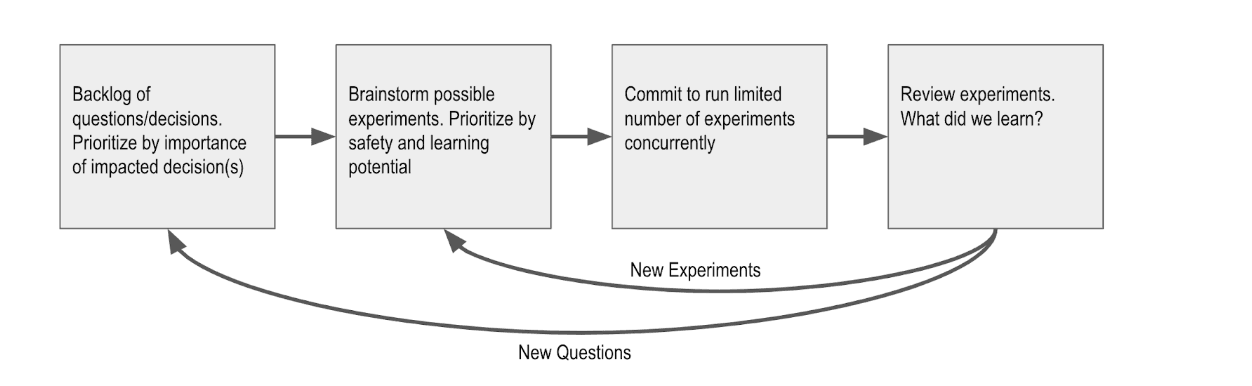

Fill and groom a learning backlog prioritized by the “value” of the learning. Frame each item in the learning backlog as a question paired with the decision (or decisions) that answering the question might inform. To prioritize your learning efforts, consider the maximum amount of money you would pay for the information. Now brainstorm experiments to answer these questions. How might you go about answering the question quickly, cheaply, and safely? Run one or two concurrent experiments with expiration dates, and periodically review what you have learned (as publicly as possible). Even teams tasked with prescriptively building features will benefit from explicitly calling out their learning goals.


Example Backlog Items

Question:

How do onboarding goals and habits differ between our enterprise users and SMB users?

Decide:

- whether to design/develop alternate flows based on customer segment.

- how much to focus on driving organic adoption during the onboarding flow, as we believe enterprise and SMB users will be less/more receptive to these efforts

Question:

Where is the leakiest part of the free-trial to paid conversion funnel in terms of impact on customer LTV (vs. user counts)?

Decide:

- whether to reassess how we report on, and optimize for, conversion rates

- whether to focus on pricing optimization
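The backlog items above could be kept in something as simple as the sketch below. It is a sketch under stated assumptions: the `LearningItem` structure, the `max_price` field, and the dollar figures are hypothetical, invented here only to show the “what would you pay for the answer?” ordering and the limit of one or two concurrent experiments.

```python
from dataclasses import dataclass


@dataclass
class LearningItem:
    question: str
    decisions: list[str]  # decisions the answer might inform
    max_price: float      # most we would pay for the answer (hypothetical)


backlog = [
    LearningItem(
        question="How do onboarding goals and habits differ between "
                 "our enterprise users and SMB users?",
        decisions=["Design alternate flows based on customer segment?"],
        max_price=20_000,  # made-up figure
    ),
    LearningItem(
        question="Where is the leakiest part of the free-trial to paid "
                 "conversion funnel in terms of impact on customer LTV?",
        decisions=["Reassess conversion reporting?",
                   "Focus on pricing optimization?"],
        max_price=50_000,  # made-up figure
    ),
]

# Highest-value learning first.
prioritized = sorted(backlog, key=lambda item: item.max_price, reverse=True)

# Run at most one or two experiments at a time.
MAX_CONCURRENT = 2
in_flight = prioritized[:MAX_CONCURRENT]

for item in in_flight:
    print(f"${item.max_price:,.0f}  {item.question}")
```

A spreadsheet or a physical board does the same job. What matters is that every question carries its decisions and a rough price.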

4. Hold quarterly product decision reviews

So you’ve been running in feature factory mode for a bit (and you weren’t doing the recap in a bottle exercise above)…well, it might be time for a product decision inventory and review. Product development is filled with countless decisions. Hold quarterly product decision reviews to ask, “What information did we have available at the time of the decision? What context were we operating under? What options did we weigh?”

The goal here is not to focus myopically on the outcome–what ended up happening (which is often luck driven)–but rather to review the decision-making process to understand opportunities for improvement.


The output of this exercise might include heuristic checklists, an agreement to instrument new parts of your application, new research techniques to try, timeboxed decision making, decision making moved closer to the front lines, and so on. One of my mantras is “high decision quality, high decision velocity”, and this exercise helps hone those skills. As with all of these activities: stress psychological safety. This work is hard, and we never have perfect information.

5. Meet regularly for insights workshopping sessions

Question-asking, research, modeling, analysis, and data storytelling take practice. The trouble is that most people feel put “on the spot” when doing official presentations. There’s a ton of pressure to get it right, which means they play it safe (or don’t do it at all). One nudge is to organize a regular cross-functional, cross-team insights/analytics workshopping lunch.

Settle on a short, five-minute presentation format, and leave a full ten minutes for a spirited discussion. Try to fit three or four presentations/discussions in per lunch. Importantly, make it “safe” for work to be unfinished and for non-experts to share their progress.

6. Add a behavior component to personas

Most product initiatives have (or should have) a “Who” in mind. Who are you building for? What are they trying to achieve? What do we know about them?

Without going deep into the merits of personas–there are passionate beliefs on the issue–it helps to, as the cliche goes, know your customer. Imagine I ask you to create a segment in your insights-tool-of-choice that identifies the “target” of your “intervention” (I prefer intervention to feature because to me it better describes what’s happening). What does that query look like? How do you go from all of the humans in your product to this specific set of humans?


The nice thing about this approach is that it appears fairly benign on the surface. But by really focusing on a narrow “Who”, you’ll be able to guide the discussion around impacts and outcomes more persuasively.
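As a rough illustration of “What does that query look like?”, here is a minimal sketch of narrowing all users down to one behavioral segment. The `User` fields, the 14-day window, and the “has not invited a teammate” rule are all hypothetical. Your insights tool will have its own way of expressing the same filter.

```python
from dataclasses import dataclass


@dataclass
class User:
    user_id: str
    segment: str             # e.g. "enterprise" or "smb" (hypothetical field)
    days_since_signup: int
    teammates_invited: int


def is_target(user: User) -> bool:
    """Hypothetical 'Who': SMB users in their first 14 days
    who have not yet invited a teammate."""
    return (
        user.segment == "smb"
        and user.days_since_signup <= 14
        and user.teammates_invited == 0
    )


# Made-up users, just to exercise the filter.
all_users = [
    User("u1", "enterprise", 10, 0),
    User("u2", "smb", 10, 0),
    User("u3", "smb", 40, 0),
    User("u4", "smb", 5, 3),
]

target = [u for u in all_users if is_target(u)]
print([u.user_id for u in target])  # ['u2']
```

Writing the filter down forces the conversation: if the team cannot agree on the conditions, it does not yet agree on the “Who”.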

Related Reading: Create User Personas That Stick

7. Write one-pagers

There’s nothing groundbreaking about describing an initiative with a one-pager (or six-pager in the case of Amazon). Unfortunately, many front-line teams never see the “why” encapsulated succinctly. The “ship has sailed,” so to speak. This can be easily remedied.

Make a point of writing one-pagers–ideally sharing a common structure to make scanning and comparison easier–and make them visible for teams to see. Better yet, invite team members to ask questions and comment while initiatives are in the incubation/assessment stage.

One of my favorite workshops involves designing an org-appropriate one-pager format. You can pull this off yourself. Do a quick activity brainstorming questions that should be answered in each one-pager and use that to bootstrap your v1. The practice alone of clarifying the “bet” on a single page can reorient the organization towards impacts. See here for a guide to writing one-pagers and a good example of product docs from Amplitude’s product team:

Free Download: Sample one-pager from Amplitude’s product team

8. Design (and iterate on) an always-on dashboard

Most dashboards never see the light of day. They’re assembled in a vacuum by a single person and then forgotten, along with the background information, terms, and caveats. Part of the reason this happens is (again) because of fear and perfectionism. What if “it” is “wrong”? Another reason for the dashboard graveyard is that teams don’t iterate on their dashboards to make them more effective.

Related Reading: Why Experiment?

The solution? A big shared monitor and an always-on dashboard. Invite editing and tweaking. With time, you’ll come up with something that hopefully informs decisions.

In closing, what has worked for you when it comes to shifting your organization towards being more outcome focused (in a healthy way, of course)? How do you change the tenor of the conversation without rocking the boat? How do you “hack” the status quo in a way that doesn’t threaten your internal influence?

As an experiment, I’d love to write a follow-up post where I curate your tips. Please submit tips in the comments, and I’ll assemble them into another post in the near future. Thanks!

John Cutler

Former Product Evangelist, Amplitude

John Cutler is a former product evangelist and coach at Amplitude.
