What Is a Modern Data Stack for Growth?

Learn why every company needs a data stack to fuel growth and how to build one with purpose-built technologies.

The term “modern data stack” has garnered a lot of interest in the past 18 months, with most of the discussion centered on analytics and how a set of modern tools and technologies can improve the analytics craft.

The most widely accepted modern data stack for analytics comprises data tools spanning the following four categories:

- Data collection via ELT: Used to extract data from databases and third-party tools and load it into a data warehouse

- Data warehousing: Used to store a copy of the data

- Data transformation: Used to transform the data and build models for analysis

- Business intelligence: Used to build reports using analysis-ready data models

However, this stack only partially serves the needs of product and growth teams as it ignores the following:

- Collecting event data from primary or first-party data sources (websites and apps)

- Storing this data in a cloud data warehouse

- Analyzing this data to understand user behavior and identify points of friction

- Activating the data in downstream tools to build personalized customer experiences

Therefore, as an extension of the modern data stack for analytics, a modern data stack for growth includes tools that enable teams to do the above.

The goal of this guide is to offer a complete breakdown of the data stack that empowers go-to-market (GTM) teams to take control of their workflows and do more without relying on data and engineering teams.

Collecting event data: CDI versus CDP

Collecting event data (also called behavioral data) is the first step toward adopting a modern data stack for growth. Getting it right is critical, because everything that follows hinges on clean, accurate data being available in the tools GTM teams use.

Purpose-built behavioral data collection tools fall under two closely-related categories with overlapping capabilities: customer data infrastructure (CDI) and customer data platform (CDP).

Because the term CDI hasn't been widely adopted, some CDI vendors refer to their product as a CDP even though it doesn't offer CDP capabilities. Adding to the confusion about what a CDP really is, a growing number of marketing automation vendors use the term CDP to describe a non-core feature of their product.

CDI is essentially a standalone solution whereas CDP is a bundled solution that includes CDI capabilities. Allow me to explain the differences in the simplest terms possible.

Customer data infrastructure

The purpose of a CDI is to collect behavioral data or event data from primary or first-party data sources—your websites and apps—and sync the data to data warehousing solutions as well as to third-party tools used for event-based analysis and activation.

Product and growth teams typically consume behavioral data in a product analytics tool to understand user behavior and in engagement tools to build personalized, data-powered experiences—a CDI’s job is to make the right data available in these tools.

CDIs also offer data quality and governance features to ensure that the data collected is not only accurate but also matches what you planned to track.
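To make the data quality point concrete, here is a minimal Python sketch of checking incoming events against a tracking plan before they are forwarded downstream. The plan contents, event names, and `validate_event` function are hypothetical. Real CDIs implement this with their own schema formats and tooling, so treat this as an illustration of the idea only.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical tracking plan: event name -> required properties and expected types.
TRACKING_PLAN: dict[str, dict[str, type]] = {
    "Signed Up": {"plan": str},
    "Checkout Completed": {"order_id": str, "amount_cents": int},
}


@dataclass
class ValidationResult:
    valid: bool
    errors: list[str] = field(default_factory=list)


def validate_event(name: str, properties: dict[str, Any]) -> ValidationResult:
    """Check one incoming event against the tracking plan before it is forwarded."""
    schema = TRACKING_PLAN.get(name)
    if schema is None:
        # Unplanned events are flagged rather than passed silently downstream.
        return ValidationResult(False, [f"unplanned event: {name!r}"])
    errors: list[str] = []
    for prop, expected_type in schema.items():
        if prop not in properties:
            errors.append(f"missing property: {prop!r}")
        elif not isinstance(properties[prop], expected_type):
            errors.append(
                f"{prop!r} should be {expected_type.__name__}, "
                f"got {type(properties[prop]).__name__}"
            )
    return ValidationResult(valid=not errors, errors=errors)
```

For example, `validate_event("Checkout Completed", {"order_id": "o-1", "amount_cents": "1999"})` is rejected because the amount arrives as a string. This sketch ignores extra properties that are not in the plan. A stricter setup could flag those as well.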

If you’d like to dig deeper into CDIs, I recommend having a look at this guide I wrote recently.

Customer data platform

As mentioned above, CDP is a bundled solution and can be best understood via the following formula:

CDP = CDI + Identity Resolution + Data Storage + Visual Audience Builder + Data Activation

Identity resolution, the ability to build audiences using a drag-and-drop visual interface (without writing SQL), and the ability to sync data to third-party tools are the core capabilities of a CDP. On top of these, CDPs store a copy of the data in their own data warehouse and make the data available for future consumption.
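Identity resolution can be hard to picture, so here is a minimal Python sketch of the deterministic version. Whenever two identifiers are seen together (for example, an anonymous browser ID on a login event), they are merged into one profile using a union-find structure. The `IdentityGraph` class and the identifier formats are hypothetical. Commercial CDPs use their own, often more elaborate, matching rules, which may include probabilistic matching.

```python
class IdentityGraph:
    """Union-find over identifiers such as anonymous IDs, user IDs, and emails."""

    def __init__(self) -> None:
        self._parent: dict[str, str] = {}

    def _find(self, x: str) -> str:
        self._parent.setdefault(x, x)
        # Path halving keeps repeated lookups close to constant time.
        while self._parent[x] != x:
            self._parent[x] = self._parent[self._parent[x]]
            x = self._parent[x]
        return x

    def link(self, a: str, b: str) -> None:
        """Record that identifiers a and b belong to the same person."""
        root_a, root_b = self._find(a), self._find(b)
        if root_a != root_b:
            self._parent[root_b] = root_a

    def same_person(self, a: str, b: str) -> bool:
        return self._find(a) == self._find(b)

    def profiles(self) -> list[set[str]]:
        """Group every known identifier into one set per resolved person."""
        groups: dict[str, set[str]] = {}
        for x in list(self._parent):
            groups.setdefault(self._find(x), set()).add(x)
        return list(groups.values())
```

Linking `anon:web-1` to `user:42` at login, and later `email:ana@example.com` to `user:42`, puts all three identifiers in a single profile. Activity recorded under any of them can then be attributed to one person.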

CDP is essentially a layer on top of CDI to activate or take action on the data.

Additionally, CDPs and even some CDIs can collect data from third-party tools. However, this is not their core offering. ELT tools like Fivetran, Airbyte, and Meltano are purpose-built and best suited to collect data from third-party tools and store it in a data warehouse.

Warehousing event data

A data warehouse is essentially a database designed for analytics and is used to store data from all possible data sources—first-party apps, third-party tools, as well as production databases.

Irrespective of the technology you use to collect event data, storing a copy of your data in a data warehouse such as Snowflake, BigQuery, or Firebolt is a good practice with several benefits.

Besides the obvious benefit of owning your data, having access to raw data in a warehouse allows you to do more with data—from transforming and combining event data with data from other sources to enriching the data before analyzing it or activating it.
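As an illustration of combining event data with data from another source, the sketch below joins raw events with CRM account data to build an enriched, per-user model. An in-memory SQLite database stands in for a cloud warehouse such as Snowflake or BigQuery. The table names, columns, and sample rows are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for a cloud warehouse; tables and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE events (user_id TEXT, event_name TEXT, occurred_at TEXT);
    CREATE TABLE crm_accounts (user_id TEXT, plan TEXT, mrr REAL);
""")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("u1", "Report Created", "2024-05-01"),
        ("u1", "Report Created", "2024-05-03"),
        ("u2", "Report Created", "2024-05-02"),
        ("u3", "Logged In", "2024-05-02"),
    ],
)
conn.executemany(
    "INSERT INTO crm_accounts VALUES (?, ?, ?)",
    [("u1", "pro", 99.0), ("u2", "free", 0.0), ("u3", "pro", 99.0)],
)

# Join behavioral data (events) with CRM data; LEFT JOIN keeps accounts with no matching events.
ENRICHED_MODEL = """
    SELECT a.user_id,
           a.plan,
           a.mrr,
           COUNT(e.event_name) AS reports_created
    FROM crm_accounts AS a
    LEFT JOIN events AS e
           ON e.user_id = a.user_id
          AND e.event_name = 'Report Created'
    GROUP BY a.user_id, a.plan, a.mrr
    ORDER BY a.user_id
"""
rows = conn.execute(ENRICHED_MODEL).fetchall()
```

The result pairs each account's plan and revenue with how often that user creates reports. A model like this could then feed analysis or activation.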

Additionally, warehousing your data will enable you to invest in advanced use cases such as predictive models to reduce churn or a recommendation engine to power upsells. It’s easier to leverage your data if it’s housed in your warehouse, rather than locked inside a third-party vendor’s warehouse, where you might have to pay a fee to access historical data.

Even if you don’t have a need for the data today, it’s still worth storing a copy of your data in a data warehouse, especially now that warehouses have become so affordable and can be spun up in a matter of hours without deep technical knowledge.

Once you’re done collecting and storing data, it’s time to analyze and activate the data. If executed well, these two stages of the data pipeline can make a significant difference in your company’s growth trajectory.

Make sure you’re accurately collecting your behavioral customer data with The Amplitude Guide to Behavioral Data & Event Tracking.

Analyzing event data

A product analytics tool is a purpose-built analysis tool meant to analyze event data and understand how users interact with your product.

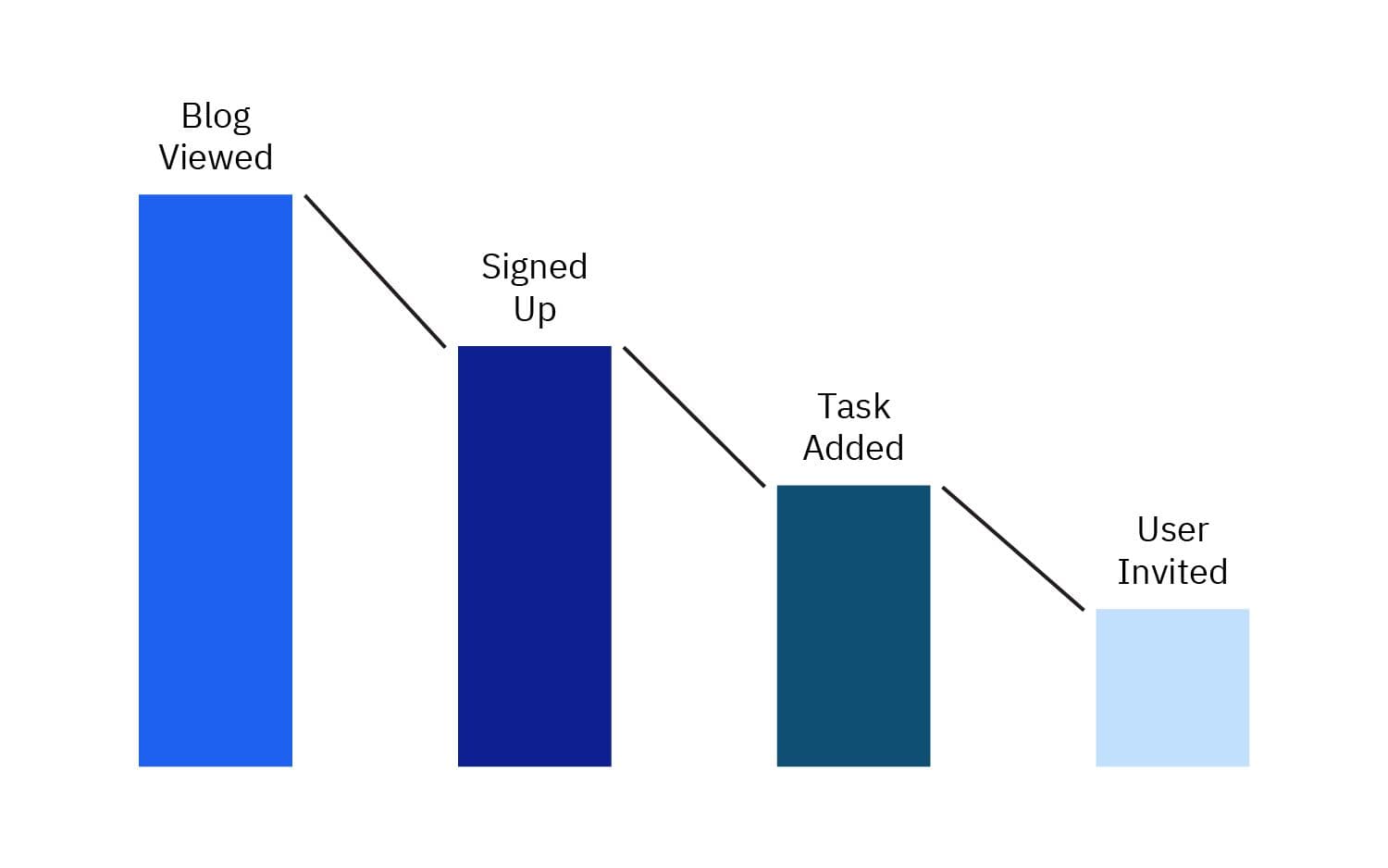

“Customer analytics” and “behavioral analytics” are also used to describe product analytics tools, since these tools let you analyze user behavior by visualizing how users move through the customer journey.

Product analytics tools primarily serve product and growth teams:

- Product teams are able to improve product development by prioritizing features that are used and deprecating the ones that are not

- Growth teams are able to improve onboarding, activation, and retention by visualizing the customer journey and identifying points of friction
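Identifying points of friction usually starts with a funnel: how many users reach each step of a journey, and where they drop off. The sketch below is a simplified Python version of that calculation. It has no conversion window and matches events by name only. The step names and the `funnel_counts` and `step_conversion` functions are hypothetical, and this is not how any particular product analytics tool computes funnels.

```python
from collections import defaultdict
from typing import Iterable


def funnel_counts(
    events: Iterable[tuple[str, str, int]], steps: list[str]
) -> list[int]:
    """
    Count how many users reach each step of an ordered funnel.

    events: (user_id, event_name, timestamp) tuples, in any order.
    steps:  ordered event names that define the funnel.
    A user counts toward step k only after completing steps 0..k-1 in order.
    """
    by_user: dict[str, list[tuple[int, str]]] = defaultdict(list)
    for user_id, name, ts in events:
        by_user[user_id].append((ts, name))

    reached = [0] * len(steps)
    for user_events in by_user.values():
        user_events.sort()  # replay each user's events chronologically
        step = 0
        for _, name in user_events:
            if step < len(steps) and name == steps[step]:
                reached[step] += 1
                step += 1
    return reached


def step_conversion(reached: list[int]) -> list[float]:
    """Share of users at step k who go on to reach step k+1."""
    return [
        (reached[i + 1] / reached[i]) if reached[i] else 0.0
        for i in range(len(reached) - 1)
    ]
```

The step with the lowest conversion rate is a natural place to look for friction, for example between "Signed Up" and "Created Project" in an onboarding flow.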

Analyzing event data as a funnel

The data powering product analytics tools comes in the form of event data from your website and apps—first-party data sources.

You can also send event data from external apps, but be intentional about it; otherwise, your product analytics tool will become bloated with too many events. Excessive event data can quickly cause data quality issues, a leading cause of lack of trust in data.

Different ways of moving data to a product analytics tool

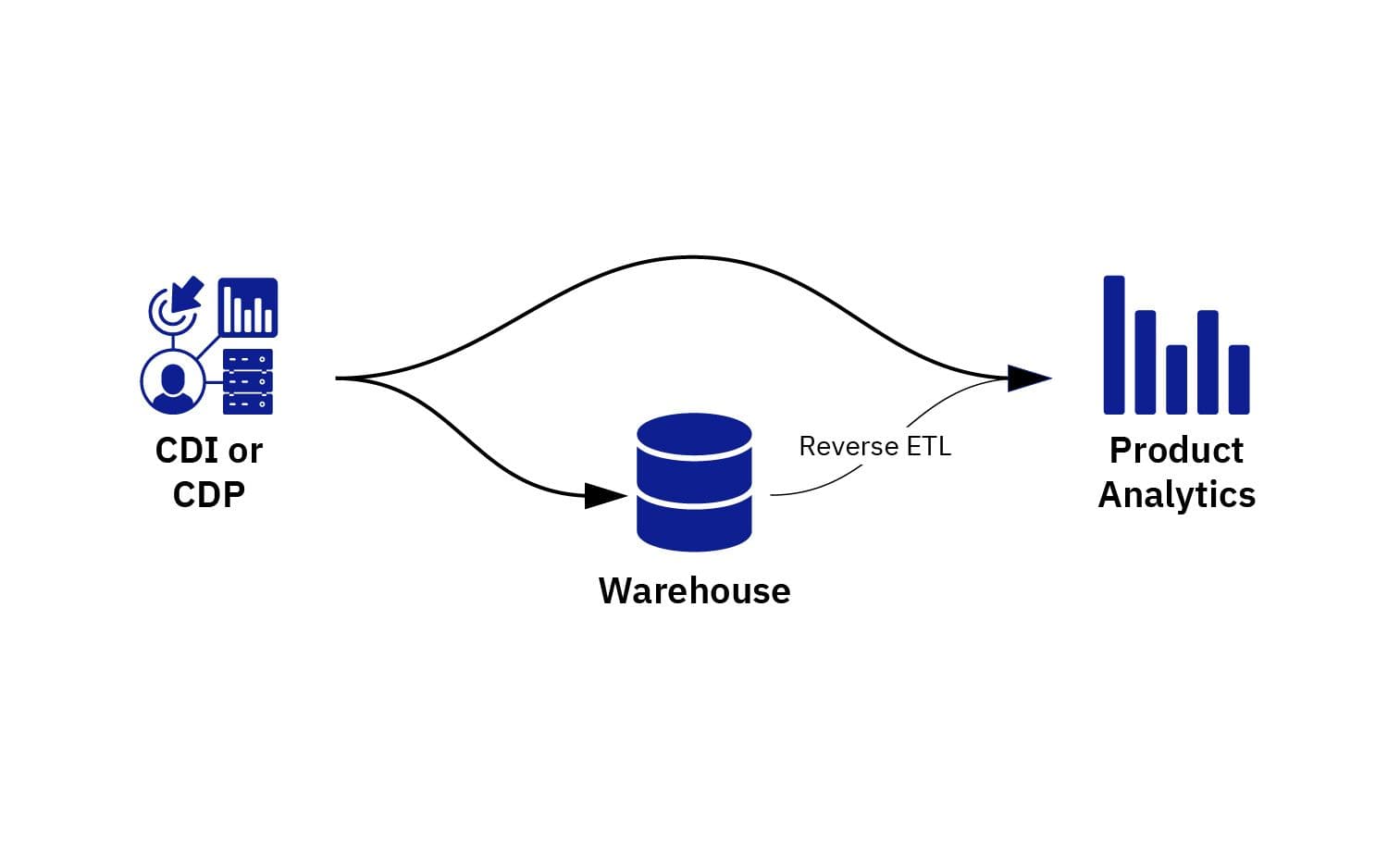

With the rapid adoption of data warehouses, companies are discovering the benefits of making the warehouse the source of data for product analytics tools.

If you’re already storing data in a data warehouse and have dedicated resources to manage the warehouse, then syncing data from the warehouse to your product analytics tool is a good idea. It eliminates the need to maintain a separate data pipeline and allows you to model and enrich data in the warehouse before sending it downstream.

Another noteworthy benefit of this approach is that companies using a BI tool like Looker, Mode, or Preset alongside a product analytics tool avoid data inconsistencies between the two, since the warehouse is the source for both tools.
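The warehouse-to-analytics sync described above is often incremental: each run reads only the rows added since the previous run. Here is a minimal Python sketch of that high-watermark pattern, using SQLite as a stand-in warehouse. The `modeled_events` table, the payload field names, and the `send_batch` callback are hypothetical. A real sync would call the analytics tool's own ingestion API, whose payload format will differ.

```python
import sqlite3
from typing import Callable


def sync_new_events(
    conn: sqlite3.Connection,
    last_synced_at: str,
    send_batch: Callable[[list[dict]], None],
    batch_size: int = 500,
) -> str:
    """
    Push modeled events newer than the stored watermark to a downstream tool.

    Rows are read in timestamp order and sent in batches; the timestamp of the
    last row sent becomes the new watermark, which the caller stores for the next run.
    """
    cursor = conn.execute(
        "SELECT user_id, event_name, occurred_at FROM modeled_events "
        "WHERE occurred_at > ? ORDER BY occurred_at",
        (last_synced_at,),
    )
    watermark = last_synced_at
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        # Hypothetical payload shape; adapt to the destination's actual API.
        send_batch([{"user_id": u, "event_type": e, "time": t} for u, e, t in rows])
        watermark = rows[-1][2]
    return watermark
```

A strict `>` comparison can miss late-arriving rows that share the watermark's timestamp. Production pipelines often add a unique ID or a small overlap window to handle that.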

Activating event data

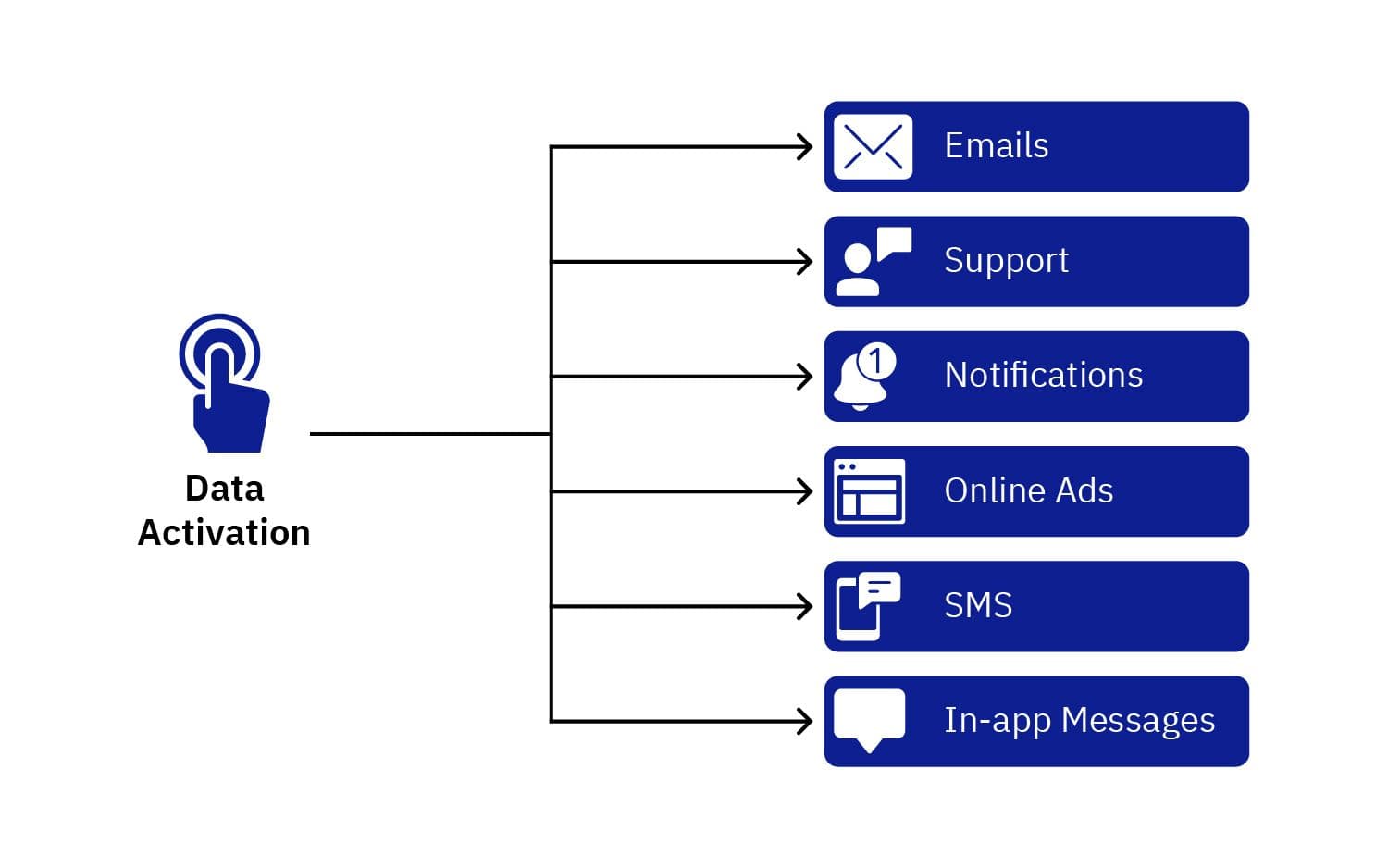

Data activation is the combined process of making data available in the tools used to build data-powered experiences and building those personalized experiences across customer touchpoints.

In other words, data is activated or acted upon when customer interactions are contextual, timely, and relevant as a result of being powered by data.

Data activation

Analyzing data and gathering insights is only valuable if you can act on those insights by activating the data in the tools you use to engage with prospects and customers. Activating data efficiently lets you go beyond dashboards and put data to meaningful use.

Providing superior experiences requires every customer interaction, across every touchpoint, to be powered by behavioral data. That is only possible when the data is available in the downstream tools that power those interactions, from outbound emails and support conversations to ads and in-app experiences.

Without data in those tools, there’s not much you can do besides building linear experiences in which every user receives the same messages, emails, and ads, regardless of the actions they’ve taken inside your product or the interactions they’ve had with your brand.

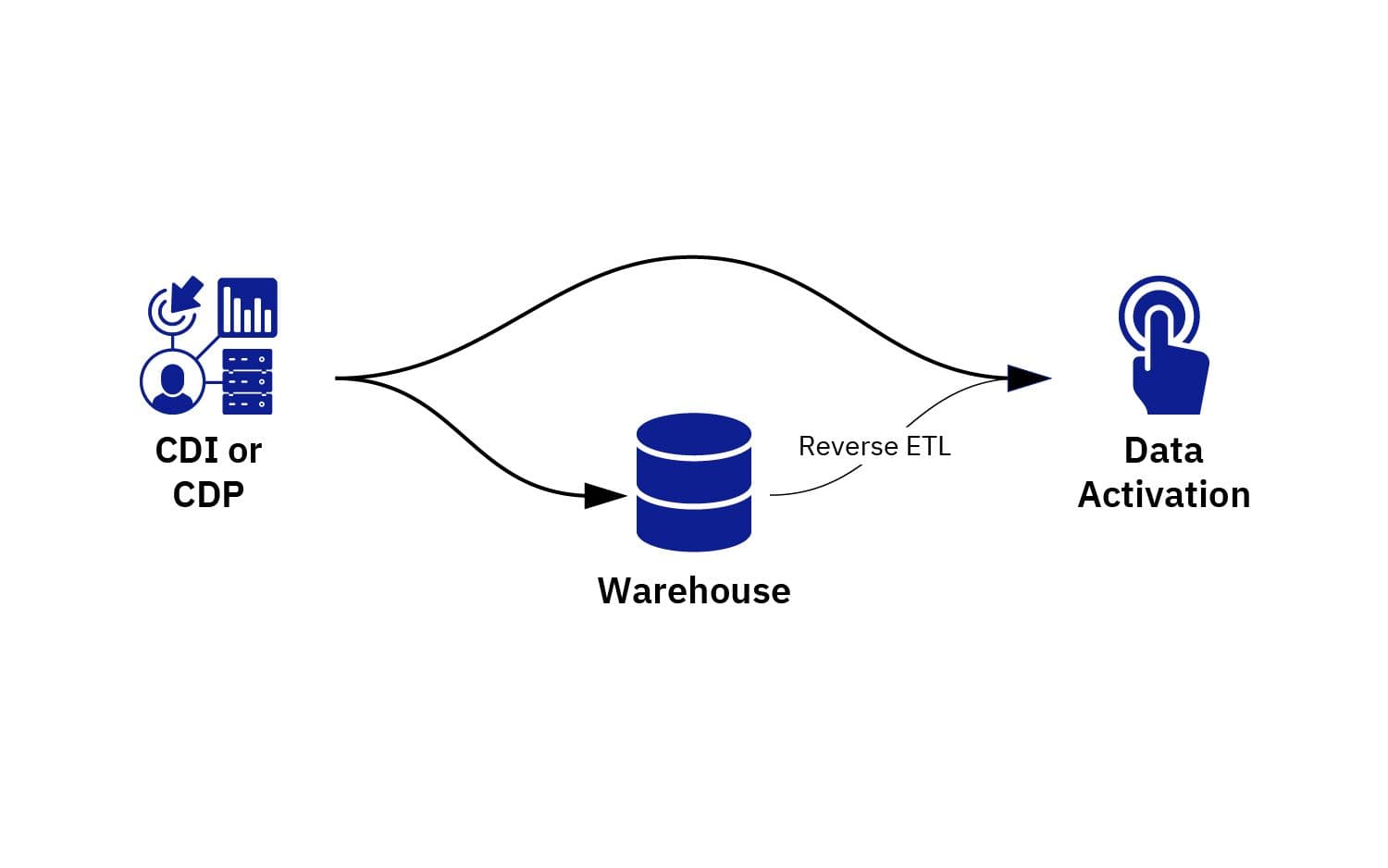

Let’s look at the various technologies available to move behavioral data to downstream tools where the data is eventually activated:

- CDIs to sync raw event data

- CDPs to sync raw data as well as visually modeled data

- Reverse ETL to sync data modeled using SQL in a data warehouse

- Destination integrations by product analytics tools to sync the data already available in product analytics tools

All of the above are means to the same end—enabling teams to build personalized, data-powered experiences in the tools they use in their day-to-day.

CDIs and CDPs were covered above, so let’s jump into the other two technologies you can use to make data available in downstream activation tools.

Different ways to move data to tools where data activation takes place

Reverse ETL

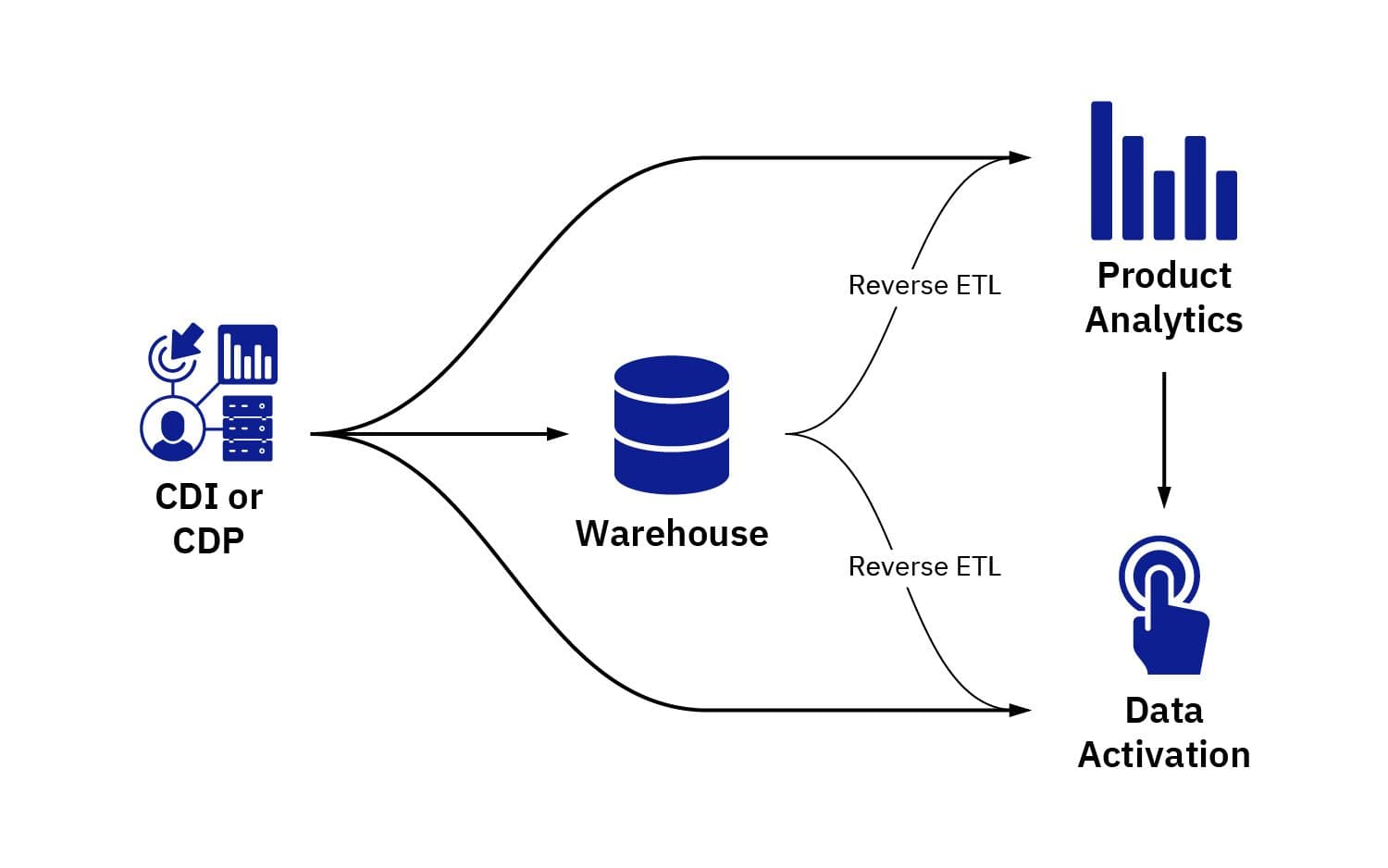

The rapid adoption of cloud data warehouses has given rise to Reverse ETL, a new paradigm in data integration that lets you activate data already in the data warehouse by syncing it to third-party tools. This process and the underlying technology that makes it possible are also referred to as operational analytics.

Companies with dedicated data teams are investing heavily in consolidating customer data from various sources in the data warehouse. Reverse ETL tools like Hightouch, Grouparoo, and Census enable data teams to build data models using SQL on top of data stored in the warehouse (or bring their existing models by integrating tools like dbt) and sync those models to downstream third-party tools.

However, implementing and maintaining a Reverse ETL tool requires an in-house data team or at least a dedicated data engineer, something many companies lack the resources or even the need for.
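One core idea behind Reverse ETL syncs is diffing: compare the latest result of a SQL model with what was synced last time, and send only the changes to the destination. The Python sketch below shows that idea in isolation. It is a generic illustration, not how Hightouch, Grouparoo, or Census work internally. The `upsert` and `delete` callbacks are hypothetical stand-ins for a destination tool's API calls.

```python
from typing import Callable

Row = dict[str, object]


def diff_model(
    previous: dict[str, Row], current: dict[str, Row]
) -> tuple[list[Row], list[str]]:
    """
    Compare the latest model result with the last synced snapshot.

    Both arguments map a primary key (e.g. user_id) to that row's fields.
    Returns (rows to upsert, keys to delete) so only changes go downstream.
    """
    upserts = [row for key, row in current.items() if previous.get(key) != row]
    deletes = [key for key in previous if key not in current]
    return upserts, deletes


def run_sync(
    previous: dict[str, Row],
    current: dict[str, Row],
    upsert: Callable[[list[Row]], None],
    delete: Callable[[list[str]], None],
) -> dict[str, Row]:
    """Send the diff to the destination and return the new snapshot."""
    upserts, deletes = diff_model(previous, current)
    if upserts:
        upsert(upserts)
    if deletes:
        delete(deletes)
    # The current result becomes the baseline for the next run.
    return dict(current)
```

Sending only changed rows keeps syncs fast and reduces the load on the destination's API rate limits. That matters when a model covers millions of users but only a few thousand change per run.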

Destination integrations by product analytics tools

Product analytics tools like Amplitude, Heap, and Mixpanel support a range of tools as data destinations, allowing you to build and sync user cohorts to downstream tools where data is activated. These integrations are evolving constantly with different tools offering varying capabilities.
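A behavioral cohort is essentially a rule evaluated over event data. The Python sketch below computes one such cohort: users who performed one event at least N times in the last D days and never performed another event in that window. A growth team might sync a list like this to an email tool for an upgrade campaign. The event names, the thresholds, and the `build_cohort` function are hypothetical. Amplitude, Heap, and Mixpanel each define and compute cohorts in their own ways.

```python
from datetime import datetime, timedelta
from typing import Iterable


def build_cohort(
    events: Iterable[tuple[str, str, datetime]],
    did: str,
    did_not: str,
    min_count: int,
    days: int,
    now: datetime,
) -> list[str]:
    """
    Return user IDs that performed `did` at least `min_count` times in the
    last `days` days and did not perform `did_not` in that same window.
    """
    since = now - timedelta(days=days)
    counts: dict[str, int] = {}
    excluded: set[str] = set()
    for user_id, name, ts in events:
        if ts < since or ts > now:
            continue  # ignore events outside the lookback window
        if name == did:
            counts[user_id] = counts.get(user_id, 0) + 1
        elif name == did_not:
            excluded.add(user_id)
    return sorted(u for u, c in counts.items() if c >= min_count and u not in excluded)
```

The resulting list of user IDs is what a destination integration would keep in sync with the downstream tool as users enter and leave the cohort.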

The different ways of moving data for analysis and activation purposes

It’s worth mentioning that Amplitude has gone a step further and packaged its ability to sync audiences to third-party tools as Amplitude Recommend. This approach has some notable benefits:

- Instead of building cohorts in a product analytics tool to analyze the data and then building the same audiences in the CDP, CDI, or Reverse ETL tool, it can all be done in one integrated system

- Two-way integrations with engagement tools allow you to analyze your campaign metrics directly inside the product analytics tool, enabling you to measure the true impact of your engagement campaigns

Companies with limited data engineering resources can derive significant value from such integrated solutions that enable product and growth teams to move fast without relying on data teams.

Larger companies can also benefit from this approach, since their data engineering resources are often limited as well and their data teams face a massive backlog of requests from various teams.

Leverage a modern data stack for growth and engagement

Maintaining a modern data stack for growth is not necessarily the responsibility of a growth team, but knowing how data is collected, stored, and moved around makes it much easier for growth professionals to derive insights from data and act upon those insights effectively.

Moreover, while growth and product people use the bulk of the tools in this stack, the benefits of a modern data stack can be felt across an organization, from demand generation and sales development to support and customer success.

Giving teams access to tools that make them more productive also allows them to upskill and do more meaningful work, two factors that help attract and retain the best talent.

Ready to take your data further with a modern data stack for growth? Start by laying your data foundations with The Amplitude Guide to Behavioral Data & Event Tracking.

Arpit Choudhury

Founder, astorik

Arpit is growing databeats (databeats.community), a B2B media company whose mission is to beat the gap between data people and non-data people for good.
