Inside Warehouse-native Amplitude: A Technical Deep Dive

Take a closer look at the new warehouse-native way to implement Amplitude

This blog post was co-authored by Chris Selden.

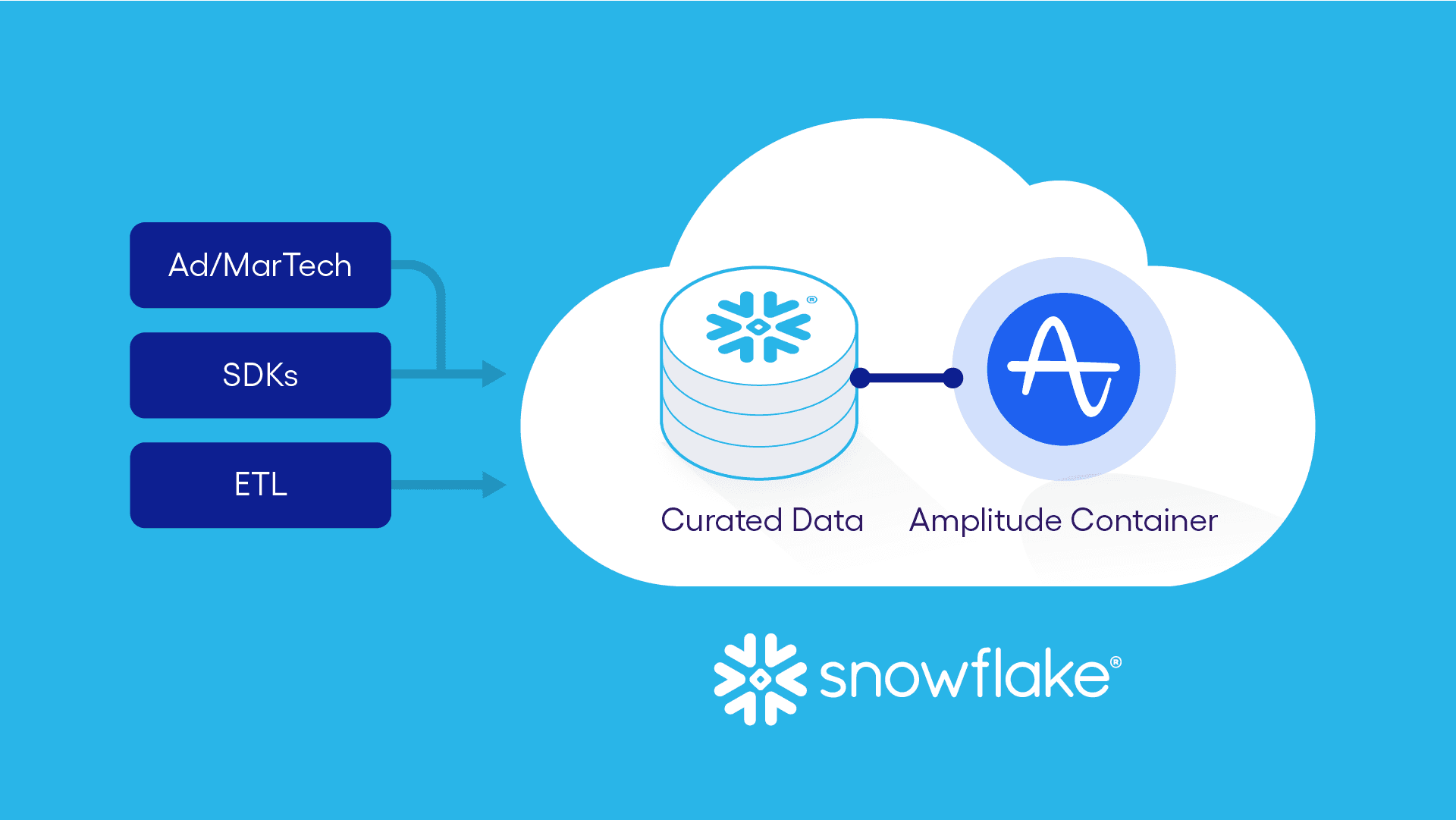

This month we're announcing a new way to implement Amplitude with Warehouse-native Amplitude. Previously, customers needed to pipe their data into the Amplitude platform to analyze it. With Warehouse-native Amplitude, organizations can analyze insights directly in their warehouse to empower users, streamline data management, drive down costs and easily uphold security and compliance policies.

Sign up for the waitlist to indicate your interest in joining our Early Access Program for Warehouse-native Amplitude.

What is Warehouse-native Amplitude?

Prior to our recent product updates, Amplitude was already fully integrated with our customers' data pipelines. We pull data from various sources so our customers can analyze information by making behavioral queries within the Amplitude platform.

With Warehouse-native Amplitude, we’ve made this process even better.

Warehouse-native Amplitude starts from a different angle: It assumes customers have adopted a data warehouse as a central element of their organization’s data strategy. This approach gives organizations the ability to consolidate all their data and insights in one place and ensure they can use the same security and regulation checks they already have in place to better maintain compliance and privacy.

Extract, transform, load (ETL) is a process that combines, cleans, and organizes data from multiple sources into a single data store so it can be analyzed. Warehouse-native employs a zero-copy paradigm, where data doesn't need to be moved, cleaned, or transformed before it is queried. As a result, data access is faster.

Additionally, using the most up-to-date data in the warehouse without needing to import or export means addressing issues with data drift. That helps build trust in the data so teams can use data insights with confidence.

The challenges of building Warehouse-native Amplitude

Transforming our analytics platform into a system that could run natively in our customers' data warehouses required a shift in our development approach.

Translating Amplitude to a Warehouse-native environment

Before Warehouse-native Amplitude, querying in the classic Amplitude platform took place via charts; the analysis wasn't executed in SQL. Our objective was to translate those charts into performant SQL queries so that data could be analyzed directly in our customers’ warehouses.

Each Amplitude chart has a definition with parameters, such as:

- Which events have been selected

- The filters that have been applied to those events (or user/group properties)

- The time range selected

- The query interval

We built a compiler that takes the parameters and the constructs on the chart definition and composes them into a SQL query.

To help with SQL compilation, we use a popular Python library called SQLAlchemy. The library manipulates the chart definitions and converts them into SQL strings that we can run within the data warehouse.

Amplitude customers were a key part of our development process. When deciding which aspects of the classic platform to translate, we reviewed the analyses that are possible today and prioritized the most important elements based on what our customers wanted to leverage first.

We also gave some customers early access and gathered their feedback to confirm if we'd translated the most important analyses from the current platform successfully. That feedback helped us define the answers to questions like:

- What types of analyses are most important?

- Where are the gaps in terms of data support?

- What are we not considering?

Updating our data modeling approach

Each customer has their own way of organizing data in their warehouse. The tables in their warehouse might not match the format of the data we analyze within the Amplitude platform. We had to rethink our data modeling approach to make it easier for customers to leverage the data as it already exists within their data warehouse.

Here are some of the differences in the data formats:

Number of tables

- In the Amplitude platform: Event data is stored in one big table, and we’ve made it very easy and performant to scan the whole thing.

- In customers’ data warehouses: There might be multiple tables that store things like users, facts, or events separately.

Type of schema

- In the Amplitude platform:All data follows the same schema, so we know what columns and properties we store.

- In customers’ data warehouses: We don't automatically know what schema customers are using. We can read some schema information from the tables, but the customer will usually need to tell us the data type of the table and the columns it contains.

How user data is stored over time

- In the Amplitude platform: We keep track of user properties over time and incorporate all relevant user data into one event table.

- In customers’ data warehouses: Customers might not store user data over time, or they may have separate tables for current user data and historical user data.

These differences meant we needed to adjust our data modeling approach to account for the different data types. Within SQL, this meant finding a way to unite all the tables that contained different columns and data types. We didn't only need to adapt from a query perspective; we also had to rethink the platform in terms of the user interface (UI).

Along the way, we realized that most of the different tables in our customers' warehouses corresponded to the different charts within the classic Amplitude platform. We worked to pull apart the individual pieces of the platform to map onto the tables in our customers’ warehouses.

Expressing Queries in SQL

Another challenge we faced while building Warehouse-native Amplitude was dealing with analyses that are difficult to express in SQL, like funnel and retention queries.

The examination of funnels and retention involves tracking events over time, which means they require sequential analysis. On large fact tables, self-joins—or analyzing connections between rows in a table—are often necessary for sequential analysis. However, SQL isn't great at analyzing sequential data and can struggle to efficiently handle queries on large fact tables with self-joins.

The performance of sequential queries can vary wildly depending on the type of algorithm used. Even after we managed to translate analyses to SQL, we had to find a way to optimize the queries so they'd continue to perform as we scaled them with large data sets.

As a result, we adopted a certain mindset: We're processing a data store that is fundamentally different from the original version of Amplitude. We were selective about which types of analyses we tackled and the functionality we would achieve in the short term.

First Up: Snowflake-native Amplitude

The first release of our new warehouse-native application, aptly called Snowflake-native Amplitude, will enable joint Amplitude and Snowflake customers to query Snowflake-housed data directly in Amplitude.

Snowflake-native Amplitude enables customers to access, analyze, and activate the wealth of self-serve behavioral insights from Amplitude without having to move their data out of Snowflake.

Amplitude was the first digital analytics platform to support the import and export of data from Snowflake. And we've been deepening our partnership and data-sharing integration with Snowflake for some time. This is why Snowflake is the first platform we're supporting with this new phase of Amplitude.

Snowflake-native Amplitude is a Snowflake Native App that runs in Snowflake’s newly announced Snowpark Container Services, launching in private preview, which extends Snowflake’s processing engine to provide developers the flexibility to register and deploy containerized software and applications in Snowflake-managed infrastructure. We're excited about the potential of Snowpark Container Services, and we're proud to be a launch partner.

Key features

- There's a new way to implement Amplitude—Snowflake-native—which queries data directly in Snowflake.

- Snowflake-native Amplitude enables customers to easily integrate and activate more data, reducing data drift.

- We translate analyses from the Amplitude platform into SQL queries that we can run on Snowflake.

- Snowflake-native is the next step in the deepening of our relationship with Snowflake.

- Snowflake is the first platform we're supporting with more to come.

- The support from Snowflake's Snowpark Container Services enhances data governance and security.

- Snowpark Container Services also make it incredibly easy for developers to leverage their existing infrastructure (‘lift and shift’).

- Snowflake-native Amplitude enables joint customers to take a hybrid data approach, enabling them to deliver relevant insights to teams in a way that suits their needs best.

How Snowflake-native Amplitude Works

Snowpark Container Services enables organizations to manage and run software and applications directly in their Snowflake account. They act as a drop-in replacement for Kubernetes (a system for managing containerized applications).

Since our Snowflake-native functionality eliminates the need for customers to pass data out of Snowflake into Amplitude, it helps organizations to keep their data more secure. The Snowpark Container Services also unlock many other exciting potential benefits.

Snowflake-native deepens our existing integration with Snowflake

A straightforward development experience

From the Amplitude perspective, the containers gave us a more sophisticated toolset to act as a native application within Snowflake. In general, Snowpark Container Services will enable you to "lift and shift" an application—making it easier to port over to Snowflake with minimal configuration changes.

Many services and applications are already deployed and run within containers, which allows them to work consistently across different environments. This means teams will be able to continue using their existing technology and development patterns as they transition to Snowpark Container Services.

Other Great Benefits of Snowflake-native Amplitude

Consistent data governance and access control

When Amplitude runs in a Snowflake account, the same governance policies and controls an organization sets in Snowflake are applied to all the data. Organizations can immediately leverage pre-defined access controls without any need for migration, enabling them to maintain consistent governance rules and access permissions across their data.

Improved data security and privacy

With Snowpark Container Services, customers' credentials and environment variables can be passed to the container, which means Amplitude doesn't need to look for or store customer credentials. Additionally, data moves directly from Snowflake to the end user's browser without passing through Amplitude's servers. Your data never leaves Snowflake. Both of these elements improve data security for our customers and help minimize privacy concerns.

What's next for Amplitude?

The Snowflake-native Amplitude release marks the first partnership to launch within our Warehouse-native framework. We will optimize and tackle other areas and types of analyses over time.

A hybrid approach to data

Our customers are interested in leveraging Amplitude's value as a behavioral data provider and connecting our platform’s customer journey data to their own data. In a hybrid approach, some data would be kept warehouse-native, and some would be sent to Amplitude—customers would be able to use both sources of data to inform their decisions.

This hybrid approach would enable teams to get the best of both worlds. First, they’d get up-to-date insights straight from their warehouse. Then, if any data needs to be optimized for exploration before analysis, they’d be able to access the data via the Amplitude platform as usual.

Combining both data sources would also open access to previously siloed data, not just making it easier to access data but making even more insights available across any team within an organization. Giving teams access to a wider range of information will help establish more trust in data, as they'll be able to cross reference and validate any insights.

Increased data activation

Another area we're looking into is how to help organizations activate more data. We’re especially interested in helping teams use data that has historically been tough to activate.

Transforming data to fit the requirements of downstream systems can be complex and takes time. We want to make it easier for teams to take cohorts they're using for analyses and use them in third-party destinations, like ad campaigns.

On a technical level, these are capabilities that we have today, but we want to make the activation layer even more useful for our customers. We can leverage the SQL compilation infrastructure we built to convert any of the cohort models in Amplitude into the relevant warehouse equivalents. We'd be able to use the same infrastructure to sync audiences with third-party destinations.

Join the Warehouse-native Amplitude waitlist

We’re excited to bring Warehouse-native Amplitude to the market to help support teams with better access to the insights they need to propel their businesses forward. Stay tuned because we have a lot more coming in this space.

Interested in Warehouse-native Amplitude? Register your interest in our Early Access Program by joining the waitlist.

Curtis Liu

CTO and Co-founder, Amplitude

Curtis is the CTO & co-founder of Amplitude. He developed the core of Amplitude's technology and brings experience from Electronic Arts and Google. He studied EECS at MIT and is two-time winner of Battlecode, MIT's largest programming competition.

More from Curtis