Learn as You Ship and Quantify the Impact of Every Release

Enable product and data teams to scale experimentation, enhance data consistency and trust, and drive ROI with new capabilities for Amplitude Experiment.

The current macroeconomic environment is pushing more product organizations to drive innovation and maximize the return on their experimentation programs. But when their stack is built on disparate tools, the result is inconsistent data, patchy tracking, and the headaches of managing duplicate data sets.

As a result, more and more teams are turning to Amplitude Experiment to remove the biggest blockers to scaling experimentation and learning from every release. Amplitude Experiment:

- Connects customer data with experimentation natively, without relying on kludgy integrations and expensive data pipelines

- Reduces the time analysts spend running statistical analysis

- Resolves user identity across devices and platforms

- Enables organizations to analyze experiments using consistent metrics and tracking, ensuring reliable data and analysis

- Ensures that developers can deliver targeted experiences safely

Today, I am extremely excited to announce new features and capabilities in Amplitude Experiment. Teams can now scale experimentation, enhance data consistency and trust, and drive ROI with one unified platform.

Guiding teams to trusted data

Workflow improvements

Our customers tell us that one of the most challenging aspects of experimentation is following best practices to ensure accurate results. To help teams deliver trusted data to stakeholders, we built Amplitude Experiment around a workflow-based design that ensures every team applies best practices to every experiment.

Experiment customers now get a brand-new creation flow and a better experience for targeting, allocation, and rollouts, for both experiments and feature flags, making it faster and easier to run more tests and reach decisions. Customers can also designate the experiment type: a traditional hypothesis test to maximize conversion, or a “Do No Harm” test to confirm that a new experience has no detrimental effect on key metrics.

In the planning phase, customers also have access to a duration estimator and a revamped sample size calculator that estimate how long each experiment needs to run to reach statistical significance, making planning much more straightforward.
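The arithmetic behind this kind of planning can be sketched with the textbook normal-approximation formula for comparing two conversion rates. This is a minimal illustration assuming a two-sided test, equal-sized variants, an absolute minimum detectable effect, and steady daily traffic; it is not Amplitude's calculator, and the function names and the `daily_eligible_users` input are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, min_detectable_effect: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each variant to detect an absolute lift in a conversion rate.

    Hypothetical helper using the standard two-proportion normal approximation.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_effect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)            # quantile for the desired power
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance_sum / min_detectable_effect ** 2
    return math.ceil(n)


def estimated_duration_days(n_per_variant: int, num_variants: int,
                            daily_eligible_users: int) -> int:
    """Days until every variant reaches its sample size, assuming steady traffic."""
    return math.ceil(n_per_variant * num_variants / daily_eligible_users)


if __name__ == "__main__":
    n = sample_size_per_variant(baseline_rate=0.10, min_detectable_effect=0.01)
    days = estimated_duration_days(n, num_variants=2, daily_eligible_users=2000)
    print(f"{n} users per variant, about {days} days")
```

With a 10% baseline and a one-point lift this works out to roughly 14,700 users per variant. Halving the detectable effect roughly quadruples the required sample, which is why duration estimates are so sensitive to the effect size a team chooses.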

New bucketing capabilities

In this launch, we have also added new approaches to bucketing so the right experiences reach the right customers. With group-level bucketing, a new beta feature, B2B customers with the Accounts add-on for Amplitude Analytics can target specific accounts in their experiments. Instead of being limited to targeting individual users, customers can group and target experiments as they need to, giving them more flexibility to set up tests that align with their business needs.
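Conceptually, group-level bucketing changes which identifier gets hashed. The sketch below is a hypothetical illustration, not Amplitude's bucketing algorithm: it assumes a SHA-256 hash of a salt plus the bucketing key, and a user record with a made-up `groups` field. Hashing the account ID instead of the user ID puts every user in the same account into the same variant.

```python
import hashlib
from typing import Dict, List, Optional


def bucket(bucketing_key: str, salt: str, num_buckets: int = 100) -> int:
    """Deterministically map a key to a bucket in [0, num_buckets)."""
    digest = hashlib.sha256(f"{salt}/{bucketing_key}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % num_buckets


def assign_variant(user: Dict, experiment: str, variants: List[str],
                   group_type: Optional[str] = None) -> str:
    """Pick a variant, bucketing by group (e.g. account) when group_type is set.

    Hypothetical helper: `user["groups"]` is an assumed field, not a real SDK type.
    """
    if group_type is not None:
        key = user["groups"][group_type]  # every user in the account shares this key
    else:
        key = user["user_id"]             # classic user-level bucketing
    b = bucket(key, salt=experiment)
    # Split the 100 buckets evenly across the variants
    return variants[b * len(variants) // 100]


alice = {"user_id": "alice", "groups": {"account": "acme"}}
bob = {"user_id": "bob", "groups": {"account": "acme"}}
variants = ["control", "treatment"]
print(assign_variant(alice, "new-onboarding", variants, group_type="account"))
print(assign_variant(bob, "new-onboarding", variants, group_type="account"))
```

Both calls print the same variant because Alice and Bob belong to the same account; without `group_type`, they would be bucketed independently by user ID.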

Guiding teams to clearer insights

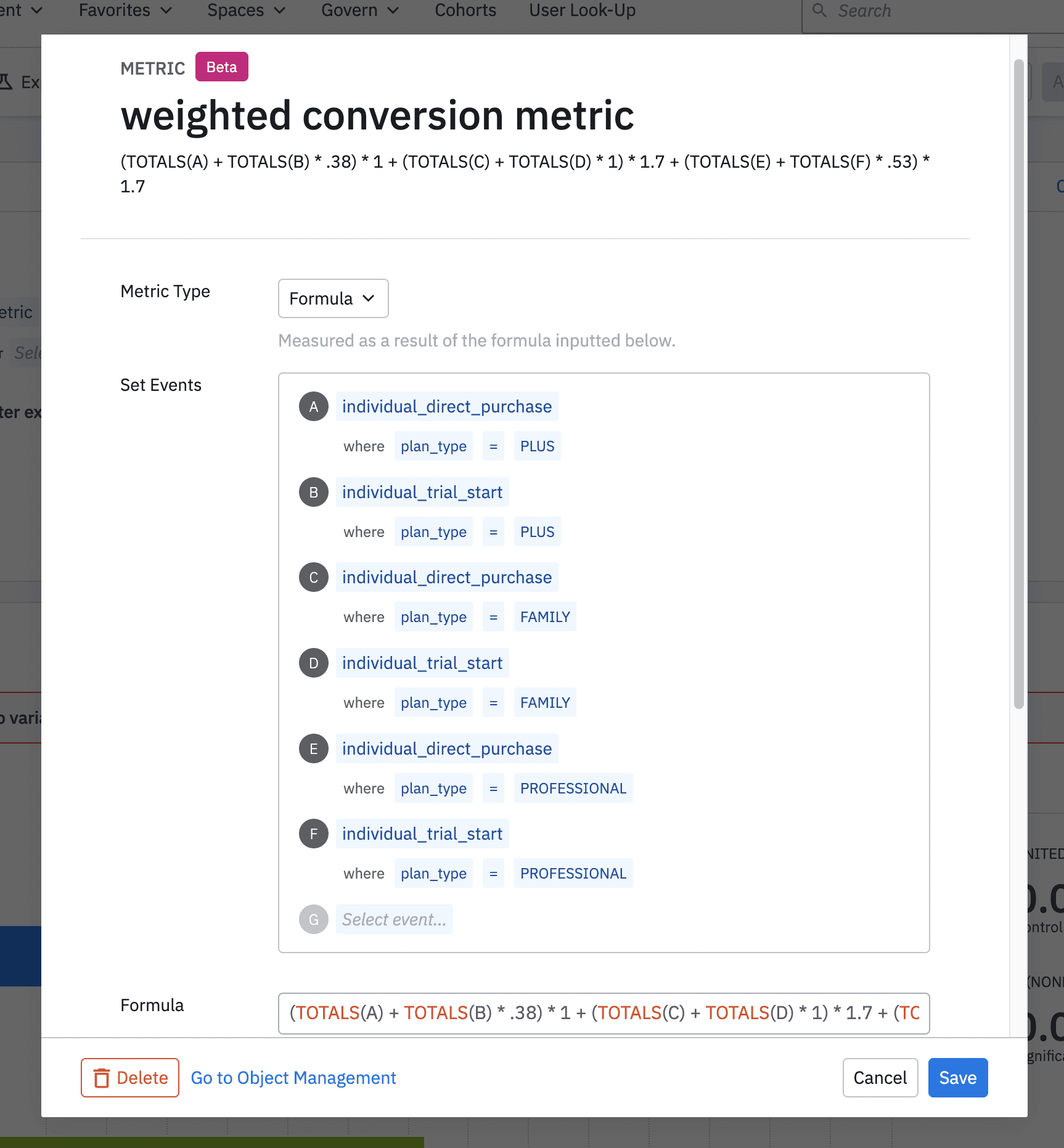

One of the primary advantages of having your experimentation solution natively integrated with your customer analytics is the ability to harmonize metric definitions. With formula metrics, customers can now combine metrics using division, multiplication, addition, and subtraction to build formula-based metrics that cover a wider range of use cases, including weighted averages. This gives your experiments more flexibility and opens up new use cases for every customer.
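To make the arithmetic concrete, here is a small sketch of two formula-style metrics computed from per-variant totals. The metric names and the `VariantTotals` record are hypothetical, and this is not Amplitude's formula syntax; it only shows how base metrics combine through the four operations, including a weighted average.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class VariantTotals:
    """Hypothetical base-metric totals for one variant."""
    revenue: float
    refunds: float
    purchases: int


def net_revenue_per_purchase(t: VariantTotals) -> float:
    """Formula metric: (revenue - refunds) / purchases."""
    if t.purchases == 0:
        return 0.0
    return (t.revenue - t.refunds) / t.purchases


def weighted_average(values: Sequence[float], weights: Sequence[float]) -> float:
    """Formula metric: sum(value * weight) / sum(weight)."""
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


treatment = VariantTotals(revenue=1000.0, refunds=100.0, purchases=30)
print(net_revenue_per_purchase(treatment))      # 30.0
print(weighted_average([4.0, 5.0], [1.0, 3.0]))  # 4.75
```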

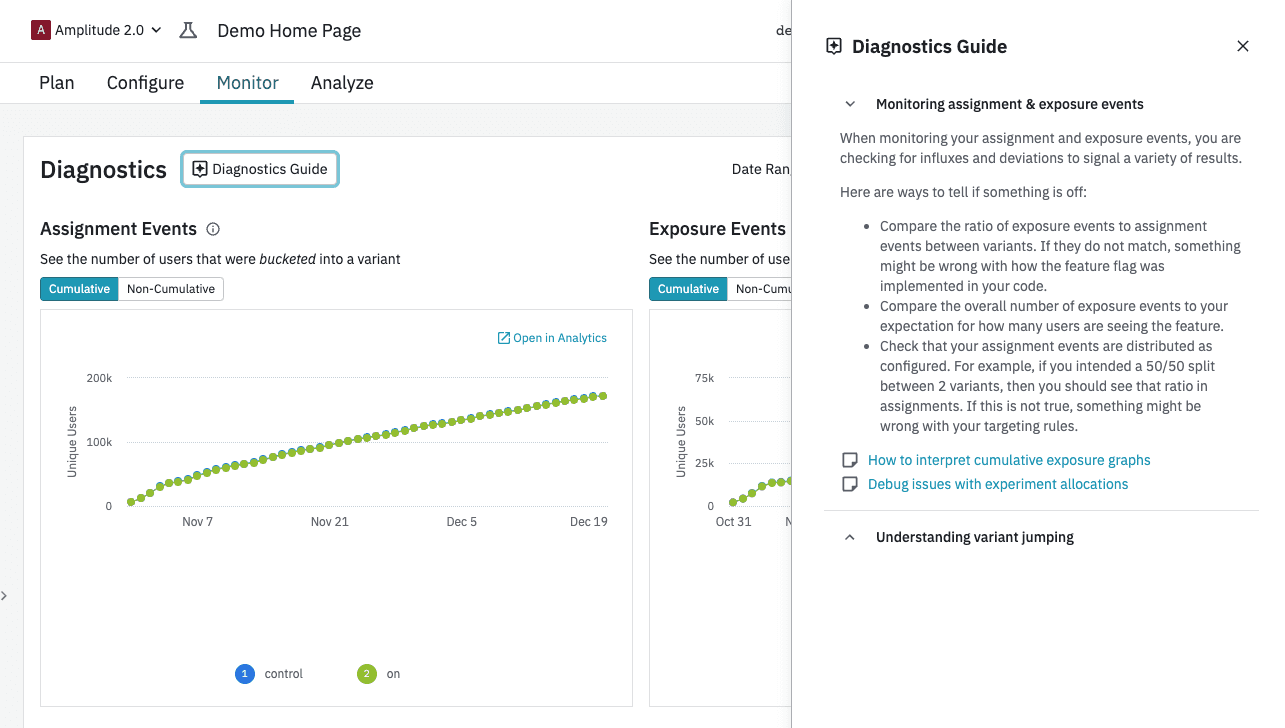

One challenge for many teams that aspire to run experimentation at scale is limited experience with A/B testing. With new guided learning capabilities in Experiment, teams get easy access to relevant help articles and contextual guidance so they can make the right decisions.

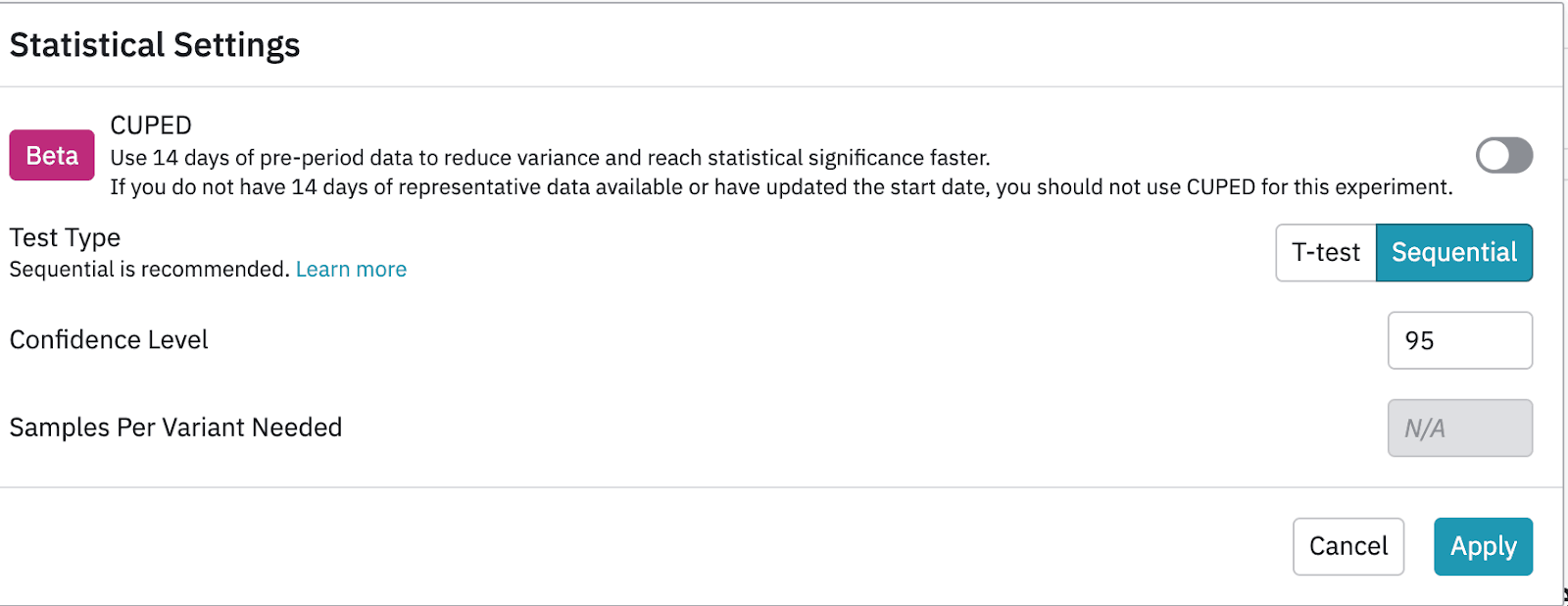

Finally, we added CUPED (Controlled-experiment Using Pre-Experiment Data), an advanced statistical technique that uses pre-experiment data to reduce variance. This helps teams reach reliable results and clear insights much faster. Check out our recent blog post to learn more about this powerful new capability.
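CUPED adjusts each user's in-experiment metric Y using a pre-experiment covariate X (often the same metric measured before the experiment started): Y_adj = Y - theta * (X - mean(X)), where theta = cov(X, Y) / var(X). The minimal sketch below assumes one numeric covariate per user and illustrates the published technique; it is not Amplitude's implementation, and `cuped_adjust` is a hypothetical name.

```python
from statistics import mean, variance
from typing import List, Sequence


def cuped_adjust(post: Sequence[float], pre: Sequence[float]) -> List[float]:
    """Return CUPED-adjusted values of `post` using the covariate `pre`.

    theta is estimated from the data passed in; in a real analysis it is
    usually estimated on all variants pooled, then applied to each variant.
    """
    if len(post) != len(pre) or len(pre) < 2:
        raise ValueError("need two equal-length sequences with at least 2 users")
    mean_pre = mean(pre)
    mean_post = mean(post)
    var_pre = variance(pre)
    if var_pre == 0:
        return list(post)  # a constant covariate carries no information
    # Sample covariance, with the same n - 1 denominator as statistics.variance
    cov = sum((x - mean_pre) * (y - mean_post) for x, y in zip(pre, post)) / (len(pre) - 1)
    theta = cov / var_pre
    # Subtract the part of each outcome that pre-experiment behavior predicts;
    # the mean is unchanged because the (x - mean_pre) terms sum to zero.
    return [y - theta * (x - mean_pre) for x, y in zip(pre, post)]


pre = [10.0, 12.0, 9.0, 15.0, 11.0, 14.0, 8.0, 13.0]
post = [11.0, 14.0, 10.0, 17.0, 12.0, 15.0, 9.0, 15.0]
adjusted = cuped_adjust(post, pre)
print(variance(post), variance(adjusted))  # the adjusted variance is smaller
```

Because the adjustment keeps the mean but shrinks the variance, the difference between variants is estimated with tighter confidence intervals, which is where the faster time to results comes from.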

Guiding teams to faster action

Teams invest in experimentation to innovate faster, but they often need guidance to understand what to do next. With this set of investments, Experiment customers benefit from significant improvements to cross-functional experiment workflows, experiment lifecycle management, and automatic error detection using machine learning.

Program management improvements

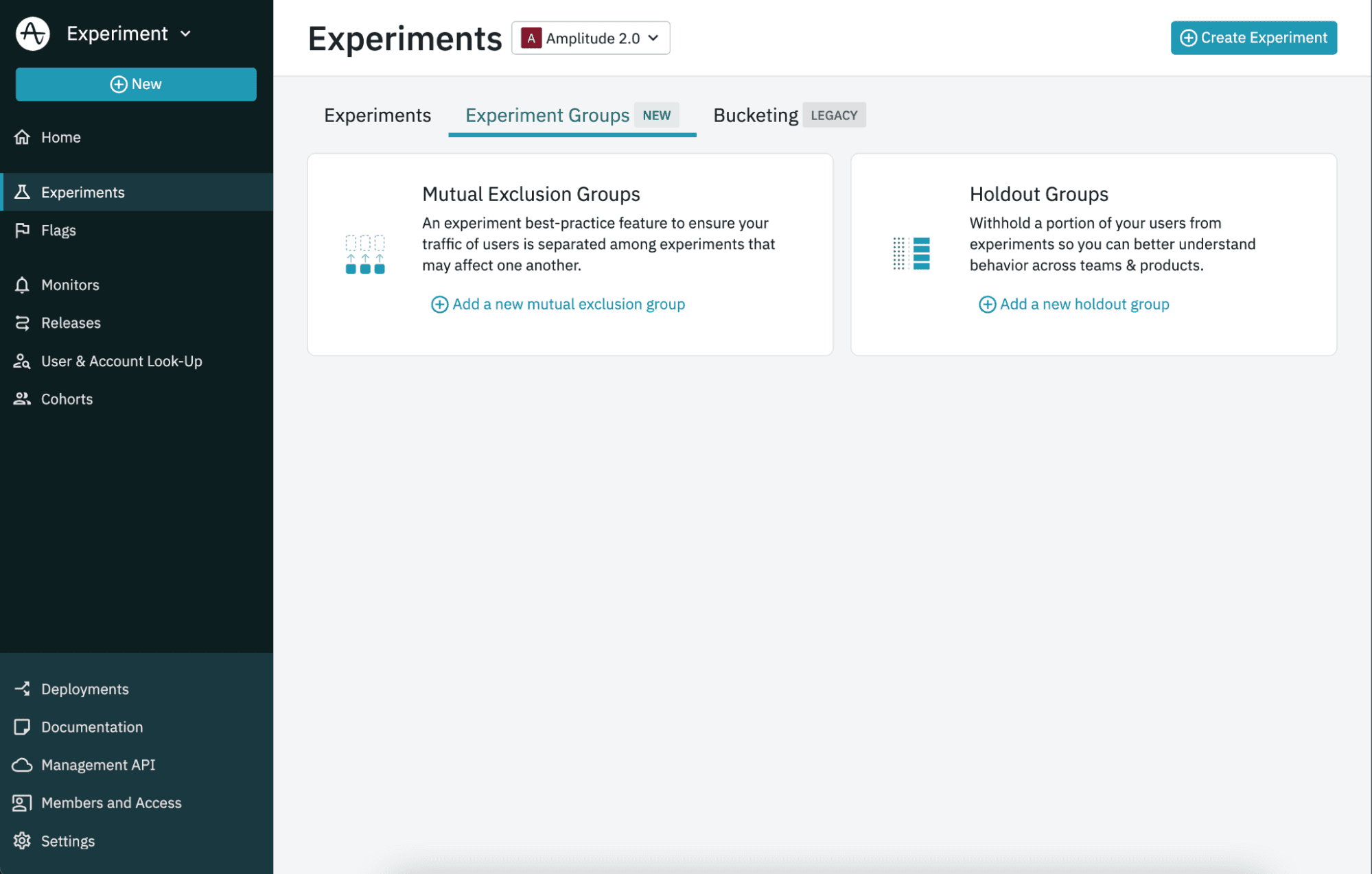

With our latest launch, we have made significant investments in program management capabilities. With new holdout groups, customers can ensure that a subset of users is never targeted with experiment variants, giving teams that run multiple experiments at the same time more ways to establish baselines.

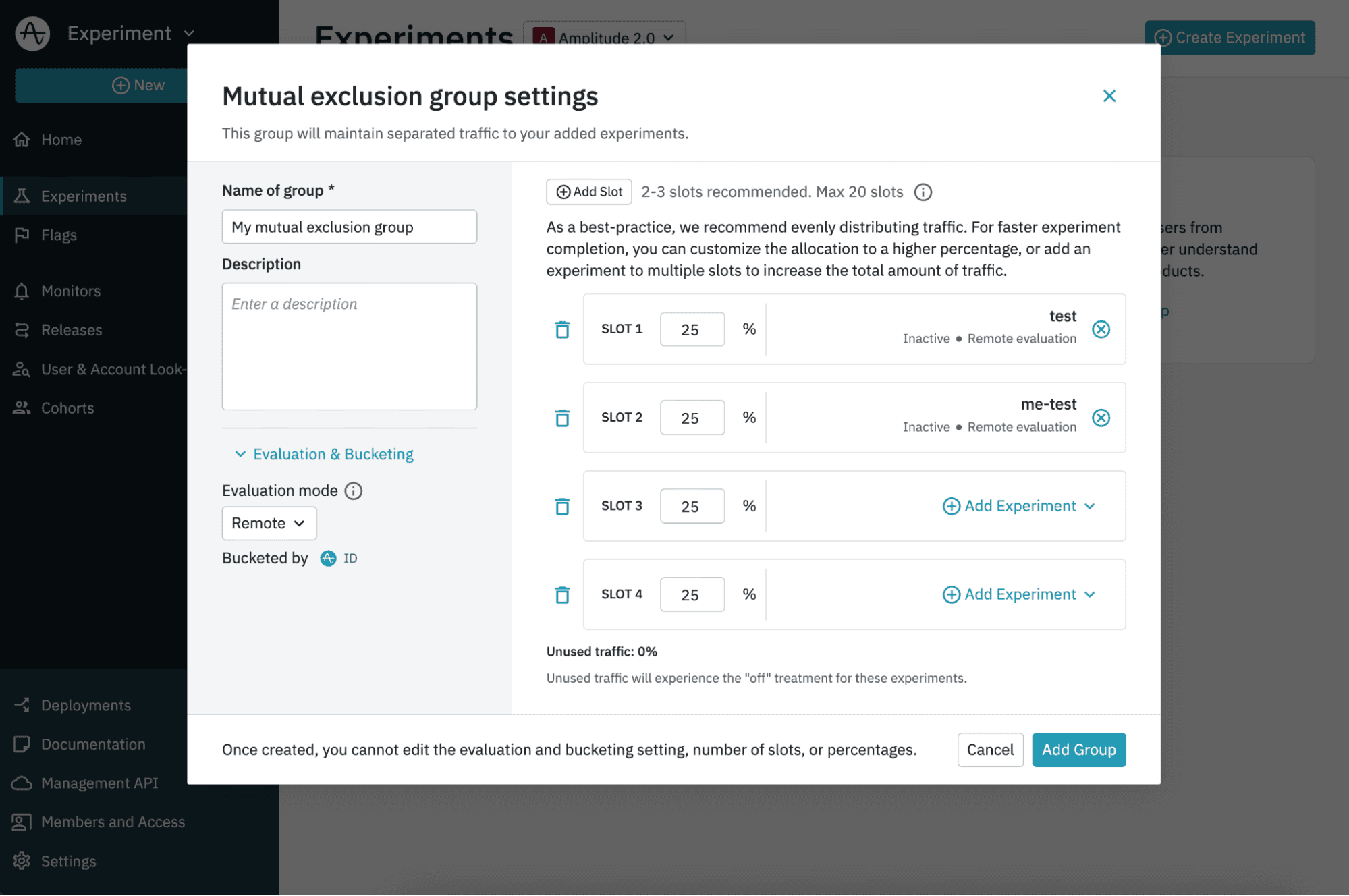

We also improved the functionality and experience for building mutual exclusion groups, which ensure that your users are not exposed to colliding experiments that could degrade their experience.
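One common way to implement both holdouts and mutual exclusion is to hash each user into a fixed set of slots, reserve some slots as a holdout, and give each experiment in an exclusion group its own non-overlapping range of slots. The sketch below is a hypothetical illustration of that approach, not Amplitude's implementation; the slot count, salt, and percentages are assumptions.

```python
import hashlib
from typing import Dict, Optional

NUM_SLOTS = 100  # assumed slot count


def slot(user_id: str, group_salt: str) -> int:
    """Deterministically map a user to one of NUM_SLOTS slots."""
    digest = hashlib.sha256(f"{group_salt}/{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % NUM_SLOTS


def experiment_for_user(user_id: str, group_salt: str, holdout_pct: int,
                        allocations: Dict[str, int]) -> Optional[str]:
    """Return the one experiment a user may enter, or None.

    Slots [0, holdout_pct) form the holdout; the remaining slots are split into
    non-overlapping ranges, so a user can never land in two experiments.
    """
    if holdout_pct + sum(allocations.values()) > NUM_SLOTS:
        raise ValueError("holdout and allocations exceed the available slots")
    s = slot(user_id, group_salt)
    if s < holdout_pct:
        return None  # held out: never targeted with any experiment variant
    start = holdout_pct
    for name, pct in allocations.items():
        if start <= s < start + pct:
            return name
        start += pct
    return None  # slot not allocated to any experiment
```

For example, `experiment_for_user("user-42", "checkout-group", 10, {"new-cart": 45, "express-pay": 45})` keeps about 10% of users out of both experiments and sends everyone else to exactly one of them. Variant assignment within each experiment would then happen separately.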

Lifecycle improvements

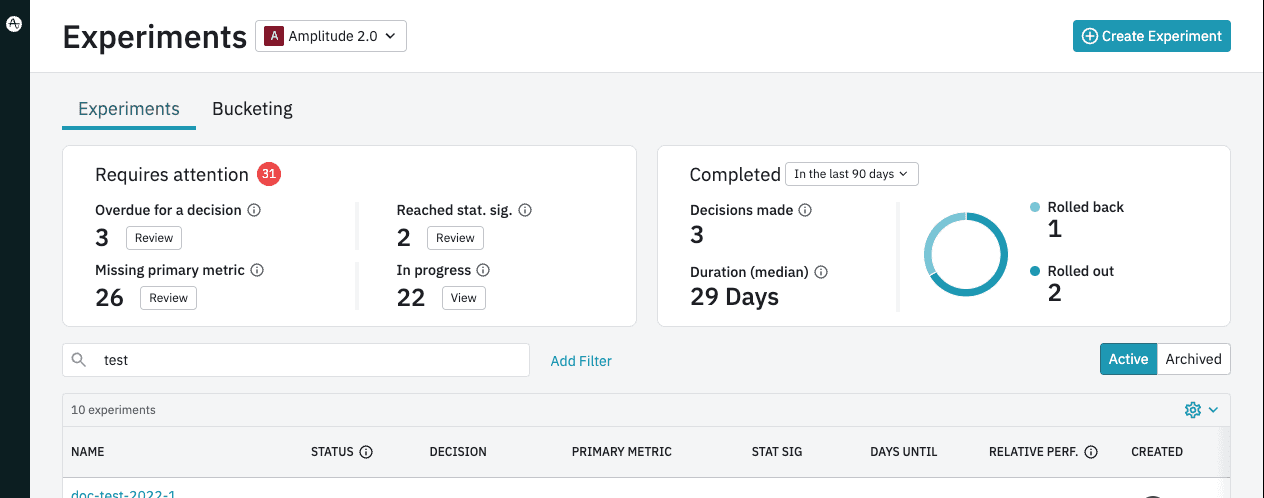

To build a culture of learning as they ship new releases, product organizations need to manage a large volume of experiments and track every experiment throughout its lifecycle. We added new capabilities to the homepage, including summary cards that highlight which experiments need a decision and how many experiments were completed recently. Together with improved filtering, these updates give your team an at-a-glance view of every experiment, and new notifications alert your team automatically when an experiment reaches its end date or statistical significance.

Customers can now also archive, restore, and complete experiments as needed, so product organizations can keep moving forward and innovate faster.

Automated notifications and guardrails

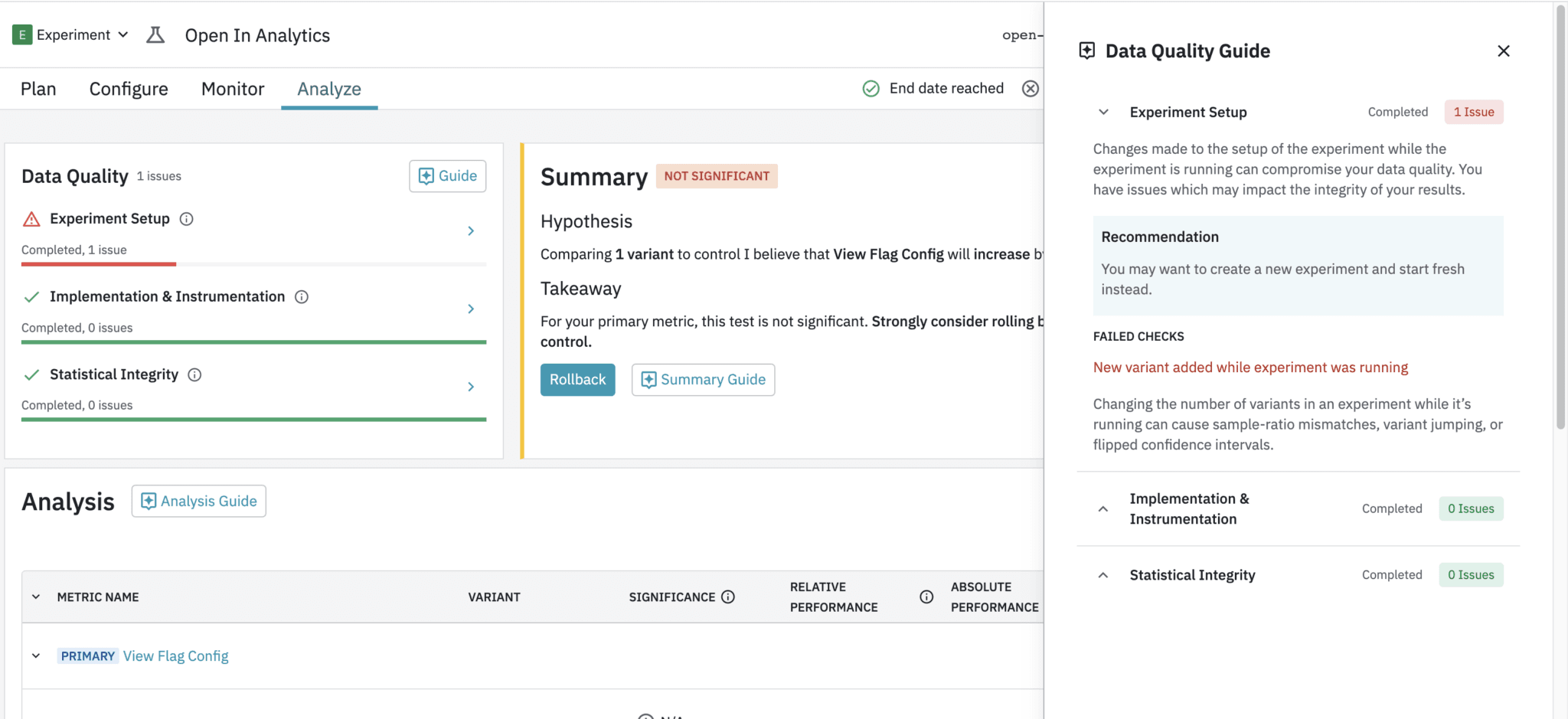

Customers also need guidance to remediate data issues proactively, so they can resolve problems faster and keep their programs running smoothly. With the new data quality checklist, customers are automatically notified when:

- An experiment may be impacted by a sample ratio mismatch (SRM)

- Exposure events are occurring without assignment events

- Variant jumping is detected

- Traffic is decreasing

- Suspicious uplift is detected

- Variance, standard error, or confidence intervals are abnormal
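An SRM check typically compares observed assignment counts with the configured split using a chi-square goodness-of-fit test. The sketch below covers the two-variant case only and assumes a p-value threshold of 0.001, a common convention for SRM alerts; the function name and threshold are hypothetical, not Amplitude's.

```python
import math
from typing import Tuple


def srm_check(control: int, treatment: int, expected_control_share: float = 0.5,
              threshold: float = 0.001) -> Tuple[float, bool]:
    """Chi-square goodness-of-fit test for a two-variant split.

    Returns (p_value, srm_detected).
    """
    total = control + treatment
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control
    chi2 = ((control - expected_control) ** 2 / expected_control
            + (treatment - expected_treatment) ** 2 / expected_treatment)
    # With two variants the statistic has 1 degree of freedom, whose
    # survival function is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return p_value, p_value < threshold
```

On a planned 50/50 test, a 5,000 vs. 5,400 split is flagged, while 5,000 vs. 5,050 is not: the imbalance has to be larger than chance alone would plausibly produce before it points to a problem in assignment or tracking.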

Building better experiences for developers

These enhancements are game-changers for Amplitude Experiment, but that’s not all. We have also made considerable investments in the developer experience, including:

- Cohort support for local evaluation, without sacrificing performance

- New CRUD functionality for our Management API

- New SDKs for Go, Python, Ruby, React Native, and more

- The ability to automatically track assignments for local evaluation, setting user properties without tracking an exposure

To learn more about why we are continuing to double down on developer experiences, check out the latest blog post from Larry Xu, Director of Engineering for Amplitude Experiment. Want to learn more about Amplitude Experiment? Request a demo to see all of the latest features and capabilities in action.

Wil Pong

Former Head of Product, Experiment, Amplitude

Wil Pong is the former head of product for Amplitude Experiment. Previously, he was the director of product for the Box Developer Platform and product lead for the LinkedIn Talent Hub.
