Announcing Multi-Armed Bandits: Now Available in Amplitude Experiment

With new ways to test and learn, marketing and product teams have even more flexibility to optimize digital experiences.

In the past 18 months, Amplitude Experiment has made massive progress in helping unite product, engineering, and data teams to optimize experiences and learn from every release. Some of our team’s highlights include new guided experimentation workflows, CUPED to help experiments with less traffic succeed, and unlimited feature flags for our Plus plan customers to build better products.

Today, I am thrilled to announce we have added multi-armed bandit experiments (MABs) to Amplitude Experiment. This new experiment technique provides marketing and product teams more flexibility to optimize experiences and algorithms in new ways.

What are multi-armed bandits?

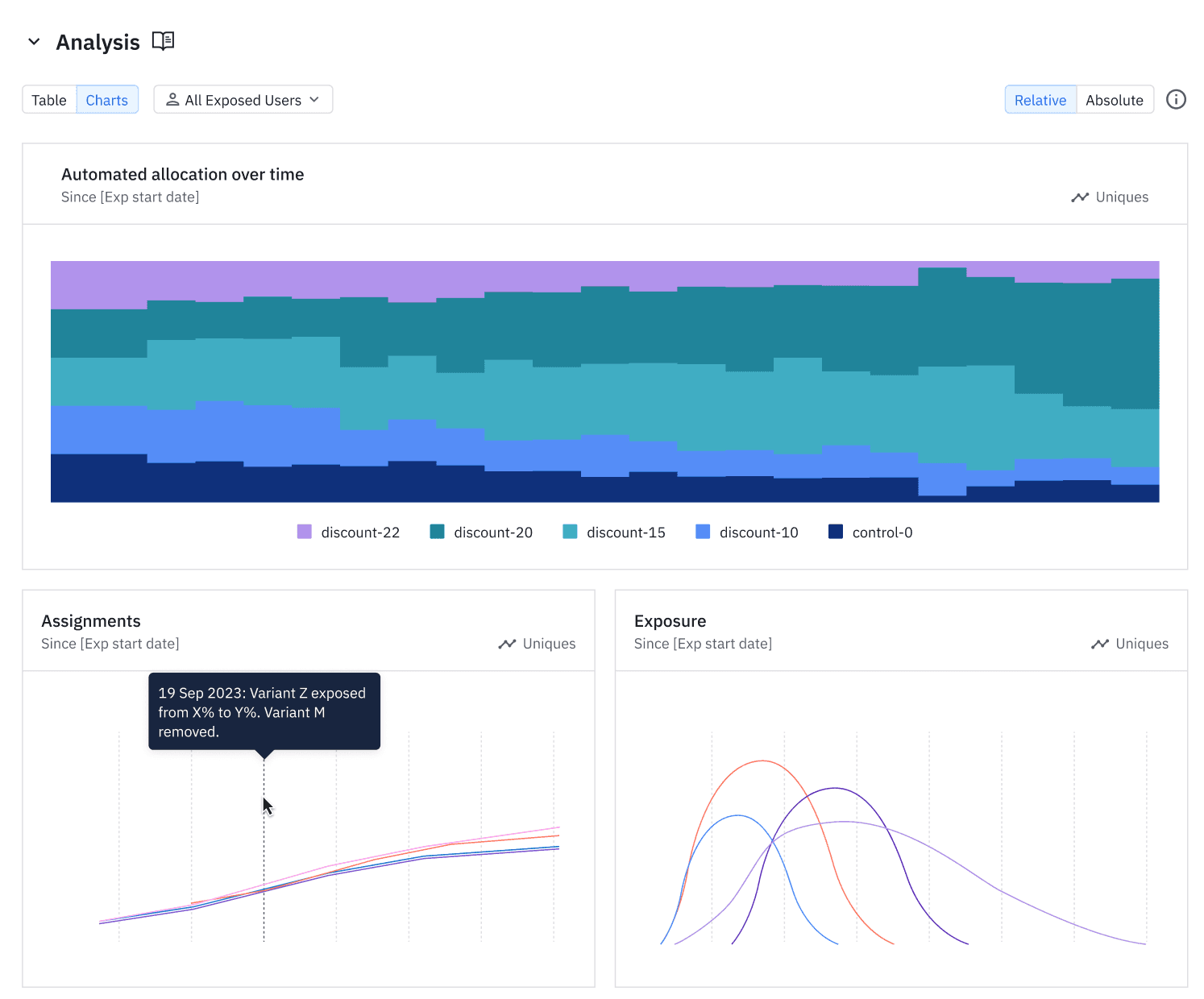

Multi-armed bandits sit at the intersection of testing and personalization, and use the power of behavioral data and real-time stats to understand which variant is winning in a particular test.

As the system learns, it automatically routes more traffic to the variant that is currently winning. This lets your business capture more conversions while the test is still running, which can lift business outcomes such as sales revenue or sign-ups.

With multi-armed bandit experiments, teams rely on Amplitude’s algorithms to shift traffic toward winning variants while the test runs. This makes it easier to make the most of an opportunity when you have only one chance to win.
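To make the traffic-routing idea concrete, here is a minimal sketch of one common bandit strategy, Thompson sampling, run against simulated visitors. This post does not say which algorithm Amplitude Experiment uses, so treat the technique, the function name `thompson_sampling`, and the conversion rates as assumptions chosen purely for illustration.

```python
import random


def thompson_sampling(true_rates, visitors, seed=0):
    """Simulate a bandit test and return (pulls, conversions) per variant.

    true_rates are hypothetical conversion rates; a real test never sees
    them and only observes whether each visitor converted.
    """
    rng = random.Random(seed)
    n = len(true_rates)
    successes = [0] * n  # conversions observed per variant
    failures = [0] * n   # non-conversions observed per variant
    pulls = [0] * n      # visitors routed to each variant

    for _ in range(visitors):
        # Draw one plausible conversion rate per variant from its Beta
        # posterior (a uniform Beta(1, 1) prior plus observed outcomes).
        samples = [
            rng.betavariate(successes[i] + 1, failures[i] + 1)
            for i in range(n)
        ]
        # Route this visitor to the variant with the highest draw.
        chosen = samples.index(max(samples))
        pulls[chosen] += 1

        # Simulate whether the visitor converts, then record the outcome.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1

    return pulls, successes


# Two made-up variants: A converts at 5%, B at 15%.
pulls, conversions = thompson_sampling([0.05, 0.15], visitors=5000)
print("visitors per variant:", pulls)
print("conversions per variant:", conversions)
```

Early on, both variants receive traffic because the system is still uncertain. As evidence accumulates, the better variant wins most of the draws and therefore receives most of the visitors.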

Key benefits of multi-armed bandits

Multi-armed bandits offer several benefits that make them a strong choice for your experiment:

- Real-time optimization: With multi-armed bandits, the algorithm adapts in real time to changes in user behavior, market dynamics, or other external factors.

- Higher efficiency for conversion rate optimization (CRO): By dynamically allocating traffic based on performance feedback, multi-armed bandits reduce the need for manual intervention and guesswork. MABs help businesses make efficient use of their traffic, particularly for CRO use cases like landing pages or messaging.

- Faster results: Traditional A/B testing can be time-consuming, requiring large sample sizes and extended testing periods. Multi-armed bandits, on the other hand, can deliver business results sooner by prioritizing the most promising options early on, accelerating the optimization process.

- Better return on investment (ROI) for marketers: By continually optimizing toward the best-performing variants, multi-armed bandits help marketers achieve higher ROI across their campaigns and web experiences.

When should I use multi-armed bandits?

Multi-armed bandits are not ideal for every use case, but they are a powerful tool when used correctly. Because multi-armed bandits quickly shift traffic toward the most effective variant, they are particularly useful for time-sensitive scenarios.

Let’s assume your growth team wants to run a promotion leading up to Black Friday. If the team ran a classic A/B test well before the promotion and implemented changes based on what it learned, customers might not be thinking about Black Friday yet, which would yield ambiguous results. Customer behavior would be more representative if the team ran the test immediately before Black Friday, but that would not leave enough time to act on the results and would lead to missed conversions.

A MAB fits this short Black Friday window: the test adjusts traffic in real time toward the most effective variant, so conversions are captured while the promotion is still live. This approach also shows which variants resonate with customers during time-sensitive periods, balancing immediate results with learning opportunities.
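A back-of-the-envelope calculation shows why shifting traffic matters in a short window. The sketch below compares expected conversions for a fixed 50/50 split with a split that averages 90% of traffic on the stronger variant. The helper `expected_conversions`, the visitor count, the conversion rates, and the 90/10 average are all hypothetical numbers for illustration, not figures from Amplitude.

```python
def expected_conversions(visitors, rates, allocation):
    """Expected conversions when `allocation` shares of traffic go to
    variants with the given conversion `rates`."""
    if len(rates) != len(allocation):
        raise ValueError("rates and allocation must have the same length")
    if abs(sum(allocation) - 1.0) > 1e-9:
        raise ValueError("allocation shares must sum to 1")
    return visitors * sum(
        share * rate for share, rate in zip(allocation, rates)
    )


# Hypothetical promotion: 10,000 visitors, variant A converts at 5%
# and variant B at 15%.
rates = [0.05, 0.15]

# Classic A/B test: traffic stays split evenly for the whole window.
fixed = expected_conversions(10_000, rates, [0.5, 0.5])

# Bandit-style test: assume that, averaged over the window, 90% of
# traffic ended up on the stronger variant.
shifted = expected_conversions(10_000, rates, [0.1, 0.9])

print(round(fixed), round(shifted))
```

Under these assumed numbers the even split expects roughly 1,000 conversions and the shifted split roughly 1,400. The size of the gap in a real test depends on how quickly the bandit identifies the stronger variant and how different the variants actually are.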

For product teams, MABs are ideal for search and recommendation algorithms and AI models. User behavior determines which models are selected, based on outcomes such as click-through rates or items added to a cart.

Bandits are a powerful way for modern product and marketing teams to harness the power of behavioral data to automatically tune their products to their most desirable versions.

How can I learn more about multi-armed bandits?

Check out our help center article, get a demo, or contact your customer success manager to learn more about this powerful new capability in Amplitude Experiment.

Wil Pong

Former Head of Product, Experiment, Amplitude

Wil Pong is the former head of product for Amplitude Experiment. Previously, he was the director of product for the Box Developer Platform and product lead for the LinkedIn Talent Hub.
