Warehouse-Connected Experimentation is Better for Self-Service—and for Data Warehouses

How self-serve solutions unlock innovation while actually complementing your data strategy

As a data leader, your data warehouse is practically your dream house. You’ve invested time and effort in it to build a centralized data strategy, good data governance, and a single source of truth. So when your organization is looking for a way to run better experiments, a warehouse-native solution that leverages all the investments you’ve made probably seems like the dream option.

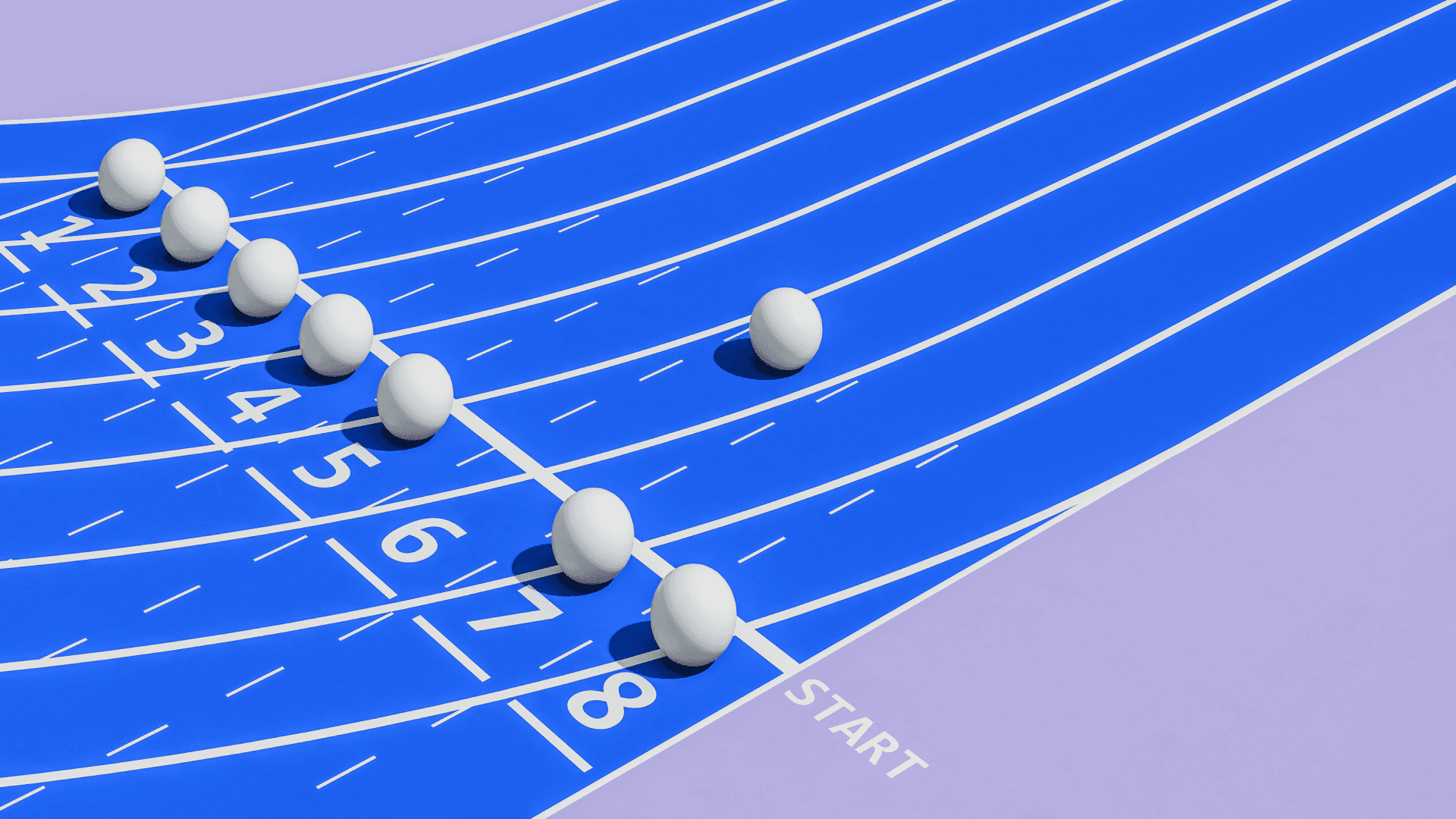

In practice, though, warehouse-native experimentation can quickly turn into a nightmare. Product and engineering teams can’t set up their experiments themselves, so you’ll have to do it for them, and you can only do that as fast as your team’s capacity allows. Before you know it, new and ad hoc test requests will pile up into a backlog, and all your team’s time and effort will go to the never-ending experiment queue instead of the valuable analysis that was the whole point of the warehouse to begin with.

That all may seem like a necessary tradeoff for maintaining the control and governance benefits of your warehouse. The alternative would be data chaos. But what if there were another solution, one that could balance centralized control with decentralized access?

A warehouse-connected experimentation platform like Amplitude Feature Experimentation is that solution. Its self-service model integrates with your data warehouse rather than being stuck inside it, unlocking testing access across your organization without compromising data integrity. It frees others to innovate faster, frees you to focus on more impactful work—and makes the dream of experimenting with your warehouse a reality.

The pitfalls of warehouse-native experimentation

Warehouse-native experimentation sounds great in theory, especially from a centralized data perspective. Metrics, assignment logic, and exposure tracking are fully controlled by your data teams, ensuring clarity, consistency, and accuracy. Yet this control comes at a steep cost:

Slower innovation: Every new experiment typically involves complex setup tasks (defining metrics, specifying assignment and exposure events, validating data) that only your data teams can perform. With other teams simultaneously demanding these services, the resulting backlog dramatically slows experimentation cycles, since product teams can’t start an experiment until your data teams finish the setup. What might ideally take hours ends up taking weeks, ultimately reducing your organization’s ability to innovate quickly.

Data team burnout: Beyond delays, the constant flow of experimentation requests can overwhelm already overworked data teams. Instead of putting effort into high-impact strategic analyses, like propensity modeling, user segmentation, or predictive analytics, your data scientists become stuck handling repetitive, tactical experiment setups. This often leads to burnout, reduced job satisfaction, and high turnover among your most valuable team members.

Higher costs, lower ROI: The more experiments you run, the more warehouse queries you need to analyze your results, which means higher query, compute, and storage costs. And because these costs are variable, it’s harder to know whether they’re worth it. You lose insight into your total cost of ownership, your budget predictions, and the overall justification for your spend.

Can a warehouse-native solution truly drive experimentation at scale? Many organizations are increasingly finding that, while warehouse-native has powerful potential, it isn’t set up to empower their teams for successful innovation.

Unlocking innovation with warehouse-connected experimentation

Adopting a warehouse-connected self-service experimentation tool doesn’t mean compromising your data integrity or your centralized governance. In fact, it’ll enhance your existing warehouse strategy by empowering more teams to be successful with your data investments.

Reduce dependencies to accelerate experimentation

Self-service experimentation tools like Amplitude Feature Experimentation remove the dependency bottleneck by enabling product and engineering teams to independently set up, run, and analyze experiments. They offer intuitive interfaces designed for non-technical users, require no SQL experience, and still allow for seamless collaboration with your data science teams.

This shift can dramatically accelerate your organization’s overall experimentation velocity. Instead of weeks of waiting, product and engineering teams can run experiments on their own, in quick succession, and even simultaneously. And while they can still get review and support from your data science teams, they won’t burn you out with constant requests.

Maintain (and expand) well-governed structures

Data leaders naturally worry about the risks of decentralization—data drift, lack of governance, and loss of control. The great thing about an advanced warehouse-connected system like Amplitude is that it’s still semi-centralized, allowing access without losing control through:

- Data mutability that avoids drift and ensures accuracy

- Robust governance capabilities

- Data access controls

- AI governance assistance

- Roles and permissioning

It’s also easy to reinforce best practices by creating shared templates and standardized experiment workflows. These help teams to easily understand and replicate successes, learn from failures, and continuously improve their experimentation methodology.

With these mechanisms in place, non-data teams can run experiments faster while still working within clearly defined guardrails. Since your data scientists retain full oversight, experimentation remains accurate, consistent, and reliable—even at scale.

Seamlessly integrate with your existing warehouse

A common misconception is that self-service experimentation tools create data silos, fragmenting your centralized data strategy. On the contrary, modern self-service platforms like Amplitude are warehouse-connected by design—they integrate seamlessly into your existing infrastructure rather than competing against it.

Experiment data, results, and insights flow directly into your existing data warehouse or data lake, maintaining a single source of truth. All experimentation metrics and outcomes are automatically synced to your warehouse, kept consistent, and made accessible to your data teams. You don’t replace your warehouse investment; you enhance it.

Free your data team to do their best work

When non-data teams can self-serve experimentation, your data scientists regain significant capacity. They shift from tactical, repetitive tasks back to what they were hired for, and what they find most meaningful: strategic analysis, propensity modeling, predictive analytics, and in-depth insights.

Your teams will feel more productive, engaged, and valued when they can focus on high-impact, meaningful projects. This boosts job satisfaction and retention, and ultimately improves your organization’s analytical capabilities at large.

Maximize return on your warehouse investment

Any business initiative ultimately comes down to ROI. A data warehouse is a significant investment, and it makes sense you’d be protective of that as a data leader.

Warehouse-connected experimentation tools don’t diminish your investment, though—they amplify it. With more teams having faster access to high-quality experimentation, the value of your data warehouse becomes more and more apparent through:

- Reduced time-to-insight, enabling faster decision-making

- Increased frequency of experimentation, driving more product innovation

- Improved productivity for teams across the board, saving significant time and resources

- Predictable costs and lower total cost of ownership

Experimentation that enhances your warehouse

Adopting self-service experimentation isn’t about replacing your carefully crafted warehouse approach—it’s about complementing it.

A warehouse-connected solution like Amplitude Feature Experimentation gives your non-data teams the autonomy to innovate at speed while maintaining the data quality, governance, and control you rely on. It integrates with your warehouse, revealing and expanding its ROI. And, maybe most importantly, it frees up your data team to do higher-value work with the warehouse.

As a data leader, you know that everyone in your organization can benefit from better data. That’s the dream. Warehouse-connected experimentation makes it easier for them, your data teams, and you, to unlock those benefits and see the true value of your warehouse—and your data.

Katie Barnett

Former Director, Product Management, Amplitude

Katie is a former director of product management at Amplitude. She's focused on helping customers use Amplitude to gain user insights to build better products and drive business growth. Previously, she was a staff product manager at Gladly and a senior product manager at AppNexus. She's also worked in strategy & business development at Bloomberg and investment banking at Goldman Sachs.
