Why You Should Think Twice About Warehouse-Native Experimentation

Avoid the hidden costs and time sinks with a better path forward: warehouse-connected experimentation.

Are you considering a warehouse-native experimentation solution? You may actually be walking into a hazard zone.

Imagine you’re a product manager with a new personalized feature you’re excited to release as soon as possible—maybe a redesigned checkout flow or a new search recommendation engine. It's almost ready to go, and your last step is to run an experiment to answer that critical question:

“Will my feature make an impact?”

In this example, also imagine you're working in a warehouse-native environment. So, you submit a ticket to your data team—they'll define your metrics and your assignment and your exposure events while you set up the experiment. And then, you … wait. You wait for your ticket to come up. Then you wait for the data team to do their thing. And when it's all ready, and you can finally start the experiment—you realize it's been weeks now. (And what if it turns out you set up the test incorrectly? You might be forced to go back to square one…)

Meanwhile, your competitors are iterating ahead of you, and their new personalized experiences are capturing the users you were so excited to serve.

This is the reality for product teams that rely on warehouse-native experimentation. While using your existing data warehouse may seem like a cost-effective and scalable solution because it limits data drift, in practice, the complexities often make it a slower and more expensive approach.

But what if you, as a product manager, could run experiments that sync to your environment without all those obstructive complexities? You can, with a warehouse-connected experimentation solution like Amplitude Feature Experimentation. This powerful yet straightforward approach to experimentation gets product managers to faster, data-driven decisions by:

- Unlocking self-service experimentation

- Optimizing user experiences in real-time

- Using a more predictable cost model

- Integrating with enterprise warehouses to keep data in sync

Scale experimentation with self-service

With a warehouse-native solution, teams rely on a managed service using a hub-and-spoke model to run experiments. In this model, one central data team supports product and growth teams by setting up, running, and analyzing experiments on their behalf.

Since the teams with the most experimentation experience can lead the charge for all experiments and help their colleagues succeed, this may sound great in theory—but it can quickly become complicated.

As more experiments are run, teams have to shift into support ticket mode to set them up and analyze results, since there are more people and teams that need to run tests than can write SQL. The data engineering team has to write custom queries to set up each test, validate and reconfigure data pipelines on an ongoing basis, and troubleshoot discrepancies when something goes wrong.

For product managers, this translates to constant bottlenecks, slow progress, and less control over experimentation.
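To make that per-experiment query work concrete, here is a minimal sketch of the kind of SQL a data team might write for a single test. The `exposures` and `events` tables, their columns, and the `new_checkout` experiment are hypothetical names chosen for illustration, and an in-memory SQLite database stands in for a real warehouse.

```python
import sqlite3

# Hypothetical schema and sample rows: every name here is an illustrative
# assumption, not a real warehouse layout.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE exposures (user_id TEXT, experiment TEXT, variant TEXT);
    CREATE TABLE events (user_id TEXT, event_name TEXT);
""")
conn.executemany(
    "INSERT INTO exposures VALUES (?, ?, ?)",
    [
        ("u1", "new_checkout", "control"),
        ("u2", "new_checkout", "control"),
        ("u3", "new_checkout", "treatment"),
        ("u4", "new_checkout", "treatment"),
    ],
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [("u1", "purchase"), ("u3", "purchase"), ("u4", "purchase"), ("u4", "purchase")],
)

# Join exposures to the metric event and count distinct users per variant.
# The LEFT JOIN keeps exposed users who never triggered the metric event.
CONVERSION_BY_VARIANT = """
    SELECT e.variant,
           COUNT(DISTINCT e.user_id)  AS exposed_users,
           COUNT(DISTINCT ev.user_id) AS converted_users
    FROM exposures AS e
    LEFT JOIN events AS ev
           ON ev.user_id = e.user_id AND ev.event_name = ?
    WHERE e.experiment = ?
    GROUP BY e.variant
    ORDER BY e.variant
"""


def conversion_by_variant(conn, experiment, metric_event):
    """Return {variant: conversion rate} for one experiment and one metric."""
    rows = conn.execute(CONVERSION_BY_VARIANT, (metric_event, experiment)).fetchall()
    return {variant: converted / exposed for variant, exposed, converted in rows}


results = conversion_by_variant(conn, "new_checkout", "purchase")
print(results)
```

Even this toy version has to be adapted for each new experiment, metric, and exposure definition, which is where the ticket queue comes from.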

In contrast, warehouse-connected solutions are designed for speed and usability. Instead of requiring data engineers to manually set up experiments, they allow PMs to self-serve through a structured user experience, easy onboarding, and simple recommendations—while still collaborating with their data and engineering teams.

With a truly self-service model, more teams can design, run, and analyze experiments independently, without waiting on engineering teams or needing SQL skills.

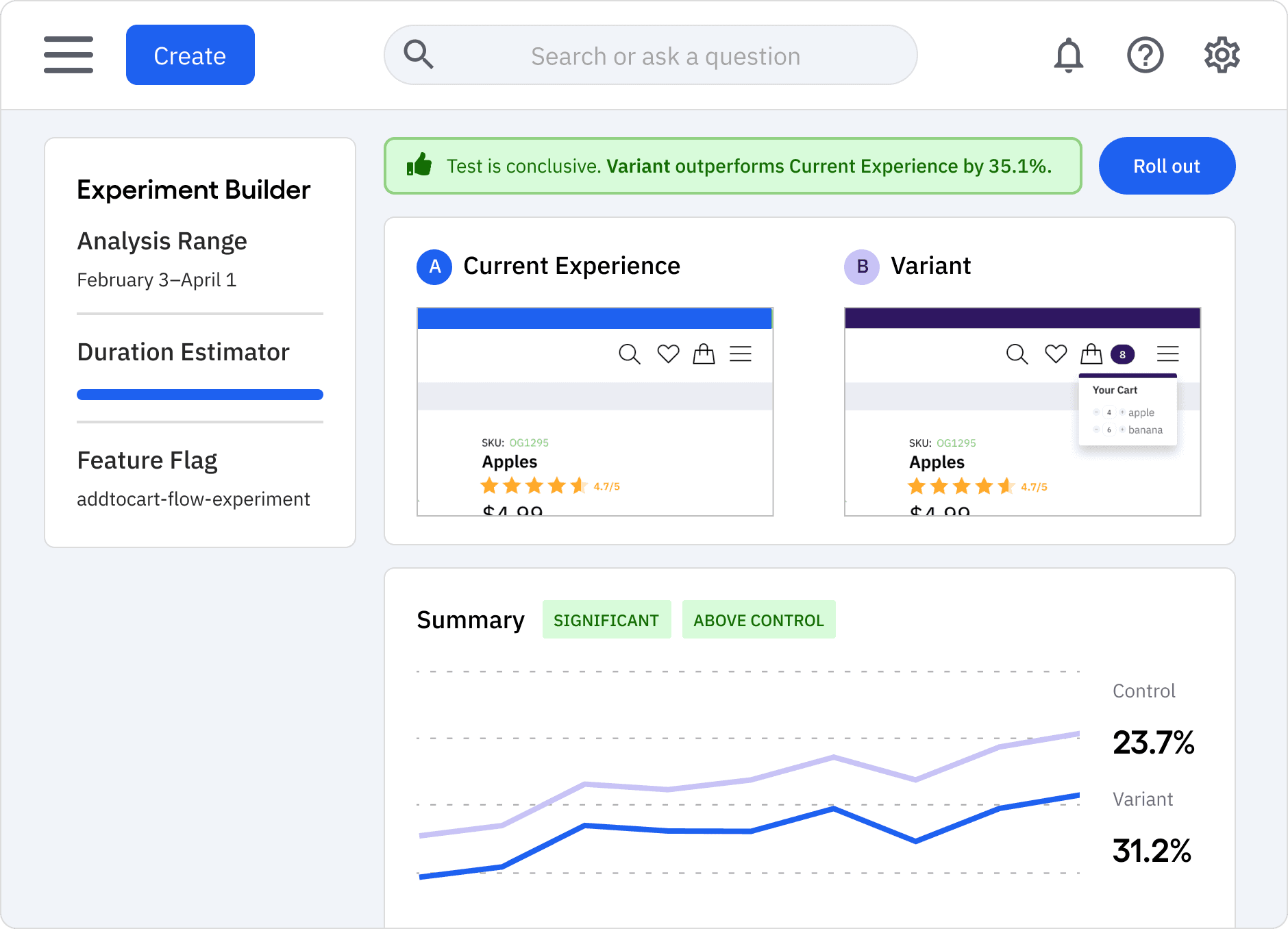

A warehouse-connected solution like Amplitude unlocks self-service to scale experimentation faster.

Optimize experiences in real-time—with one platform

Even if you could somehow supercharge your data team to be as fast as self-serve, a warehouse-native solution has an inherent speed blocker: batch processing. Experiment results are only available after they’ve been pulled, cleaned, analyzed, and aggregated in the data warehouse. This delay can often run as long as 24 hours before data is available for analysis, an unavoidable bottleneck for product development and decision-making.

On top of that, batch delays are compounded by integration. Warehouse-native experimentation solutions function as yet another point solution in your stack. This means that while they query your warehouse directly, they are fundamentally disconnected from the rest of the tools your teams use to improve your digital experiences.

You’ll need to build and maintain integrations across analytics, session replay, heatmapping, web experimentation, and the rest of your stack to get the insights your product teams need to build world-class product experiences—let alone run a single A/B test.

With a well-designed warehouse-connected solution, though, product managers can see results as they happen, without any batch processing delays. The moment an experiment has data to analyze, a warehouse-connected solution makes these insights immediately available.

Even better? Warehouse-connected solutions offer a broader range of capabilities than experimentation or feature flagging on their own, reducing the need for separate integrations.

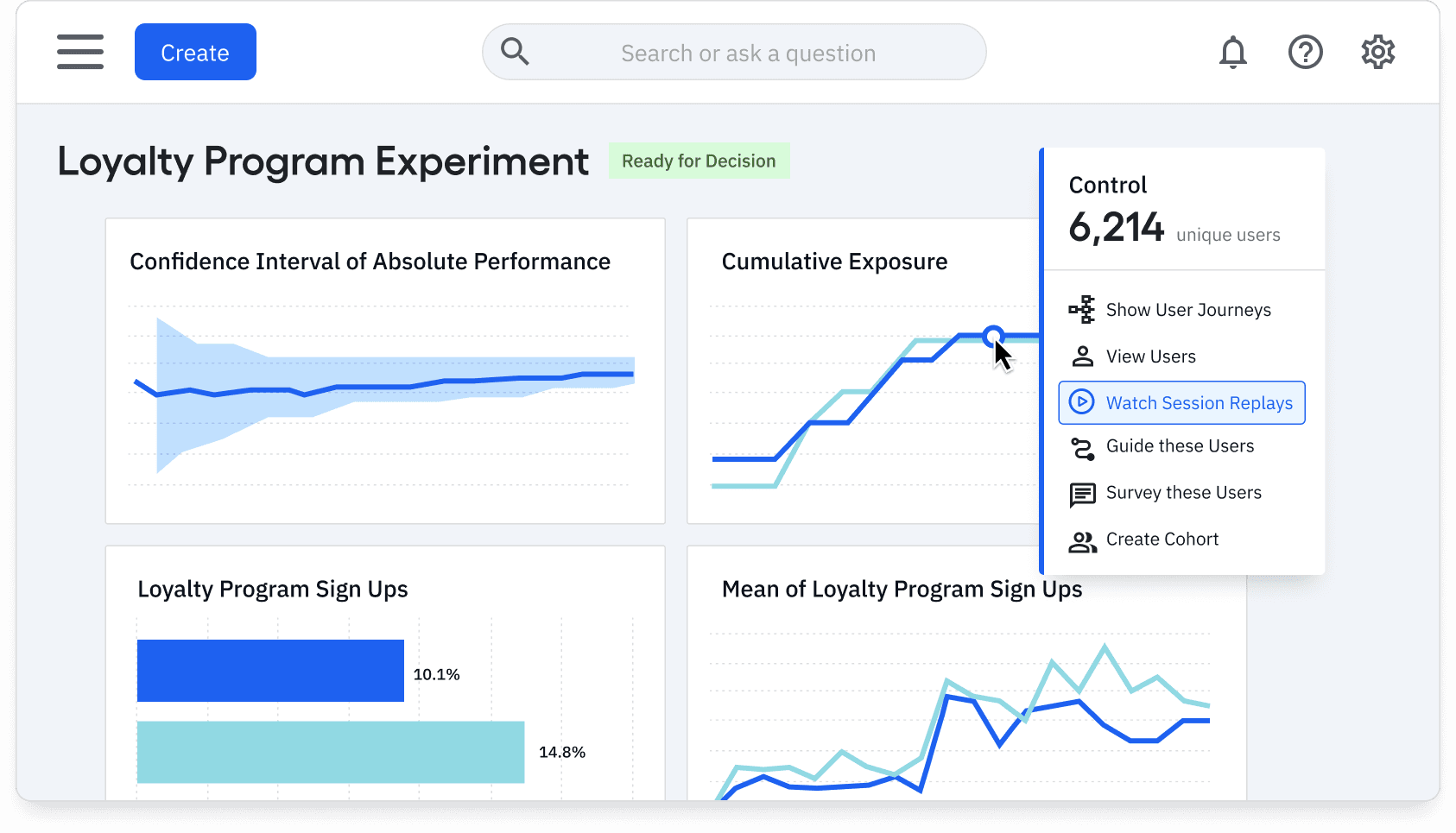

For example, Amplitude’s data processing was built to perform orders of magnitude faster than general-purpose data warehouses, making Amplitude Feature Experimentation one of the fastest solutions on the market. When combined with the rest of Amplitude’s Digital Analytics Platform, teams can watch how users engage with their experiments or applications using Session Replay, optimize their Guides and Surveys, connect to audiences downstream with Activation, and more—all in real-time. Connecting all of these capabilities with a fundamentally open ecosystem, a unified data model, a seamless user experience, and AI-powered workflows unlocks massive opportunities for companies looking to drive growth.

The Amplitude Digital Analytics Platform connects analytics, experimentation, session replay, and more, all in one place.

Lower total cost of ownership

Many companies assume that running experiments in their own data warehouse is the cheaper option based on a vendor’s quote. But in reality, warehouse-native solutions often lead to higher costs because of:

- Larger Data Science Teams: Since warehouse-native solutions require constant support from data engineers and data scientists, companies often need to hire (and retain) more data science team members to set up, maintain, and analyze experiments.

Skilled data professionals are already hard to find and retain. 88% of organizations struggle to meet customer expectations because they don't have enough data science professionals on staff today. Warehouse-native experimentation solutions make this problem worse since every experiment adds to the queue, bottlenecking behavioral data and insights.

- Higher Warehouse Costs: Every time an experiment needs analysis, the warehouse-native solution executes complex queries in your data warehouse, driving up query, compute, and storage costs. As the number of experiments your team runs increases, so do your warehouse costs.

- Vendor Costs and Implementation: Even after the initial cost to sign up with a new vendor, you’ll still be on the hook for continued contracting, implementation, and maintenance costs. While those licensing costs may appear trivial, they can add up quickly when it comes to the total cost of ownership.

With a warehouse-connected approach, self-serve experimentation reduces the need to keep growing data teams (and keeps them from burning out). Teams only pay for what they use since the cost of compute and storage is already built into the pricing model, which makes costs more predictable.

These built-in advantages help demonstrate why teams drive higher ROI at a lower total cost of ownership with warehouse-connected experimentation solutions.

Get more value from enterprise data

Warehouse-native experimentation solutions focus on eliminating data drift by ensuring that queries only take place in the warehouse. This helps data teams ensure they can use experiment data in the more advanced analysis they are running—but that’s not the only way to accomplish this goal.

Warehouse-connected experimentation allows any team running self-serve experiments to automatically sync its results to enterprise systems like Snowflake using mutability. This not only keeps data from drifting, it also allows data scientists to augment their advanced analysis with real-time behavioral insights.

For example, a leading media company uses Amplitude’s real-time insights alongside data in Snowflake to power propensity modeling. By predicting the likelihood of a user signing up for a newsletter or plan based on behavioral data, they’ve been able to craft personalized pricing offers that increased signups by 35% and significantly improved their revenue per user.

A warehouse-connected approach gives every team what they need to move faster and win as a team. Product teams have the speed and real-time insights they need to iterate faster, and data teams get behavioral insights to enhance their analysis in the warehouse.

Closing the loop: A better experience for product managers

Think back to that imaginary product manager role you took on earlier—you were waiting (and still might be) for answers to your experiment queries.

Now instead, picture using a warehouse-connected solution. You step away from the endless support queues, and you’re able to shift your focus to building faster with:

- Self-serve experimentation—reducing the burden on data scientists and delivering faster iterations

- Real-time experiment results—you don’t have to wait weeks for answers

- Lower total cost of ownership—lower compute costs and reduced overhead

Warehouse-connected experimentation providers like Amplitude empower product teams to move at the speed of innovation, without the headaches of warehouse-native solutions.

If your goal is to optimize user experiences, move fast, and reduce costs, warehouse-connected experimentation is the clear winner. It’s time to ditch the support queues and start experimenting smarter.

Katie Barnett

Former Director, Product Management, Amplitude

Katie is a former director of product management at Amplitude. She's focused on helping customers use Amplitude to gain user insights to build better products and drive business growth. Previously, she was a staff product manager at Gladly and a senior product manager at AppNexus. She's also worked in strategy & business development at Bloomberg and investment banking at Goldman Sachs.
