The Winds of Change Are Blowing: The (Recent) History of Cloud-Based Business Data

Looking back at previous cycles of business technology to predict what comes next

The period stretching from 2017 to 2023 was as significant to industry and commerce as any comparable span since the Information Age began in the early ’90s. In these years, the world experienced a number of major events that dramatically affected consumers and businesses in every vertical across the globe.

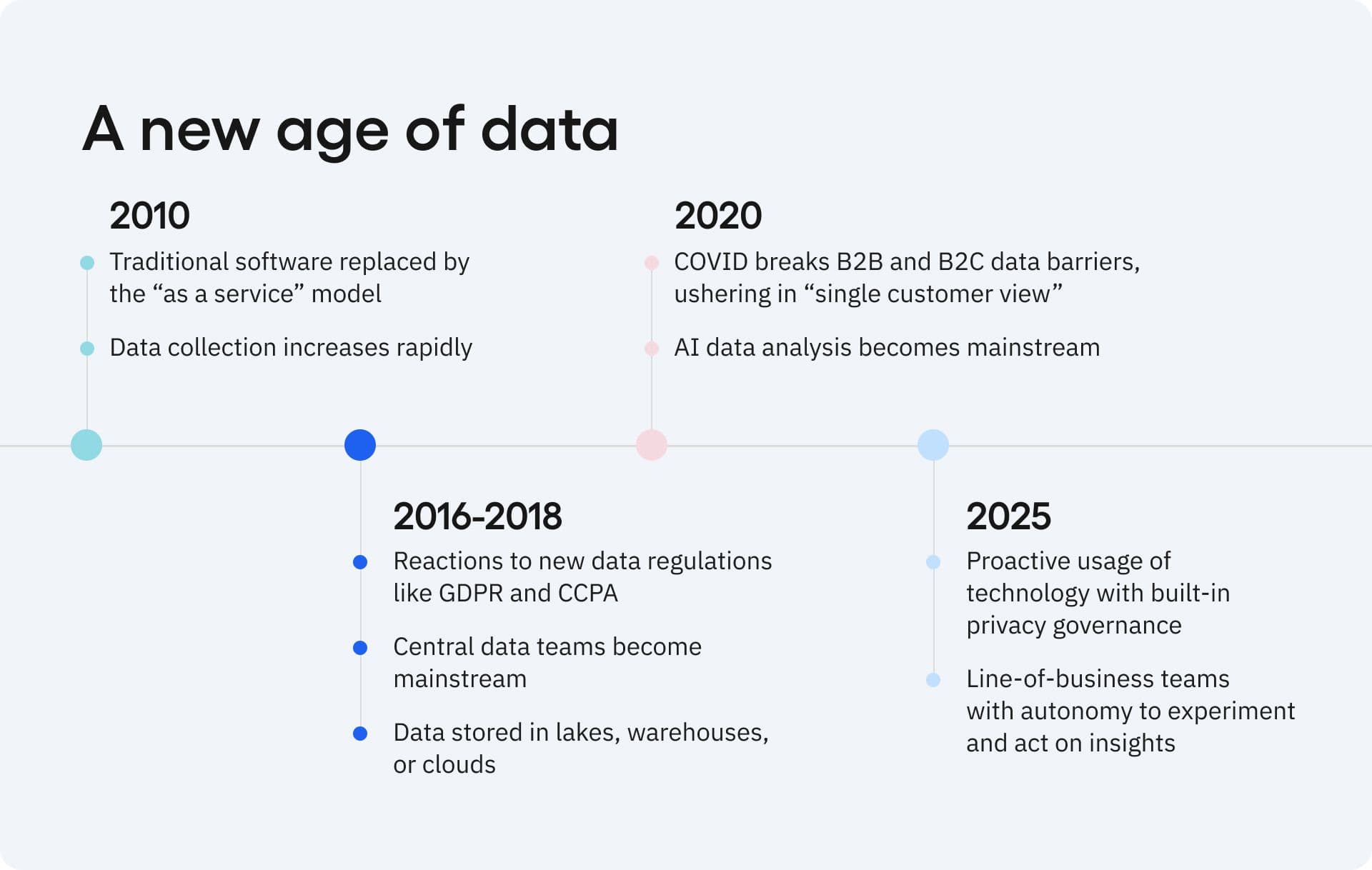

Before this period, hyperscalers had strengthened their impact on markets by providing Software, Networks, Infrastructure, and Platforms as a service. During the 2010s, almost every organization virtualized its services to the consumer via this “as a service” approach with a select group of enterprise-class providers such as AWS, Microsoft, and Oracle. These “as a service” environments allowed organizations to scale their hardware and software needs with unprecedented speed and security. Teams also scaled the security, access, and business continuity controls required to operate consistently and cost-efficiently.

In the late 2010s, more technical innovation emerged to complement the hyperscaler impact on digital strategies. Data, the final tier of computing, became an additional pillar of the “as a service” family. Although data warehouses and lakes were already on the market, techniques like “headless” API-first designs and containerization technologies like Kubernetes and Docker brought the data clouds into prominence. Alongside the previously mentioned hyperscalers, Snowflake and Databricks offered scalable methods for data sharing, data integration, and machine learning. Together, these technologies would provide the foundation for the computing upheaval that was Artificial Intelligence.

Business data moves to the cloud

Throughout this period of rapid innovation, business and technology became more tightly intertwined. IT had been emerging as a competitive advantage (rather than a cost center that occasionally saved labor with automation) for some time, but in the early 2010s it became abundantly clear that technology was now a prerequisite for running a successful business. As the industry started to recognize this, two significant events took place: 1) the formalization of data privacy laws, and 2) the COVID-19 pandemic that forever changed global commerce. Together, those events cemented IT as a necessary driver of corporate success.

As data clouds emerged, the data landscape faced conflicting priorities. Pulling in one direction, businesses realized the value of analyzing customer data to make decisions about revenue optimization, resulting in an explosion of demands on internal data teams. Pulling in the opposite direction, consumers increasingly demanded to manage that same data, which led industry regulators and governments to create new data privacy laws. These new regulations mandated consumers’ rights to access, modify, and delete data about them in a framework of litigated consequences that compelled businesses to invest even further in data management. In short, data analysis was opening pathways to new revenue, but data governance mechanisms were required to avoid losing those gains in compliance penalties.

The General Data Protection Regulation (GDPR) and California Consumer Privacy Act (CCPA) brought entirely new ramifications to companies that didn’t make serious overhauls to protect consumer data. The impact was earth-shattering. Even giants like Google and Meta lost lawsuits for failing to keep up with the newfound power of consumer rights established in GDPR and CCPA. To keep up with the increase in data collection, validation, and security, the consent management platform emerged as a popular solution.

Cloud expansion meets new restrictions

Before 2020, new mandates for data management were understood as a cost-reduction problem. Customer data platform architectures offered a solution to that problem: the “single customer view.” This central hub became a single point of control for legal and infosec teams, solidifying an efficient hub-and-spoke model of data pipelines. It also created a new channel for marketers and product teams to dramatically improve their efficiency.

To make the data landscape even more complex, hardware manufacturers introduced device restrictions. Apple began restricting iPhone devices, which limited businesses’ ability to collect data about their customers’ intent, affinity, and location. In 2017, consumers received the right to opt out of data tracking from their apps, and Apple subsequently stopped providing identifying device data to business technologies.

Additional data limitations continue to emerge from Apple’s code book, such as preventing tracking technology from telling a business that a user has opened its retargeting email. For businesses looking to make decisions based on consumer information, the message was very clear: data was no longer easy and free; it had to be earned, managed, and nurtured appropriately.

Data was no longer a free resource. It had become a currency that could be withheld or exchanged based on the value it provided. Then the pandemic hit.

The dawn of a new era

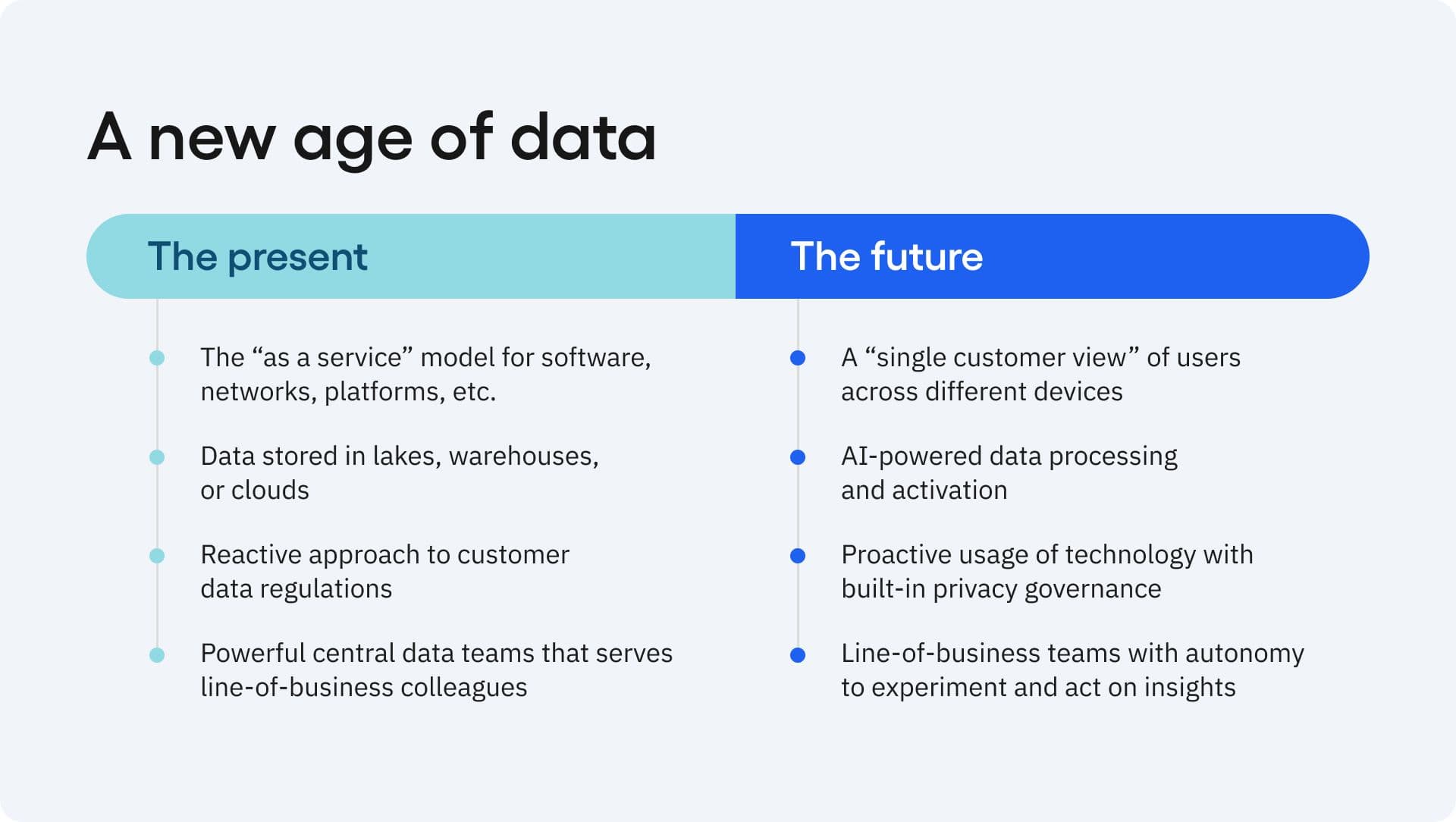

In retrospect, previous generations of business data infrastructure have clear commonalities. Each started with an inciting event (the move to the cloud kicked off the as-a-service model, the COVID pandemic led to device-agnostic customer models, and so on) and was defined by the technological response to that event. When those eras kicked off, the teams that won were the first to build new tools to respond to a change in the game. They used IT to capitalize on their information environment and create competitive advantages.

The teams that emerged as the winners of these shifts were not the ones that chose to do business as usual with a minor change. They didn’t simply ask how to do the same old things with new tools. Instead, the giants that defined the eras were the companies that asked what new things were possible and reorganized to specialize in those uncharted capabilities.

Today, we’re at the early stage of a new wave of business technology, one defined by new possibilities to analyze and act on data. Previous periods have established the playing field (a connected, digital environment that is accessible across different devices and channels), the players (internal and external users who blend B2B and B2C properties), and the rules (new customer data regulations). The inciting event is a new wave of data collection and activation technologies created to navigate that environment.

By establishing an IT environment that uses this new generation of tools, companies can massively expand their data usage to new lines of business such as marketing, product, and customer service. It’s not just about giving teams access to data for the same old analysis; it’s about creating ways for those teams to act directly on the insights from that data and track their own performance.

The teams that win in the modern landscape will not be the ones with the most powerful data scientists. They will be the companies that find ways to equip non-data teams with instant access to information about the customers they serve and let them directly personalize their offering to those customers based on their own experiments.

This is the first blog in a series about how marketing teams can capitalize on data analysis and activation in this new era. Stay tuned to learn more about how marketing teams can collect new data, run their own experiments, and take instant action on the results.

Ted Sfikas

Field Chief Technology Officer, Amplitude

Ted Sfikas is Amplitude’s Field CTO for the Go To Market team, delivering Digital Strategy, Martech, and Customer Data expertise to clients worldwide.
