The Ultimate Guide to Product Features Analysis

How to locate top-used features in your product and understand which features drive the highest user engagement in the app


Feature analysis is the most common and the most challenging project in product analytics. Which product features are the most popular and drive the highest growth and engagement? What is the value of each feature, and what is the ROI of product initiatives? Which features are the strongest candidates for optimization, monetization, or a paywall? And which should be sunsetted?

You need to do a feature analysis to:

- Understand your value proposition

- Appropriately package your pricing

- Tailor your messaging and GTM strategy

- Prioritize product initiatives

- Discover opportunities for new feature development

- Simply connect with users more

Reforge, Paddle, and Amplitude have shared high-level approaches to feature analysis. Amplitude also released a video course introducing its Engagement Matrix: Identify Your Most Popular Features with Engagement Matrix. Timo from DeepSkyData put together a consolidated guide that explains what product features are, how to group them, and how to report on their activity in his post Use feature analytics for better product (using Substack as an example):

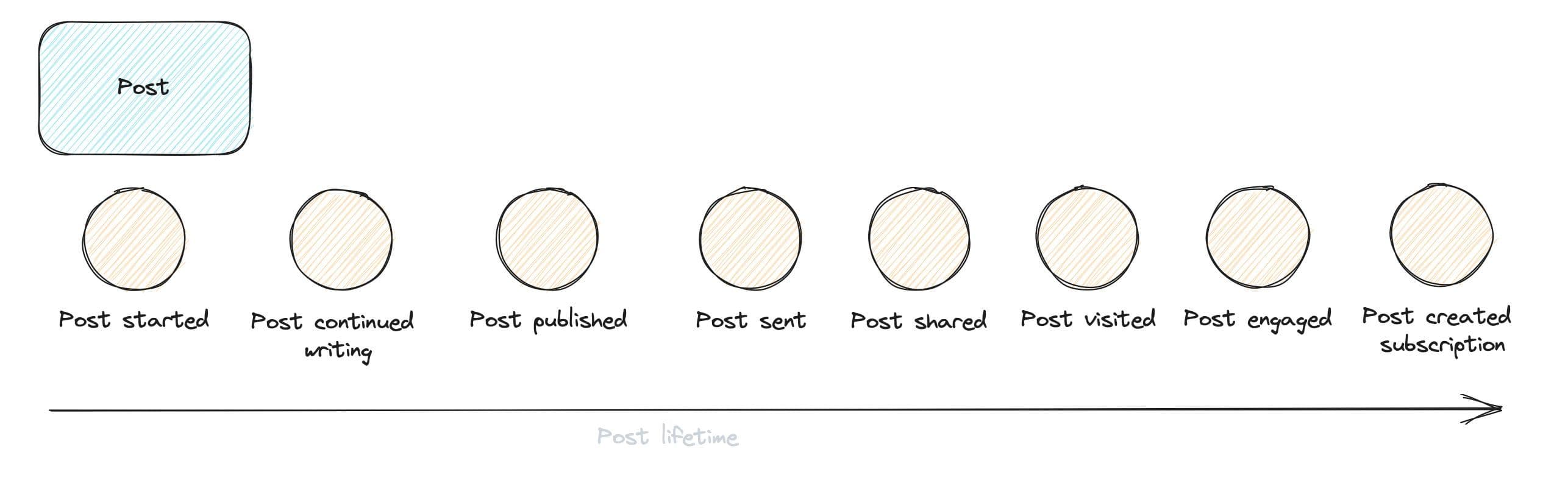

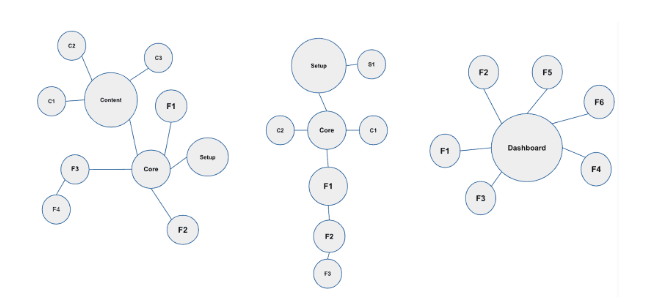

How to group product features. Image by Timo Dechau, deepskydata

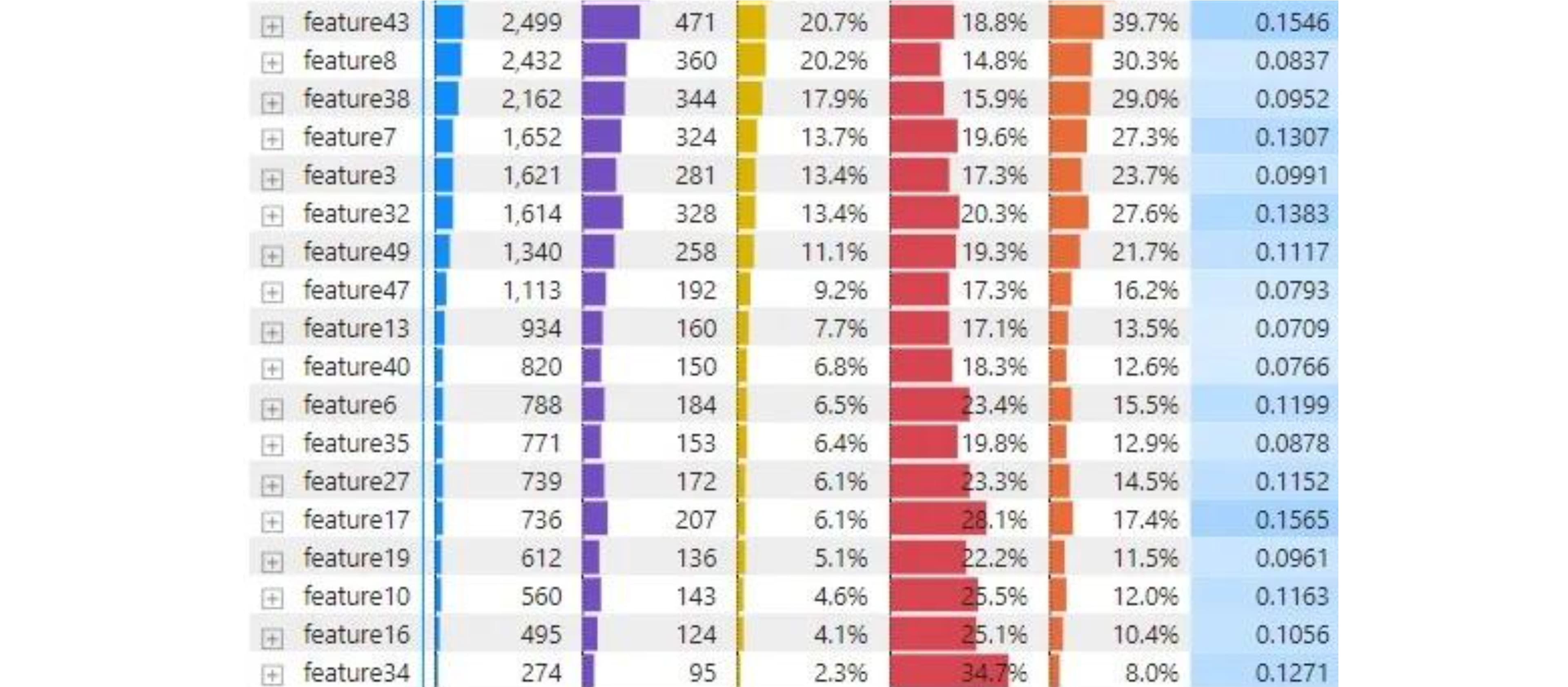

Paul Levchuk, an expert on analytics and growth, offered a more scientific approach to feature analysis in his series How do you measure the success of a product feature? Paul uses the MCC (Matthews correlation coefficient), a correlation coefficient between two binary metrics (read his Product feature retention deep dive: MCC coefficient):

A list of product features with calculated MCC coefficient. Image by Paul Levchuk
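To make the MCC idea concrete, here is a minimal Python sketch that computes the Matthews correlation coefficient between two binary per-user flags. It assumes the two metrics are "used the feature" and "retained"; the function name and the sample users are hypothetical, and Paul's deep dive remains the reference for how he actually defines the inputs.

```python
import math

def mcc(used: list[bool], retained: list[bool]) -> float:
    """Matthews correlation coefficient between two binary per-user flags."""
    if len(used) != len(retained):
        raise ValueError("both lists must describe the same users, in the same order")
    pairs = list(zip(used, retained))
    tp = sum(1 for u, r in pairs if u and r)          # used the feature and retained
    tn = sum(1 for u, r in pairs if not u and not r)  # neither used nor retained
    fp = sum(1 for u, r in pairs if u and not r)      # used the feature but churned
    fn = sum(1 for u, r in pairs if not u and r)      # retained without using it
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # MCC is undefined when any row or column total is zero; report 0 in that case
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom

# Hypothetical six users
used = [True, True, True, False, False, False]
retained = [True, True, False, True, False, False]
print(round(mcc(used, retained), 3))  # 0.333
```

A value near +1 means feature users are much more likely to be retained, 0 means no association, and -1 means the opposite. As with any correlation, a high MCC alone does not show that the feature causes retention.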

In this publication, I want to take a step further and offer my framework for a feature usage analysis that can be done in popular product analytics tools like Amplitude. I have used this approach at VidIQ, Change.org, and now at MyFitnessPal. It applies to most B2C, B2B, and SaaS apps and is easy to borrow and interpret. I’ll use MyFitnessPal and Strava as app examples and demonstrate my analysis in Amplitude.


Why feature analysis isn’t easy

What makes feature analysis unique and challenging is its data requirements. Unlike root cause analysis, activation analysis, personas, or activity frequency analysis, feature usage analysis requires every user action in the app or website to be tracked (provided, of course, that you have the appropriate permissions from your users), and each tracked action must be mapped to the appropriate feature (or feature group).

Depending on your product's nature and maturity, this task can be daunting and may require comprehensive documentation, catalogs, or a taxonomy.

There is also no plug-and-play template or easy solution that fits all features, because every product is unique and has its own functionality. Some are simple utility apps without a diverse set of features (e.g., Zoom, Google Translate, Calculator, Sleep Cycle), while others are complex apps with many layers and trees of user flows (e.g., Duolingo, Pinterest, Strava, Yelp).

Things to keep in mind before working on feature analysis:

1. Align on definitions of a feature

There is no hard-coded definition of what a feature is or should be, and it’s up to your product team to align on the functionalities you wish to consider as features. It can be a mix of ML-based recommenders, notifications, and in-app screen modes and settings. Some features may not necessarily require proactive user interaction (e.g., dark mode screen view, no-ads experience, reminders). Measuring engagement for such features is very tricky.

2. Cluster or group your features

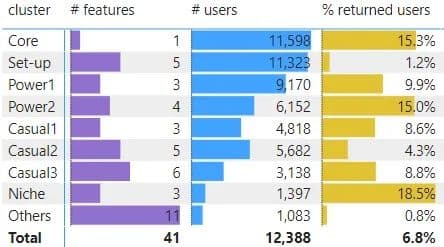

Some features can belong to multiple groups or clusters (e.g., in the Strava app, posting an exercise to the newsfeed can be part of Activation, but it can also belong to Community features or to Progress tracking). Such features naturally have a higher volume of usage, but this does not necessarily mean they are more popular. To address this, you can bucket features into clusters or groups and set a baseline of expected usage for every group so that you do not over-count usage. For example, features can be grouped into community, onboarding, core, creator, etc. Or you can follow Paul’s approach and cluster them into core, power, casual, set-up, niche, and other:

Cluster stats. Image by Paul Levchuk.

For freemium models, you may need to split these clusters into free and paid groups.
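A quick way to see the over-counting problem is to count unique users per cluster instead of summing feature-level user counts. The Python sketch below assumes a hypothetical mapping in which one feature (posting an activity) belongs to three clusters; the feature names, cluster names, and events are illustrative only.

```python
from collections import defaultdict

# Hypothetical feature -> clusters mapping; one feature can sit in several clusters
FEATURE_CLUSTERS = {
    "post_activity": ["activation", "community", "progress"],
    "give_kudos": ["community"],
    "view_progress_chart": ["progress"],
}

def users_per_cluster(events: list[tuple[str, str]]) -> dict[str, int]:
    """Count unique users per cluster from (user_id, feature) events."""
    users: dict = defaultdict(set)
    for user_id, feature in events:
        # Features missing from the mapping fall into an "other" bucket
        for cluster in FEATURE_CLUSTERS.get(feature, ["other"]):
            users[cluster].add(user_id)  # sets ignore repeat usage by the same user
    return {cluster: len(ids) for cluster, ids in users.items()}

events = [
    ("u1", "post_activity"),
    ("u1", "give_kudos"),
    ("u2", "post_activity"),
    ("u3", "view_progress_chart"),
]
print(users_per_cluster(events))
# {'activation': 2, 'community': 2, 'progress': 3}
```

Adding up feature-level users for the community cluster would give 3 (two users posted, one gave kudos), while only 2 distinct users actually touched it. Comparing each cluster's unique users against its expected baseline keeps multi-cluster features from inflating the picture.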

3. Identify transitional features

Users often have to use one feature simply to access another they intend to use (e.g., a dashboard in the Apple Health app can be a feature itself, or it can also be a path to other health reports). You need to have a way to differentiate and, if needed, exclude transitional usage from the actual feature usage.

This is why borrowing playbooks, templates, and benchmarks for feature analysis can be risky, as your app feature design and layout are unique.

4. Recognize features are dynamic

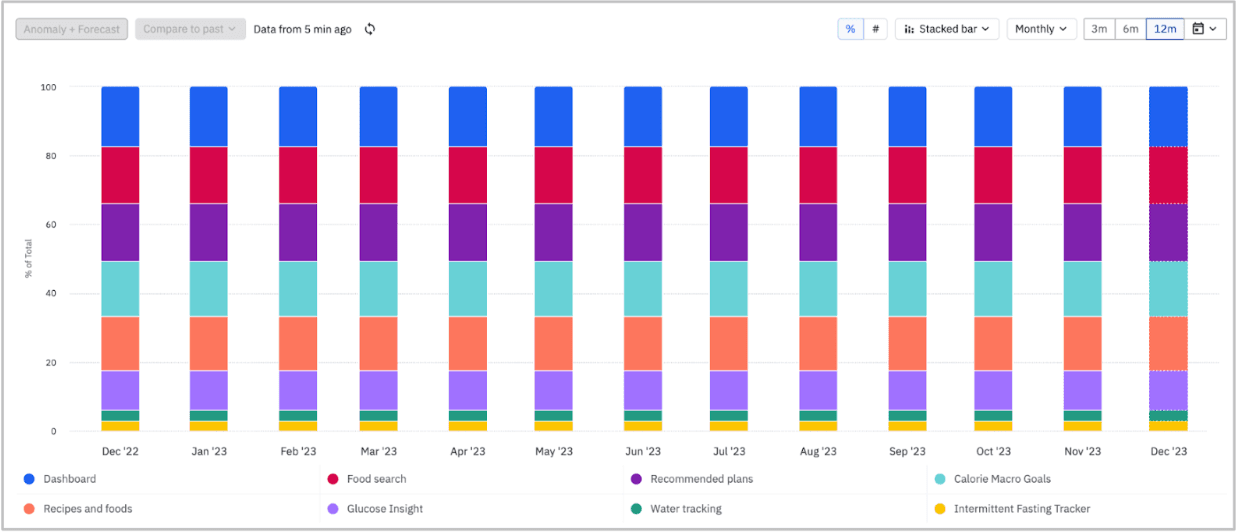

Every company iterates and expands its offerings. For example, in the past year at MyFitnessPal, we released many new features, such as Dashboard, Intermittent Fasting Tracker, Glucose insights, Personalized plans, and more, while continuing to test and iterate. The feature analysis I ran last year is no longer applicable today, unlike my analyses of Personas, Activation, LTV, or Retention. You have to revisit your feature clusters to make sure they are still appropriate and that their tracking hasn’t changed.

Getting started—create a feature matrix and catalog

For this analysis, I work with two types of documentation. While people may refer to them differently, I call them the Feature Matrix and the Feature Catalog (or Taxonomy). The two documents differ in objective, ownership, and usage.

1. Feature Matrix

The feature matrix is a high-level consolidated view of your product features across your app or business. Its main objective is to document and track your value offering to your user base.

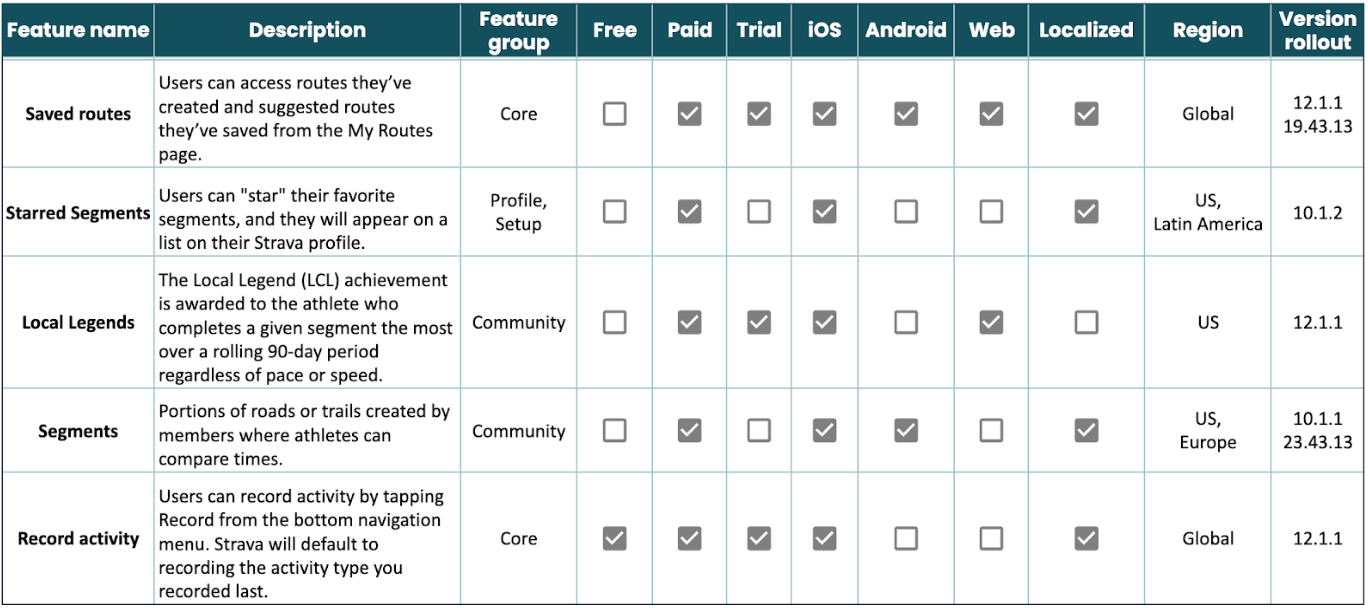

I am using the Strava app as an example to illustrate how the feature matrix may look:

Example feature matrix

I call it a "matrix" because it consolidates the audience and expected usage across the app or website for every feature. I will use the matrix to set a baseline for the volume of user traffic in my analysis.

I have seen teams document this in a wiki, Notion, or Google Sheets. The product team usually owns it. The feature matrix is not expected to change often; it is more of a static document.
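If you want the feature matrix in a machine-readable form alongside the wiki page, one option is a small typed structure like the Python sketch below. The fields (cluster, tier, platforms, parent) and every value shown are hypothetical placeholders, not Strava's actual setup; the parent field mirrors how sub-features such as Local Legends sit under Segments.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class FeatureRow:
    """One feature matrix row: who can access a feature, and where."""
    name: str
    cluster: str                  # e.g. "core", "power", "community"
    tier: str                     # "free" or "paid"
    platforms: frozenset          # e.g. frozenset({"ios", "android", "web"})
    parent: Optional[str] = None  # set for sub-features nested under another feature

# Illustrative rows only; cluster, tier, and platform values are placeholders
FEATURE_MATRIX = [
    FeatureRow("record_activity", "core", "free", frozenset({"ios", "android"})),
    FeatureRow("segments", "power", "paid", frozenset({"ios", "android", "web"})),
    FeatureRow("local_legends", "power", "paid", frozenset({"ios", "android", "web"}),
               parent="segments"),
]

def accessible_features(tier: str, platform: str) -> list[str]:
    """Features a user on the given tier and platform is expected to reach."""
    return [
        row.name
        for row in FEATURE_MATRIX
        if platform in row.platforms and (row.tier == "free" or tier == "paid")
    ]

print(accessible_features("free", "ios"))  # ['record_activity']
print(accessible_features("paid", "web"))  # ['segments', 'local_legends']
```

Counting the monthly active users for whom a feature appears in this list gives one way to derive the expected baseline per feature.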

2. Feature Catalog

A Feature Catalog (or taxonomy or tracking plan) is a detailed feature breakdown via user actions and attributes. It's a mapping of events, transactions, sessions, clicks, and hovers to the appropriate feature grouping. It has to be a live view updated with every new app version release or product change.

Many feature catalogs exist, from simple and short to detailed and complex. Here are just a few:

- Amplitude | Taxonomy Template

- Avo | Analytics Tracking Plan Template

- Practico | Analytics Tracking Plan

It takes time and effort to put this together. Once the catalog is created, its maintenance should be merged with the feature development process to capture every product iteration. I recommend including this step in the app development cycle and not closing the sprint until the catalog is updated and reviewed. The data governance team should own its maintenance. If you don't have that delegation, it becomes mutual ownership between the product, engineering, and analytics teams.
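One lightweight way to support that sprint rule is an automated check that compares the events your app actually sends against the catalog. The Python sketch below is hypothetical: the event names, the (feature, group) tuples, and the idea of feeding it a set of tracked event names (for example, exported from your analytics tool) are assumptions for illustration.

```python
# Hypothetical catalog: raw event name -> (feature, feature group)
FEATURE_CATALOG = {
    "food_search_performed": ("Food Logging", "core"),
    "food_logged": ("Food Logging", "core"),
    "dashboard_viewed": ("Dashboard", "core"),
    "fasting_timer_started": ("Intermittent Fasting Tracker", "progress"),
}

def catalog_gaps(tracked_events: set[str]) -> tuple[set[str], set[str]]:
    """Return (unmapped, stale) event names.

    unmapped: events the app sends that no one has mapped to a feature yet.
    stale: catalog entries for events the app no longer sends.
    """
    catalog_events = set(FEATURE_CATALOG)
    unmapped = tracked_events - catalog_events
    stale = catalog_events - tracked_events
    return unmapped, stale

unmapped, stale = catalog_gaps({"food_logged", "dashboard_viewed", "glucose_insight_viewed"})
print(sorted(unmapped))  # ['glucose_insight_viewed']
print(sorted(stale))     # ['fasting_timer_started', 'food_search_performed']
```

In this sketch, a non-empty result on either side would be the signal that the catalog needs review before the sprint closes.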

How to do feature usage analysis in Amplitude

Step 1. Create events for features

Once you have a Feature Catalog, you can replicate it in Amplitude, which might not be easy, depending on the number and complexity of your features.

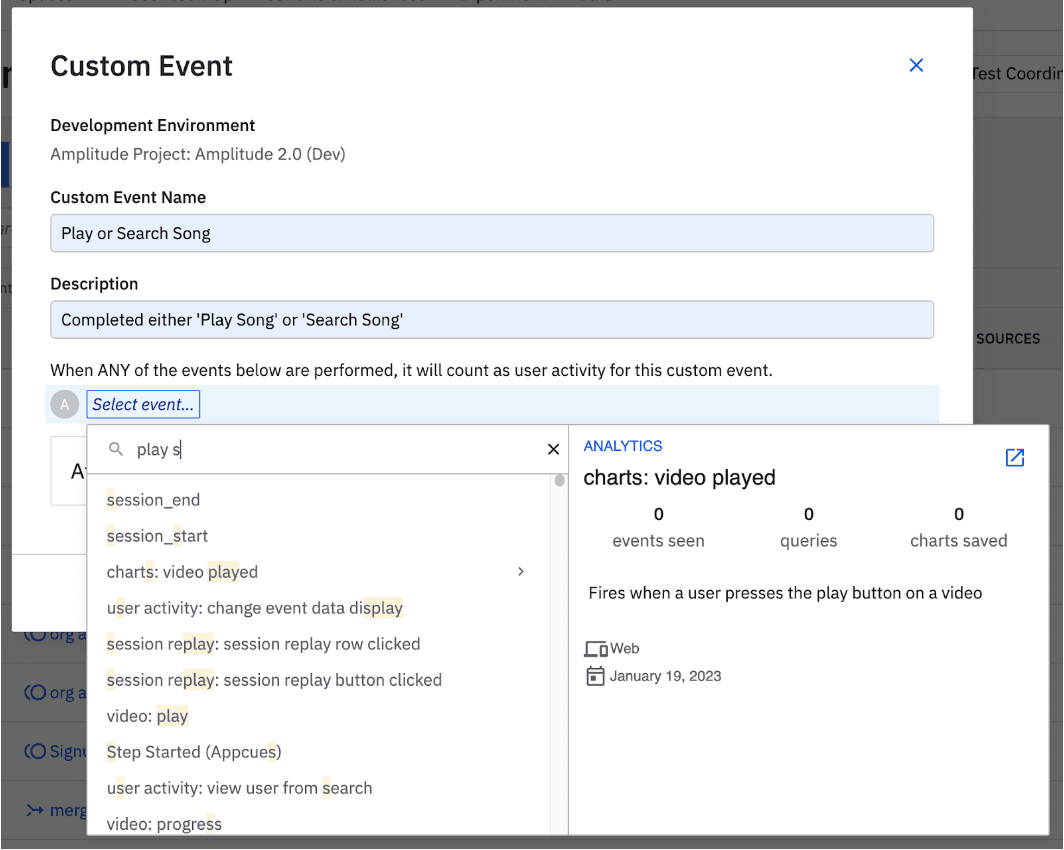

What worked for me was creating a custom event for every product feature that merges multiple user actions related to that feature. This also lets you roll all smaller sub-features into one usage event and specify filters (if needed):

Creating custom event in Amplitude

If your plan doesn’t have access to custom events, you can similarly set up this merged event analysis through the use of an in-line event.
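Conceptually, a merged feature event is a set of rules: a user action counts toward the feature if it matches one of the listed event names and any property filters. The Python sketch below reproduces that logic offline on exported events; it is not Amplitude's API, and the feature definitions, event names, and property values are hypothetical.

```python
from typing import Optional

# Hypothetical merged-event definitions: feature -> list of (event name, property filters)
FEATURE_DEFINITIONS = {
    "Segments": [
        ("segment_viewed", {}),
        ("segment_starred", {}),
        ("leaderboard_viewed", {"board": "top_10"}),
    ],
    "Record activity": [
        ("recording_started", {}),
    ],
}

def matches(event: dict, name: str, filters: dict) -> bool:
    """True if the event has the given name and every filter property matches."""
    props = event.get("props", {})
    return event["name"] == name and all(props.get(k) == v for k, v in filters.items())

def tag_feature(event: dict) -> Optional[str]:
    """Return the first feature whose rules match the event, or None if unmapped."""
    for feature, rules in FEATURE_DEFINITIONS.items():
        if any(matches(event, name, filters) for name, filters in rules):
            return feature
    return None

print(tag_feature({"name": "leaderboard_viewed", "props": {"board": "top_10"}}))  # Segments
print(tag_feature({"name": "leaderboard_viewed", "props": {"board": "weekly"}}))  # None
```

Because the sketch returns the first match, it assumes each raw action belongs to one feature; actions that legitimately serve several features are better handled at the cluster level.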

Another way to run this analysis is to use a common screen view event with the Grouped by option to split views by screen (not actual data; using an example for reference):

View of merged multiple user actions

If your tracking is cleaner, you might not need to create a custom event for every feature. You can just use the Grouped by option on Any Active Event to get a similar distribution (see a demo chart example).

Step 2. Set the baseline for active usage for each feature

Once your features are created in Amplitude, the next step is to set baselines.

Remember, not every user can access all features, especially with paywalls or localizations. That’s why it’s important to allocate only relevant user activity rather than using all traffic for your top-used features analysis.

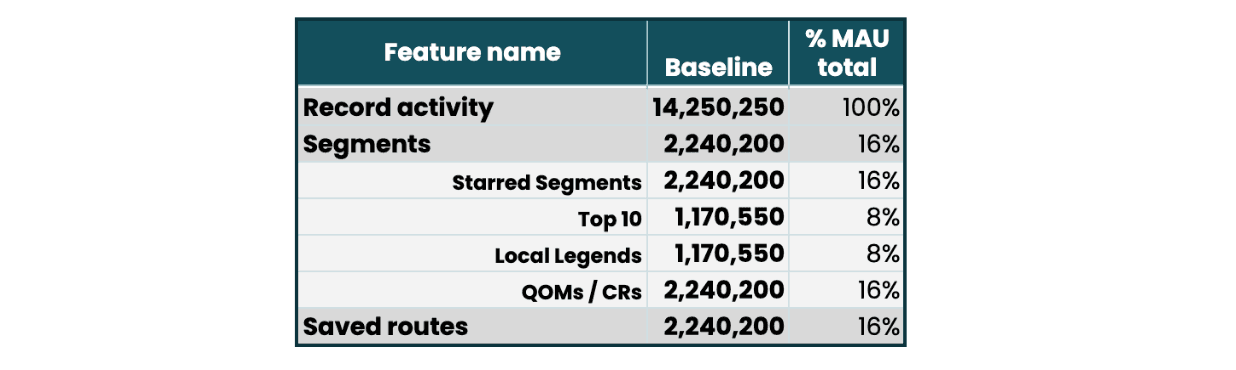

Within the Feature Matrix, you can set the initial expected baseline: the maximum number of active users with access to each feature. Then, calculate the percentage distribution of monthly active users across your features. For example, the Strava app has both free and paid features, and only premium users, a small percentage of total MAU, are expected to access paywalled features. So, the distribution of active users in my analysis could look something like this (using estimates, not actual data):

Segmenting active users based on feature access

In this Strava example, some features are nested under the core Segments feature because you need to click on Segments to access other features (such as Starred Segments, Top 10, Local Legends, and QOMs/CRs).

- Baseline refers to the total number of Active users in your app who can access the feature in a month.

- % MAU of total shows the distribution of MAU volume across your app.

You can use the Segmentation chart and apply the Active users filter to get the Baseline, then click on Active % to get the % MAU of total (see a demo chart example).
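Outside Amplitude, the same two numbers can be computed from a list of monthly active users and their access attributes. This Python sketch assumes each MAU record carries hypothetical is_paid and language fields; swap in whatever attributes actually gate access in your product.

```python
from typing import Optional

def baseline(mau: list[dict], requires_paid: bool = False,
             languages: Optional[set[str]] = None) -> int:
    """Number of monthly active users who can access a feature."""
    return sum(
        1
        for user in mau
        if (not requires_paid or user["is_paid"])
        and (languages is None or user["language"] in languages)
    )

def pct_mau_total(mau: list[dict], **access) -> float:
    """Baseline as a % of all monthly active users."""
    return 100 * baseline(mau, **access) / len(mau)

# Hypothetical MAU list
mau = [
    {"user_id": "a", "is_paid": True, "language": "en"},
    {"user_id": "b", "is_paid": False, "language": "en"},
    {"user_id": "c", "is_paid": False, "language": "de"},
    {"user_id": "d", "is_paid": True, "language": "de"},
]
print(baseline(mau, requires_paid=True))                         # 2
print(pct_mau_total(mau, requires_paid=True, languages={"en"}))  # 25.0
```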

Step 3. Locate “transitional” traffic in Amplitude

One user action can touch multiple features in your app. For example, users can open the Newsfeed to post a story rather than to read updates, or reach their profile screen via the homepage instead of the menu.

At MyFitnessPal, a Dashboard view can be a path to log a meal. In other words, users go to the Dashboard page simply to reach the button to log food, without interacting with the Dashboard itself. However, total Dashboard views or the Dashboard DAU metric won’t distinguish this “transitional” usage from real engagement. To accurately measure the number of users interested in interacting with the Dashboard, we should exclude the “passing by” traffic.
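One simple heuristic for flagging "passing by" traffic is to look at what a user does right after a Dashboard view: if the very next event opens the food log, treat that view as transitional. The Python sketch below assumes time-ordered per-session event names and hypothetical event names (dashboard_viewed, food_log_opened); a stricter version might also require the next event to occur within a few seconds.

```python
DASHBOARD_VIEW = "dashboard_viewed"         # hypothetical event name
PASS_THROUGH_TARGETS = {"food_log_opened"}  # screens users pass through the Dashboard to reach

def split_dashboard_views(session: list[str]) -> tuple[int, int]:
    """Return (total, transitional) Dashboard views for one time-ordered session."""
    total = transitional = 0
    # Pair each event with the one that follows it (None after the last event)
    for current, following in zip(session, session[1:] + [None]):
        if current == DASHBOARD_VIEW:
            total += 1
            if following in PASS_THROUGH_TARGETS:
                transitional += 1
    return total, transitional

session = [
    "dashboard_viewed", "food_log_opened",        # passes straight through
    "dashboard_viewed", "dashboard_card_tapped",  # interacts with the Dashboard
    "diary_viewed", "dashboard_viewed",           # session ends on the Dashboard
]
print(split_dashboard_views(session))  # (3, 1)
```

Subtracting transitional views from the total gives a rough count of Dashboard visits that were not just a path to logging food.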

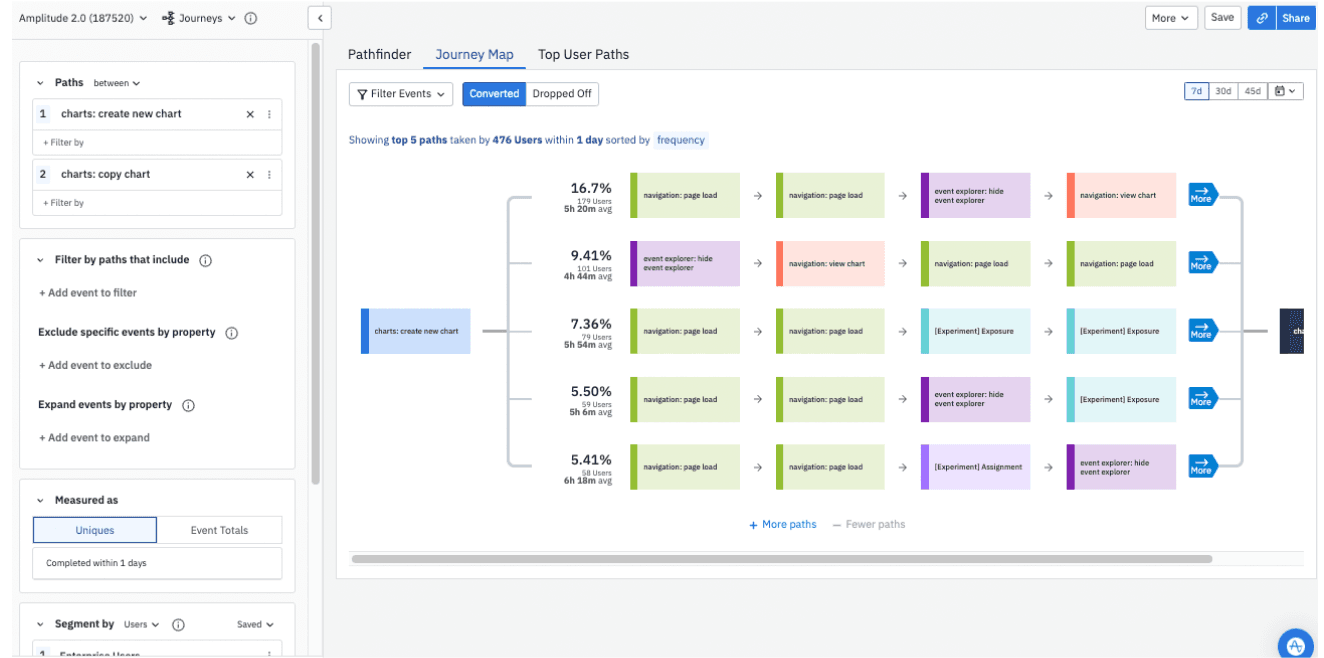

If you are using tools like Amplitude, one of the quick ways to locate “transitional” traffic is by using their Journey maps feature, which lets you see how users navigate between different screens and features:

View of Amplitude Journey Map

Journey maps are the best way to show which features in your app are simply gateways.

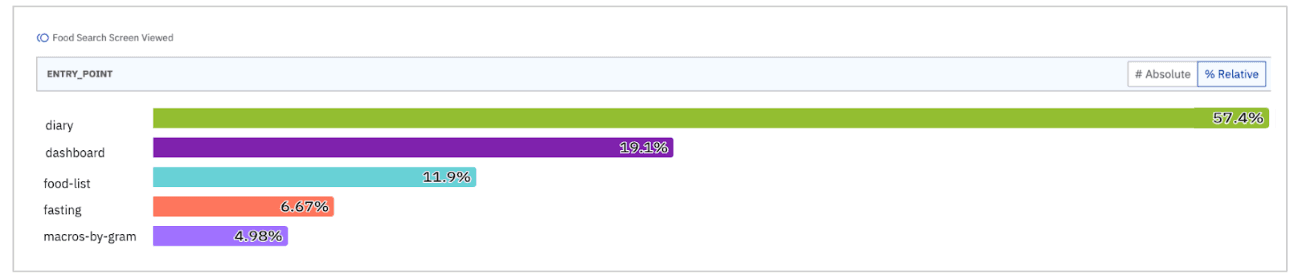

Another way to understand the user journey is to add an access_point or entry_point property to destination screens. For example, I want to see where users came from before reaching the Log Your Meal screen. Using Amplitude Segmentation, I can pick a Food Log Screen View event and group it by the entry_point property (not actual data; using an example for reference):

Using event data to segment user journey

This example demonstrates that over half of users came from the Diary feature and at least five features in the app direct users to the Food Log Search screen.
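If you export the screen view events, the same entry_point breakdown takes a few lines of Python. This sketch assumes each event is a dict with user_id and an optional entry_point property; it counts unique users per entry point, so a user who arrived from two places counts toward both and the shares can sum to more than 100%.

```python
from collections import defaultdict

def entry_point_share(views: list[dict]) -> dict[str, float]:
    """% of unique users who reached a screen from each entry_point, largest first."""
    users_by_entry: dict = defaultdict(set)
    all_users = set()
    for view in views:
        entry = view.get("entry_point") or "(none)"  # views without the property
        users_by_entry[entry].add(view["user_id"])
        all_users.add(view["user_id"])
    ranked = sorted(users_by_entry.items(), key=lambda item: len(item[1]), reverse=True)
    return {entry: 100 * len(ids) / len(all_users) for entry, ids in ranked}

# Hypothetical Food Log screen views
views = [
    {"user_id": "u1", "entry_point": "diary"},
    {"user_id": "u2", "entry_point": "diary"},
    {"user_id": "u2", "entry_point": "dashboard"},
    {"user_id": "u3", "entry_point": "diary"},
    {"user_id": "u4"},
]
print(entry_point_share(views))
# {'diary': 75.0, 'dashboard': 25.0, '(none)': 25.0}
```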

❗Even if a portion of users navigates from the Dashboard to the Food Search screen, it doesn’t imply that the Dashboard doesn’t provide value for this “transitional” traffic. The feature might serve as a reminder to log a meal or encourage users to activate the product.

Step 4. Getting product usage metrics for analysis

In Amplitude, you can measure the feature usage across different product metrics, such as DAU, total traffic, retention, average events per user, and more.

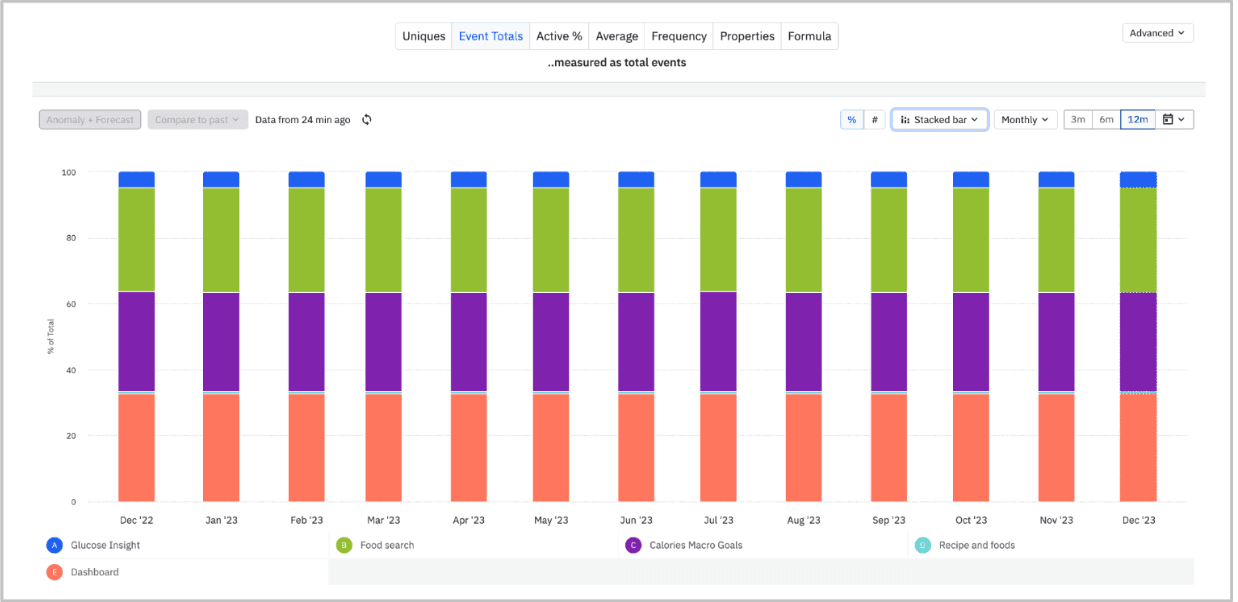

For example, to get total feature usage within an event segmentation chart, click → Event Totals → Stacked bar → Pick % instead of # (See a demo chart example). I set the Monthly view in the chart below to get MAU usage. This returns a nice distribution of feature usage for every month in 2022 (not actual data; using as an example for reference):

Feature usage by month
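For a database export instead of Amplitude, a rough equivalent of the "%" stacked view is each feature's share of a month's total feature events. This Python sketch assumes (month, feature) pairs as input and is not a reproduction of Amplitude's exact calculation.

```python
from collections import Counter, defaultdict

def monthly_feature_share(events: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Each feature's % of a month's total feature events, per month.

    events: one (month, feature) pair per interaction, month as "YYYY-MM".
    """
    counts: dict = defaultdict(Counter)
    for month, feature in events:
        counts[month][feature] += 1
    shares = {}
    for month in sorted(counts):
        total = sum(counts[month].values())
        shares[month] = {
            feature: round(100 * n / total, 1) for feature, n in counts[month].items()
        }
    return shares

# Hypothetical interactions for two months
events = (
    [("2022-01", "Diary")] * 3 + [("2022-01", "Dashboard")]
    + [("2022-02", "Diary")] * 2 + [("2022-02", "Dashboard")] * 2
)
print(monthly_feature_share(events))
# {'2022-01': {'Diary': 75.0, 'Dashboard': 25.0}, '2022-02': {'Diary': 50.0, 'Dashboard': 50.0}}
```

Counting unique users per feature and month instead of events would turn this into a MAU-based distribution.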

Then, you can do the same for other metrics:

- Retention: Build a retention analysis

- Conversions: Funnel Analysis

- User sessions: The User Sessions chart: Track engagement frequency and duration

Pay attention to:

- Whether all MAU across your app have the same access to your features. If you have a paywall or paid features, you need to split the usage into two charts, one for free and one for paid users, and pull data for each.

- If only a portion of your MAU can access a feature (e.g., only English-speaking users, because not every feature is localized), apply the appropriate filters so you do not underestimate usage, e.g., within Segment by, set Language = English.

Putting it all together and making sense of the data

The analysis below focuses on understanding user interaction with your product, measured via the frequency and depth of user engagement.

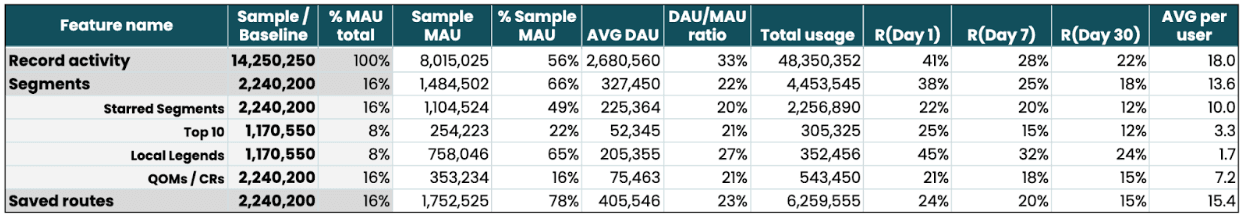

Once I have the Feature Matrix ready and the data pulled from Amplitude or a database, I use the following view to read the data (using Strava features for reference; not actual data):

Analyze user interaction

- Baseline/Sample: number of registered active users who have access to this feature. Use the Feature Matrix to estimate the total sample.

- % MAU total: % of users out of total MAU who have access to this feature.

- Sample MAU: Unique active users who interacted with a feature at least once a month.

- % Sample MAU: % of Baseline/Sample users who interacted with a feature at least once a month (Sample MAU divided by Baseline).

- AVG DAU: average daily number of unique users who interacted with a feature. You will use this metric to calculate the average usage per user and the DAU/MAU ratio.

- DAU/MAU ratio: share of interacting MAU (Sample MAU) that used the feature on any given day.

- Total usage: total user interactions with a feature in a month.

- R(Day 1), R(Day 7), and R(Day 30): retention as the % of users who returned to use the feature again on Day 1, Day 7, or Day 30.

- AVG per user: the average number of times a user interacted with a feature in a given month.
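As a rough offline counterpart to these definitions, the Python sketch below computes them for one feature from an export of (user_id, day) interaction pairs. Several choices are assumptions rather than the article's method: DAU/MAU divides AVG DAU by Sample MAU, AVG DAU averages over every calendar day of the month, and R(Day N) uses an exact-day definition measured from each user's first use.

```python
from collections import defaultdict
from datetime import date, timedelta

def feature_metrics(uses: list[tuple[str, date]], baseline: int,
                    mau_total: int, days_in_month: int) -> dict:
    """Monthly engagement metrics for one feature.

    uses: one (user_id, day) pair per interaction with the feature in the month.
    """
    daily_users: dict = defaultdict(set)
    for user_id, day in uses:
        daily_users[day].add(user_id)
    sample_mau = len({user_id for user_id, _ in uses})
    # Average over every day of the month, counting days with no usage as zero
    avg_dau = sum(len(ids) for ids in daily_users.values()) / days_in_month
    return {
        "pct_mau_total": 100 * baseline / mau_total,
        "sample_mau": sample_mau,
        "pct_sample_mau": 100 * sample_mau / baseline,
        "avg_dau": avg_dau,
        "dau_mau": avg_dau / sample_mau if sample_mau else 0.0,
        "total_usage": len(uses),
        "avg_per_user": len(uses) / sample_mau if sample_mau else 0.0,
    }

def retention_day_n(uses: list[tuple[str, date]], n: int, as_of: date) -> float:
    """% of users who used the feature again exactly n days after their first use.

    Users whose Day n falls after as_of are left out, since it has not happened yet.
    """
    days_by_user: dict = defaultdict(set)
    for user_id, day in uses:
        days_by_user[user_id].add(day)
    cohort = [days for days in days_by_user.values()
              if min(days) + timedelta(days=n) <= as_of]
    if not cohort:
        return 0.0
    retained = sum(1 for days in cohort if min(days) + timedelta(days=n) in days)
    return 100 * retained / len(cohort)

# Hypothetical interactions with one feature in March 2022
uses = [("u1", date(2022, 3, 1)), ("u1", date(2022, 3, 1)), ("u1", date(2022, 3, 2)),
        ("u2", date(2022, 3, 1)), ("u3", date(2022, 3, 8))]
m = feature_metrics(uses, baseline=10, mau_total=40, days_in_month=31)
print(m["sample_mau"], m["pct_sample_mau"], m["total_usage"])       # 3 30.0 5
print(round(retention_day_n(uses, 1, as_of=date(2022, 3, 31)), 1))  # 33.3
```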

In this example, the Saved routes feature has the highest usage (% Sample MAU) despite fewer people having access to it (as it is a Premium feature).

Because you need to click on Segments to access other features (such as Starred Segments, Top 10, Local Legends, and QOMs/CRs), Segments is expected to have the highest total usage and MAU among all paid features. However, once we break Segments down into its sub-features, we see that users favor Starred Segments and Local Legends over the others.

Local Legends has low total usage because, as a paid sub-feature, fewer users can access it; however, it drives the highest user retention across R(Day 1), R(Day 7), and R(Day 30). Its share of MAU using it (% Sample MAU) and its DAU/MAU ratio are also the highest, so it clearly stands out with the strongest engagement.

The Record activity is the top feature in traffic volume (with high Total usage and average DAU), but a smaller percentage of MAUs choose to use it (only 56%). What's interesting about the Record activity feature is that the average usage per user is the highest. This suggests the presence of either extreme outliers or a segment of power users who particularly love this feature. This also can be a tricky case of low DAU usage (Read more: A Deep Dive Into User Engagement Through Tricky Averages). More analysis is needed to break that average down.
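One way to start breaking down that average is to compare the mean with the median and check how much of the total the heaviest users account for. The Python sketch below assumes a list of per-user monthly interaction counts for a single feature; the 10% cut-off for "heaviest users" is an arbitrary illustrative choice.

```python
from statistics import mean, median

def average_breakdown(uses_per_user: list[int], top_share: float = 0.1) -> dict:
    """Compare mean vs. median usage and how much usage the heaviest users drive."""
    if not uses_per_user or sum(uses_per_user) == 0:
        raise ValueError("need at least one user with non-zero usage")
    ordered = sorted(uses_per_user, reverse=True)
    k = max(1, round(len(ordered) * top_share))  # size of the heaviest-user group
    return {
        "mean": mean(ordered),
        "median": median(ordered),
        "top_pct_of_usage": 100 * sum(ordered[:k]) / sum(ordered),
    }

# Hypothetical monthly usage counts for ten users of one feature
print(average_breakdown([1, 1, 2, 2, 3, 3, 4, 4, 5, 75]))
```

Here the mean is 10 while the median is 3, and a single user drives 75% of all usage, which is the outlier pattern that a high average can hide.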

Depending on the question you want to answer, you might consider adding additional metrics such as the average days per month users interacted with a feature, weekly and monthly retention, % of users who did recurring or repeated interaction, % of paid MAU, and supplement this view with last year’s feature usage report.

Takeaways

- Feature analysis is unique to your product. It’s important to track user actions mapped to your product features. I find the feature matrix and the feature catalog or taxonomy to be very helpful documentation to consolidate product features with user flows.

- Companies continuously iterate by moving paywalls, expanding localizations, testing new products on only segments of users, or rolling out features to specific app versions. Since not all MAUs will have equal access to all the features, analysts begin by setting the expected baseline of users who can access a particular feature.

- After defining your baselines, it’s important to identify transitional or gateway features. These features naturally have high usage and retention but may not necessarily offer the same value as smaller sub-features.

- For your analysis, you might consider excluding features that don’t require proactive user engagement, such as ML-based recommenders, notifications, in-app screen mode (e.g., dark-screen mode), or no-ads experience.

- For feature analysis, use product metrics that indicate both the frequency and depth of user engagement. Look at what % of total MAUs have access to the feature (Sample, % MAU total), then what % of users choose to interact with it (Sample MAU, % Sample MAU, AVG DAU), how often (DAU/MAU ratio), how much (Total usage, AVG per user) and how many users are likely to come back (R(Day 1), R(Day 7), and R(Day 30)).

Big thank you to Paul Levchuk, Stu Kim-Brown, Sonia Wong, Timo Dechau, MyFitnessPal, Strava, and Amplitude teams for their valuable feedback on a draft of this post!

To read more about user engagement analysis and studies, subscribe to my weekly newsletter about data science and product analytics: Data Analysis Journal.


Olga Berezovsky

Senior Manager, Data Science and Analytics, MyFitnessPal

Olga Berezovsky is an analyst and data scientist. She was born in Ukraine and is based in San Francisco. She is the head of a product analytics team at MyFitnessPal and previously worked at Change.org, Microsoft, First Republic Bank, and quite a few big and small startups. Olga is also the author of the beloved analytics newsletter, Data Analysis Journal. A rare mix of practitioner insights along with thought leadership, Olga's newsletter brings a fresh perspective to the age-old problems of measuring retention and deeply understanding user behavior. Read more from Olga here: https://dataanalysis.substack.com/
