How SafetyCulture Cut 1,800 Events Down to 700

A data governance case study

It started with a single alert: 280 new event types created overnight. That was the moment Rinky Devarapally knew her team’s data tracking had spiraled out of control.

For today’s How I Amplitude, Rinky Devarapally, Data PM at SafetyCulture, takes us through how her team cut their events by almost two-thirds. It took a combination of technical solutions, cultural change, and more than a tiny dose of grit to get there.

Let’s dive into Rinky’s story.

“Our data team had zero control over what was entering our Amplitude environment, yet we were still responsible for maintaining and making sense of it all.”


The problem: What caused our event volume to explode?

At SafetyCulture, our wake-up call came in the form of an incident. Well, several incidents, but one big one in particular—when Amplitude started to glitch. We received an overage fee too, so our VP of Data reached out to our data engineer to see what was happening.

The culprit: Engineers had been sending performance-related data to Amplitude. Almost 280 events came through overnight because of a PR release. It was a small thing that engineers probably didn’t think much about, but it had a massive impact on our data warehouse and Amplitude.

This incident revealed a deeper preexisting problem—we had accumulated over 1,800 events and were now nearing 2,000. Many of them were poorly named, poorly documented, or both. Some events had cryptic names or hex codes that made their purpose unintelligible. The volume was causing latency issues and unexpected costs.

So when we saw the event count jump by 280 overnight (roughly 15% of a catalog of about 1,800), it was quite a shock. An extra one or two events might have been the straw that broke the camel’s back for our system; this was 280 straws.

Our tech stack

Before I dive into what went wrong, it’ll help to give you a quick lay of the land of our tech stack.

At SafetyCulture, we rely on Amplitude for product analytics, but that’s just one piece of our data infrastructure. We use Segment for event orchestration, Redshift for our data warehouse, and Tableau for visualization. Under the hood, we have dbt handling our data modeling, S3 for our data lake, and Databricks for more advanced analytics needs.

But here’s where things got messy. Events were flowing into Amplitude through multiple paths. Some came through Segment (our CDP), while others were sent directly from our front end to Amplitude. This meant our data team had zero control over what was entering our Amplitude environment, yet we were still responsible for maintaining and making sense of it all.

The result? Four major problems:

- No standardization across our taxonomy

- Events with cryptic names (including literal hex codes) that nobody could decipher

- No process for event orchestration—engineers could release events whenever they had availability

- Zero documentation at any stage of the process

How we solved the problem

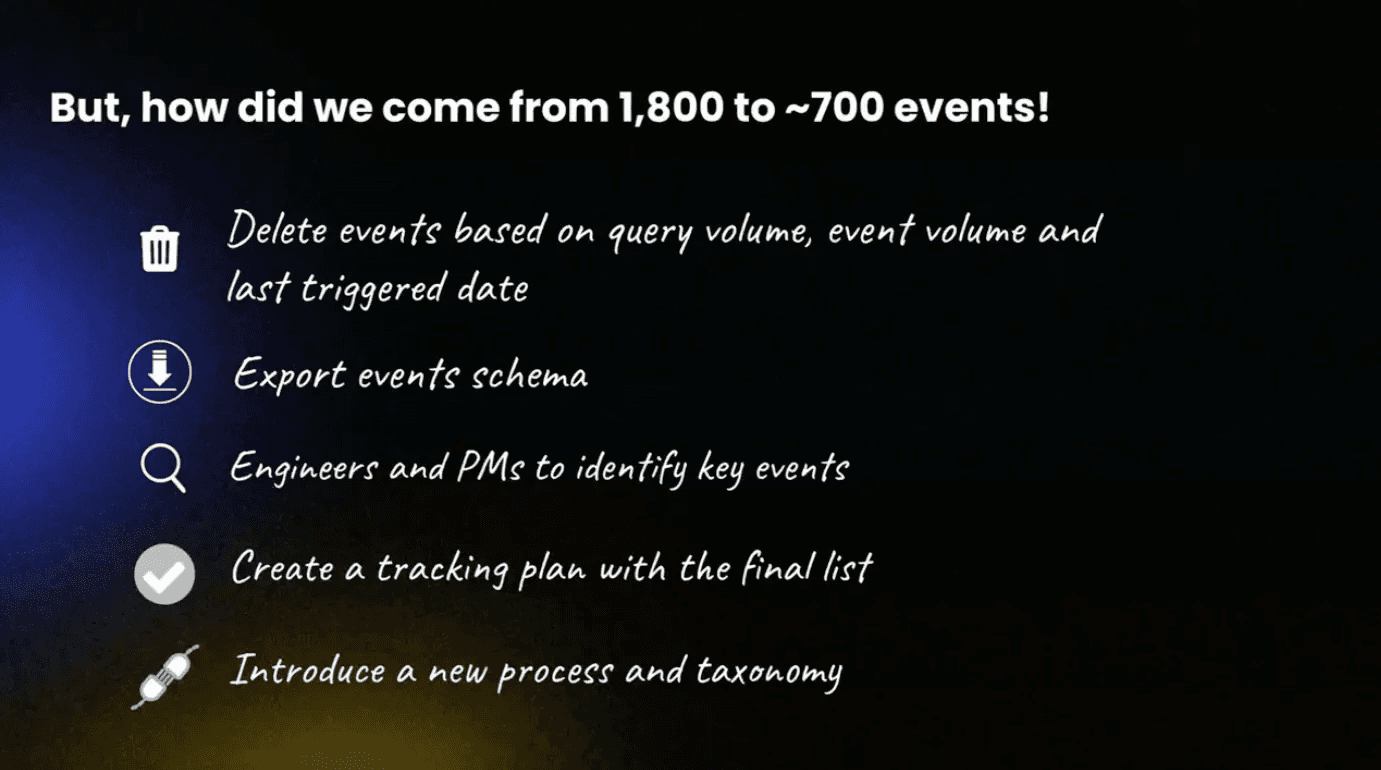

Step one: Delete unused events

First, we identified events that weren’t being used recently or often by looking at the query volume, event volume, and last triggered date metadata available to us in Amplitude.
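To make this triage concrete, here is a minimal sketch in Python. It assumes the usage metadata has already been exported into simple records. The field names (`query_count`, `event_volume`, `last_seen`), the thresholds, and the sample rows are all hypothetical and do not reflect Amplitude's actual export format or the team's real cutoffs.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class EventStats:
    name: str
    query_count: int   # how often the event was queried (hypothetical field)
    event_volume: int  # how many times the event fired (hypothetical field)
    last_seen: date    # last triggered date (hypothetical field)


def find_deletion_candidates(events, today, max_idle_days=90,
                             min_queries=1, min_volume=100):
    """Return names of events that look unused by any of the three signals."""
    cutoff = today - timedelta(days=max_idle_days)
    candidates = []
    for e in events:
        stale = e.last_seen < cutoff             # not triggered recently
        unqueried = e.query_count < min_queries  # nobody charts it
        low_volume = e.event_volume < min_volume # barely fires
        if stale or unqueried or low_volume:
            candidates.append(e.name)
    return candidates


# Made-up sample rows for illustration only.
sample = [
    EventStats("Inspection Completed", 120, 50_000, date(2024, 5, 30)),
    EventStats("0x3fa2", 0, 12, date(2023, 1, 4)),
    EventStats("perf_render_ms", 0, 900_000, date(2024, 5, 31)),
]
candidates = find_deletion_candidates(sample, today=date(2024, 6, 1))
print(candidates)
```

A list like this is only a starting point for review: a high-volume event that nobody queries may still feed a downstream job, so a person should confirm each candidate before anything is deleted.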

We also worked directly with the Amplitude team (huge thank you to them!) on a deep clean that brought our event count down from 1,860 to around 1,100.

Step two: Export and analyze

We exported the full event schema to get a complete picture of what we were working with. This became our master document for the cleanup process.

Step three: Identify key events

Next, we went team by team, working with engineers and product managers to identify which remaining events were actually crucial for their work. I won’t sugar-coat it—this was tedious. You have to chase people down and really push for answers, but it’s essential for understanding what’s truly valuable.

Step four: Create the tracking plan

With our final list of validated events, we created a tracking plan in Segment. This became our single source of truth for what events should be allowed through.

Step five: Implement new process and taxonomy

This is where the real transformation happened, though it could not have started without first doing everything else. To go fast, you must first go slow.

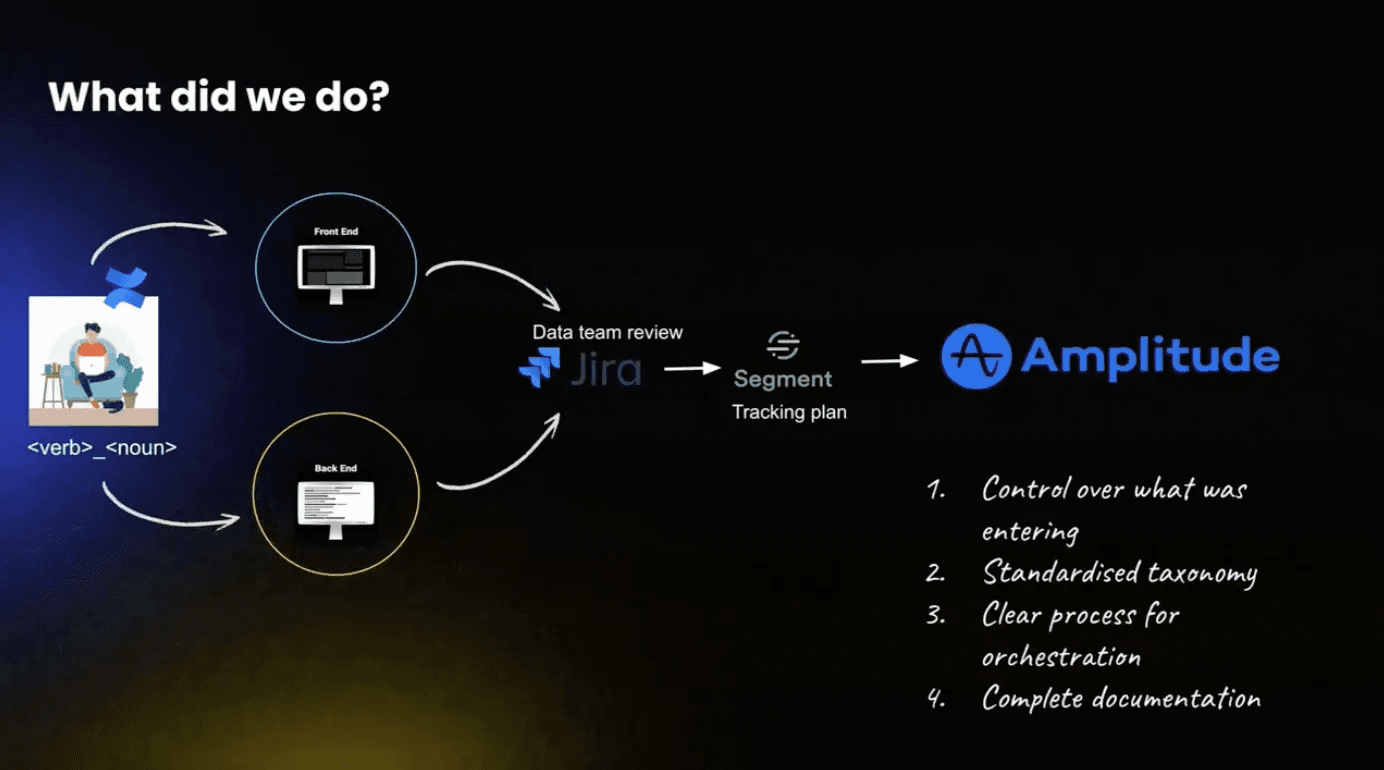

The new process looks like this:

- Engineers can create events from either frontend or backend systems

- But before any event reaches Amplitude, it must:

  - Go through a data team review in Jira

  - Pass through Segment’s tracking plan

  - Meet our new taxonomy standards
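The article does not spell out the taxonomy rules, so the following Python sketch only illustrates what an automated naming check could look like during review. It assumes a hypothetical "Object Action" Title Case convention and flags hex-like names of the kind described earlier. Both patterns are assumptions, not SafetyCulture's actual standard.

```python
import re

# Hypothetical convention: two or more Title Case words, e.g. "Inspection Completed".
NAME_PATTERN = re.compile(r"^[A-Z][a-z]+( [A-Z][a-z]+)+$")
# Names that are only a hex literal or a run of four or more hex digits.
HEX_PATTERN = re.compile(r"^(0x)?[0-9a-fA-F]{4,}$")


def check_event_name(name):
    """Return a list of problems; an empty list means the name passes."""
    problems = []
    if HEX_PATTERN.match(name):
        problems.append("looks like a hex code")
    if not NAME_PATTERN.match(name):
        problems.append("not in 'Object Action' Title Case form")
    return problems


for candidate in ["Inspection Completed", "0x3fa2", "perf_render_ms"]:
    print(candidate, check_event_name(candidate))
```

Returning a list of problems rather than a boolean lets a reviewer paste the reasons straight into the review ticket.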

This new system gives us four key benefits:

- Complete control over what enters our analytics environment

- A standardized taxonomy that everyone follows

- A clear process for event orchestration

- Proper documentation at every step

The game-changer was using Segment’s tracking plan as an enforcement point. Any event not in the tracking plan gets automatically blocked and diverted to a separate warehouse. This creates a natural feedback loop: teams quickly learn to follow the process when they see their events aren’t showing up in Amplitude.
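The allowlist idea behind this enforcement point can be sketched in a few lines of plain Python. This is a conceptual illustration only, not Segment's API or configuration. `TRACKING_PLAN`, `route_events`, and the sample events are hypothetical names invented for the example.

```python
# Hypothetical tracking plan: the set of approved event names.
TRACKING_PLAN = {"Inspection Completed", "Template Created"}


def route_events(events, tracking_plan):
    """Split events into those forwarded downstream and those diverted."""
    forwarded, diverted = [], []
    for event in events:
        if event["name"] in tracking_plan:
            forwarded.append(event)
        else:
            # Unplanned events are kept for review instead of being dropped.
            diverted.append(event)
    return forwarded, diverted


# Made-up incoming events for illustration only.
incoming = [
    {"name": "Inspection Completed", "user_id": "u1"},
    {"name": "perf_render_ms", "user_id": "u2"},
]
forwarded, diverted = route_events(incoming, TRACKING_PLAN)
print([e["name"] for e in forwarded])
print([e["name"] for e in diverted])
```

Diverting rather than discarding is the important design choice: nothing is lost, so an event that turns out to be legitimate can be added to the plan after review.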

Is it more time-consuming up front? Yes. But that extra time investment prevents the much larger time sink of dealing with thousands of poorly tracked events down the road.

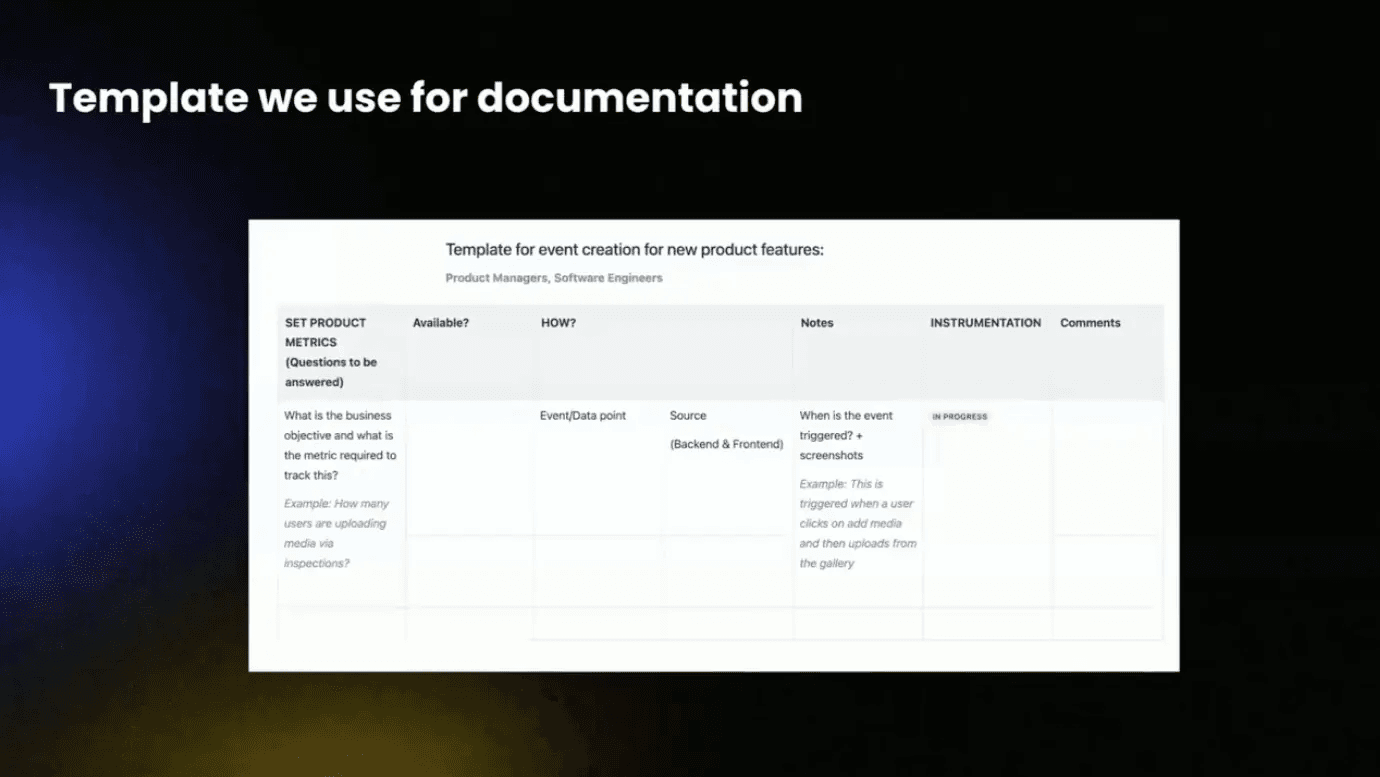

In case helpful, here’s the template we use for documentation:

Key takeaways for implementing data governance

- Start with the questions: Start with the product metrics and questions you want to answer rather than what you want to track. If there’s no question associated with what we’re tracking, there’s no point in tracking it.

- Focus on events first: While property governance is also important, I recommend starting with event governance if resources are limited. Properties can be a lot to handle, so tackle events first, then look at properties after.

- Make documentation accessible: We keep detailed documentation in Confluence rather than Amplitude because it’s more accessible to the broader organization and can be linked to PRDs and engineering documentation.

- Enforce standards through tools: Using Segment’s tracking plan functionality to block non-compliant events creates a technical enforcement mechanism for governance policies.

- Invest in cultural change: While it takes time, building a culture of data governance is crucial for long-term success. This means being willing to chase down teams initially while building understanding of the process’s value.


Rinky Devarapally

Data Product Manager, SafetyCulture

Rinky Devarapally is currently a Data Product Manager at SafetyCulture, where she previously served as Product Analytics Lead and Senior Product Analyst. She's been using Amplitude for over three years and is passionate about helping teams build strong data governance practices that scale.
