What A/B Testing Calculators Get Wrong

Experimenters need to see a range of MDEs over time, not a static sample size.

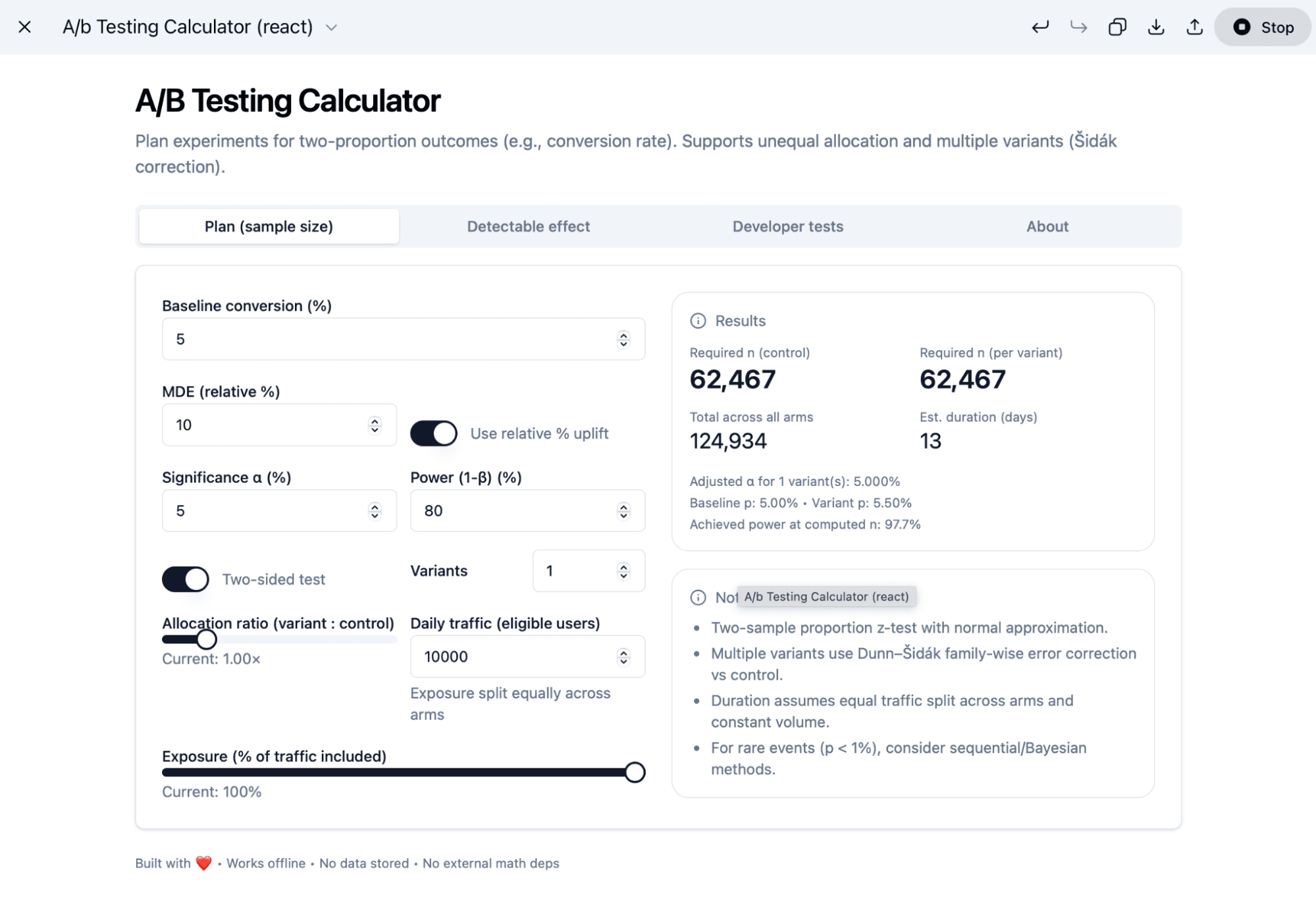

Search the web for A/B testing calculators, and you'll find a slew of free tools that all do the same thing—calculate sample size. They all look roughly like this:

- Inputs for conversion rate, minimum detectable effect (MDE), and statistical significance level

- Output: the sample size needed per variation
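For reference, here is a minimal sketch of the calculation these tools typically perform. It assumes a two-sided z-test on two proportions with the common normal approximation. Individual calculators may differ in the details (pooled variance, one-sided tests), and the function and parameter names are illustrative rather than taken from any particular tool.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation (two-sided, two-proportion z-test).

    baseline_rate: control conversion rate, e.g. 0.10 for 10%
    relative_mde:  smallest relative lift to detect, e.g. 0.10 for +10%
    alpha, power:  assumed defaults of 0.05 and 0.80
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)

    # Critical values of the standard normal distribution.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    # Sum of the per-arm Bernoulli variances (unpooled approximation).
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)

    return ceil((z_alpha + z_beta) ** 2 * variance_sum / (p2 - p1) ** 2)
```

Note what the function returns: one number. It says nothing about how long it takes to collect that much traffic.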

In fact, the focus on sample size is so common that you can even ask ChatGPT to build an A/B testing calculator for you, and it’ll come up with the same formula. Here’s an A/B testing calculator I built in one shot with ChatGPT 5:

And because these calculators are so widespread, it’s easy to ignore a simple truth about them: they’re fundamentally misaligned with how experimenters should be working. They fail to prioritize constraints, and they don’t focus on the actual job to be done for the person running the test. So why do we keep using them?

I believe the industry is overlooking what product managers and experimenters truly need: not a sample size calculator with a single answer, but an MDE calculator with a range of results over time.

Building with constraints

The basic idea with today’s calculators is that you adjust a set of input variables, and the calculator outputs the sample size you have to hit for your experiment to render usable results. Those variables are MDE, conversion rate, statistical significance, and power.

Off the bat, though, it doesn’t make sense to group MDE with the rest of your inputs because it’s a flexible metric—you can change your MDE target if you want. The others are more like control variables or constraints for your experiment:

- Statistical significance and power follow industry and company standards, almost always a 95% confidence level (a significance level of 0.05) and 80% power.

- Conversion rate is fixed, either as part of your experiment design or just with the results you actually get. You can change the time frame over which you’re looking at your goal metric and make sure you’re removing outliers, but for the most part, it doesn’t change.

And if we look at sample size as the output variable, we find more constraints:

- Sample size is a function of traffic rate, number of variants, amount of traffic exposed, and time.

- The overall traffic rate is independent of the experiment. That makes it a constraint rather than a dial to turn.

- The number of variants and exposure amount can reduce sample size, but they can’t increase it past the overall traffic. They’re constraints imposed by the experiment design.

To emphasize the point: you cannot magically will more traffic to your application to make the test go faster. The only part of sample size you can really vary is time.
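The relationship in the list above can be sketched in a few lines. This is a simplification that assumes steady daily traffic and an even split across variants. The function name and its parameters are hypothetical, and `n_variants` counts every arm, including control.

```python
def sample_per_variant(daily_traffic, exposure, n_variants, days):
    """Users collected in each variant after `days` of running the test.

    daily_traffic: eligible users per day (a constraint, not a dial)
    exposure:      share of traffic in the experiment, between 0 and 1
    n_variants:    number of arms, including control (assumed even split)
    days:          how long the experiment has been running
    """
    if not 0 < exposure <= 1:
        raise ValueError("exposure must be in (0, 1]")
    if n_variants < 2:
        raise ValueError("an experiment needs at least two variants")
    return daily_traffic * exposure * days / n_variants


# Example: 10,000 users/day, 50% exposed, control plus one treatment, two weeks.
two_week_sample = sample_per_variant(10_000, 0.5, 2, 14)
```

With traffic, exposure, and variant count pinned down by the business and the experiment design, `days` is the only argument left to change.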

It’d make the most sense to log your constraints in their own part of the calculator as control variables. And given those constraints, the output of the calculator would be the relationship between the two variables you have control over: MDE as a function of time.
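As a rough sketch of what that output could look like, the code below treats the constraints as fixed inputs and returns the detectable relative lift for each week of runtime. It assumes a two-sided z-test on proportions, steady traffic, an even split, and the simplification that both arms share the baseline variance. All names are illustrative, not part of any existing tool.

```python
from math import sqrt
from statistics import NormalDist


def relative_mde(baseline_rate, n_per_variant, alpha=0.05, power=0.80):
    """Approximate relative lift detectable with n_per_variant users per arm."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    # Standard error of the difference between two arms, using the
    # baseline variance for both (a simplifying assumption).
    se = sqrt(2 * baseline_rate * (1 - baseline_rate) / n_per_variant)
    return z * se / baseline_rate


def mde_by_week(baseline_rate, daily_traffic, exposure, n_variants, max_weeks):
    """Return (week, relative MDE) pairs for each week up to max_weeks."""
    table = []
    for week in range(1, max_weeks + 1):
        n = daily_traffic * exposure * 7 * week / n_variants
        table.append((week, relative_mde(baseline_rate, n)))
    return table


if __name__ == "__main__":
    # Hypothetical inputs: 10% baseline, 10,000 users/day, full exposure, two arms.
    for week, mde in mde_by_week(0.10, 10_000, 1.0, 2, 6):
        print(f"week {week}: detectable relative lift of about {mde:.1%}")
```

Because the MDE shrinks with the square root of sample size, running four times as long only halves the detectable effect. A table of MDE by week makes that trade-off visible in a way a single sample size number does not.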

Setting expectations: The job to be done

Yes, I did swap the input/output of time and MDE on purpose. As long as the overall output of the calculator is the relationship between them, it’s an improvement. Representing MDE as a function of time is the superior approach, though, because it aligns with the jobs to be done for the person running the experiment, usually a product manager.

The process of experimentation begins with a well-founded hypothesis. “By changing the way checkout works, we believe we can improve 7-day conversion to purchase.” In that statement, we have the feature bet to be tested and the goal metric.

The next step is to share this plan with leadership. Whether it’s pre- or post-launch, sharing the hypothesis always elicits the same question: “How long will the experiment take?” Or perhaps it’s phrased in a similar way: “When do you expect to know the results?”

The job of the product manager in this exact situation is to set expectations. While there are times we can conclude experiments early, there should always be an answer regarding how long the experiment will run (assuming past traffic rates hold). A response of “4 weeks” may be a sufficient answer—but it may also draw its own response: “Does it have to take that long?”

With MDE as a function of time, the product manager knows precisely how to answer this question. We know the MDE we can detect at both shorter and longer timeframes, enabling a conversation about those trade-offs and whether we will sufficiently learn what we need to in that timeframe.

Moving towards duration

Product managers often face limitations imposed by their market and positioning, and face pressure to deliver clear answers about results. Current A/B testing calculators don’t align with the realities of those constraints and needs.

Instead, these tools should prioritize your constraints as the initial inputs, and should then offer a range of expected MDE results based on the experiment’s duration. This approach allows PMs to collaborate more effectively with leadership, as they gain a clear understanding of experiment timelines and potential outcomes, all while factoring in their inherent constraints.

This framing empowers PMs to succeed by setting realistic expectations. Unfortunately, too few tools on the market adopt this perspective, overlooking the critical job of expectation setting for product managers. We should demand better tools, starting with a focus on experiment duration.

Eric Metelka

Director of Product Management, Experimentation, Amplitude

Eric is Director of Product Management, Experimentation at Amplitude. Previously he was Head of Product at Eppo and created the experimentation practice at Cameo. He is focused on helping customers set up and scale their experimentation practices to increase their rate of learning and prove impact.
