The Early Days of Web Analytics

In the earliest days of the Internet, there was one relatively simple method to measure the traffic your website saw: use the server log.

This is the first post in a series on the Evolution of Web Analytics. In our second post in the series, Zynga Analytics at Its Peak, we discuss data practices and stories from a pioneer of data-driven products and analytics: Zynga.

Early analytics tools didn’t always work.

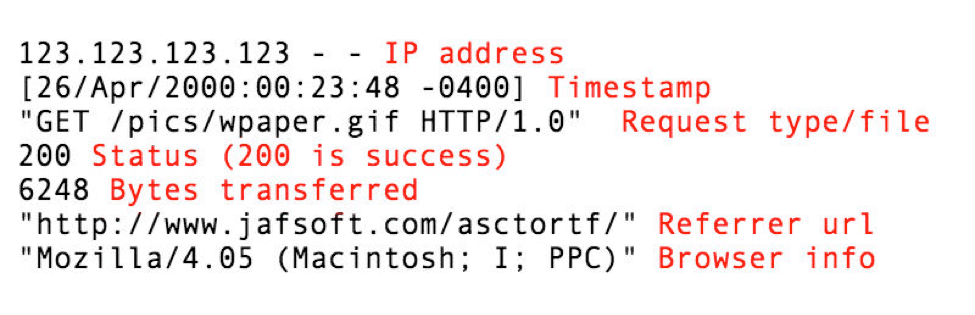

The ability to view the number of visitors to a website is a relatively new invention. If you happened to be one of the 600 websites on the World Wide Web in 1993 and you wanted to measure your website traffic, you dug into the server log.

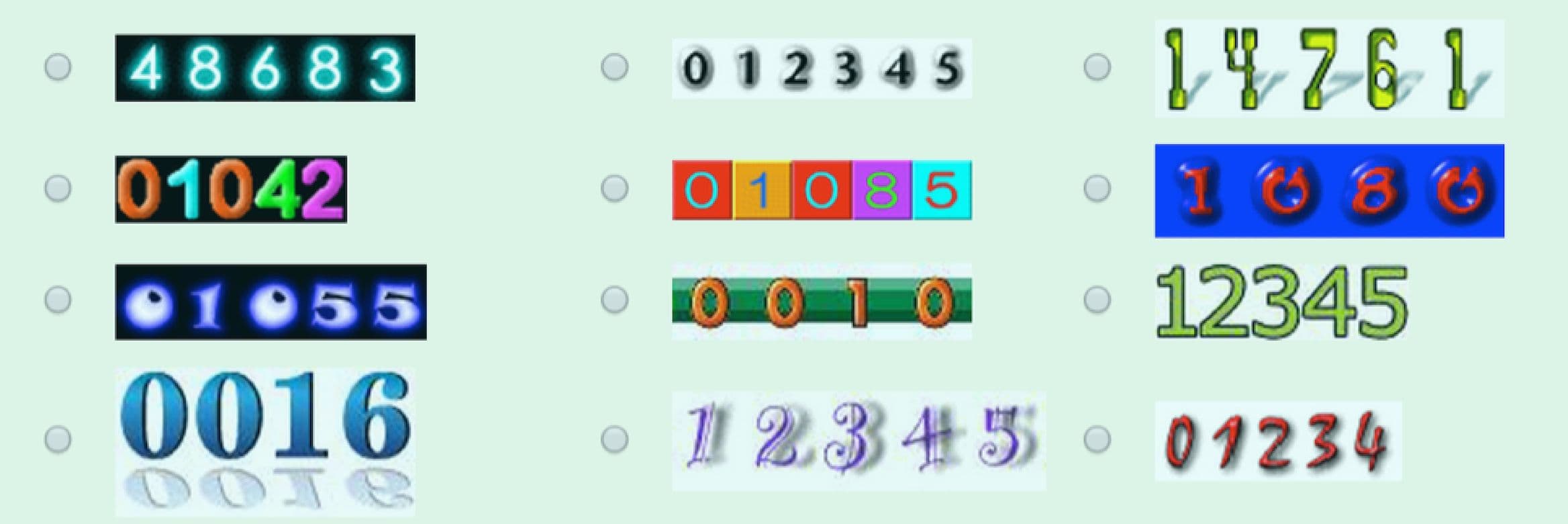

The web server log is, simply put, a record of every request the server handles. When someone visits your web address, the browser makes an HTTP (Hypertext Transfer Protocol) request for the files that make up your page, and the server records each request in its log. In it, you can see the visitor’s IP address, when the visitor landed on your site, the browser they used, and more details that are, well, pretty difficult to understand in aggregate.

Breakdown of a fictional line of server log via JafSoft.
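To make that structure concrete, here is a minimal Python sketch that splits one log line into named fields. It assumes the Apache/NCSA “combined” log format, one common layout among many; the sample line and its IP address are made up, and a real server may be configured to log different fields.

```python
import re
from datetime import datetime

# Apache/NCSA "combined" format: IP, identity, user, timestamp,
# request line, status code, response size, referrer, user agent.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_log_line(line):
    """Return a dict of fields from one combined-format line, or None if it doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    return entry

sample = (
    '203.0.113.7 - - [10/Oct/2000:13:55:36 -0700] "GET /index.html HTTP/1.0" '
    '200 2326 "http://example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"'
)
entry = parse_log_line(sample)
print(entry["ip"], entry["path"], entry["status"])  # 203.0.113.7 /index.html 200
```

Multiply this by thousands of lines per day and it is clear why reading raw logs by eye did not scale.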

Good luck to any non-computer scientist trying to make sense of thousands of lines of server logs. Yet these logs are still as relevant today as they were in the ’90s, with one crucial difference: the interface used to view the data. The advent of log-analysis interfaces changed everything and spawned a massive web-analytics industry. Let’s look back at the evolution of web analytics and how we got to where we are today.

A Very Brief Historical Time Line of the Internet & Web Analytics

The internet has evolved dramatically. It began as an idea for storing and exchanging data wirelessly, and it took almost a century to turn that idea into action. After the first successful dialogue, however, everything changed fast. In the span of just a few years, the internet went from something only computer scientists could use to a resource available to billions of people.

Early 1900s to 1960: Researchers begin experimenting with wireless data transmission.

The first known idea of the internet was conceived by Nikola Tesla early in the twentieth century. Four decades later, while the notion of “the internet” was still not fully formed, others, such as Paul Otlet and Vannevar Bush, began experimenting with ways to digitally store and access information.

In the 1960s, J.C.R. Licklider, from MIT, proposed the “Intergalactic Computer Network” and completed the first successful attempt at wireless data transfer. This was not the internet we know today, but a critical lead-up to shaping the online experience we all know and love.

1990: The internet is born.

The web as we know it today was created by Sir Tim Berners-Lee, who devised a way to request and read linked documents, written in HTML, over the internet. He called it the World Wide Web.

1993: The first rudimentary web-analytics solution is released.

~600 websites are online. Webtrends, known as one of the first web-analytics providers, goes to market. This was made possible largely by the development of log-file analysis in the few years after Berners-Lee invented the World Wide Web.

1995: The first log-file analysis program becomes available.

Stephen Turner creates Analog, the first log-file analyzer to show you the “usage patterns on your web server.” (source) In this year, Amazon.com, Craigslist, Match.com and eBay go live.

1996: More players in the web-analytics market emerge.

With Analog released just one year prior, the internet becomes accessible to people other than computer scientists. For the first time, marketing teams can join in on the action.

This creates demand for web-analytics tools that help companies understand and increase traffic. Barriers to entry are low, but competition slowly begins to rise. Web-Counter becomes the industry leader in this space, with a specialty in counting site visitors (also known as hit counting). Trailing behind are Accrue and Omniture.

The aesthetics of the internet change. Better web design and visuals replace static pages of basic text and links.

2005: Google Analytics makes its first appearance.

Over the course of the decade after hit counting is invented, the internet continues to evolve quickly. JavaScript tags replace hit counting as the best means of determining website performance.

The number of websites grows, resulting in more web analytics companies entering the market. Among these is Urchin, which is acquired by Google in 2005 and eventually becomes what we know as Google Analytics.

2006: In-depth optimization tools are launched.

Pre-2006, any web-analytics tool provided little more than an overview of site performance. This all changes when Clicktale enters the scene, giving marketers a way to track in-page user behaviors to improve page performance, conversions, and the overall user experience.

2014: Amplitude launches.

Cofounders Spenser Skates, Curtis Liu, and Jeffrey Wang launch Amplitude, a product intelligence and behavioral analytics platform. Two years later, the company is named a Forbes 2016 Cloud 100 Rising Star. Four years later, it is projected to be on track for a billion-dollar valuation.

Now, let’s dive a little deeper into these phases of change.

Analytics in the Earliest Days of the Internet

The early, highest-tech web-analytics solutions were programs that helped you interpret every entry in the server log. Good log-analysis software could help you separate the requests made by bots and crawlers from those made by human visitors, which matters if you’re worried about being crawled and if you want accurate data on the people who view your site. The log could also tell you who drove the most traffic to your site, or if somebody new was linking to you.
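Separating bots from people usually comes down to heuristics. The sketch below assumes the simplest one, checking the user-agent string for words like “bot” or “spider”; the marker list is a hypothetical example, and real crawlers can disguise themselves, so treat the result as an estimate.

```python
# Substrings that often appear in crawler user agents. This list is an
# illustrative assumption, not an exhaustive or authoritative bot registry.
BOT_MARKERS = ("bot", "crawler", "spider", "slurp")

def is_probably_bot(user_agent):
    """Heuristically flag a request as automated based on its user agent."""
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)

def split_traffic(user_agents):
    """Split a list of user-agent strings into (humans, bots)."""
    humans, bots = [], []
    for ua in user_agents:
        (bots if is_probably_bot(ua) else humans).append(ua)
    return humans, bots

agents = [
    "Mozilla/4.08 [en] (Win98; I ;Nav)",
    "Googlebot/2.1 (+http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; Yahoo! Slurp)",
]
humans, bots = split_traffic(agents)
print(len(humans), len(bots))  # 1 2
```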

Though the server log has potential for sophisticated analysis, in the early 1990s, few sites took full advantage of it. Web-traffic analysis typically fell under the responsibility of the IT department. The server log was especially handy when managing performance issues and keeping websites running smoothly. “Companies typically didn’t have defined site goals and success metrics,” a writer at marketing blog ClickZ observes. “Even if they did, very rarely was the tool set up to track those key metrics.”

As the web developed, the log became harder and harder to interpret. In the earliest days, most URLs hosted only a single HTML file of plain hypertext, so one page view generated one HTTP request. Once pages started to incorporate other files (images, audio files, video, oh my!) browsers had to make multiple HTTP requests per page visit.

Also, as browsers became more sophisticated, they began caching: keeping a local copy of a file so they wouldn’t have to request it again on repeat visits. But because no new HTTP request is issued, these repeated views don’t show up in the server log.
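The gap between requests and page views is easy to see in code. This sketch assumes that any request for an image, stylesheet, script, or media file is an embedded asset rather than a page; the extension list and sample paths are illustrative, not a standard.

```python
from pathlib import PurePosixPath

# File types treated as embedded assets rather than pages (an assumption
# for illustration; real sites need their own rules).
ASSET_EXTENSIONS = {".gif", ".jpg", ".png", ".css", ".js", ".wav", ".mp3", ".mov"}

def count_hits_and_page_views(paths):
    """Return (hits, page_views) for a list of requested URL paths."""
    hits = len(paths)  # every HTTP request counts as a "hit"
    page_views = sum(
        1
        for p in paths
        # Drop any query string, then check the file extension.
        if PurePosixPath(p.split("?", 1)[0]).suffix.lower() not in ASSET_EXTENSIONS
    )
    return hits, page_views

requests = ["/index.html", "/logo.gif", "/style.css", "/photo.jpg", "/about.html"]
print(count_hits_and_page_views(requests))  # (5, 2)
```

Five hits shrink to two page views here. Neither number reflects cached repeat visits, because those never produce a request for the log to record.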

With the tools available in 1997 (WebTrends, Analog, Omniture and Accrue) it could take up to 24 hours for a big company to process its website data. Server-log-analytics companies hustled to keep up with the rate at which the web was growing, with varied success. In the late 1990s, a solution arose from an unlikely place: the internet of amateurs.

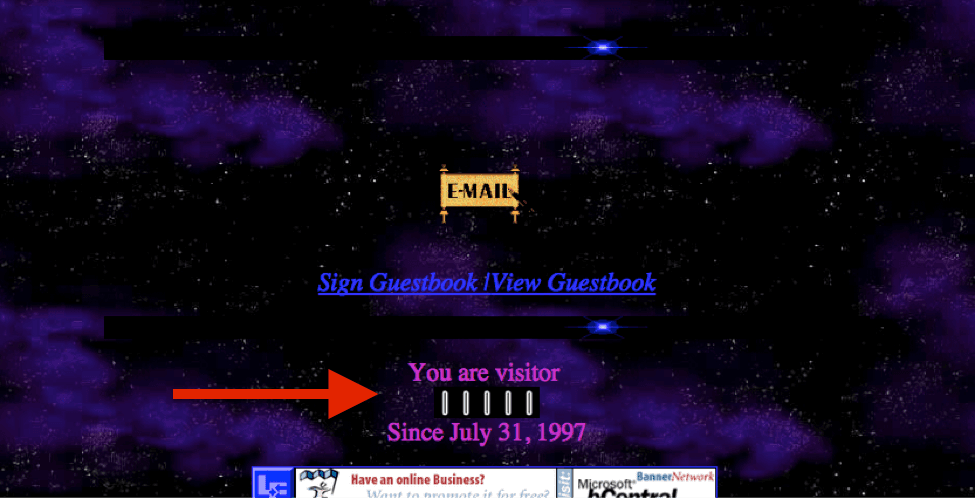

The First Appearance of Web Analytics: Hit Counters

The debut of web analytics was not exactly glamorous. The first web-analytics tool emerged in the form of “hit counters.” Hit counters — also known as web counters — were chunks of code that used a simple PHP script to display an image of a number, which incremented every time the script ran. It wasn’t a sophisticated metric and was often kind of obtuse, but hit counters were damn easy to use, even if you knew next to nothing about the web. A user could simply select a style they wanted from the hit-counter website and then copy-paste the generated code.
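The core of a hit counter is just a stored number that goes up by one each time the script runs. This is a Python sketch of that idea rather than the PHP the counters actually used; the file name and six-digit width are arbitrary assumptions, and rendering the digits as images is left out.

```python
import tempfile
from pathlib import Path

def increment_hit_counter(counter_file, width=6):
    """Add one to the count stored in counter_file and return it zero-padded.

    A 1990s counter would then map each digit to a small image; this sketch
    stops at the digit string. There is no file locking, so two simultaneous
    visits can overwrite each other's update.
    """
    path = Path(counter_file)
    count = int(path.read_text()) if path.exists() else 0
    count += 1  # every script run counts, including reloads and bots
    path.write_text(str(count))
    return str(count).zfill(width)

# Simulate two page loads against a fresh counter file.
with tempfile.TemporaryDirectory() as tmp:
    counter = Path(tmp) / "hits.txt"
    first = increment_hit_counter(counter)
    second = increment_hit_counter(counter)

print(first, second)  # 000001 000002
```

Because every run increments the count, reloads and bot visits inflate it just as much as a new visitor would, which is part of why hit counts were such a blunt metric.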

Some hit counters were better than others. Later hit counters came with a hosted service, which let you log in to see slightly more sophisticated website stats than the basic hit count. Others were not as fancy. They did a variable job of filtering out HTTP requests from bots. A lot of script counters were SEO spamming techniques: They would hide links in the copy-paste code to get lots of link referrals.

Despite their shortcomings, hit counters were your best bet for knowing how many people were visiting your website, an insight that was quickly growing in demand.

Before hit counters, you really only had server logs. The problem was that not everybody with a website had access to their server log, and not everybody who did knew what to do with it. More specifically, server-log analysis required website owners to have access to their server-log files and to know how to manage that data and interpret it.

A subset of the variety of hit counter styles still available to you, if you want one for some reason.

By comparison, hit counters had two advantages. First, they introduced a way to automatically send the data to somebody else to analyze. Because the hit-counter images were hosted on a website owned by the people who made the hit counter, the HTTP requests for these images ended up in their server logs. The data was now being gathered, stored, and interpreted by a third party.

Second, a website owner did not need to know what they were doing to read a hit counter. They might misinterpret the data or miss a lot of its nuance, but it was clear what a hit counter was at least supposed to estimate: how many times people have visited this website. Most of the time, this was not useful data, but it made many an amateur feel like they were part of the World Wide Web. And when their site did experience a relative surge in traffic, they had a means to discover it.
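From the hit-counter company’s side, every counter-image request in its own server log is a data point for one customer site. The sketch below assumes each embedded counter URL carried a site identifier in a hypothetical `id` query parameter and simply tallies requests per site.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit

def hits_per_site(request_paths):
    """Tally counter-image requests by the (hypothetical) `id` query parameter."""
    tally = Counter()
    for path in request_paths:
        params = parse_qs(urlsplit(path).query)
        site_id = params.get("id", ["unknown"])[0]
        tally[site_id] += 1
    return tally

# Paths as they might appear in the counter company's own server log.
log_paths = [
    "/counter.gif?id=xfiles-fan",
    "/counter.gif?id=my-journal",
    "/counter.gif?id=xfiles-fan",
    "/counter.gif",
]
tally = hits_per_site(log_paths)
print(tally.most_common())  # [('xfiles-fan', 2), ('my-journal', 1), ('unknown', 1)]
```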

Hit counters were in the right place at the right time

Bear in mind that the mid-to-late ’90s saw the rise of the extreme-amateur web developer: The X-Files fans who filled pages with photos of every moment of visible romantic tension between Scully and Mulder; teenagers with LiveJournal accounts about being teenagers with LiveJournal accounts; retired conspiracy theorists.

These web developers weren’t about to pour a lot of money into their sites, and they often hosted their sites with free providers that didn’t give them access to their server logs. Being amateurs, many of them were OK with this. Their pages were relatively simple, and their traffic relatively low, so they weren’t too worried about monitoring performance. Even if they had access to their server logs, there wasn’t much incentive for them to fork over a couple hundred dollars for log-analysis software or to learn to use the complicated free versions.

As more and more amateur web developers emerged, most were eventually met with one obvious, burning question: How many people are looking at my website? Hit counters filled that gap in knowledge.

The Next Web-Analytics Tools: Powered by JavaScript

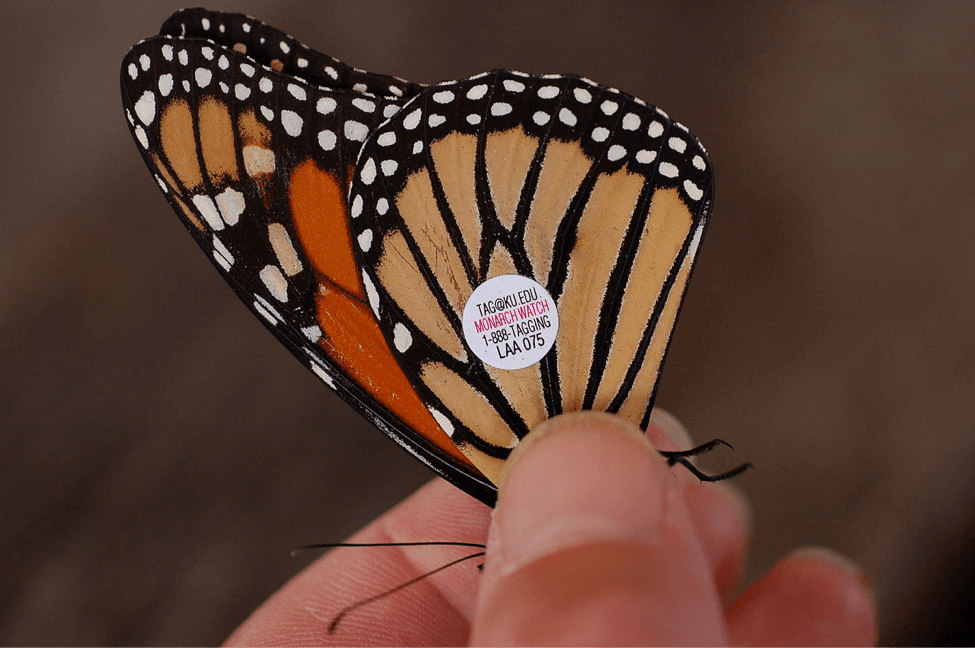

A tagged butterfly, photo by Derek Ramsey.

Hit counters were a primitive example of web-page tagging, a technique used by most analytics software today. In field biology, tagging means outfitting an animal with a chip that can be monitored remotely to track the animal. Web-analytics tagging is similar. The “tag” is a file (the image in a hit-counter script, for example) embedded in the web page’s HTML. When a browser requests the page, it also requests the tag, sending data about the user and the request to whoever is collecting the data for analysis.

Later analytics tags used more sophisticated scripts, usually written in JavaScript, to send information beyond what a plain HTTP request carries. Tag-based analytics can track more flexible information, like how much somebody is paying for an item or what size their screen is. They can also monitor interaction with specific elements on the page.
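A tag script’s extra data typically rides along as query parameters on a small request to the analytics company’s server. This sketch shows both ends in Python for brevity: building such a beacon URL and decoding it on the collector. The endpoint `collector.example.com/collect` and the field names are hypothetical; in a browser, the tag itself would be JavaScript.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

COLLECTOR = "https://collector.example.com/collect"  # hypothetical endpoint

def build_beacon_url(page, **fields):
    """What a tag might request: the page plus arbitrary extra fields."""
    params = {"page": page, **fields}
    return COLLECTOR + "?" + urlencode(params)

def decode_beacon(url):
    """Collector side: turn the beacon's query string back into a dict."""
    return {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}

url = build_beacon_url("/checkout", price="19.99", screen="1024x768", event="purchase")
decoded = decode_beacon(url)
print(decoded)
# {'page': '/checkout', 'price': '19.99', 'screen': '1024x768', 'event': 'purchase'}
```

Unlike a server-log entry, the payload here is whatever the tag chooses to send, which is what made tag-based tools so much more flexible.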

In the late 1990s, tagging-based analytics companies started to proliferate. Some sold software similar to the old server-log analytics packages: They used tags to track user behavior but sent the data to a database the client hosted and managed. More common, though, were hosted, tag-based solutions, which stored your data in a database owned and managed by the analytics company. This approach was much cheaper and easier to implement for the rapidly growing number of smaller tech companies.

These new hosted-analytics solutions included Omniture, WebSideStory, and Sawmill. Their solutions also tended to come with simpler, less-technical interfaces, as analytics itself was undergoing a metamorphosis.

“Marketers [were] starting [to think] of ways they could use this data if they had better access to Web analytics,” ClickZ chronicles. But starting out, they usually didn’t know what questions to ask or how to answer them. Their companies’ understaffed IT departments often ran out of the resources and patience it took to help. These new tagging-based analytics tools removed the reliance on IT, log-file processing, and the servers. From ClickZ:

“Suddenly interested marketers could go to one of those companies, have some basic tags placed on the site, and get more accurate data. It looked like all the issues were solved, and [marketing departments] could finally get the information they needed — still rarely tied to overall business goals, but a step in the right direction.”

Google Analytics Buys Urchin, and a New Era Is Born

The founding team of Urchin, an early web-analytics company.

Server-log-analytics solutions stuck around, however, because many big companies had already heavily invested in them, and they continued — and continue — to offer some advantages tagging-based solutions don’t. For one, it’s easier to track a user’s various requests through the site when you have their IP address, as is included in every server-log entry. Server logs also track the behavior of crawlers, which don’t execute JavaScript — knowledge of crawler behavior can be very useful. Page tagging in JavaScript also fails to track users who don’t have JavaScript enabled in their browser; the server log works just fine for every browser.
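Following a user through the site from server logs typically means grouping requests by IP address into sessions. This sketch assumes a 30-minute inactivity timeout, a common convention rather than a rule; because many users can share one IP address behind a proxy, the grouping is approximate.

```python
from datetime import datetime, timedelta

SESSION_TIMEOUT = timedelta(minutes=30)  # assumed convention, not a standard

def sessionize(requests):
    """Group (ip, timestamp, path) tuples into per-IP sessions.

    A new session starts when an IP has been idle longer than SESSION_TIMEOUT.
    Returns a list of (ip, [paths]) in order of session start.
    """
    sessions = []
    last_seen = {}  # ip -> timestamp of that IP's previous request
    current = {}    # ip -> path list of that IP's open session
    for ip, ts, path in sorted(requests, key=lambda r: r[1]):
        if ip not in last_seen or ts - last_seen[ip] > SESSION_TIMEOUT:
            current[ip] = []
            sessions.append((ip, current[ip]))
        current[ip].append(path)
        last_seen[ip] = ts
    return sessions

t0 = datetime(2005, 3, 1, 9, 0)
log = [
    ("198.51.100.4", t0, "/"),
    ("198.51.100.4", t0 + timedelta(minutes=5), "/pricing"),
    ("203.0.113.9", t0 + timedelta(minutes=6), "/"),
    ("198.51.100.4", t0 + timedelta(hours=2), "/"),
]
for ip, paths in sessionize(log):
    print(ip, paths)
```

The first IP ends up with two sessions, because its third request arrives two hours after its second.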

One of the best and biggest of the companies providing server-log solutions was Urchin. Back when some companies took 24 hours to parse their server logs, the first version of Urchin’s software could do it in 15 minutes. This gave them a huge advantage. Some of their first customers were major web hosts, which meant that Urchin soon became the standard analytics solution for anybody who used those hosts. Its customers included NBC, NASA, AT&T, and about one-fifth of the Fortune 500. In addition to its client-hosted server-log software, also called Urchin, the company offered a web-hosted, tag-based analytics program, Urchin On Demand.

Seeing the trend toward less-technical analytics users, the Urchin team strove to develop software that was powerful but still usable by people who were neither programmers nor statisticians. One of the founders said in an interview,

“[Urchin’s] value was to democratize the web feeling, trying to make something complex really easy to use.”

In 2004, Google representatives approached the Urchin team at a trade show. Months later, in 2005, the acquisition was announced for a rumored $30 million. Most of the founders became executives at Google, and Urchin On Demand became Google Analytics.

Google proceeded to build Google Analytics into the most widely used analytics solution in the world, while letting Urchin, the client-hosted server-log solution, languish. Many an Urchin customer cried foul. Others observed that there was a clear trend away from server-log analytics solutions in general.

“Log file analysis tools have been disappearing from the market in the last few years, most probably because the JavaScript tools are easier to implement,” one blogger wrote. “This became more prominent as the web analytics community has branded itself as a marketing profession, raising the necessity to have simple tools that enable the end-user (marketer) to make changes, rather than IT professionals.”

That was a blow to any company invested in the client-hosted Urchin solution. But Google’s focus on Google Analytics ended up working out for them — they’re far and away the market leader in the Analytics and Tracking industry. According to BuiltWith.com, almost 28 million active websites use Google Analytics. New Relic is its nearest competitor, trailing far behind with under 1 million active websites.

How Web Analytics Changed Over the Years

Even in its early days, Google Analytics placed a strong emphasis on everybody’s favorite vanity metric: page views. This powerful technology was being employed to answer the question “How many people are looking at my website?” In some sense, it was a glorified hit counter.

In-depth analytics tools were needed, and the first wave of market players fell short in this critical department. As more web-analytics companies emerged, demand for deeper insights into user behavior grew, resulting in even more sophisticated players in the web-analytics space.

Industry competition prompted innovation. It was not long before page views were replaced by more informative web-analytics performance metrics such as traffic sources, conversion rate, and customer lifetime value. And, best of all, these powerful insights became easily accessible to everyone on your team as the tools became easier to use. This helped teams harness the ability to collect data on every step in the user experience to understand exactly how people engage with your website and leverage this information to drive satisfaction, conversion, and retention.
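Conversion rate is simply conversions divided by visits, and breaking it out by traffic source shows which channels send visitors who act. The visit records and source names in this sketch are invented for illustration.

```python
from collections import defaultdict

def conversion_rate_by_source(visits):
    """visits: iterable of (traffic_source, converted) pairs.

    Returns {source: conversions / visits} for each source seen.
    """
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for source, converted in visits:
        totals[source] += 1
        conversions[source] += int(converted)
    return {source: conversions[source] / totals[source] for source in totals}

visits = [
    ("search", True), ("search", False), ("search", False), ("search", False),
    ("email", True), ("email", False),
    ("direct", False), ("direct", False),
]
rates = conversion_rate_by_source(visits)
print(rates)  # {'search': 0.25, 'email': 0.5, 'direct': 0.0}
```

Raw page views would rank search first here; the conversion rate shows that email visitors act twice as often.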

But the future of analytics was yet to come. Catalyzed by the rise of mobile analytics, it would go even further. The next step in the evolution of analytics was on its way: event tracking. Unlike the early days of web analytics, we would soon see a shift from page-view-centric website analytics to event-centric product analytics.

Thanks for reading! Don’t miss our next post in the Evolution of Analytics series: stories from the Zynga trenches.

Alicia Shiu

Former Growth Product Manager, Amplitude

Alicia is a former Growth Product Manager at Amplitude, where she worked on projects and experiments spanning top of funnel, website optimization, and the new user experience. Prior to Amplitude, she worked on biomedical & neuroscience research (running very different experiments) at Stanford.
